The Big Data and Extreme-scale Computing (BDEC) workshop that took place in February in Fukuoka, Japan, brought together luminaries from industry, academia and government to discuss today’s big data challenges in the context of extreme-scale computing. Attendees at this invitation-only event included some of the world’s foremost experts on algorithms, computer system architecture, operating systems, workflow middleware, compilers, libraries, languages and applications.

The BDEC workshop series emphasizes the importance of systematically addressing “the ways in which the major issues associated with big data intersect with, impinge upon, and potentially change the national (and international) plans that are now being laid for achieving exascale computing.”

Among the diverse research to come out of this event was a white paper from Ian Foster, grid computing pioneer and director of the Computation Institute in Chicago, Illinois. In “Extreme-scale computing for new instrument science,” Foster asserts that data volumes and velocities in the experimental and observational science communities are advancing so fast, in some cases faster than Moore’s law, that these communities will soon require exascale-class computational environments to be productive. But what if some of the analysis work could be integrated into the instruments themselves?

The crux of Foster’s argument is that “instrument science…has the potential to greatly expand the impact of exascale technologies.”

Foster lays out an exciting future in which scientific instruments – light sources, tokamaks, telescopes, accelerators, genome sequencers, and the like – become part of a simulation model in which instrument output is used to guide experimentation. Instead of the typical linear science process, in which experiments involve sequential steps that can take months, exascale technologies point the way to a far more rapid process in which prior research feeds a knowledge base that is used to guide current models. In this scenario, “interesting” features can be flagged as data are being generated, and then that output is assimilated as the experiment is running, further evolving the simulation model.

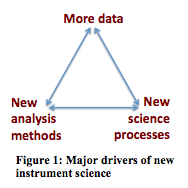
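To make the feedback loop concrete, here is a deliberately tiny Python sketch of the idea: readings are compared against a model prediction as they arrive, large deviations are flagged as “interesting,” and every reading is then folded back into the model. This is an illustration only, not anything taken from Foster’s paper; the `SimulationModel` class, the `run_experiment` function, the `gain` weight and the fixed `threshold` are all hypothetical names and simplifications chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class SimulationModel:
    """Toy stand-in for a simulation: a running estimate of the expected reading."""
    estimate: float
    gain: float = 0.2  # assumed assimilation weight (hypothetical), 0 < gain <= 1

    def predict(self) -> float:
        # The model's current expectation for the next instrument reading.
        return self.estimate

    def assimilate(self, observation: float) -> None:
        # Nudge the estimate toward the new observation.
        self.estimate += self.gain * (observation - self.estimate)


def run_experiment(readings, model, threshold):
    """Process readings one at a time; return indices flagged as interesting."""
    flagged = []
    for i, obs in enumerate(readings):
        # Flag the reading if it deviates strongly from the model's prediction.
        if abs(obs - model.predict()) > threshold:
            flagged.append(i)
        # Assimilate every reading while the "experiment" is still running.
        model.assimilate(obs)
    return flagged


model = SimulationModel(estimate=1.0)
flagged = run_experiment([1.0, 1.1, 0.9, 5.0, 1.0], model, threshold=1.0)
print(flagged)  # indices of readings that deviated from the prediction
```

In a real instrument pipeline the model would be a full simulation and the flagging rule would draw on a knowledge base rather than a fixed threshold, but the shape of the loop (predict, compare, flag, assimilate) is the same.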

Realizing an intelligent, dynamic model such as this requires not only exascale-oriented hardware and software advances, says Foster, but also progress in three specific areas that relate more directly to scientific knowledge. These are:

1. Knowledge management and fusion, to permit the rapid integration of large quantities of diverse data and the transformation of that data into actionable knowledge.

2. Rapid knowledge-based response, to enable the use of large knowledge bases to guide fully or partially automated decisions within data-driven research activities.

3. Human-centered science processes, to enable rapid specification, execution, and guidance of science processes that will often span many resources and engage many participants.

While much work has been done in the field of knowledge-based science, the coming era of instrument science is faced with unique challenges relating to “data volume, variety, and complexity, and the need to balance the complementary capabilities of human experts, computational methods, and sensor technologies,” says Foster.

“The integration of simulation and instrument represents an important example of emerging new science processes within instrument science,” explains Foster. “In general terms, the opportunity is this: computational simulations capture the best available, but imperfect, theoretical understanding of reality; data from instruments provide the best available, but imperfect, observations of reality. Confronting one with the other can help advance knowledge in a variety of ways.”

Foster points to cosmology and materials science as leading application drivers for this emerging paradigm. Whether the need is to compare virtual skies with the “real sky” via digital sky surveys or to determine defect structures in disordered material, large-scale computation is required to process instrument data and prepare the simulated realities with which observations are compared.