Hyperion Research’s Steve Conway on the State of AI and the HPC Connection

August 10, 2021

Looking for an AI refresher to beat the summer heat? In this Q&A, Hyperion Research Senior Adviser Steve Conway surveys the AI and analytics marketplace in a time of intense activity and financial backing. Just last week, the NSF announced it had expanded the National AI Research Institutes program...

Japan to Debut Integrated Fujitsu HPC/AI Supercomputer This Spring

February 25, 2021

The integrated Fujitsu HPC/AI Supercomputer, Wisteria, is coming to Japan this spring. The University of Tokyo is preparing to deploy a heterogeneous computing…

HPC + AI Wall Street Spotlights Cryptocurrencies, Infrastructure, More

September 8, 2020

High performance computing has long been a driver of competitive advantage in banking and finance. Some of the first supercomputers deployed by industry were us…

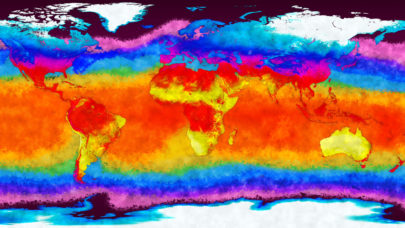

NOAA Updates Its Massive, Supercomputer-Generated Climate Dataset

January 15, 2020

As Australia burns, understanding and mitigating the climate crisis is more urgent than ever. Now, by leveraging the computing resources at the National Energy Research Scientific Computing Center (NERSC), the U.S. National Oceanic and Atmospheric Administration (NOAA) has updated its 20th Century Reanalysis Project (20CR) dataset...

Convergence of HPC, AI and Cloud Computing Charted at PEARC19 Keynote

August 1, 2019

A trio of keynote presentations from Intel, Google and Microsoft at the PEARC19 conference in Chicago on July 31 charted out the likely future of academic and h…

At ASF 2019: The Virtuous Circle of Big Data, AI and HPC

April 18, 2019

We've entered a new phase in IT -- in the world, really -- where the combination of big data, artificial intelligence, and high performance computing is pushing…

A Big Data Journey While Seeking to Catalog our Universe

January 16, 2019

It turns out, astronomers have lots of photos of the sky but seek knowledge about what the photos mean. Sound familiar? Big data problems are often characterize…

AWS Debuts Lustre as a Service, Accelerates Data Transfer

November 28, 2018

From the Amazon re:Invent main stage in Las Vegas today, Amazon Web Services CEO Andy Jassy introduced Amazon FSx for Lustre, citing a growing body of applicati…

Whitepaper

Transforming Industrial and Automotive Manufacturing

In this era, expansion in digital infrastructure capacity is inevitable. At the same time, awareness of climate change is rising, making sustainability a mandatory part of how organizations operate. As computing workloads such as AI and HPC continue to surge, so does energy consumption, raising environmental concerns. IT departments have a crucial role in meeting this challenge. They can drive sustainable practices by influencing the adoption of newer technologies and processes that help mitigate the effects of climate change.

While buying more sustainable IT solutions is an option, partnering with IT solutions providers, such as Lenovo and Intel, that are committed to sustainability and to helping customers execute sustainability strategies is likely to be more impactful.

Learn how Lenovo and Intel, through their partnership, are strongly positioned to address this need with their innovations driving energy efficiency and environmental stewardship.

Sponsored by Lenovo

Whitepaper

How Direct Liquid Cooling Improves Data Center Energy Efficiency

Data centers are experiencing increasing power consumption, space constraints and cooling demands due to the unprecedented computing power required by today’s chips and servers. HVAC cooling systems consume approximately 40% of a data center’s electricity. These systems traditionally use air conditioning, air handling and fans to cool the data center facility and IT equipment, resulting in high energy consumption and high carbon emissions. Data centers are moving to direct liquid cooled (DLC) systems to improve cooling efficiency, thus lowering their power usage effectiveness (PUE), operating expenses (OPEX) and carbon footprint.

This paper describes how CoolIT Systems (CoolIT) meets the need for improved energy efficiency in data centers and includes case studies that show how CoolIT’s DLC solutions improve energy efficiency, increase rack density, lower OPEX, and enable sustainability programs. CoolIT is the global market and innovation leader in scalable DLC solutions for the world’s most demanding computing environments. CoolIT’s end-to-end solutions meet the rising demand in cooling and the rising demand for energy efficiency.

Sponsored by CoolIT

© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication

HPCwire is a registered trademark of Tabor Communications, Inc. Use of this site is governed by our Terms of Use and Privacy Policy.

Reproduction in whole or in part in any form or medium without express written permission of Tabor Communications, Inc. is prohibited.