Material Simulation with Quantum Accuracy Wins 2023 ACM Gordon Bell Prize

November 20, 2023

Accurately calculating interactions among electrons has been a significant obstacle to reliable material exploration and design through computer modeling. Recen…

Exascale Frontier Supercomputer Hosts Trio of New Cosmological Codes

April 27, 2023

Oak Ridge National Laboratory's exascale Frontier supercomputer – the first public exascale system in the world – debuted almost a year ago. Now, more and m…

2023 Winter Classic Oak Ridge Blast Challenge Revealed

April 7, 2023

The close of the 2023 Winter Classic Invitational Student Cluster Competition is coming up fast, and I have to get some material out to you, our vast viewing au…

At ORNL, Jeff Smith Becomes Interim Director, as Search for Permanent Lab Chief Continues

January 20, 2023

UT-Battelle, which manages Oak Ridge National Laboratory (ORNL) for the U.S. Department of Energy, has appointed Jeff Smith as interim director for the lab as t…

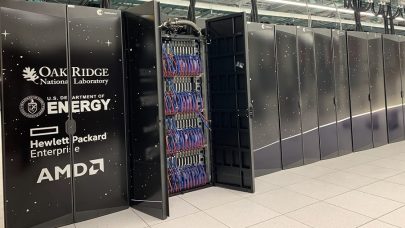

From Exasperation to Exascale: HPE’s Nic Dubé on Frontier’s Untold Story

December 2, 2022

The Frontier supercomputer – still fresh off its chart-topping 1.1 Linpack exaflops run and maintaining its number-one spot on the Top500 list – was still v…

Frontier Keeps Top Supercomputer Spot, Nvidia’s H100 Debuts on List

November 14, 2022

The 60th edition of the Top500 list, revealed today at SC22 in Dallas, Texas, showcases many of the same systems as the previous installment, with Frontier stil…

Survey Results: PsiQuantum, ORNL, and D-Wave Tackle Benchmarking, Networking, and More

September 19, 2022

There are many issues in quantum computing today – among the more pressing are benchmarking, networking and the development of hybrid classical-quantum approaches.

DOE and ORNL Dedicate Frontier Supercomputer

August 17, 2022

“It is my privilege to welcome you to the dedication of Frontier, the supercomputer that broke the exascale barrier.” That was the introduction by Oak Ridge National Laboratory Director Thomas Zacharia at a small public event on August 17 to officially dedicate the supercomputer, which in May became the first system to achieve over 1.0 exaflops of 64-bit performance on the…

Whitepaper

Transforming Industrial and Automotive Manufacturing

Today, expanding digital infrastructure capacity is inevitable. At the same time, awareness of climate change is rising, making sustainability a mandatory part of how organizations operate. As computing workloads such as AI and HPC continue to surge, so does energy consumption, raising environmental concerns. IT departments have a crucial role in meeting this challenge: they can drive sustainable practices by steering the adoption of newer technologies and processes that help mitigate the effects of climate change.

While buying more sustainable IT solutions is an option, partnering with IT solution providers such as Lenovo and Intel, which are committed to sustainability and to helping customers execute sustainability strategies, is likely to be more impactful.

Learn how Lenovo and Intel, through their partnership, are strongly positioned to address this need with their innovations driving energy efficiency and environmental stewardship.

Sponsored by Lenovo

Whitepaper

How Direct Liquid Cooling Improves Data Center Energy Efficiency

Data centers are experiencing increasing power consumption, space constraints and cooling demands due to the unprecedented computing power required by today’s chips and servers. HVAC cooling systems consume approximately 40% of a data center’s electricity. These systems traditionally use air conditioning, air handling and fans to cool the data center facility and IT equipment, resulting in high energy consumption and high carbon emissions. Data centers are moving to direct liquid cooled (DLC) systems to improve cooling efficiency, thereby lowering their power usage effectiveness (PUE), operating expenses (OPEX) and carbon footprint.

This paper describes how CoolIT Systems (CoolIT) meets the need for improved energy efficiency in data centers and includes case studies that show how CoolIT’s DLC solutions improve energy efficiency, increase rack density, lower OPEX, and enable sustainability programs. CoolIT is the global market and innovation leader in scalable DLC solutions for the world’s most demanding computing environments. CoolIT’s end-to-end solutions meet the rising demand for both cooling capacity and energy efficiency.

Sponsored by CoolIT

© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication

HPCwire is a registered trademark of Tabor Communications, Inc. Use of this site is governed by our Terms of Use and Privacy Policy.

Reproduction in whole or in part in any form or medium without express written permission of Tabor Communications, Inc. is prohibited.