Apple Buys DarwinAI, Deepening its AI Push, According to Report

Apple has purchased Canadian AI startup DarwinAI, according to a Bloomberg report today. Apparently the deal was done early this year but still hasn’t been publicly announced according to the re …

Intel’s Strategy to Free Server Capacity by Pushing AI Inference to PCs

AI is here to stay and is becoming a larger part of the workload processed on servers and PCs. That's why Nvidia is seeing success as a chipmaker, and there is excitement around large language mo …

HPCwire’s 2024 People to Watch

Find out which 12 HPC luminaries are being recognized this year for driving innovation within their particular fields.

Qubit Roundup – Quantum Zoo Grows, Rigetti’s QPU Play, Google’s New Algorithm, QuEra’s EC Advance, and More

December 11, 2023

While the IBM Quantum Summit and QC Ware’s Q2B Silicon Valley conference dominated last week’s news flow, there was no shortage of other quantum news em Read more…

The IBM-Meta AI Alliance Promotes Safe and Open AI Progress

December 5, 2023

IBM and Meta have co-launched a massive industry-academic-government alliance to shepherd AI development. The new group has united under the AI Alliance banner Read more…

Gaudi2, 4th Gen Xeon Show Strength in MLPerf Training 3.1, but still Trail Nvidia

November 10, 2023

Price, sufficient performance, and availability have become Intel’s mantra in the AI chip supply wars. Intel’s Gaudi2 accelerator and 4th gen Xeon CPU both p Read more…

Nvidia Showcases Domain-specific LLM for Chip Design at ICCAD

October 31, 2023

This week Nvidia released a paper demonstrating how generative AI can be used in semiconductor design. Nvidia chief scientist Bill Dally announced the new paper Read more…

How AMD May Get Across the CUDA Moat

October 5, 2023

When discussing GenAI, the term "GPU" almost always enters the conversation, and the topic often moves toward performance and access. Interestingly, the word "GPU" is assumed to mean "Nvidia" products. (As an aside, the popular Nvidia hardware used in GenAI is not technically... Read more…

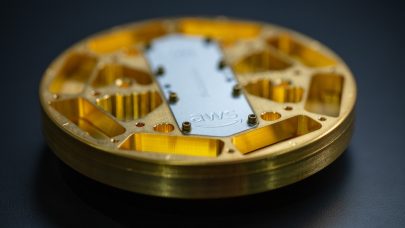

AWS Survey Showcases Quantum Algorithms and Applications

October 5, 2023

Somewhat quietly, while quantum hardware developers have been steadily improving today's early quantum computers (scale and error correction), developers of qua Read more…

IonQ Announces 2 New Quantum Systems; Suggests Quantum Advantage is Nearing

September 27, 2023

It’s been a busy week for IonQ, the quantum computing start-up focused on developing trapped-ion-based systems. At the Quantum World Congress today, the compa Read more…

Intel’s Gelsinger Lays Out Vision and Map at Innovation 2023 Conference

September 20, 2023

Intel’s sprawling, optimistic vision for the future was on full display yesterday in CEO Pat Gelsinger’s opening keynote at the Intel Innovation 2023 confer Read more…

Whitepaper

How Direct Liquid Cooling Improves Data Center Energy Efficiency

Data centers are experiencing increasing power consumption, space constraints and cooling demands due to the unprecedented computing power required by today’s chips and servers. HVAC cooling systems consume approximately 40% of a data center’s electricity. These systems traditionally use air conditioning, air handling and fans to cool the data center facility and IT equipment, resulting in high energy consumption and high carbon emissions. Data centers are moving to direct liquid-cooled (DLC) systems to improve cooling efficiency, thus lowering their power usage effectiveness (PUE), operating expenses (OPEX) and carbon footprint.

This paper describes how CoolIT Systems (CoolIT) meets the need for improved energy efficiency in data centers and includes case studies that show how CoolIT’s DLC solutions improve energy efficiency, increase rack density, lower OPEX, and enable sustainability programs. CoolIT is the global market and innovation leader in scalable DLC solutions for the world’s most demanding computing environments. CoolIT’s end-to-end solutions meet the rising demand for both cooling and energy efficiency.

Sponsored by CoolIT

Whitepaper

Transforming Industrial and Automotive Manufacturing

Divergent Technologies developed a digital production system that can revolutionize automotive and industrial-scale manufacturing. Divergent uses new manufacturing solutions and its Divergent Adaptive Production System (DAPS™) software to make vehicle manufacturing more efficient and less costly, and to reduce manufacturing waste, by replacing existing design and production processes.

Divergent initially used on-premises workstations to run HPC simulations, but the workstations could not deliver fast enough simulation times. Divergent also needed to free staff from managing the HPC system, CAE integration and IT update tasks.

Sponsored by TotalCAE

© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication

HPCwire is a registered trademark of Tabor Communications, Inc. Use of this site is governed by our Terms of Use and Privacy Policy.

Reproduction in whole or in part in any form or medium without express written permission of Tabor Communications, Inc. is prohibited.