One of the most exciting developments in parallel programming over the past few years has been the availability and advancement of programmable graphics cards. A high end graphics card costs less than a high end CPU and provides tantalizing peak performance approaching, or exceeding, one teraflop. Since microprocessor peak performance tops out at about 25 gigaflops/core (single precision), this potential, at such low cost, is worth exploring. Harnessing this performance, however, is problematic.

It’s important to note that the GPUs powering the graphics cards are designed to do specific jobs very well. They are not designed as general purpose processors, and in fact will do a very poor job on many programs, even highly parallel applications. The key is to determine whether your application can fit into a programming model that maps well onto the GPU. I’m going to discuss the GPU architecture, but I’m going to start with an analogy, and probably stretch the analogy to the breaking point; let’s discuss airline travel.

Suppose your job is to transport several dozen large tour groups between London and Seattle, each group with 30-60 members. Your most likely choice is to use jet aircraft for a flight of about 5,000 miles or 7,700 km. Going for the most parallelism, you could use a new Airbus A380 to move 600 people in about 9 hours. One problem you have with these jumbo jets is they don’t fit at the main terminal, so you have to take an airport train out to the remote terminal where the plane is parked; the train can only carry so many people at a time, but let’s be optimistic and say it will take 90 minutes to move everyone out to the remote gate and load them, and another 90 minutes at the other end for unloading. This gives us a 24 hour round trip. If you regularly fill the plane, this could be a good investment (though, at $300M, a bit more than a GPU). However, if you only have a half-load, it doesn’t get them there twice as fast or at half the cost, though it does reduce the load/unload time. Measuring performance as passenger-miles, the performance comes from parallelism (many passengers), not from latency reduction.

Alternatively, you could opt for a few smaller planes, say two to four Boeing 787s. Each can carry about 200-250 passengers at the same speed, so you can move the same number of people at the same rate. You also have the advantage of parking at the main terminal, so you can reduce the passenger load/unload time to about half an hour, so your total round trip time is only 20 hours. Of course, you have to arrange for more crew, more landing slots at the airports, and so forth. Your total capital investment is about the same, but it gives you some flexibility. If you only have 200 people to move, you can leave all but one of the planes behind, saving on fuel and crew costs.

Or, perhaps you could invest in the (future) hypersonic transport, which some believe may be able to travel at speeds of Mach 6, 7-8 times faster than the current subsonic airliners. Assuming it takes time to get up to speed and to slow down for landing, the total flight time might drop from 9 hours to 2.5 hours, or 7 hours round trip. If the capacity of your hypothetical hypersonic transport is 200 passengers, you can transport 600 people in each direction in just 21 hours. Even better, if you only have to transport 400 people, you can get the same work done in two-thirds the time. Of course, you are buying a higher cost transport, probably paying more for fuel, and so on.

Very fast CPUs are more like the hypersonic transport; they are designed to provide extremely high performance for small tasks, but still offer adequate performance for large tasks. Multicore processors are more like the middle option, several smaller, lower-capacity devices, each quite capable, and you can save power by shutting down one or more of them. The GPU is more like the super-jumbo jet; it only gets high performance (passenger-miles) when you have lots of passengers. It doesn’t do so well getting just one or a few passengers across the ocean.

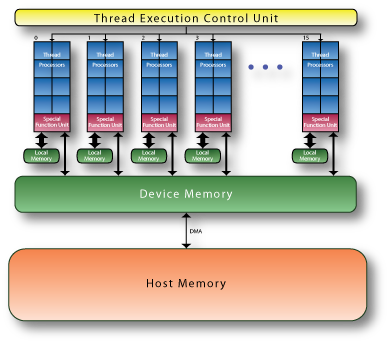

So now to GPU architecture. GPUs were originally hardwired for specific tasks; as transistor budgets and demand for flexibility grew, the hardware became more programmable. They still contain special hardware and functional units specific to graphics tasks, but I’ll ignore those and view today’s GPUs as compute accelerators. A typical design, shown in the figure above, is abstracted from information put out by NVIDIA. I’ll use both NVIDIA’s terms and more standard computer architecture descriptions of the various parts. The key to the performance is all those thread processors; in the figure, there are eight thread processors in each of 16 multiprocessors, for 128 TPs total. NVIDIA delivers GPUs with up to 30 multiprocessors and 240 TPs. In each clock, each TP can produce a result, giving this design a very high peak performance rating.

Each multiprocessor executes in SIMD mode, meaning each thread processor in that multiprocessor executes the same instruction simultaneously. If one thread processor is executing a floating point add, they all are; if one is doing a fetch, they all are. Moreover, each instruction is quad-clocked, meaning the SIMD width is 32, even though there are only eight thread processors. Unlike classical SIMD machines, there isn’t a distinction between the scalar and parallel operations, or mono and poly operations, to borrow terms from C* and Dataparallel C, and Cn for the Clearspeed card. Instead, the model is that of many scalar threads that just happen to be executing in SIMD mode, something NVIDIA calls SIMT execution. Careful orchestration of the 32 threads that execute in SIMD mode is necessary for best performance.

Stretching our analogy, think of each thread processor like a seat in our superjumbo jet, and each multiprocessor as a tour group. Imagine that when the flight attendant serves a meal, the whole tour group must be served at once (synchronously). The whole tour group must watch the same movie on the seat-back screens at the same time, or they all must read books at the same time, though some may be napping at any time. When one person wants to use the restroom, they all must go at once, even if not everyone needs to. Note that this is a long latency operation, which will correspond to the long latency memory.

The multiprocessors themselves execute asynchronously, and without communication. This last point is quite important. In a multicore or multiprocessor system, the cores or processors can communicate through the memory. If one thread stores a value in variable A then sets a FLAG, the hardware will guarantee that another thread on the same or another core or processor will not see the updated FLAG without seeing the updated value for A. The hardware supports a memory model that preserves the store order. No such memory model is supported on the GPU; a program could store a set of values on one multiprocessor and read the same locations on another, but there is no guarantee that the value fetched will be consistent (in the formal sense) with the values stored. Relaxing the memory model allows the hardware to reorder the stores from a multiprocessor, allowing more throughput.

In this GPU, each multiprocessor has a special function unit, which handles infrequent, expensive operations, like divide, square root, and so on; it operates more slowly than other operations, but since it’s infrequently used, it doesn’t affect performance. There is a high bandwidth, low latency local memory attached to each multiprocessor. The threads executing on that multiprocessor can communicate among themselves using this local memory. In the current NVIDIA generation, the local memory is quite small (16KB).

There is also a large global device memory (over 1GB in some models). This is physical, not virtual; paging is not supported, so all the data has to fit in the memory. The device memory has very high bandwidth, but high latency to the multiprocessors. The device memory is not directly accessible from the host, nor is the host memory directly visible to the GPU. Data from the host that needs to be processed by the GPU must be moved via DMA (across the IO bus) from the host to the device memory, and the results moved back — like loading jumbo jets using thin airport trains.

So, there is a hierarchy of parallelism on this GPU; threads executing within a multiprocessor can share and communicate using the local memory, while threads executing on different multiprocessors cannot communicate or synchronize. This hierarchy is explicit in the programming model as well. Parallelism comes in two flavors; outer, asynchronous parallelism between thread groups or thread blocks, and inner, synchronous parallelism within a thread block. All the threads of a thread block will always be assigned as a group to a single multiprocessor, while different thread blocks can be assigned to different multiprocessors.

Because of the high latency to the device memory, the multiprocessors are highly multithreaded as well. When one set of SIMD threads executes a memory operation, rather than stall, the multiprocessor will switch to execute another set of SIMD threads. The other SIMD threads may be part of the same thread block, or may come from a different thread block assigned to the same multiprocessor. Think of this as the flight attendant serving another tour group while one group gets up to visit the restrooms.

This GPU is designed as a throughput engine; it’s designed to get a lot of work done on a lot of data, all very quickly, all in parallel (lots of tour groups flying in the same direction). To get high performance, your program needs enough parallelism to keep the thread processors busy (lots of customers). Each of your thread blocks needs enough parallelism to fill all the thread processors in a multiprocessor (big tour groups), and at least as many thread blocks as you have multiprocessors (many big tour groups). In addition, you need even more parallelism to keep the multiprocessors busy when they need to thread-switch past a long latency memory operation (many many big tour groups).

However, lots of parallelism isn’t quite sufficient. The GPU is designed for structured data accesses, which graphic processing naturally uses. (Here’s where my analogy falls apart.) The memory is designed for efficient access to contiguous blocks of memory, meaning adjacent threads using adjacent data. Your program will run noticeably slower if it does random memory fetches, or (shades of vector processing) simultaneous accesses to the same memory bank. But if your program is structured to use contiguous data, the memory can run at full bandwidth.

The program model reflects this structure as well. Consider a simple graphics shader; you want to compute the color at each pixel on the screen. Generally, the color at each pixel is more or less independent of the color at every other pixel (natural parallelism), and the problem has a natural index set (the two-dimensional pixel coordinate). So the program model is a scalar program to be executed at each pixel coordinate, replicated and parallelized over the index set. The scalar program is a kernel and the index set is the domain. The indices for each instance of the kernel determine which pixel is being processed and are used to fetch and store data.

When you write a general purpose program for a GPU, you must currently follow this model as well. You must split out the computational kernels and the corresponding domains. You have to identify which data will live in the local memory, and move data between the local and device memory, keeping in mind that the local memory data only has a lifetime of a thread block.

Each kernel will complete its entire domain before the next kernel starts. In our airline analogy, you have to complete the eastbound flight for all the tour groups on board before starting the next flight westbound. The parallelism comes from the domain of a single kernel (data parallelism, all tour groups flying East at the same time), not from running many different kernels at once (task parallelism, lots of tour groups flying all over the country or world at once). This naturally limits, or focuses users on, the applications for which a GPU is appropriate.

Another key difference between GPUs and more general purpose multicore processors is hardware support for parallelism. GPUs don’t try to address all the possible forms of parallelism, but they do solve their target range quite well. We’ve already mentioned the SIMD instruction sets within a multiprocessor and hardware multithreading. There is also a hardware thread control unit that manages the distribution and assignment of thread blocks to multiprocessors. There is additional hardware support for synchronization within a thread block. Your common multicore processor depends on software and the OS to provide these features, so GPU has an advantage.

Programming GPUs Today

So far I haven’t really discussed how these highly parallel GPU architectures are programmed. Past accelerators were often programmed by offloading functions or subroutines; the user or compiler would marshall the arguments, send them to the accelerator, launch the function on the accelerator, and wait for completion, perhaps doing other useful work in the meantime. GPUs don’t fit this model; they aren’t fully functional, separately programmable devices. They really can only execute kernels, comprising a scalar kernel program and an index domain over which to apply it. If your function were that simple (matrix multiplication, SGEMM), it could look like a subroutine engine. Anything more complex, and the host has to manage the GPU execution by selecting and ordering a sequence of kernels to execute, and performing any scalar operations and conditionals along the way. In my next column, I’ll discuss the sometimes superhuman efforts necessary to decompose a program into kernels and manage the data movement between the host and the GPU.