Over the past decade, the prevailing wisdom held that tape storage was a dead or dying breed, soon to be usurped by the sexier, speedier disk. Now that particular hype cycle has run its course, and logic and common sense have returned to the storage conversation, prodded no doubt by the latest buzzwords du jour, big data and cloud computing. At any rate, tape storage is as relevant as ever, and perhaps more relevant than ever. Indeed, this was the prevailing theme circulated by a group of prominent storage analysts at a recent Spectra Logic event in Boulder, Colo.

In 2009 Spectra celebrated its 30-year anniversary, and like its storage capacity, the company itself is expanding. Its fiscal 2011 figures reflected 30 percent year-over-year growth overall, and Spectra’s enterprise tape libraries posted revenue growth of 49 percent in the same period. Growth like that explains why the company recently outgrew its office, lab and manufacturing space for the second time in three years. But it won’t be cramped for long; it just purchased a 55,000-square-foot building in downtown Boulder next door to the 80,712-square-foot building it acquired in 2009.

It’s no surprise that data growth is exploding. In 1999, humankind had amassed 11 exabytes of digital information. By 2010 the figure had jumped to over 1 zettabyte. In light of this exponential growth, it’s imperative that data-intensive markets adopt storage solutions that demonstrate reliability, density, scalability and energy efficiency. They will also need tools to extract value from all this created and stored data.
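For a rough sense of the pace these two figures imply, the compound annual growth rate can be worked out directly. This is a back-of-the-envelope sketch: it assumes decimal units (1 zettabyte = 1,000 exabytes) and treats the 2010 figure as exactly 1 zettabyte, so the result is a lower bound.

```python
# Back-of-the-envelope growth rate implied by the two figures in the text.
# Assumptions (not from the source): decimal units, and exactly 1 ZB in 2010.
EB_PER_ZB = 1_000

start_year, start_eb = 1999, 11
end_year, end_eb = 2010, 1 * EB_PER_ZB

years = end_year - start_year
growth_factor = end_eb / start_eb

# Compound annual growth rate: the constant yearly rate that would turn
# the 1999 volume into the 2010 volume.
annual_rate = growth_factor ** (1 / years) - 1

print(f"{growth_factor:.0f}x over {years} years")
print(f"about {annual_rate:.0%} per year")
```

Under these assumptions the world's data grew roughly 91-fold in 11 years, which works out to about 50 percent a year.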

On the occasion of a big Spectra announcement earlier this week, Addison Snell, CEO of Intersect360 Research, explained that “the ‘Big Data’ trend is driving technology requirements in applications ranging from enterprise datacenters to academic supercomputing labs.” He went on to say that “the next generation of exascale data will require capabilities that not only provide sufficient capacity, but also deliver the speed, reliability, and data integrity features to match the needs of these environments.”

Consider these statistics (source: Making IT Matter – Chalfant/Toigo, 2009):

- Up to 70 percent of the capacity of every disk drive installed today is misused.

- 40 percent of data is inert.

- 15 percent is allocated but unused.

- 10 percent is orphan data.

- 5 percent is contraband data.
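The four smaller figures appear to be the components of the 70 percent headline number. A quick check, assuming the categories do not overlap (the source does not say so explicitly):

```python
# Share of disk capacity by category, in percent, as listed above.
# Assumption: the categories are non-overlapping parts of the 70 percent total.
misused = {
    "inert": 40,
    "allocated but unused": 15,
    "orphan": 10,
    "contraband": 5,
}

total_misused = sum(misused.values())
remaining = 100 - total_misused

print(f"misused: {total_misused}%, remaining: {remaining}%")
```

On that reading, as little as 30 percent of installed disk capacity holds actively useful data.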

Currently, disk storage makes up between 33 and 70 cents of every dollar spent on IT hardware, and this trend is accelerating.

Before delving into tape’s role in the cloud space, it’s helpful to first review the major storage categories:

- Backup: enables restoration of the most recent clean copy of the primary dataset available.

- Archive: a repository designed to store, retain, and preserve data over time, regardless of its relation to the primary dataset.

- Active Archive: An archive that manages both production and archive data in an online-accessible environment across multiple storage mediums including disk and tape.

It may seem obvious, but it’s important to point out that the value of data changes over time. With backup data, the information is more valuable today than it will be a month from now. Consider files stored on a computer that you will use to deliver a presentation five minutes from now. What if the data was lost or corrupted? With archive data, on the other hand, the value is measured over time. As our data habit grows, so does the need to prioritize it.

At the Spectra Logic event, which took place at the end of October, Chief Marketing Officer Molly Rector delivered a presentation on the state of the tape market, in which she shared the 2011 INSIC Tape Applications report. It looks at the ways tape is evolving to keep pace with the business realities of the 21st century:

“Tape has been shifting from its historical role of serving as a medium dedicated primarily to short-term backup, to a medium that addresses a much broader set of data storage goals, including:

- Active archive (the most promising segment of market growth).

- Regulatory compliance (approximately 20% – 25% of all business data created must be retained to meet compliance requirements for a specified and often lengthy period).

- Disaster recovery, which continues in its traditional requirements as a significant use of tape.”

Rector explained that for practical reasons, a copy of all or most data stored in the cloud on disk is typically migrated to tape, if only for the extra reliability that tape provides. Tape is also used in cloud storage environments for the cost savings it confers.

Many people naturally associate cloud storage with online storage and spinning disks, but in reality most data that is created in or by the cloud will end up in a tape storage mechanism. In a video, Mark Peters, Senior Analyst for Enterprise Strategy Group, examines how tape’s role in the cloud parallels its importance in traditional IT. He details the following benefits:

- Low CAPEX and OPEX cost per GB.

- Reliable long-term storage with great media longevity and support for multiple media types.

- It’s verifiable, searchable and scalable (with an active archive solution).

In Peters’ words, “[tape] remains the ultimate backup, and increasingly archival, device.” He goes on to note that tape is especially relevant for cloud in the areas of tiering and secure multi-tenancy, that is, the sharing of resources but not data. Keeping data secure is particularly important since privacy is cited as one of the major concerns with cloud. Portability is yet another plus for organizations looking to seed an offsite location or execute bulk DR restores, and it even serves as an exit strategy to return data to a customer.

Active archiving is a major part of Spectra’s strategy to fulfill the requirements of an increasingly cloud-centered computing infrastructure. In an active archive, all production data, no matter how old or infrequently accessed, can still be retrieved online. According to company literature, Spectra’s Active Archive platform, announced in April 2010, offers cost-effective, online, file-based solutions that enable user access to all created data. With it, a petabyte of data can be presented as if it were a C drive. Additionally, management is simplified; business users simply set SLAs with their customers on the time to data, which may be 90-120 seconds coming from tape, or 2-3 seconds with a disk array.
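The time-to-data SLA described above lends itself to a simple placement rule. The sketch below is illustrative only: the function name, the tier labels, and the choice to use the slow end of each quoted range as the worst case are assumptions, not part of Spectra’s product.

```python
from typing import Optional

# Worst-case time to data per tier, in seconds, using the slow end of the
# ranges quoted in the text (2-3 s for disk, 90-120 s for tape).
# Tier names and ordering (cheapest first) are assumptions for illustration.
TIERS_CHEAPEST_FIRST = [
    ("tape", 120),
    ("disk", 3),
]

def cheapest_tier_for(sla_seconds: float) -> Optional[str]:
    """Return the cheapest tier whose worst-case retrieval time meets the SLA."""
    for name, worst_case_seconds in TIERS_CHEAPEST_FIRST:
        if worst_case_seconds <= sla_seconds:
            return name
    return None  # no tier in this sketch can meet the SLA

print(cheapest_tier_for(300))  # an SLA of five minutes
print(cheapest_tier_for(10))   # an SLA of ten seconds
print(cheapest_tier_for(1))    # an SLA of one second
```

Checking the cheapest tier first reflects the cost argument made for tape: data lands on disk only when the agreed time to data is too short for a tape retrieval.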

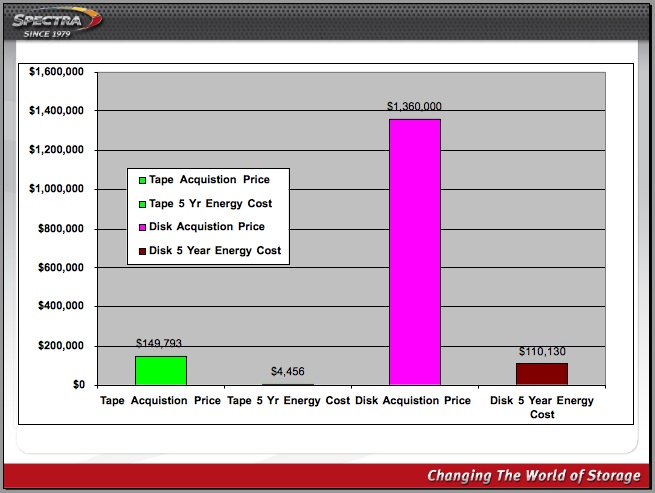

Archiving also reduces overall data volumes. It can turn 2.6 petabytes of data into 760 MB of managed data (as explained further in the accompanying slides), and when you look at acquisition and energy costs, tape comes out ahead (as illustrated in the first slide). There are also financial and personnel costs involved with migration. Best practice is to migrate tape data to new media in the same library every 7½ years, or to move disk data to a new array every 3 to 4 years. As for portability, the quickest way to transport a petabyte of data is to send it through the air in a jumbo jet.
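To see how the two migration schedules compare over time, one can count the migrations that fall due within a retention period. The 15-year horizon below is an assumption chosen for illustration; the intervals (7.5 years for tape, 3 to 4 years for disk) come from the text.

```python
import math

def migrations_due(retention_years: float, interval_years: float) -> int:
    """Count migrations that fall due up to and including the retention horizon."""
    return math.floor(retention_years / interval_years)

RETENTION_YEARS = 15  # assumed horizon, for illustration only

tape = migrations_due(RETENTION_YEARS, 7.5)
disk_fewest = migrations_due(RETENTION_YEARS, 4)
disk_most = migrations_due(RETENTION_YEARS, 3)

print(f"tape: {tape} migrations")
print(f"disk: {disk_fewest} to {disk_most} migrations")
```

Over the assumed 15 years, tape data would be migrated twice, while the same data on disk would be moved three to five times, each move carrying its own financial and personnel cost.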

It certainly seems that Spectra is positioning itself as a big data storage provider, which it also refers to as exabyte storage. To that end, Spectra just announced a high-capacity T-Finity enterprise tape library capable of storing more than 3.6 exabytes of data. This represents the world’s largest data storage system – providing the highest capacity single library and the highest capacity library complex. A single T-Finity library will now expand to 40 frames for a capacity of up to 50,000 tape cartridges, and in a library complex, up to eight libraries can be clustered together for a capacity of up to 400,000 tape cartridges.
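The cartridge counts and the headline capacity can be cross-checked with simple arithmetic. The sketch assumes that the 3.6-exabyte figure refers to the full eight-library complex and that units are decimal. The per-cartridge number it derives is only the average implied by dividing the two published figures; it is not a published specification.

```python
# Figures from the text.
CARTRIDGES_PER_LIBRARY = 50_000
LIBRARIES_PER_COMPLEX = 8

# 3.6 exabytes, assuming decimal units and that the figure covers the
# whole eight-library complex (both are assumptions).
COMPLEX_CAPACITY_BYTES = 36 * 10**17

cartridges_per_complex = CARTRIDGES_PER_LIBRARY * LIBRARIES_PER_COMPLEX

# Implied average per cartridge: a derived number, not a published spec.
bytes_per_cartridge = COMPLEX_CAPACITY_BYTES // cartridges_per_complex
tb_per_cartridge = bytes_per_cartridge / 10**12

print(f"{cartridges_per_complex:,} cartridges per complex")
print(f"about {tb_per_cartridge:g} TB per cartridge on average")
```

Eight libraries of 50,000 cartridges each give the 400,000 quoted, and spreading 3.6 exabytes across them implies an average of about 9 TB per cartridge.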

The jumbo storage solution will run the company’s recently upgraded BlueScale management software. The latest version, BlueScale 12, delivers 35 to 60 percent faster robotic performance across Spectra’s enterprise and mid-range tape libraries, 15 to 20 times quicker library ‘power on’ times, and improved barcode scanning times. Another new advance, the Redundant Arrays of Independent Tapes (RAIT) architecture, developed with Spectra partner HPSS, “significantly improves data reliability in high performance, big data environments,” according to Jason Alt, senior software engineer, National Center for Supercomputing Applications.

Chairman, CEO and Founder Nathan C. Thompson originally designed Spectra’s storage systems for high density, not speed, but customers were not willing to make that tradeoff, explains Rector. Active archiving strikes a balance between keeping data visible in online disk arrays and moving the data to more cost-effective near-line or off-line tape.

So who needs all that storage? Spectra sees this solution as a natural fit for a wide range of communities, from medicine and genomics to nuclear physics and petroleum exploration, in addition to supercomputing, media and entertainment, Internet storage, surveillance and, of course, cloud computing. As an interesting side note, when it comes to archiving data, Spectra notes that the general enterprise can archive about 80 percent of its data, but with HPC this figure is closer to 90-95 percent.

Spectra has well over 150 petascale-class customers, including Argonne National Laboratory, NCSA, NASA and Entertainment Tonight, as well as many that do not want to be named. Whether storing petabytes or exabytes, value and density are paramount. Organizations looking to meet their specific storage needs ought to be concerned with finding the right balance of speed, density and cost. Then there’s the long-term outlook: scalability. Tape libraries can stay active for 10 years, 15 years, or longer, incorporating new technologies as they become available.

Of course, tape is not an all-or-nothing proposition. In most instances, a tiered storage approach provides an optimal cost/benefit profile, a concept summarized by Chris Marsh, Spectra’s IT market and development manager:

“Cloud storage providers often implement a tiered storage approach that provides upfront performance to customers via performance disk, while relying on tape storage on the back end. This is an effective way of meeting their customers’ requirements and driving more profitability for their organization. So, regardless of whether it’s a business or technology decision, tape adds value to cloud storage and should be considered when reviewing any specific cloud storage service provider.”

Marsh wrote an in-depth 3-part feature on the value of tape for cloud storage providers. In it, he lists the key characteristics for a cloud service, including multi-tenancy, security, data integrity verification, retrieval expectations, and exit strategies.

For anyone wondering why this shift is happening now and not sooner, the simple answer is there wasn’t this much data before! There is a belief that data is growing faster than storage capacity, so a shift is needed: one that delivers greater efficiency, gives users access to the data they need when they need it, and does so in a reliable, cost-effective, and energy-efficient manner.