As the name implies, this new feature highlights the top research stories of the week, hand-selected from prominent science journals and leading conference proceedings. This week brings a wide range of topics, from stopping the spread of pandemics to the latest trends in programming and chip design, along with tools for enhancing the quality of simulation models.

Heading Off Pandemics

Virginia Tech researchers Keith R. Bisset, Stephen Eubank, and Madhav V. Marathe presented a paper at the 2012 Winter Simulation Conference that explores how high-performance informatics can enhance pandemic preparedness.

The authors explain that pandemics, such as the recent H1N1 influenza, occur on a global scale and affect large swathes of the population. Their spread is closely tied to human behavior and social contact networks. The ordinary behavior and daily activities of individuals in modern society (with its large urban centers and reliance on international travel) provide the perfect environment for rapid disease propagation. Change the behavior, however, and you change the progression of a disease outbreak. This maxim is at the heart of public health policies aimed at mitigating the spread of infectious disease.
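The idea that changing behavior changes an outbreak can be illustrated with a toy model. The Python sketch that follows runs a discrete-time SIR (susceptible, infectious, recovered) epidemic on a random contact network and treats behavior change as a fraction of contacts that people drop. It is a hypothetical illustration only. The random-graph network, the per-contact transmission probability `beta`, the fixed infectious period and the `contact_reduction` parameter are all assumptions, and real decision-support tools use far richer, data-driven models of social contact networks.

```python
import random


def make_contact_network(n, mean_degree, rng):
    """Random (Erdos-Renyi) graph as adjacency sets: a stand-in for a real contact network."""
    p = mean_degree / (n - 1)
    adj = [set() for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                adj[i].add(j)
                adj[j].add(i)
    return adj


def outbreak_size(adj, beta, infectious_days, n_seeds, contact_reduction, rng):
    """Discrete-time SIR on a network; returns how many people were ever infected.

    contact_reduction is the (assumed) fraction of contacts people drop, e.g. through
    distancing; each contact edge is removed independently with that probability.
    """
    n = len(adj)
    active = [set() for _ in range(n)]
    for i in range(n):
        for j in adj[i]:
            if i < j and rng.random() >= contact_reduction:
                active[i].add(j)
                active[j].add(i)

    state = ["S"] * n
    days_left = [0] * n
    for v in rng.sample(range(n), n_seeds):
        state[v], days_left[v] = "I", infectious_days

    while "I" in state:
        infected = [v for v in range(n) if state[v] == "I"]
        # Each infectious person infects each susceptible remaining contact with probability beta.
        newly = {u for v in infected for u in active[v]
                 if state[u] == "S" and rng.random() < beta}
        # Infectious people recover after a fixed number of days.
        for v in infected:
            days_left[v] -= 1
            if days_left[v] <= 0:
                state[v] = "R"
        for u in newly:
            state[u], days_left[u] = "I", infectious_days
    return state.count("R")


if __name__ == "__main__":
    net = make_contact_network(500, 8, random.Random(42))
    for cut in (0.0, 0.5, 0.8):
        sizes = [outbreak_size(net, 0.1, 5, 3, cut, random.Random(s)) for s in range(20)]
        print(f"contacts dropped: {cut:.0%}  mean outbreak size: {sum(sizes) / len(sizes):.1f}")
```

A single stochastic run is noisy, which is why the example averages over several random seeds before comparing levels of contact reduction.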

Armed with this knowledge, experts can develop effective planning and response strategies to keep pandemics in check. According to the authors, “recent quantitative changes in high performance computing and networking have created new opportunities for collecting, integrating, analyzing and accessing information related to such large social contact networks and epidemic outbreaks.” The Virginia Tech researchers have leveraged these advances to create the Cyber Infrastructure for EPIdemics (CIEPI), an HPC-oriented decision-support environment that helps communities plan for and respond to epidemics.

Help for the “Average Technologist”

Another paper in the Proceedings of the Winter Simulation Conference addresses ways to democratize modeling and simulation. According to Justyna Zander, Senior Technical Education Evangelist at The MathWorks (who is also affiliated with Gdansk University of Technology in Poland), and Pieter J. Mosterman, Senior Research Scientist at The MathWorks (who is also an adjunct professor at McGill University), the list of practical applications of computational science and engineering keeps growing. People now routinely use search engines, social media, and the products of engineering to enhance their quality of life.

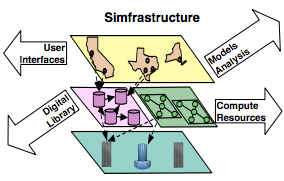

The researchers propose an online modeling and simulation (M&S) platform to help the “average technologist” make predictions and extrapolations. In the spirit of “open innovation,” the project will leverage crowd-sourcing and social-network-based processes. They expect the tool to support a wide range of applications, such as behavioral model analysis, big data extraction, and human computation.

In the words of the authors: “The platform aims at connecting users, developers, researchers, passionate citizens, and scientists in a professional network and opens the door to collaborative and multidisciplinary innovations.”

OpenStream and OpenMP

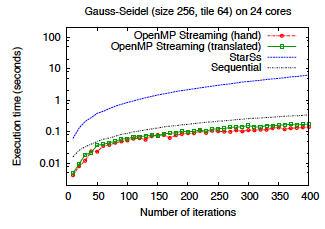

OpenMP is an API that gives shared-memory programmers a portable way to develop parallel applications on a range of platforms. A recent paper in the journal ACM Transactions on Architecture and Code Optimization (TACO) explores a streaming data-flow extension to the OpenMP 3.0 programming model. In this well-structured 26-page paper, “OpenStream: Expressiveness and data-flow compilation of OpenMP streaming programs,” researchers Antoniu Pop and Albert Cohen of INRIA and École Normale Supérieure (Paris, France) present the extension along with an in-depth evaluation of it.

The work addresses the need for productivity-oriented programming models that exploit multicore architectures. The INRIA researchers argue for the strengths of parallel programming languages based on the data-flow model of computation. More specifically, they examine the stream programming model for OpenMP and introduce OpenStream, a data-flow extension of OpenMP that expresses dynamic dependent tasks. As the INRIA researchers explain, “the language supports nested task creation, modular composition, variable and unbounded sets of producers/consumers, and first-class streams.”
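To make the data-flow idea concrete, here is a rough analogy in Python. It is not OpenStream itself, whose programs are C code annotated with OpenMP-style pragmas. In this sketch each task is a thread and each stream is a FIFO `queue.Queue`. A task proceeds as soon as its input data arrives, so the stages synchronize only through the data they exchange rather than through barriers. The stage functions and the end-of-stream sentinel are hypothetical choices made for this illustration. Because of Python's global interpreter lock, the sketch shows the dependence structure, not real multicore speedup.

```python
import queue
import threading

_END = object()  # hypothetical sentinel marking the end of a stream


def producer(out_stream, values):
    """Source task: writes each value to its output stream, then closes it."""
    for v in values:
        out_stream.put(v)
    out_stream.put(_END)


def mapper(in_stream, out_stream, fn):
    """Intermediate task: fires whenever an input element arrives."""
    while True:
        item = in_stream.get()  # blocks until the producer has written data
        if item is _END:
            out_stream.put(_END)
            return
        out_stream.put(fn(item))


def consumer(in_stream, results):
    """Sink task: collects elements until the stream is closed."""
    while True:
        item = in_stream.get()
        if item is _END:
            return
        results.append(item)


def run_pipeline(values, fn):
    a, b = queue.Queue(), queue.Queue()  # the two streams connecting three tasks
    results = []
    tasks = [
        threading.Thread(target=producer, args=(a, values)),
        threading.Thread(target=mapper, args=(a, b, fn)),
        threading.Thread(target=consumer, args=(b, results)),
    ]
    for t in tasks:
        t.start()
    for t in tasks:
        t.join()
    return results


if __name__ == "__main__":
    print(run_pipeline(range(5), lambda x: x * x))
```

With one producer and one consumer per stream, FIFO order is preserved. OpenStream goes further, as the quote notes, by allowing variable and unbounded sets of producers and consumers on a stream.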

“We demonstrate the performance advantages of a data-flow execution model compared to more restricted task and barrier models. We also demonstrate the efficiency of our compilation and runtime algorithms for the support of complex dependence patterns arising from StarSs benchmarks,” notes the abstract.

The Superconductor Promise

There’s no denying that the pace of Moore’s Law scaling has slowed as transistor sizes approach the atomic level. Last week, DARPA and a semiconductor research coalition unveiled the $194 million STARnet program to address the physical limitations of chip design. In light of this news, recent research out of Nagoya University is especially relevant.

Quantum engineering specialist Akira Fukimaki has authored a paper on the advancement of superconductor digital electronics that highlights the role of the Rapid Single Flux Quantum (RSFQ) logic circuit. Next-generation chips will need to minimize both power consumption and gate delay, and according to Fukimaki, that is the promise of RSFQ circuits.

“Ultra short pulse of a voltage generated across a Josephson junction and release from charging/discharging process for signal transmission in RSFQ circuits enable us to reduce power consumption and gate delay,” he writes.
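The physics behind the quote can be made concrete with textbook relations. The voltage pulse a Josephson junction emits has a time integral equal to one magnetic flux quantum, Φ0 = h/2e ≈ 2.07 mV·ps. Each switching event dissipates energy on the order of I_c·Φ0, where I_c is the junction's critical current. The Python sketch that follows is a back-of-envelope illustration, not a calculation from Fukimaki's paper. The critical current, pulse amplitude, junction count, clock rate and activity factor in it are assumed values chosen for the example.

```python
# Exact SI values (2019 redefinition).
PLANCK_H = 6.62607015e-34             # J*s
ELEMENTARY_CHARGE = 1.602176634e-19   # C
PHI_0 = PLANCK_H / (2 * ELEMENTARY_CHARGE)  # magnetic flux quantum, about 2.068e-15 V*s


def switching_energy(critical_current_a):
    """Order-of-magnitude energy dissipated per SFQ switching event: E ~ Ic * Phi0 (joules)."""
    return critical_current_a * PHI_0


def pulse_duration(pulse_amplitude_v):
    """Rough pulse width, treating the pulse as a rectangle whose area is exactly Phi0."""
    return PHI_0 / pulse_amplitude_v


def dynamic_power(critical_current_a, n_junctions, clock_hz, activity=1.0):
    """Switching (dynamic) power only; excludes static bias-resistor dissipation and cooling."""
    return switching_energy(critical_current_a) * n_junctions * clock_hz * activity


if __name__ == "__main__":
    ic = 100e-6  # assumed critical current: 100 microamps
    print(f"Phi0 = {PHI_0:.4e} V*s")
    print(f"energy per switch = {switching_energy(ic):.3e} J")  # about 2.07e-19 J
    print(f"pulse width at 1 mV = {pulse_duration(1e-3) * 1e12:.2f} ps")
    print(f"dynamic power, 1e6 junctions at 10 GHz = {dynamic_power(ic, 1_000_000, 10e9) * 1e3:.2f} mW")
```

The `dynamic_power` figure covers switching only. In conventional RSFQ circuits, static power dissipated in the bias resistors is often much larger, which is one motivation for energy-efficient SFQ designs.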

Fukimaki argues that RSFQ integrated circuits (ICs) have advantages over semiconductor ICs, and notes that energy-efficient single flux quantum circuits have been proposed that could yield additional benefits. Thanks to advances in the fabrication process, RSFQ ICs have a proven role in mixed-signal and IT applications, including datacenters and supercomputers.

Working Smarter, Not Harder

Is this a rule to live by or an overused maxim? A little of both, maybe, but it’s also the title of a recent paper from researchers Susan M. Sanchez of the Naval Postgraduate School in Monterey, Calif., and Hong Wan of Purdue University in West Lafayette, Ind.

“Work smarter, not harder: a tutorial on designing and conducting simulation experiments,” published in the WSC ’12 proceedings, delves into one of a scientist’s most important tasks: designing and running experiments on simulation models. Such models not only greatly enhance scientific understanding but also have implications that extend to national defense, industry and manufacturing, and even public policy.

Creating an accurate model is complex work that can involve thousands of factors. Although realistic, well-founded models rest on high-dimensional experimental designs, the authors claim, many large-scale simulation models are constructed in ad hoc ways. What’s needed, they argue, is a solid foundation in experimental design. Their tutorial covers basic design concepts and best practices for conducting simulation experiments, with the goal of helping other researchers transform their simulation studies into simulation experiments.
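As a rough illustration of why design matters when a model has many inputs, the Python sketch that follows contrasts two generic designs. The first is a two-level full factorial, whose run count grows as 2^k with the number of factors k. The second is a Latin hypercube, a space-filling design whose run count is chosen independently of k. These are textbook designs picked for illustration. The function names and the factor ranges are hypothetical, and the sketch does not reproduce the specific designs or recommendations in Sanchez and Wan's tutorial.

```python
import itertools
import random


def full_factorial_2level(k):
    """Every combination of low (-1) and high (+1) settings for k factors: 2**k runs."""
    return [list(levels) for levels in itertools.product((-1, 1), repeat=k)]


def latin_hypercube(n_runs, k, rng):
    """n_runs points in the unit cube [0, 1)^k.

    Each factor's range is cut into n_runs equal strata, and each stratum is
    sampled exactly once per factor, so every factor is spread across its range.
    """
    columns = []
    for _ in range(k):
        strata = list(range(n_runs))
        rng.shuffle(strata)  # random pairing of strata across factors
        columns.append([(s + rng.random()) / n_runs for s in strata])
    return [list(row) for row in zip(*columns)]


def scale(point, bounds):
    """Map a unit-cube point onto (low, high) ranges for each factor."""
    return [low + x * (high - low) for x, (low, high) in zip(point, bounds)]


if __name__ == "__main__":
    k = 20
    print("two-level full factorial runs for 20 factors:", 2 ** k)
    design = latin_hypercube(50, k, random.Random(0))
    bounds = [(0.0, 10.0)] * k  # hypothetical factor ranges
    first_run = scale(design[0], bounds)
    print("Latin hypercube runs:", len(design), "factors per run:", len(first_run))
    print("two-level factorial for 3 factors:", full_factorial_2level(3))
```

With 20 factors, the full factorial needs 2^20 = 1,048,576 runs, while this Latin hypercube spreads 50 runs across all 20 factors. The trade-off is that a small space-filling design cannot estimate every interaction. Weighing choices like that is what a grounding in experimental design helps with.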