A couple months ago, Lawrence Livermore National Laboratory’s Sequoia supercomputer broke the million core barrier for a real-world application; now it’s done it again. This time, not only has the Sequoia surpassed the 1.5 million mark, but researchers have successfully harnessed all 1,572,864 of the machine’s cores for one impressive simulation.

|

|

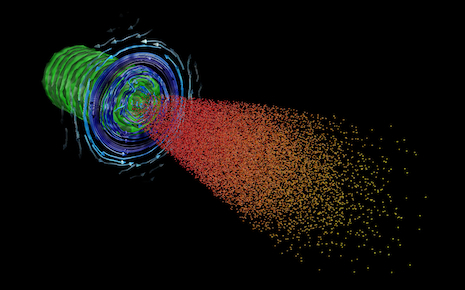

| OSIRIS simulation on Sequoia of the interaction of a fast-ignition-scale laser with a dense DT plasma. The laser field is shown in green, the blue arrows illustrate the magnetic field lines at the plasma interface and the red/yellow spheres are the laser-accelerated electrons that will heat and ignite the fuel. | |

Frederico Fiuza, a physicist and Lawrence Fellow at LLNL, used what are known as particle-in-cell (PIC) code simulations on Sequoia as part of a fusion research project. The simulations provide a detailed look at the interaction of powerful lasers with dense plasmas.

According to LLNL:

These simulations are allowing researchers, for the first time, to model the interaction of realistic fast-ignition-scale lasers with dense plasmas in three dimensions with sufficient speed to explore a large parameter space and optimize the design for ignition. Each simulation evolves the dynamics of more than 100 billion particles for more than 100,000 computational time steps. This is approximately an order of magnitude larger than the previous largest simulations of fast ignition.

The code used in these simulations is OSIRIS, a PIC code that was developed over the last 10 years through a collaboration between the University of California, Los Angeles and Portugal’s Instituto Superior Técnico. Extending the code to all 1.6 million cores of Sequoia was an exercise in extreme scaling and parallel performance.

There are two ways to implement scaling. Increasing the number of cores for a problem of fixed size is called “strong scaling.” Using this approach, OSIRIS obtained 75 percent efficiency on the full machine. The second method, called “weak scaling,” involves increasing the total problem size – this approach led to 97 percent efficiency.

To illustrate the principle in real-world terms, Fiuza states that “a simulation that would take an entire year to perform on a medium-size cluster of 4,000 cores can be performed in a single day [on Sequoia]….Alternatively, problems 400 times greater in size can be simulated in the same amount of time.”

Thermonuclear fusion research, which essentially seeks to recreate the power of the sun in a laboratory setting, is considered the holy grail of green energy. It’s a very difficult proposition but the payoff is huge. If such a feat is achievable, and scientists generally agree that it is, it will require these kinds of advances.

As Fiuza reports: “The combination of this unique supercomputer and this highly efficient and scalable code is allowing for transformative research.”

Sequoia is a National Nuclear Security Administration (NNSA) machine, installed at Lawrence Livermore National Laboratory. With a top theoretical speed of 20.1 petaflops and a benchmarked (Linpack) performance of 16.32 petaflops, the IBM Sequoia supercomputer replaced the K computer as the world’s fastest in June 2012. In November 2012, Sequoia dropped into second place, ousted from the top spot by the 17.6 petaflop (Linpack) Titan installed at Oak Ridge National Laboratory.