Whether it is a car on the highway, a plane in the air, or a ship on the ocean, every one of these transport systems moves through a fluid. And in nearly all cases, the fluid flowing around these vehicles will be turbulent.

Transportation accounts for over 20% of global energy consumption, and a large fraction of the energy expended in moving goods and people is mediated by wall-bounded turbulence, which makes it a significant component of the nation’s energy budget. However, despite this energy impact, scientists do not possess a sufficiently detailed understanding of the physics of turbulent flows to permit reliable predictions of the lift or drag of these systems.

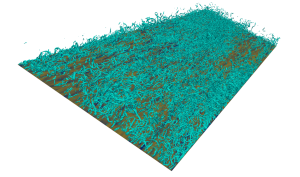

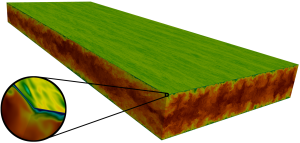

To probe the physics of wall-bounded turbulent flows, a team of scientists at the University of Texas is conducting the largest-ever Direct Numerical Simulation (DNS) of wall-bounded turbulence, at a friction Reynolds number of Reτ = 5200. With 242 billion degrees of freedom, this simulation is fifteen times larger than the previous largest channel DNS, conducted by Hoyas and Jimenez in 2006.

In a DNS of turbulence, the equations of fluid motion (the Navier-Stokes equations) are solved, without any modeling, at sufficient resolution to represent all the scales of turbulence. In general, the full three-dimensional data fields of turbulent flow are difficult to obtain experimentally. On the other hand, computer simulations provide exquisitely detailed and highly reliable data, which have driven a number of discoveries regarding the nature of wall-bounded turbulence.

However, the use of DNS to study high speed flows has been hindered by the significant computational expense of the simulations. Resolving all the essential scales of turbulence imposes enormous computational and memory requirements, so DNS must be performed on the largest supercomputers. For this reason, DNS is a challenging HPC problem and is commonly used to evaluate the performance of Top-500 systems. Because running a DNS is so expensive, improving computational efficiency allows the simulation of more realistic scenarios (higher Reynolds numbers and larger domains) than would otherwise be possible.

M.K. (Myoungkyu) Lee, the lead developer of the new DNS code used in the simulations, will present the results of numerous software optimizations during the Extreme-Scale Applications Session at SC13, on Tuesday, Nov 19th, 1:30PM – 2:00PM. The presentation will detail scaling results across a variety of Top-500 platforms, such as the Texas Advanced Computing Center’s Lonestar and Stampede, the National Center for Supercomputing Applications’ Blue Waters, and the Argonne Leadership Computing Facility’s Blue Gene/Q Mira, where the full scientific simulation was conducted.

The results demonstrate that performance is highly dependent on characteristics of the communication network and memory bandwidth, rather than single core performance. On Blue Gene/Q, for instance, the code exhibits approximately 80% strong scaling parallel efficiency at 786K cores relative to performance on 65K cores. The largest benchmark case uses 2.3 trillion grid points and the corresponding memory requirement is 130 Terabytes.
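As a rough illustration of how a strong scaling figure like the one above is computed, here is a minimal sketch. The timings are hypothetical values chosen only so that the arithmetic lands on 80%; they are not measurements from the talk. The core counts assume that "65K" and "786K" mean 65,536 and 786,432, and the memory estimate assumes decimal terabytes.

```python
def strong_scaling_efficiency(base_cores, base_time, cores, time):
    """Parallel efficiency of a strong-scaling run relative to a baseline.

    The problem size is fixed, so the ideal speedup is cores / base_cores.
    """
    speedup = base_time / time
    ideal_speedup = cores / base_cores
    return speedup / ideal_speedup


# Assumed core counts: "65K" and "786K" read as powers-of-two multiples.
BASE_CORES = 65_536
CORES = 786_432

# Hypothetical wall-clock seconds per time step (illustration only).
BASE_TIME = 12.0
TIME = 1.25

# Memory per grid point for the largest benchmark quoted in the article,
# assuming 1 TB = 10**12 bytes.
BYTES_PER_POINT = 130e12 / 2.3e12

if __name__ == "__main__":
    eff = strong_scaling_efficiency(BASE_CORES, BASE_TIME, CORES, TIME)
    print(f"strong-scaling efficiency: {eff:.0%}")
    print(f"memory per grid point: {BYTES_PER_POINT:.1f} bytes")
```

With twelve times as many cores, an ideal code would run twelve times faster; in this made-up example it runs 9.6 times faster, which gives an efficiency of 80%.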

The code was developed using Fourier spectral methods, which are typically preferred for turbulence DNS because of their superior resolution properties, despite the resulting algorithmic need for expensive communication. Optimization was performed to address several major issues: efficiency of banded matrix linear algebra, cache reuse and memory access, threading efficiency, and communication for the global data transposes.
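The resolution advantage of Fourier spectral methods can be seen in a small, self-contained sketch. It differentiates a periodic test function on a coarse 16-point grid in two ways: in Fourier space, and with a second-order central difference. Everything here is illustrative. The naive O(N^2) transform stands in for a real FFT library, and the test function and grid size are assumptions, not details of the DNS code.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive O(N^2) discrete Fourier transform (adequate for a small demo)."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for j, v in enumerate(values):
            acc += v * cmath.exp(sign * 2j * math.pi * k * j / n)
        out.append(acc / n if inverse else acc)
    return out


def spectral_derivative(samples):
    """Differentiate a 2*pi-periodic function sampled on a uniform grid."""
    n = len(samples)
    coeffs = dft(samples)
    for k in range(n):
        # Map index k to a signed wavenumber; zero the Nyquist mode for even n.
        wavenumber = k if k < n / 2 else k - n
        if n % 2 == 0 and k == n // 2:
            wavenumber = 0
        coeffs[k] *= 1j * wavenumber
    return [c.real for c in dft(coeffs, inverse=True)]


def central_difference(samples, spacing):
    """Second-order periodic central difference, for comparison."""
    n = len(samples)
    return [(samples[(i + 1) % n] - samples[i - 1]) / (2 * spacing)
            for i in range(n)]


N = 16
GRID = [2 * math.pi * i / N for i in range(N)]
U = [math.sin(3 * x) for x in GRID]
EXACT = [3 * math.cos(3 * x) for x in GRID]

spectral_error = max(abs(a - b)
                     for a, b in zip(spectral_derivative(U), EXACT))
fd_error = max(abs(a - b)
               for a, b in zip(central_difference(U, 2 * math.pi / N), EXACT))

if __name__ == "__main__":
    print(f"spectral max error:          {spectral_error:.2e}")
    print(f"finite-difference max error: {fd_error:.2e}")
```

On this grid the spectral derivative is accurate to roughly round-off, while the finite-difference error is of order one. The price, as noted above, is that each transform needs data from the whole domain, which on a distributed machine means global communication.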

A special linear algebra solver was developed, based on a custom matrix data structure in which non-zero elements are moved to otherwise empty elements, reducing the memory requirement by half, which is important for cache management. In addition, it was found that compilers did not efficiently optimize the low-level operations on matrix elements for the LU decomposition. As a result, loops were unrolled by hand to improve reuse of data in cache.
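For readers unfamiliar with banded solvers, the sketch below shows the general idea of storing only the band of a matrix and running LU elimination on that compact form. It is a generic textbook layout, not the custom data structure described above, whose details are not given here. The function names, the test matrix, and the choice to skip pivoting (which assumes a diagonally dominant matrix) are all assumptions made for illustration.

```python
def to_band(dense, kl, ku):
    """Pack a dense banded matrix into rows of width kl + ku + 1.

    Entry A[i][j] is stored at band[i][j - i + kl]. Slots that fall
    outside the matrix (in the first and last rows) stay zero.
    """
    n = len(dense)
    band = [[0.0] * (kl + ku + 1) for _ in range(n)]
    for i in range(n):
        for j in range(max(0, i - kl), min(n, i + ku + 1)):
            band[i][j - i + kl] = float(dense[i][j])
    return band


def solve_banded(band, kl, ku, rhs):
    """Solve A x = rhs by elimination without pivoting, touching only the band.

    Assumes A is diagonally dominant, so skipping pivoting is safe.
    """
    n = len(band)
    a = [row[:] for row in band]  # work on a copy of the band
    x = [float(v) for v in rhs]
    # Forward elimination: only kl rows below each pivot can be non-zero.
    for p in range(n):
        pivot = a[p][kl]
        for i in range(p + 1, min(n, p + kl + 1)):
            factor = a[i][p - i + kl] / pivot
            a[i][p - i + kl] = 0.0
            for j in range(p + 1, min(n, p + ku + 1)):
                a[i][j - i + kl] -= factor * a[p][j - p + kl]
            x[i] -= factor * x[p]
    # Back substitution over the upper band.
    for i in range(n - 1, -1, -1):
        total = x[i]
        for j in range(i + 1, min(n, i + ku + 1)):
            total -= a[i][j - i + kl] * x[j]
        x[i] = total / a[i][kl]
    return x


# Hypothetical 5x5 tridiagonal test system with a known solution.
SIZE = 5
DENSE = [[4.0 if i == j else -1.0 if abs(i - j) == 1 else 0.0
          for j in range(SIZE)] for i in range(SIZE)]
X_TRUE = [1.0, 2.0, 3.0, 4.0, 5.0]
RHS = [sum(DENSE[i][j] * X_TRUE[j] for j in range(SIZE)) for i in range(SIZE)]
BAND = to_band(DENSE, 1, 1)
SOLUTION = solve_banded(BAND, 1, 1, RHS)

if __name__ == "__main__":
    print("stored values:", SIZE * len(BAND[0]), "of", SIZE * SIZE)
    print("solution:", [round(v, 12) for v in SOLUTION])
```

Even this plain layout leaves unused zero slots in the first and last rows of the packed array, which hints at why a more careful arrangement of elements can save further memory.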

FFTs, on-node data reordering, and the time advance were all threaded using OpenMP to enhance single-node performance. The threading was very effective, with the code demonstrating nearly perfect OpenMP scalability (99%).

The talk will also discuss how replacing the existing library for 3D global Fast Fourier Transforms (P3DFFT) with a new library built on the FFTW 3.3 MPI library led to substantially improved communication performance.

The full scientific simulation used 300 million core hours on ALCF’s BG/Q Mira from the Department of Energy Early Science Program and the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) 2013 Program. Each restart file generated by the simulation is 1.8 TB in size, with approximately eighty such files archived for long-term postprocessing and investigation. Postprocessing this much data is itself a supercomputing challenge.

Presentation Information

Title : Petascale Direct Numerical Simulation of Turbulent Channel Flow on up to 786K Cores

Location : Room 201/203

Session : Extreme-Scale Applications

Time : Tuesday, Nov 19th, 1:30PM – 2:00PM

Presenter : M.K. (Myoungkyu) Lee

SC13 Scheduler : http://sc13.supercomputing.org/schedule/event_detail.php?evid=pap689

About

M.K. (Myoungkyu) Lee is a Ph.D. student in the Department of Mechanical Engineering at the University of Texas at Austin.


Nicholas Malaya is a researcher in the Center for Predictive Engineering and Computational Sciences (PECOS) within the Institute for Computational Engineering and Sciences (ICES) at The University of Texas at Austin.


Robert D. Moser holds the W. A. “Tex” Moncrief Jr. Chair in Computational Engineering and Sciences and is a professor of mechanical engineering in thermal fluid systems. He serves as the director of the ICES Center for Predictive Engineering and Computational Sciences (PECOS) and deputy director of the Institute for Computational Engineering and Sciences (ICES).
