A plan by the DOE and the National Nuclear Security Administration (NNSA) to develop and deploy exascale technology by 2023 received strong backing yesterday from an Advanced Scientific Computing Advisory Committee (ASCAC) sub-committee, but with caveats in the form of seven recommendations for strengthening management of the Exascale Computing Initiative (ECI).

Subcommittee chair Dan Reed, vice president for research and economic development at the University of Iowa, presented the report and findings at an ASCAC meeting. “Like any ambitious undertaking, DOE’s proposed exascale computing initiative (ECI) involves some risks. Despite the risks, the benefits of the initiative to scientific discovery, national security and U.S. economic competitiveness are clear and compelling,” he said.

The subcommittee draft report was approved with a final full ASCAC version expected in August. Reed called the ECI well-crafted and noted DOE’s demonstrated ability to manage complicated, multi-stakeholder projects. Perhaps surprisingly, technology challenges were a subordinate part of the report. Instead, the report focused on project management.

Reed said, “We chose, and we think appropriately, to focus primarily on the organization and management issues because the technical issues and application issues have been reviewed so extensively for so many years.” (The by-now-familiar 10 technical challenges defined by DOE are listed further below.) Technology challenges clearly remain, he agreed.

The sub-committee’s detailed recommendations include:

- Develop a detailed management and execution plan that defines clear responsibilities and decision-making authority to manage resources, risks, and dependencies appropriately across vendors, DOE laboratories, and other participants.

- As part of the execution plan, clearly distinguish essential system attributes (e.g., sustained performance levels) from aspirational ones (e.g., specific energy consumption goals) and focus effort accordingly.

- Given the scope, complexity, and potential impact of the ECI, conduct periodic external reviews by a carefully constituted advisory board.

- Mitigate software risks by developing evolutionary alternatives to more innovative, but risky, alternatives.

- Unlike other elements of the hardware/software ecosystem, application performance and stability are mission critical, necessitating continued focus on hardware/software co-design to meet application needs.

- Remain cognizant of the need for the ECI to support data intensive and computation intensive workloads.

- Where appropriate, work with other federal research agencies and international partners on workforce development and long-term research needs, while not creating dependences that could delay or imperil the execution plan.

Reed emphasized the need to be realistic in approaching the project and urged caution in setting expectations, particularly since the project is receiving wider attention in Congress.

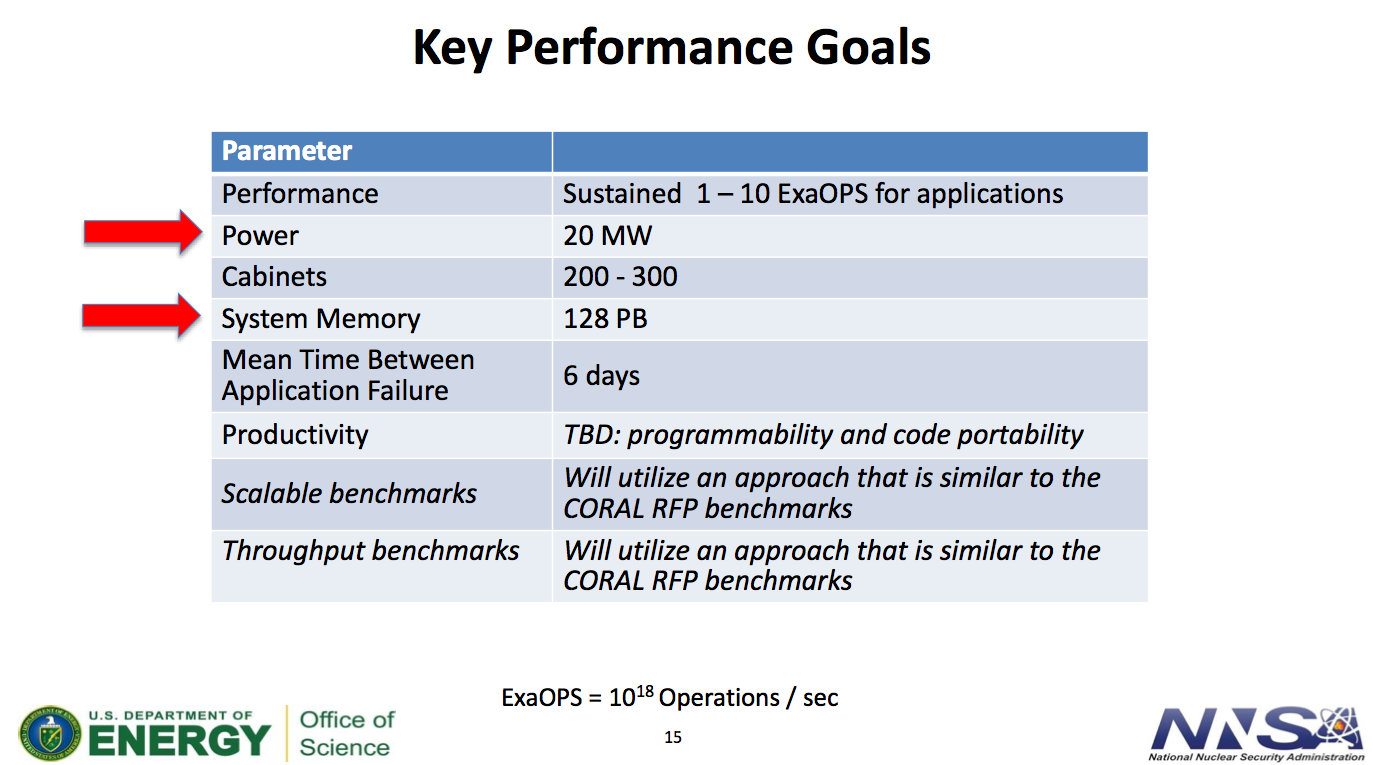

“There’s a lot of uncertainty about the enabling technology still because this is a multi-year R&D plan. Innovation is still required. One of the things we want to ensure is that people don’t focus on the subsidiary metrics at the risk of those becoming part of the public perception of what success criteria should be. People latch onto figures of merit, sometimes rightly and sometimes wrongly. This is as much a political guidance as a technical one,” said Reed.

Co-design, productive use of applications (legacy and new), and focusing on DOE and NNSA goals to advance science, enhance national competitiveness and assure nuclear stockpile stewardship are all emphasized in the report. Extending the benefits of extreme scale computing beyond these rather exclusive communities was also a theme.

“The whole point [of ECI] is to do a revolutionary leap forward. But it’s also important as part of that to the extent possible that we build broad ecosystems because the economic pull from a broad ecosystem will bring in more applications developers, it will lead to not just exascale laboratory systems, but also petascale research lab systems [used by] a much broader user base and shift the economics as well,” said Reed.

The sub-committee also recognized the growth of data-intensive computing to near equal footing with compute-intensive computing. Reed emphasized that DOE should “keep in mind that data intensive and computationally intensive workflows both matter and in fact most of the time they are the same thing. They are intertwined pretty deeply [and] draw on the same ecosystems of hardware and software. Both matter. That drives as a corollary some focus on a new generation of analysis tools and libraries that will be needed to interpret that data.”

ASCAC had been charged by DOE and NNSA to review the “conceptual design for the Exascale Computing Initiative” and to deliver a report by September. Sub-committee members included: Reed; Martin Berzins, University of Utah; Bob Lucas, Livermore Software Technology Corporation; Satoshi Matsuoka, Tokyo Institute of Technology; Rob Pennington, University of Illinois, retired; Vivek Sarkar, Rice University; and Valerie Taylor, Texas A&M University.

The ECI’s goal is to deploy capable exascale computing systems by 2023. This is defined as a hundred-fold increase in sustained performance over today’s computing capabilities, enabling applications to address next-generation science, engineering, and data problems to advance Department of Energy (DOE) Office of Science and National Nuclear Security Administration (NNSA) missions.

The plan includes three distinct components: Exascale Research, Development and Deployment (ExaRD); Exascale Application Development (ExaAD) to take full advantage of the emerging exascale hardware and software technologies from ExaRD; and Exascale Platform Deployment (ExaPD) to prepare for and acquire two or more exascale computers.

Given the many technical issues remaining, ECI mission adjustments are inevitable, said Reed. Establishing an external advisory board, coordinated by a single individual or a group, and leveraging other collaborations to help monitor and advise the project was strongly recommended. Reed also said, “On interagency and international collaboration, seek collaborations that don’t imperil the execution plan. This is not an open ended research project; it’s an outcome driven project.”

Included in the report was a restatement of the top ten exascale challenges as identified by DOE, shown here:

- Energy efficiency: Creating more energy-efficient circuit, power, and cooling technologies.

- Interconnect technology: Increasing the performance and energy efficiency of data movement.

- Memory technology: Integrating advanced memory technologies to improve both capacity and bandwidth.

- Scalable system software: Developing scalable system software that is power- and resilience-aware.

- Programming systems: Inventing new programming environments that express massive parallelism, data locality, and resilience.

- Data management: Creating data management software that can handle the volume, velocity and diversity of data that is anticipated.

- Exascale algorithms: Reformulating science problems and redesigning, or reinventing, their solution algorithms for exascale systems.

- Algorithms for discovery, design, and decision: Facilitating mathematical optimization and uncertainty quantification for exascale discovery, design, and decision making.

- Resilience and correctness: Ensuring correct scientific computation in the face of faults, reproducibility, and algorithm verification challenges.

- Scientific productivity: Increasing the productivity of computational scientists with new software engineering tools and environments.

The full ASCAC report is expected to be completed in August. Here is a link to the slides presented by Reed: http://science.energy.gov/~/media/ascr/ascac/pdf/meetings/20150727/Exascale_Computing_Initiative_Review.pdf