This week, during the lead-up to SC15, the OpenACC standards group announced several new developments, including the release and ratification of version 2.5 of the OpenACC API specification, member support for multiple new OpenACC targets, and other progress with the standard.

“The 2.5 specification addresses an essential challenge of profiling code where a few simple directives transform serial instructions and spread the work across thousands of cores,” said Duncan Poole, president of OpenACC-Standard.org and director of platform alliances, accelerated computing, at NVIDIA. “Using tools that support OpenACC, developers have an important lead in creating code that performs well across a variety of multi-core host devices and accelerators, including Titan, and the upcoming DOE Coral systems.”

OpenACC simplifies the programming of accelerated computing systems through the use of directives, which identify compute intensive code to a compiler for acceleration or offload, while preserving a single code base. The key aim of OpenACC is to enable performance portability across a growing number of HPC processor types, including GPUs, manycore coprocessors and multicore CPUs. In addition to being available from different compiler vendors, the standard is supported by an expanding range of debuggers, profilers and other programming tools.
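To make the directive idea concrete, here is a minimal sketch in C. The function name `saxpy` and the choice of data clauses are illustrative assumptions, not something taken from the briefing. A compiler without OpenACC support simply ignores the pragma and runs the loop serially, which is how the single code base is preserved.

```c
#include <assert.h>
#include <stddef.h>

/* Computes y[i] = a * x[i] + y[i] for i in [0, n).
 * The pragma asks an OpenACC compiler to parallelize the loop, copying x
 * to the device and y to the device and back. Compilers without OpenACC
 * support ignore the pragma and run the loop serially. */
void saxpy(size_t n, float a, const float *restrict x, float *restrict y)
{
    assert(x != NULL && y != NULL);

    #pragma acc parallel loop copyin(x[0:n]) copy(y[0:n])
    for (size_t i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}
```

The same source can be built for a GPU, a multicore CPU, or serially, depending only on the compiler and its flags.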

This year brought the addition of ARM CPU and x86 CPU code generation using OpenACC, noted Poole. The PGI compiler can now accelerate applications across multiple x86 cores, while the PathScale compiler now supports acceleration across the 48 cores of a Cavium ThunderX ARM processor. In 2016, the standards body and its partners will be focusing on OpenACC running on POWER with GPUs, ARM with GPUs and Xeon Phi. “Basically we’re fulfilling the mission that we set out,” said Poole, “which was to be able to build portable code and work with all of the relevant architectures that are either here or emerging.”

Poole also talked up the OpenACC Toolkit, which was introduced in July to give scientists and researchers the tools and documentation they need to be successful with OpenACC. “If you want to see some pickup by the academic and research community, you need a free, but robust compiler,” he said. “NVIDIA put together a combination of the PGI compiler coupled with some key profiling tools and other information that would help a new academic get started and created a toolkit that is free for academic and student use.”

This is one of the ways that OpenACC is extending its developer base. Adoption has grown to some 10,000+ OpenACC developers, a conservative estimate according to the team. Training courses are well-received too, with Cal Poly, for example, having added the OpenACC curriculum as a four-credit course. Further, around 1,850 participants have registered for the OpenACC online course and OpenACC Hackathons are over-subscribed.

“We’re seeing a mix of direct site enthusiasm coupled with support coming from labs and compiler developers,” Poole said.

Key codes being ported during the 2015 Hackathons span a variety of disciplines, including computational fluid dynamics (INCOM3D, HiPSTAR and Numeca), cosmology and astrophysics (CASTRO and MAESTRO), quantum chemistry (LSDALTON), computational physics (Nek-CEM) and many more.

At the NCSA Hackathon, a team successfully accelerated an advanced MRI reconstruction model using NVIDIA GPUs. The challenge for the team was to take serial code and get it running on Blue Waters. Naturally, performance took a dip at first, but speedups ensued as directives were added. In just a few days, the team managed to reduce reconstruction time for a single high-resolution MRI scan from 40 days to a couple of hours.

“Now that we’ve seen how easy it is to program the GPU using OpenACC and the PGI compiler, we’re looking forward to translating more of our projects,” said Brad Sutton, associate professor of bioengineering and technical director of the Biomedical Imaging Center at the University of Illinois at Urbana-Champaign. The implementation may even be suitable for powering clinical work, an exciting idea for the staff at Blue Waters.

OpenACC 2.5 and Beyond

Michael Wolfe, PGI compiler engineer and OpenACC technical committee chair, characterized the just-ratified 2.5 spec as somewhat of an interim release. The group has been working on both highly significant features and a number of minor ones, he said, and the aim of 2.5 was to release everything that could be completed by this deadline. Beyond making some clarifications and fixing some spelling errors, the new release adds the following features:

• Asynchronous Data Movement

• Queue Management Routines

• Kernel Construct Size Clauses

• Profile and Trace Interface

The biggest feature in OpenACC 2.5, according to Wolfe, is the final bullet point, the profile and trace interface, which will allow third-party tools vendors to tie into the OpenACC runtime so they can access and present performance-related information. The functionality was initially part of a prototype implementation in the PGI OpenACC compiler. Now, with some minor changes based on feedback from that effort, the standards body has added the capability to the specification with the expectation that it will start being supported this year. TU Dresden has been using the PGI interface for profiling work and will be running a demonstration in their booth (#1351) at SC next week.

The following major features, however, are still going to take some extra effort, said Wolfe:

• Deep Copy – Nested Dynamic Data Structures

+ Substantial User Feedback

+ Builds on 2014 Tech Report

• Exposed Memory Hierarchy Management

• Multiple Device Support

According to Wolfe, deep copy is the signature feature that OpenACC is pushing to have ready for the 3.0 release. He said users have been asking for this for years and there are current machines that could really benefit, such as Piz Daint and Titan. “It’s a way to handle nested dynamic data structures,” Wolfe explained. “If you have a large array and you’re going to compute this on a device like a GPU, you’d want to move the array over to the device. But what if the array is actually an array of struct and each element of that array is a struct that has a sub-member that’s another allocatable array and each one of those sub-members is a different size and you want to move this whole deep data structure and maybe each of those sub-members is an array that is another struct that has another allocatable sub-member? And yes, we’ve seen this at least three levels deep in real scientific applications, weather applications in particular.

“So we need a way to manage the data traffic between host and device for basically pointer following on these deep data structures. It’s the number one most-requested features we’ve had from users from the past couple of years and in particular at the Hackathons,” said Wolfe. “What we’ve been working on is a way to express this in a way that provides all the functionality that we need, so we capture the cases that we know about in a way that is general enough to capture those cases but simple enough that it’s relatively easy to express and to use.”
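For a sense of what users do by hand today, the sketch below moves a two-level structure with one data directive per inner array. The type `column_t` and the function `sum_columns` are hypothetical names invented for this example. Whether the device copy of each `vals` pointer ends up pointing at the device copy of the inner array is assumed here; that behavior depends on the compiler and the specification version, and it is exactly the kind of bookkeeping a deep copy feature would take over.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical nested type: each element owns a differently sized array. */
typedef struct {
    int len;
    double *vals;
} column_t;

/* Sums every value in every column. The outer array is copied first,
 * then each inner array, one directive per element. */
double sum_columns(column_t *cols, int ncols)
{
    assert(cols != NULL && ncols >= 0);
    double total = 0.0;

    #pragma acc enter data copyin(cols[0:ncols])
    for (int i = 0; i < ncols; ++i) {
        /* Assumption: the compiler attaches this device array to the
         * device copy of cols[i].vals. */
        #pragma acc enter data copyin(cols[i].vals[0:cols[i].len])
    }

    #pragma acc parallel loop present(cols[0:ncols]) reduction(+:total)
    for (int i = 0; i < ncols; ++i) {
        #pragma acc loop reduction(+:total)
        for (int j = 0; j < cols[i].len; ++j) {
            total += cols[i].vals[j];
        }
    }

    /* Release the inner arrays before the outer array that refers to them. */
    for (int i = 0; i < ncols; ++i) {
        #pragma acc exit data delete(cols[i].vals[0:cols[i].len])
    }
    #pragma acc exit data delete(cols[0:ncols])
    return total;
}
```

Every additional level of nesting adds another loop of directives on the way in and on the way out, which is why the hand-written approach scales poorly to the three-level structures Wolfe describes.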

Moving on to the next bullet point, Wolfe said OpenACC is getting ready for the exposed memory hierarchies associated with upcoming systems. “Think Knights Landing, Xeon Phi with near and far memory, or AMD high-bandwidth memory systems, or NVIDIA in the Pascal Volta timeframe where you’ve got a true unified address space but separate physical memories,” Wolfe said. “There is a need to manage data movement across what is basically an exposed memory hierarchy in a way that respects performance but is also as natural and portable as possible across all the different various systems.”

Another upcoming feature slated for a future release is improved multiple device support. While OpenACC already supports multiple devices, it’s not as convenient to use as people would like, said Wolfe. “We’ve had success with people doing multiple MPI ranks and multiple OpenMP threads and having each process, or each thread attach to a different device, and that works, but maybe there’s a better way to make it within a single thread use multiple devices,” Wolfe stated. “Compiling for an x86 multicore or an ARM multicore — if we treat that like a device and you have GPUs, now you have heterogeneous devices. Can we spread the work across all the devices counting the host multicore as a device in itself?”

“The challenge there is more about the data than it is about the compute,” he clarified. “It’s relatively easy to spread the computation across resources, it’s more of a challenge to make sure the data’s in the right place so we get the performance we want. That’s coming up in the next release or following releases.”
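The existing runtime routines already let a single thread address several devices in turn, which gives a feel for why the data is the harder part. The sketch below is one possible way to do that, not the future feature Wolfe describes: the function `double_all` and its chunking scheme are assumptions made for illustration, device numbers are assumed to start at zero, and the OpenACC runtime calls are guarded so the code still builds and runs serially without an OpenACC compiler.

```c
#include <assert.h>
#include <stddef.h>
#ifdef _OPENACC
#include <openacc.h>
#endif

/* Doubles a[0:n], giving each available device one contiguous chunk.
 * Without OpenACC the whole array is handled as a single chunk. */
void double_all(float *a, int n)
{
    assert(a != NULL && n >= 0);

    int ndev = 1;
#ifdef _OPENACC
    acc_device_t type = acc_get_device_type();
    ndev = acc_get_num_devices(type);
    if (ndev < 1) {
        ndev = 1;
    }
#endif
    int chunk = (n + ndev - 1) / ndev;  /* ceiling division */

    for (int d = 0; d < ndev; ++d) {
        int lo = d * chunk;
        int hi = (lo + chunk < n) ? lo + chunk : n;
        if (lo >= hi) {
            break;  /* nothing left to hand out */
        }
#ifdef _OPENACC
        /* Assumption: devices are numbered from 0 for this device type. */
        acc_set_device_num(d, type);
#endif
        /* Only this device's slice is moved; the programmer decides the split. */
        #pragma acc parallel loop copy(a[lo:hi-lo])
        for (int i = lo; i < hi; ++i) {
            a[i] *= 2.0f;
        }
    }
}
```

As written, the chunks run one after another because nothing is asynchronous, and the split is fixed by hand. Overlapping the devices and placing the data automatically is the convenience the committee is discussing.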

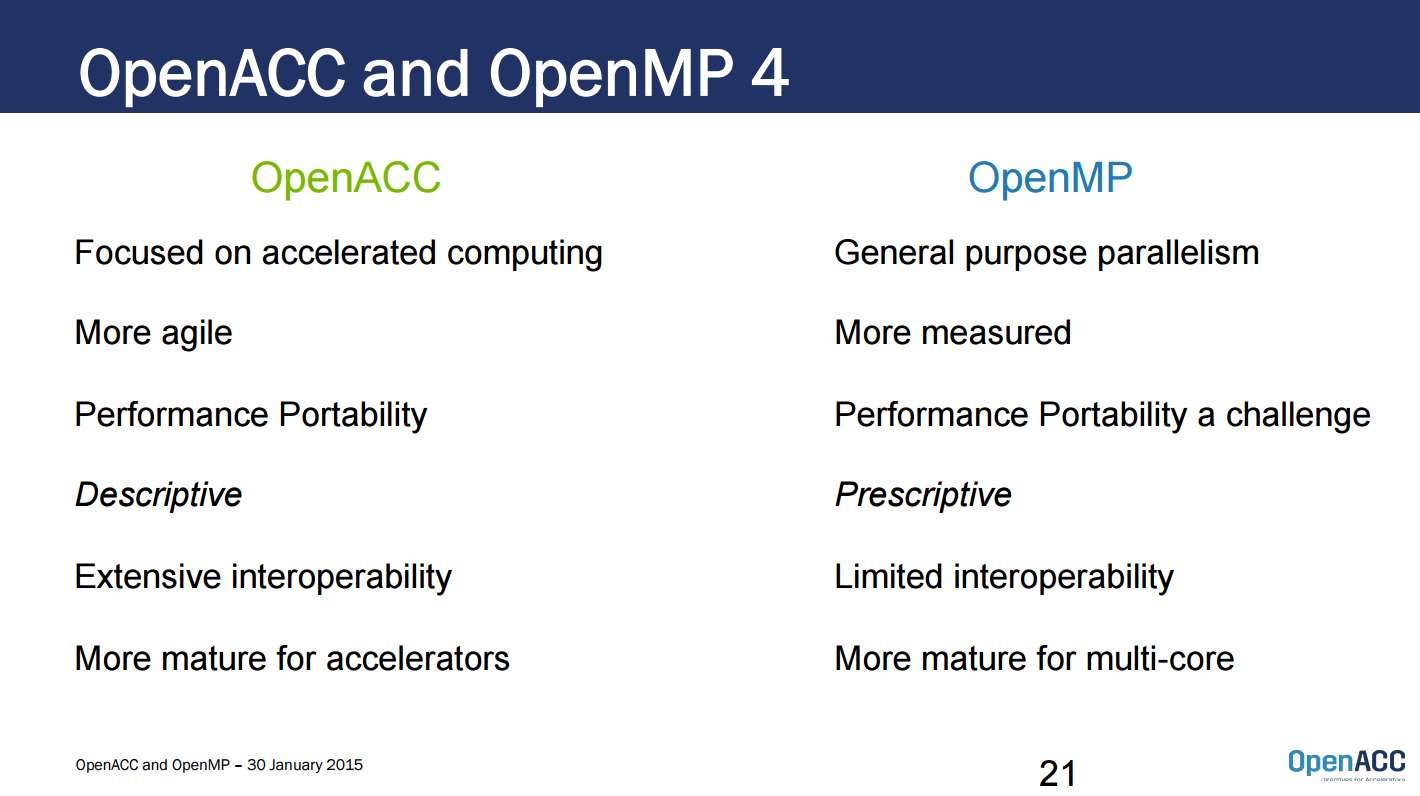

Comparisons to OpenMP

We also had a chance to discuss the relationship between OpenMP and OpenACC, and the potential for merging the two given that some members belong to both organizations. “If you think about the act of parallelizing your code, just figuring out where those directives should go, is the hard part, and there is overlap in terms of location and placement of these directives,” said Poole. “Because of the cross-over in membership, there’s some value in having developers getting real-world experience, giving their feedback and having real production compilers implement a standard. I think all of that is goodness flowing back into OpenMP. In some ways OpenACC may be the best thing that ever happened to OpenMP in terms of giving that real feedback ahead of time.”

“From a technical perspective, there are certainly ways that OpenMP, MPI, OpenACC, even CUDA can interoperate, so these are not insurmountable challenges; it doesn’t have to be only a single way of programming as I would call the Highlander approach,” he added.

We’ll be diving deeper into this topic in a future piece, but as a teaser, here is a slide that was shared during the briefing:

For those of you headed to Austin for SC15 next week, OpenACC members will be participating in a number of presentations, talks and discussions; more info is available here.