Perhaps the most eye-popping numbers in IDC’s HPC market report, presented yesterday at its annual SC15 breakfast, were ROI figures IDC has been developing as part of a DOE grant. The latest results indicate even higher ROI than the pilot study showed: on average, according to the latest data, $514.7 in revenue is returned for every dollar invested in HPC. But first, let’s dig into the overall market numbers.

After slumping in 2014, the worldwide HPC server market is poised to return to growth, IDC reported. A stronger-than-expected first half of 2015, said Earl Joseph, IDC’s Program Vice President for High-Performance Computing (HPC) and Executive Director of the HPC User Forum, forced an upward revision of its earlier forecast. IDC now projects the market to finish 2015 at $11.4B, up nearly 12 percent from 2014’s $10.2B.

Except for supercomputers, which are in a cyclical, ‘bumpy’ market, IDC sees broad strength throughout the HPC market. Storage is again the fastest-growing segment. The total 2015 HPC market (see figures below) is forecast to reach $22.1B. Prominent trends cited by IDC include: purchases of top-10 systems have slowed for about two years; the IBM/Lenovo deal delayed many purchases; and the first half of 2015 was up more than 12%.

Key points from the IDC report:

- Growing recognition of HPC’s strategic value is helping to drive high-end sales. Low-end buyers are back in growth mode.

- HPC vendor market share positions will shift greatly in 2015.

- Recognition of HPC’s strategic and economic value will drive the exascale race, with 100PF systems arriving in 2H 2015 and more in 2016. Exascale systems in the 20-30MW range will not arrive until 2022-2024.

- The HPDA market will continue to expand opportunities for vendors.

- Non-x86 processors and non-CPU devices could alter the landscape: Power, ARM, and others; coprocessors, GPUs, and FPGAs.

- China looms larger: Lenovo, a growing domestic market, and export intentions. Other Chinese vendors are planning to expand into Europe.

- The growing influence of the data center in the IT food chain will affect HPC technology options, perhaps providing new approaches.

- HPC in the cloud is gaining traction; the big questions are how much and how soon.

Pain points, of course, remain. Software is the number one roadblock: better management software is needed, parallel software is lacking for most users, and many applications will need a major redesign to run in HPC environments. Clusters also remain hard to use and manage. Power, cooling, and floor space are major issues. There is still a lack of support for heterogeneous environments and accelerators. Storage and data management are becoming new bottlenecks.

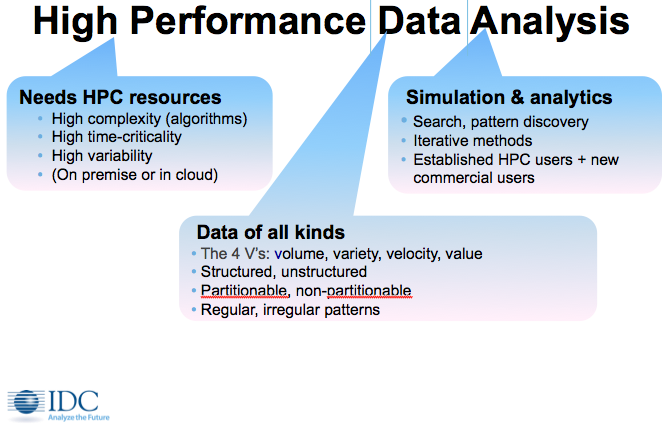

The ongoing collision of big data with HPC continues to force changes in the way IDC defines and monitors this emerging market. A while back, IDC coined the term High Performance Data Analysis (HPDA). Joseph noted that the convergence is “creating new solutions and adding many new users/buyers to the HPC space.” The finance sector, for example, grew faster than “what we [were] reporting over the last two years (by ~50% higher).”

Identifying the most appropriate buckets within the HPDA category has been an ongoing exercise. Currently, IDC singles out four HPDA segments:

- Fraud and anomaly detection – This “horizontal” workload segment centers on identifying harmful or potentially harmful patterns and causes using graph analysis, semantic analysis, or other high performance analytics techniques.

- Marketing – This segment covers the use of HPDA to promote products or services, typically using complex algorithms to discern potential customers’ demographics, buying preferences and habits.

- Business intelligence – This workload segment uses HPDA to identify opportunities to advance the market position and competitiveness of businesses, by better understanding themselves, their competitors, and the evolving dynamics of the markets they participate in.

- Other Commercial HPDA – This catchall segment includes all commercial HPDA workloads other than the three just described. Over time, IDC expects some of these workloads to become significant enough to split out, e.g., the use of HPDA to manage large IT infrastructures and Internet-of-Things (IoT) infrastructures.

The next new HPDA segment, said Joseph, will be precision medicine. One example is what’s called outcomes-based medical diagnosis and treatment planning. In this paradigm, patients’ histories and symptomology are stored in a database. While a patient is still in the office, physicians could sift through millions of archived patient records for relevant outcomes. The care provider considers the efficacy of various treatments for “similar” patients but is not bound by the findings. In effect, this is potentially a powerful decision-support tool.
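
The article does not say how such a system would find “similar” patients. One common approach is nearest-neighbor search over patient feature vectors, sketched below in Python under the assumption that each archived record has already been reduced to a numeric feature tuple, a treatment label, and a yes/no outcome. `Record`, `similar_patients`, and `treatment_success_rates` are hypothetical names, and Euclidean distance is an illustrative choice, not a clinical standard.

```python
import heapq
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One archived patient record (hypothetical, features pre-normalized)."""
    features: tuple[float, ...]  # e.g. scaled age, lab values, symptom scores
    treatment: str
    good_outcome: bool


def similar_patients(query: tuple[float, ...], records: list[Record], k: int) -> list[Record]:
    """Return the k records closest to the query patient (Euclidean distance)."""
    # nsmallest keeps only k candidates instead of sorting the whole archive.
    return heapq.nsmallest(k, records, key=lambda r: math.dist(query, r.features))


def treatment_success_rates(query: tuple[float, ...], records: list[Record], k: int = 50) -> dict[str, float]:
    """Share of good outcomes per treatment among the k most similar patients."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # treatment -> [good, total]
    for r in similar_patients(query, records, k):
        counts[r.treatment][1] += 1
        if r.good_outcome:
            counts[r.treatment][0] += 1
    return {t: good / total for t, (good, total) in counts.items()}
```

The result is advisory in the same sense as the paradigm above: it summarizes what happened to similar patients without prescribing anything. At the scale of millions of records, a real deployment might replace the linear scan with an indexed similarity search.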

Public and private payer organizations have long promoted the development of similar evidence-based medicine approaches in which whole populations of patients’ records could be examined and evaluated to determine which drugs and therapies are worthwhile and should be approved. In theory, the result in both instances would be better outcomes and reduction of costly outlier practices.

There is too much material to review IDC’s full report comprehensively. For example, cloud-based HPC is on the rise, both in adoption (from 13.8% of sites in 2011, to 23.5% in 2013, to 34.1% in 2015) and in the number of workloads being run. It is worth looking at a bit more of the ROI data. IDC used three approaches: ROI based on revenues generated (similar to GDP) divided by HPC investment; ROI based on profits generated (or costs saved) divided by HPC investment; and ROI based on jobs created.
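
The three ROI measures are simple ratios. The Python sketch below shows them side by side for a single project. The field names, the per-$1M normalization for jobs, and the two averaging helpers are assumptions for illustration; the article does not say whether IDC’s “on average” figure is a mean of per-project ratios or a pooled ratio of totals, and the two can differ considerably.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HpcProject:
    """One HPC investment and its reported outcomes (hypothetical fields)."""
    investment_usd: float          # HPC spending attributed to the project
    revenue_usd: float             # revenue generated (the GDP-like measure)
    profit_or_savings_usd: float   # profits generated or costs saved
    jobs_created: int


def _check(p: HpcProject) -> None:
    if p.investment_usd <= 0:
        raise ValueError("investment must be positive")


def revenue_roi(p: HpcProject) -> float:
    """Approach 1: dollars of revenue per dollar of HPC investment."""
    _check(p)
    return p.revenue_usd / p.investment_usd


def profit_roi(p: HpcProject) -> float:
    """Approach 2: dollars of profit (or cost savings) per dollar invested."""
    _check(p)
    return p.profit_or_savings_usd / p.investment_usd


def jobs_per_million(p: HpcProject) -> float:
    """Approach 3: jobs created per $1M of HPC investment (normalization assumed)."""
    _check(p)
    return p.jobs_created / (p.investment_usd / 1_000_000)


def mean_revenue_roi(projects: list[HpcProject]) -> float:
    """Unweighted mean of per-project ratios: every project counts equally."""
    return sum(revenue_roi(p) for p in projects) / len(projects)


def pooled_revenue_roi(projects: list[HpcProject]) -> float:
    """Total revenue over total investment: large projects dominate."""
    total_investment = sum(p.investment_usd for p in projects)
    return sum(p.revenue_usd for p in projects) / total_investment
```

For a made-up project, `HpcProject(2_000_000, 1_029_400_000, 50_000_000, 30)` yields a revenue ROI of 514.7, a profit ROI of 25.0, and 15 jobs per $1M invested. These inputs are invented to match the headline ratio and are not IDC data.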

At the breakfast, IDC also announced the ninth round of recipients of the HPC Innovation Excellence Award at the SC15 supercomputer industry conference in Austin, Texas. This year, winners come from around the globe: US-based Argonne National Laboratory, the Korea Institute of Science and Technology (ROK), and a recent start-up, Sardinia Systems from Estonia.

The HPC Innovation Excellence Award recognizes noteworthy achievements by users of high performance computing (HPC) technologies. The program’s main goals are to showcase return on investment (ROI) and scientific success stories involving HPC; to help other users better understand the benefits of adopting HPC and justify HPC investments, especially for small and medium-size businesses (SMBs); to demonstrate the value of HPC to funding bodies and politicians; and to expand public support for increased HPC investments.

“IDC research has shown that HPC can accelerate innovation cycles greatly and in many cases can generate ROI. The award program aims to collect a large set of success stories across many research disciplines, industries, and application areas,” said Bob Sorensen, Research Vice President, High Performance Computing, at IDC. “The winners achieved clear success in applying HPC to greatly improve business ROI, scientific advancement, and/or engineering successes. Many of the achievements also directly benefit society.”

The new award winners are:

- Argonne National Laboratory (US) developed ACCOLADES, a scalable workflow management tool that enables automotive design engineers to exploit task parallelism on large-scale computing resources (e.g., GPGPUs, multicore architectures, or the cloud). By harnessing these resources, a developer can concurrently simulate the drive cycles of thousands of vehicles in the wall-clock time it normally takes to complete a single empirical dyno test. According to experts from a leading automotive manufacturer, ACCOLADES used in conjunction with dyno tests can greatly accelerate the test procedure, yielding overall savings of $500K-$1M during the R&D phase of an engine design/development. Lead: Shashi Aithal and Stefan Wild

- Korea Institute of Science and Technology (ROK) runs a modeling and simulation program that offers Korean SMEs the opportunity to develop high-quality products using supercomputers. Through open calls, the project selects about 40 SME engineering projects every year and provides technical assistance, access to the supercomputers, and appropriate modeling and simulation software technology such as CFD and FEA. Since 2004, the project has assisted about 420 SMEs. It recently assisted in the development of a slow juicer made by NUC Corp., improving the juice extraction rate from 75% to 82.5% through numerical shape optimization of a screw using the “Tachyon II” supercomputer and fluid/structural analysis. As a result, sales increased dramatically, from about $1.9 million in 2010 to $96 million in 2014, and the company has hired 150 new employees as it built additional manufacturing lines. Lead: Jaesung Kim

- Sardinia Systems (Tallinn, Estonia) developed a technology that automates HPC operations in large-scale cloud data centers, such as collecting utilization metrics, driving scalable aggregation and consolidation of data, and matching resource demand to resource availability. The product, FishDirector, couples high performance parallel data aggregation and consolidation with high performance solvers that continuously solve for the optimal layout of virtual machines (VMs) across an entire compute facility. The solvers take into account costs such as VM movement/migration and constraints on the placement of certain VMs, driving higher overall server utilization and lower energy consumption. The firm states that it has demonstrated raising utilization from 20 percent to over 60 percent at one government facility by optimizing the performance of over 150,000 VMs. Lead: Kenneth Tan
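
FishDirector’s solvers are not described beyond the summary above. The core idea of packing VMs onto fewer hosts to raise utilization can be illustrated as a bin-packing problem; the Python sketch below uses the classic first-fit-decreasing heuristic, which is a deliberately simple stand-in, not Sardinia Systems’ method. It ignores migration costs and placement constraints, and the `VM` type, CPU-only demand, and host capacity are hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VM:
    """A virtual machine and its CPU demand (hypothetical units: cores)."""
    name: str
    cpu: float


def consolidate(vms: list[VM], host_capacity: float) -> dict[str, int]:
    """First-fit decreasing: place the largest VMs first, each on the first
    already-open host with room, opening a new host only when none fits.
    Returns a mapping of VM name -> host index."""
    free: list[float] = []          # remaining capacity of each open host
    placement: dict[str, int] = {}
    for vm in sorted(vms, key=lambda v: v.cpu, reverse=True):
        if vm.cpu > host_capacity:
            raise ValueError(f"{vm.name} does not fit on any host")
        for i, room in enumerate(free):
            if vm.cpu <= room:
                free[i] -= vm.cpu
                placement[vm.name] = i
                break
        else:  # no open host had room: power on another one
            free.append(host_capacity - vm.cpu)
            placement[vm.name] = len(free) - 1
    return placement


def utilization(vms: list[VM], placement: dict[str, int], host_capacity: float) -> float:
    """Average CPU utilization across the hosts that actually run VMs."""
    hosts_in_use = len(set(placement.values()))
    return sum(vm.cpu for vm in vms) / (hosts_in_use * host_capacity)
```

With five VMs needing 6, 5, 4, 3, and 2 cores on 10-core hosts, running one VM per host gives 40% utilization, while the packed layout fits them on two hosts. First-fit decreasing is fast but carries no general optimality guarantee, which is one reason production systems turn to more sophisticated solvers that can also weigh migration costs.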

The next round of HPC Innovation Excellence Award winners will be announced at ISC16 in June 2016.

http://www.hpcwire.com/2015-supercomputing-conference/