In Tuesday night’s TOP500 session, list co-creator and co-author Erich Strohmaier brought perspective to what could at first appear to be a land grab of unprecedented scale by China, when he shared that many of these new entrants were mid-lifecycle systems that were just now being benchmarked. But what is likely to be even more revealing is his reanalysis of what the TOP500 says about the apparent health of Moore’s law. Could Intel be right about this after all? And that’s not the only common wisdom that got trounced. Accelerator growth also came under scrutiny. Let’s dive in.

Joined onstage by his co-authors Horst Simon, Jack Dongarra and Martin Meuer, as well as HLRS research scientist Vladimir Marjanovic, who would also present, Dr. Strohmaier, head of the Future Technologies Group at Berkeley Lab, began with a review of the top ten, which, taken as a set, is the most mature crop of elite iron in the list's history. There were two new entrants to that group, both Crays: the first part of the Trinity installation for Los Alamos and Sandia national laboratories, with 8.1 petaflops LINPACK; and Hazel Hen, installed at HLRS in Germany, the most powerful PRACE machine, with 5.6 petaflops LINPACK at number eight.

The biggest change on this November's list was the number of systems from China: 109 installed systems, up from 37 in July. That cements China's position at number two in system share behind the United States, which managed a list share of only 40 percent, down from its typical 50 to 60 percent.

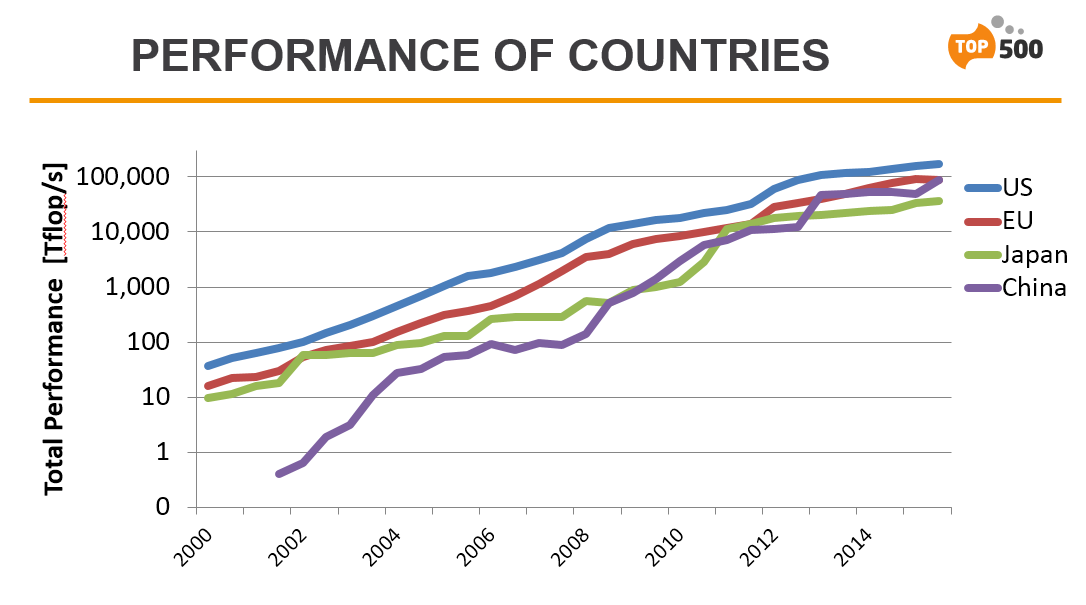

But what Strohmaier said he prefers to look at, more than pure system share, is the aggregate installed performance of each region's systems. Because it weights every system by its size, this metric filters out the effect that a large number of small systems can have on a simple count.

"If you look instead at the development of installed performance over time, you see that over the last ten years China has had a tremendous increase in terms of installed performance," Strohmaier remarked. "It is just ahead of Japan now, clearly the second most important geographic region in terms of installed capability, but it's not nearly as close to the US [as when looking at number of systems]."

Going one step further, the list author clarified that the systems installed in China are actually on the small side, with the exception of its flagship, Tianhe-2, the 33.86 petaflops supercomputer developed by China's National University of Defense Technology, which has held the number one spot for six consecutive lists.

“China has had a tremendous run in the last decade,” Strohmaier observed, “and it’s continuing, but it’s not as dramatic as a simple system count would suggest.”
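The gap between counting systems and summing their performance is easy to reproduce. The sketch below uses a handful of made-up entries (hypothetical countries' Rmax values, not real TOP500 data) to show how a region with many small systems can lead on system share while trailing on performance share.

```python
from collections import defaultdict

# Hypothetical list entries: (country, Rmax in petaflops). Not real TOP500 data.
entries = [
    ("US", 25.0), ("US", 15.0), ("US", 8.0),
    ("China", 33.0), ("China", 0.3), ("China", 0.3), ("China", 0.3), ("China", 0.3),
    ("Japan", 10.0), ("Japan", 2.0),
]

def shares(entries):
    """Return {country: (system_share, performance_share)} as fractions of the list."""
    counts = defaultdict(int)
    perf = defaultdict(float)
    for country, rmax in entries:
        counts[country] += 1
        perf[country] += rmax
    n = len(entries)
    total = sum(perf.values())
    return {c: (counts[c] / n, perf[c] / total) for c in counts}

for country, (sys_share, perf_share) in sorted(shares(entries).items()):
    print(f"{country:6s} systems {sys_share:6.1%}  performance {perf_share:6.1%}")
```

In this toy list "China" holds half the systems but only about a third of the aggregate performance, which is the kind of distortion Strohmaier's performance-based view is meant to remove.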

By number of systems, HP is clearly dominant. Cray is number two, and third is Sugon, the surprise company on the list. Sugon has 49 systems on the latest TOP500 list, a 9.8 percent system share. As the TOP500 list co-author discussed during the TOP500 BoF, Sugon's story looks a little different from a performance perspective. The company captured over 21 petaflops, a 5 percent performance share, which puts it in seventh place, below Cray (25 percent), IBM (15 percent), HP (13 percent), NUDT (9 percent), SGI (7 percent), and Fujitsu (also rounded to 5 percent, but with a slightly higher 22 petaflops).

Strohmaier went on to make the point that Sugon is new to the TOP500 and had to learn how to run the LINPACK benchmark and submit to the list. The company went from 5 systems on the previous list to 49; since one of its earlier systems fell off, that means 45 systems were added.
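The net-addition arithmetic above can be written out as a tiny helper. The function name is hypothetical; the inputs are the Sugon figures given in the text.

```python
def systems_added(previous_count, current_count, dropped_off):
    """Entries added between two lists, given how many earlier entries dropped off."""
    retained = previous_count - dropped_off  # earlier systems still on the list
    return current_count - retained          # everything else is new to the list

# Sugon, as reported above: 5 systems before, 49 now, one dropped off.
print(systems_added(previous_count=5, current_count=49, dropped_off=1))
```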

"Sugon really took the effort, and the energy and the work, and ran the benchmark on all their installations, regardless of how well or badly they performed, and gave us the number," said Strohmaier. "They went to great lengths to figure out where they are in terms of supercomputing, in terms of what the systems can do and in terms of where they'll be in the statistics."

He gave credit to the company and to the individuals within it who made this possible. "Sugon is now number three, while before it had very little list presence," he added.

The kicker here, however, is that these are not new systems, which really would be an extraordinary feat if they were. “Many of the additions are … two to three years old, which had never been measured or submitted until now,” Strohmaier clarified.

The list also reflects the shake-up from IBM's sale of its x86 server business to Lenovo, which leaves a rather confusing four-way division across the following vendor categories: IBM, Lenovo, Lenovo/IBM and IBM/Lenovo. These "artifacts" will disappear over time, but for now this arrangement, worked out between the vendors and their customers, dilutes the original IBM and Lenovo categories.

Lenovo is, of course, a Chinese company, with a mix of systems it built and sold as well as former IBM systems it now holds title to. Then there is Inspur, another Chinese vendor, with 15 systems. In all, three Chinese companies are now prominent in the TOP500 and account for the influx of Chinese systems, said Strohmaier.

He went on to compare each vendor's share of total list performance with its share of systems, which shows that "HP traditionally installs small systems, Cray installs large systems, and then there is Sugon, which is an exception, because they have smaller systems, thus their share of performance is much smaller." IBM, which is closest to Sugon in system share with 45 systems (mostly leftover Blue Genes), has a large share in terms of performance because it kept custody of the large Blue Gene systems. Inspur and Lenovo both have a performance share below their system share, while Fujitsu and NUDT have much larger performance shares, reflecting their flagship systems, the K computer and Tianhe-2 respectively.
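One way to read the comparison Strohmaier describes is as a ratio of performance share to system share: above 1, a vendor's systems are larger than the list average; below 1, smaller. This is a minimal sketch of that reading, with a hypothetical helper name, applied to the Sugon percentages reported earlier in the article.

```python
def size_ratio(perf_share, system_share):
    """Performance share divided by system share.
    > 1: the vendor's systems are larger than the list average; < 1: smaller."""
    return perf_share / system_share

# Sugon's figures as reported above: about 5% of performance, 9.8% of systems.
sugon = size_ratio(perf_share=0.05, system_share=0.098)
print(f"Sugon: {sugon:.2f}")
```

A ratio near 0.5 says Sugon's average system delivers roughly half the performance of the average system on the list, which matches the "smaller systems" description.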

Switching back to the list in general, Strohmaier addressed the low turnover of the last couple of years. Before 2008 the average system age was 1.27 years; now it is a tick below three years, marginally better than on the June list. The TOP500 author attributed this to the boost from Sugon's submissions and from the IBM-Lenovo transfer. "Customers keep their systems longer than they used to; this has not changed other than that small upturn [which can be explained]."
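Average system age is just the mean of each entry's age at the list's publication date. The sketch below uses hypothetical installation dates (not list data) to illustrate a point implicit in the Sugon story: systems that are two to three years old are not new, yet they can still pull the average down when the list's average is already close to three years.

```python
from datetime import date

def average_age_years(install_dates, list_date):
    """Mean age, in years, of the systems on a list published on list_date."""
    days = [(list_date - d).days for d in install_dates]
    return sum(days) / len(days) / 365.25

list_date = date(2015, 11, 1)  # hypothetical publication date
# Hypothetical existing entries, averaging roughly three years old.
baseline = [date(2011, 11, 1), date(2012, 11, 1), date(2013, 11, 1)]
# Hypothetical two-and-a-half-year-old systems benchmarked for the first time.
late_submissions = [date(2013, 5, 1), date(2013, 5, 1)]

print(f"before: {average_age_years(baseline, list_date):.2f} years")
print(f"after:  {average_age_years(baseline + late_submissions, list_date):.2f} years")
```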

Moore’s law is fine!

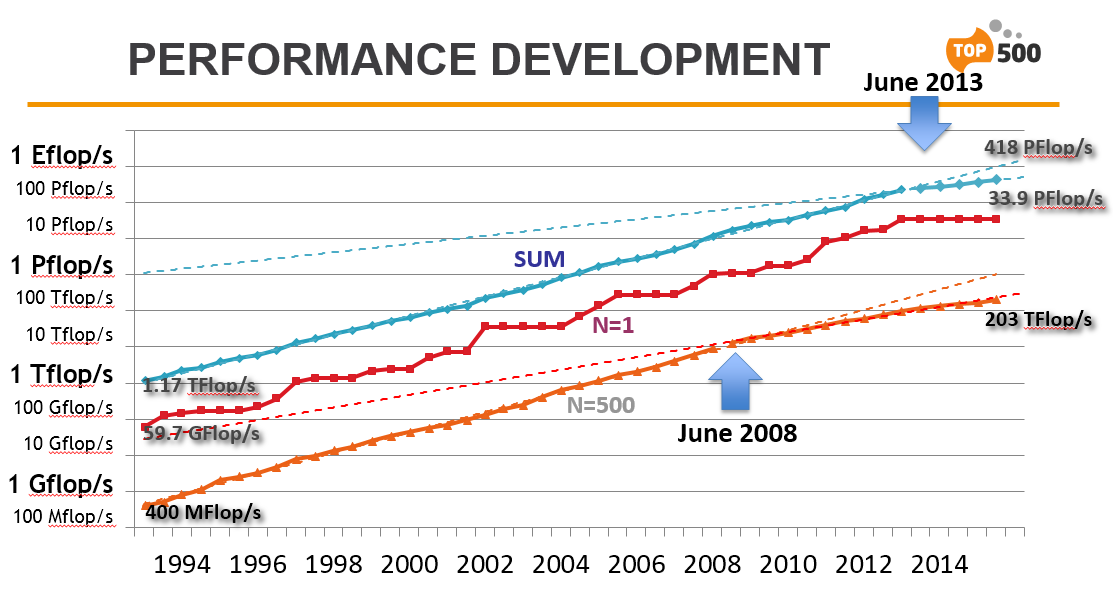

The classic slide from each list shows how performance grows over time, tracking the performance of the number one system, the number 500 system and the sum of all 500 systems, which Strohmaier thinks of as "500 times the average."

Growth has been impressive, and for many years the trend lines predicted future growth very accurately. In the last few years, however, inflection points have appeared, one around 2008 and another around 2014, after which the trajectory flattens.

This raises two important questions, says Strohmaier: why is there an inflection point at all, and why does it appear at two different times?

"The nice thing is that the old growth rates before the inflection points were the same on both lines, and the new growth rates are again the same on both lines. So the one effect is clearly technology; the other, in my opinion, is financial," noted Strohmaier.

The slide that projects both trends to the end of the decade, using the growth rates from before and after the inflection point, shows that a seemingly small difference in annual growth rate compounds into a 10X gap by the end of the decade.
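The compounding behind that 10X gap can be made explicit: if two projections start at the same value and grow by annual factors r_old and r_new, their ratio after n years is (r_old / r_new)^n. The growth factors below are hypothetical round numbers chosen for illustration, not the values fitted on Strohmaier's slide.

```python
import math

def divergence(old_rate, new_rate, years):
    """Ratio between two projections that start equal and grow at the
    annual factors old_rate and new_rate for the given number of years."""
    return (old_rate / new_rate) ** years

def years_to_gap(old_rate, new_rate, gap):
    """Years until the two projections differ by the factor gap."""
    return math.log(gap) / math.log(old_rate / new_rate)

# Hypothetical annual growth factors, not the slide's fitted values.
old, new = 1.9, 1.55
print(f"{divergence(old, new, 10):.1f}x apart after 10 years")
print(f"{years_to_gap(old, new, 10):.1f} years to reach a 10x gap")
```

With these assumed rates, a difference that looks modest on a per-year basis opens a 10X gap in a little over a decade.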

"So instead of having an exascale computer by 2019, as we may have predicted ten years ago, we now think it's going to be more like the middle of the next decade," Strohmaier stated.
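The sensitivity of the exascale date to the growth rate follows from the same exponential model: starting from P0 petaflops in year Y0 and growing by a factor r per year, 1,000 petaflops is reached in year Y0 + ln(1000 / P0) / ln(r). The sketch starts from Tianhe-2's 33.86 petaflops, as reported in the article; the two growth factors are hypothetical and only meant to show how far the date moves.

```python
import math

def exaflop_year(start_year, start_pflops, annual_growth, target_pflops=1000.0):
    """Year in which a steady exponential trend reaches target_pflops."""
    return start_year + math.log(target_pflops / start_pflops) / math.log(annual_growth)

# Starting point from the article: Tianhe-2 at 33.86 petaflops in 2015.
# The growth factors are hypothetical, chosen only to show the sensitivity.
for growth in (2.0, 1.4):
    year = exaflop_year(2015, 33.86, growth)
    print(f"growth {growth}x/year -> exaflop around {year:.0f}")
```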

"Looking into what actually happened, we have to be more careful in how we construct the basis for our statistics," he continued. "The TOP500 is an inventory-based list; new and old technology are all mixed up. If you really want to see the changes in technology on the list, you have to apply filters: filter out all the new systems with new technology coming into the list and analyze that subset."

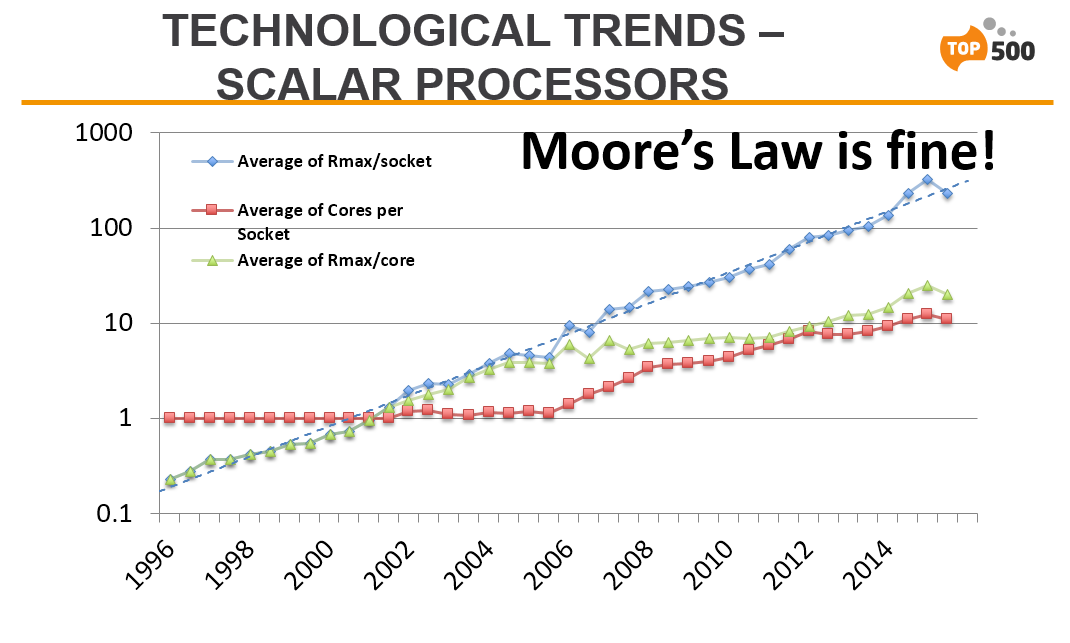

Strohmaier selected all the new systems and then, within that subset, kept only the systems that use traditional superscalar processors, so no Nvidia GPUs and no Intel Xeon Phis. The point of this exercise was to isolate the trajectory of traditional processor technology.
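The two-stage filter can be sketched over a simple record type. The field names and the way "new" is detected (a system's first list edition equals the current edition) are assumptions for illustration; they are not the TOP500's actual data schema or Strohmaier's exact procedure.

```python
from dataclasses import dataclass

@dataclass
class Entry:
    # Hypothetical record layout; field names are illustrative, not the TOP500 schema.
    name: str
    list_edition: str      # edition this row belongs to, e.g. "2015-11"
    first_appearance: str  # edition in which the system first appeared
    accelerator: str       # "" for CPU-only (superscalar) systems

def new_scalar_systems(entries):
    """Keep systems appearing for the first time that use no accelerator."""
    return [e for e in entries
            if e.first_appearance == e.list_edition and not e.accelerator]

entries = [
    Entry("sys-a", "2015-11", "2015-11", ""),
    Entry("sys-b", "2015-11", "2015-11", "NVIDIA GPU"),
    Entry("sys-c", "2015-11", "2014-06", ""),
    Entry("sys-d", "2015-11", "2015-11", "Intel Xeon Phi"),
]
print([e.name for e in new_scalar_systems(entries)])
```

Repeating this selection for every list edition gives a time series of freshly installed CPU-only systems, which is the kind of sample the curves that follow are drawn from.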

This is the result:

Strohmaier:

What you see is that the performance per core has taken a dramatic hit around 2005-2006, but it was compensated by our ability to put more and more cores on a single chip, which is the red curve, and if you multiply that out, as performance per socket, per actual chip, you get the blue curve, which is actually pretty much Moore's law. So what you see in this sample is that there is no clear indication that anything is wrong with Moore's law.
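The decomposition Strohmaier describes is a product: performance per socket equals performance per core times cores per socket, so slower per-core gains can be offset by more cores. The sketch below uses hypothetical averages for two points six years apart and converts each growth ratio into an implied doubling time.

```python
import math

def per_socket(per_core_gflops, cores_per_socket):
    """Performance per socket is per-core performance times cores per socket."""
    return per_core_gflops * cores_per_socket

def doubling_time(v0, v1, years):
    """Doubling time in years implied by growth from v0 to v1 over `years`."""
    return years * math.log(2) / math.log(v1 / v0)

# Hypothetical averages six years apart: per-core gains slow, core counts rise.
early = per_socket(per_core_gflops=10.0, cores_per_socket=2)
late = per_socket(per_core_gflops=16.0, cores_per_socket=10)
print(f"per-core doubling time:   {doubling_time(10.0, 16.0, 6):.1f} years")
print(f"per-socket doubling time: {doubling_time(early, late, 6):.1f} years")
```

With these assumed numbers, per-core performance doubles only every eight to nine years, while per-socket performance doubles every two years, roughly the Moore's law pace.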

So what caused the slowdown in the performance curve?

The other thing is that over the decades we put more and more components into our very large systems. I tried to approximate that by looking at the number of chip sockets per system in the scalar-processor sample, which is what you see on the red curve. While the average performance per socket follows Moore's law, the red line does not follow a clear exponential growth rate after about 2005-2006.

At that point, we seem to run out of steam, and out of money, in our ability to put more components into the very large systems, and the very large systems are no longer growing in overall size as they did before. That is my interpretation of the data: that is why we have an inflection, and why the overall performance growth in the TOP500 has dropped from its previous levels.

Right now supercomputing grows with Moore's law, just as it did when supercomputing began, and no longer at the faster rate we had seen before.

So it's clearly a technological reason, but it's not a reason at the chip level; it's actually a reason at the facility and system level that is most likely related to power or money or both.
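Strohmaier's explanation can be expressed as two multiplied growth factors: total system performance grows by the per-socket (Moore's law) factor times the growth in the number of sockets per system. The factors below are hypothetical; the point is only that when socket counts stop growing, system growth falls back to the per-socket rate.

```python
def system_growth(per_socket_growth, socket_count_growth):
    """Annual growth of total system performance: per-socket growth
    (the Moore's law component) times growth in the number of sockets."""
    return per_socket_growth * socket_count_growth

# Hypothetical factors: about 1.41x per socket per year (doubling every two years).
moore = 2 ** 0.5
before = system_growth(moore, socket_count_growth=1.35)  # systems still getting bigger
after = system_growth(moore, socket_count_growth=1.0)    # socket counts flat
print(f"before: {before:.2f}x/year, after: {after:.2f}x/year")
```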

Accelerator stagnation

Strohmaier, who has been one of the more ardent defenders of the benefits of LINPACK as a unified benchmark, went on to explore accelerator trends, acknowledging that they are responsible for a considerable share of petaflops. “But if you look at what fraction of the overall list those accelerators contribute,” he went on, “and if you focus on the last two years, their share has actually stagnated if not fallen.”

"That means there is a hurdle linked to market penetration: those accelerators have not been able to penetrate markets beyond scientific computing. They have not gotten into the mainstream of HPC computing," he added.
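Stagnation here refers to a share, not an absolute number: accelerated systems can keep adding petaflops while their fraction of the list stays flat if the rest of the list grows just as fast. A minimal sketch with hypothetical list data:

```python
def accelerated_fraction(entries):
    """Fraction of a list's aggregate Rmax contributed by accelerated systems.
    entries: iterable of (rmax, has_accelerator) pairs."""
    total = sum(rmax for rmax, _ in entries)
    accel = sum(rmax for rmax, has_acc in entries if has_acc)
    return accel / total

# Hypothetical lists: accelerated Rmax rises, but so does everything else.
lists = {
    "year 1": [(10.0, True), (30.0, False)],
    "year 2": [(14.0, True), (42.0, False)],
}
for edition, entries in lists.items():
    print(edition, f"{accelerated_fraction(entries):.0%}")
```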

Power efficiency was another metric covered in the BoF. The average power efficiency of the top ten systems has followed an uneven course, but it is trending upward. The highest power efficiency on the list is much better, however. These power winners tend either to use accelerators or to be Blue Gene/Q systems, which are engineered for power efficiency.

The chart above shows the standouts for highest power efficiency, with new machines highlighted in yellow. TSUBAME KFC, installed at the Tokyo Institute of Technology and upgraded from NVIDIA K20Xs to K80s for the latest benchmarking, came in first. What surprised Strohmaier were the number two and three machines in terms of power efficiency: Sugon and Inspur, respectively. And once again, every system on this list ranked by megaflops-per-watt has an accelerator. (More on the greenest systems will be forthcoming in a future piece.)
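The megaflops-per-watt figure used to rank these systems is LINPACK Rmax divided by the power drawn during the run. The sketch below does the unit conversion for a hypothetical system; the numbers are illustrative and do not belong to any machine named above.

```python
def mflops_per_watt(rmax_tflops, power_kw):
    """Energy efficiency: LINPACK Rmax divided by power drawn during the run."""
    mflops = rmax_tflops * 1e6  # 1 TFLOPS = 1e6 MFLOPS
    watts = power_kw * 1e3      # 1 kW = 1e3 W
    return mflops / watts

# Hypothetical system: 500 TFLOPS Rmax drawing 100 kW.
print(f"{mflops_per_watt(500.0, 100.0):.0f} MFLOPS/W")
```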

The final slide presented by Dr. Strohmaier plots the best application performance achieved by the Gordon Bell Prize, which is awarded each year at SC, against the TOP500 to show their correlation. Since these are different applications running on potentially different systems, close tracking between the two trends over time could be taken to suggest that LINPACK is still a useful reflection of real-world performance. This is something to dive deeper into another time, but for now, here is that slide: