Intel® Scalable System Framework, an advanced approach for developing scalable, balanced and efficient HPC systems, is paving the path to Exascale by incorporating continually advancing processor, memory, storage, fabric and software technologies into a common, extensible platform.

The Intel Scalable System Framework (Intel® SSF) is a direction for HPC systems that are not only balanced, power-efficient, and reliable, but are also able to run a wide range of workloads. The approach is intended to converge the system capabilities needed to support HPC, Big Data, machine learning, and visualization workloads, and enable HPC in the cloud. This should help increase the availability of HPC resources to more users, which in turn can create greater opportunities for discovery and insight.

From a hardware perspective, Intel SSF is designed to eliminate the traditional performance bottlenecks: the so-called power, memory, storage, and I/O walls that system designers, builders, and users have been fighting for years. The new direction addresses the compute, memory/storage, fabric/communications, and software challenges of developing and deploying HPC systems. It does so more holistically through a three-pronged approach: 1) breakthrough technologies, 2) a new architectural approach to integrating these technologies, and 3) a versatile and cohesive set of HPC system software stacks that can extract the full potential of the system.

Solving HPC Challenges

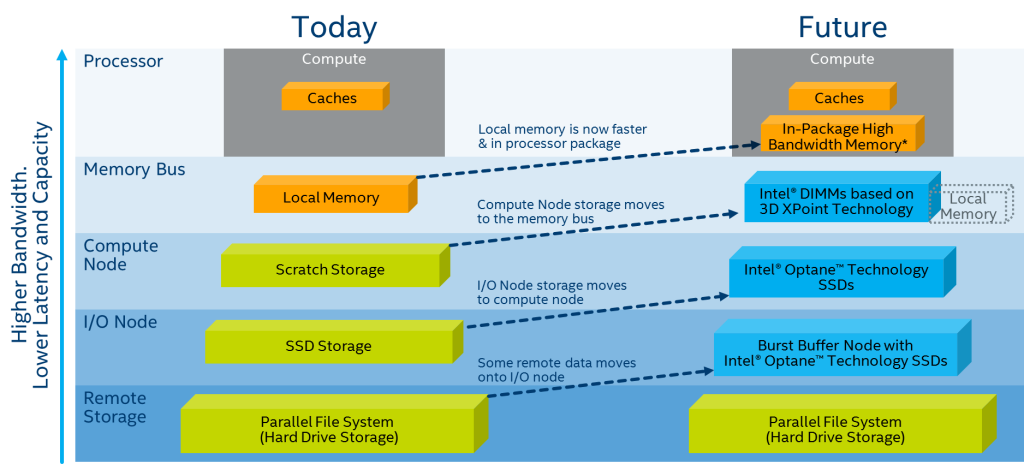

Intel SSF will combine new technologies, such as Intel® Omni-Path Architecture (Intel® OPA), with existing compute and storage products, including Intel® Xeon® processors, Intel® Xeon Phi™ processors, and Intel® Solutions for Lustre* software. Other innovative technologies, such as Intel® Silicon Photonics and Intel® Optane™ technology SSDs based on 3D XPoint™ technology, will continue to improve performance.

The new direction, however, goes beyond individual technologies designed to overcome the barriers mentioned above. Intel is tightly integrating the technologies at both the component and system levels to create a highly efficient and capable infrastructure. One outcome of this level of integration is that it scales across both the node and the system. In effect, it raises the center of gravity of the memory pyramid and widens it, which will enable faster and more efficient data movement.

Enabling HPC Opportunities through a Software Community

While Intel is creating breakthrough hardware for HPC, it is also helping the software community take advantage of HPC more easily. Intel has joined with more than 30 other companies to found the OpenHPC Collaborative Project (www.openhpc.community), a community-led organization focused on developing a comprehensive and cohesive open source HPC software stack. Anyone who has worked with a team to build out an HPC system knows that integrating the individual components and validating an aggregate HPC software stack is a huge challenge. It is a major logistical effort to maintain the multitude of codes, stay abreast of rapid release cycles, and address user requests for compatibility with additional software packages. With many users and system makers creating and maintaining their own stacks, there is a huge amount of duplicated work and related inefficiency across the ecosystem. OpenHPC intends to help minimize these issues and improve efficiency across the ecosystem.

An Exascale Foundation

While there’s still much more to be done in supercomputing to reach the exaflops era (or even 180 petaflops, the goal for the Aurora system), Intel’s approach to integration, new fabric, storage, and software under the umbrella of Intel SSF will help form the foundation for an Exascale computing architecture.