The avalanche of data being produced by experimental instruments and sensors isn’t just a big (data) problem – it’s perhaps the biggest bottleneck in science today. What’s needed, say the distinguished authors of a new white paper from the Computing Community Consortium, is a community-wide agenda to develop cognitive computing tools that can perform scientific activities, many of which are now mostly the domain of human scientists.

The just-released white paper, Accelerating Science: A Computing Research Agenda, is a deep dive into the computational tools and research focus needed to speed the scientific community’s ability to turn big data into meaningful scientific insight and activity. The authors contend that innovation in cognitive tools will “leverage and extend the reach of human intellect, and partner with humans on a broad range of tasks in scientific discovery.”

The authors include Vasant Honavar (director, Center for Big Data Analytics and Discovery Informatics, and associate director, Institute for Cyberscience, Pennsylvania State University), Mark Hill (Amdahl professor and chair, Department of Computer Sciences, University of Wisconsin, Madison), and Katherine Yelick (professor, Computer Science Division, University of California at Berkeley, and associate laboratory director of Computing Sciences, Lawrence Berkeley National Laboratory).

“My sense is that there are parts of the scientific community that are crying out for help. Cognitive tools are intended to work with human scientists, amplifying and augmenting their intellect, as part of collaborative human-machine systems. It is clear that we need such tools to realize the full potential of advances in measurement (and hence big data), data processing and simulation (HPC) to advance science,” said Honavar.

“But getting there from where we are is not easy,” he continued. “The computational abstractions and cognitive tools will need to be developed through close collaboration between disciplinary scientists and computer scientists. For both groups, this means getting out of their comfort zones and understanding enough about the other discipline to develop a common lingua franca to communicate with each other. It requires training [a] new generation of scientists at the interface between computer and information sciences and other disciplines (e.g., life sciences; health sciences; environmental sciences).”

He notes there is already evidence that such efforts are paying off, such as the NSF IGERT program.

Conceptual tools such as mathematics, as well as sophisticated instruments such as mass spectrometers and particle accelerators, have always played transformative roles in science. Computing has been likewise transformative. Yet the development of powerful cognitive computing could push computing into a deeper role, working in areas commonly tackled by scientists’ intuition and qualitative judgment. Given the crush of data, this approach could accelerate science. At least that’s the idea.

Cognitive computing in its many guises is, of course, already a hot topic. Just this week IARPA issued an RFI seeking training data sets to advance artificial intelligence (see article in HPCwire). Within industry, deep learning, machine learning, and cognitive computing are all making headlines. IBM Watson is one of the more public embodiments. These technologies are already transforming, or on the cusp of transforming, many industry and daily activities – think autonomous cars, for example.

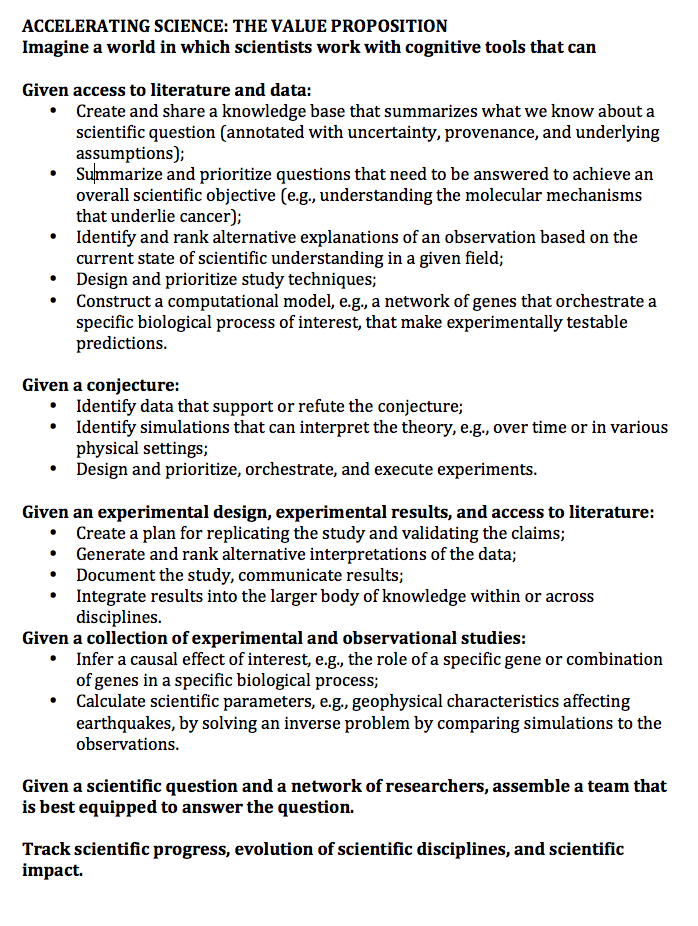

Turning cognitive computing into ‘smart assistants’ and ‘smart colleagues’ for human scientists is perhaps a natural step and a necessary paradigm change to deal with the proliferation of vast amounts of data, suggest the authors. It will also open up new modes for actually doing science. Consider the expansive, optimistic value proposition set out by the authors below:

Broadly speaking, the CCC paper’s proposed agenda has two elements: 1) development, analysis, integration, sharing, and simulation of algorithmic or information processing abstractions of natural processes, coupled with formal methods and tools for their analyses and simulation; and 2) innovations in cognitive tools that augment and extend human intellect and partner with humans in all aspects of science. Much more is spelled out in the white paper and is best absorbed directly from it.

Honavar noted: “The goal of the WP is to point out some of the current bottlenecks to scientific progress that computing research could help address and have an impact. While there are ongoing research efforts on a number of topics in relevant areas, there are also important gaps that need to be addressed. We tried not to be particularly prescriptive about how the ideas could be implemented.”

He said there is a need for fundamental research in multiple areas of computer science, for infrastructure efforts aimed at demonstrating proof of concept in the context of specific scientific disciplines or sub-disciplines, and for collaborative research that involves computing researchers and disciplinary scientists. Agencies such as NSF, NIH, and DOD could help move things along by initiating new programs or reorienting existing ones, he contends.

“What is ‘reasonable’ in terms of advancing the agenda depends on what aspects of the agenda we are talking about. Aspects of the agenda that require basic research in computing are perhaps best conducted by investigator initiated projects like the ones that NSF funds. Infrastructure efforts or mission-focused projects are probably better suited for large-scale collaborations that involve research groups, national labs, and perhaps other partners. The efforts could draw on existing initiatives e.g., the National Big Data Initiative that one could draw on. It is important to engage both basic research focused agencies such as NSF as well as mission focused agencies such as NIH and DOE,” said Honavar.

One major effort to advance computing is the National Strategic Computing Initiative (NSCI), whose funding became clearer in the recent Obama budget (see HPCwire article, Budget Request Reveals New Elements of US Exascale Program). NSCI has a broad mission to energize HPC and that includes orchestrating the drive to exascale machines, harmonizing infrastructure to handle big data analytics and traditional simulation and modeling, and facilitating HPC adoption by industry.

“Clearly, investments in high performance computing (broadly conceived) are necessary for accelerating science. But it is not sufficient. High performance computing won’t help you if you don’t have algorithmic abstractions of natural processes that lend themselves to analysis and simulation using powerful computers. Hence we emphasize in the WP the need for research on algorithmic or information processing abstractions of natural processes, coupled with formal methods and tools for their analyses and simulation; and cognitive tools that augment and extend human intellect and partner with humans in all aspects of science,” said Honavar.

“I feel that the research agenda we have sketched out nicely complements the obviously important efforts on advancing high performance computing. More importantly it will help maximize the impact of advances in big data and high performance computing on science broadly, dramatically accelerating science, enabling new modes of discovery, all of which are critical for addressing key national and global priorities, [such as] health, education, food, energy, and environment.”

Marshalling attention and resources remains a challenge. Honavar agreed it’s hard to predict firm timelines for progress, and noted that there are already many examples of cognitive tools being used successfully in the conduct of science. Still, he said he expects to see a significant impact in a matter of decades. “Obviously, there will be faster progress in some disciplines where the seeds for such transformation have already been sown – e.g., computational biology, learning sciences,” he observed.

“Any time we use tools, there is a risk of misusing tools or using tools that are inappropriate for the task at hand, or using tools whose workings we don’t understand, etc. Cognitive tools in the service of science are no exception. We can minimize these risks by building better tools, e.g., machine learning algorithms that produce models that are communicable to scientists, and testable through experiments; and by better education in the design and use of tools.”