The South African Center for High Performance Computing’s (SA-CHPC) Ninth Annual National Meeting was held Nov. 30 – Dec. 4, 2015, at the Council for Scientific and Industrial Research (CSIR) International Convention Center in Pretoria, SA. The award-winning venue was the perfect location to host what has become a popular industry, regional and educational showcase.

Exascale in the 2020s

With the Square Kilometer Array (SKA) being built in the Great Karoo region, implications for SA and the HPC industry have captured the attention of a broad range of stakeholders. SKA will be the world’s biggest radio telescope, and the most ambitious technology project ever funded. SKA has an expected 50-year lifespan; Phase One construction is scheduled to begin in 2018, with early science and data generation to follow by 2020.

With only a few years in which to prepare for SKA, it’s not surprising that the CHPC conference has begun to feature in-depth data science and network infrastructure content, in addition to the meeting’s traditional HPC tutorials, workshops, plenaries, and student programs. Once again, the Southern African Development Community (SADC) HPC and Industry Forums were co-located with the CHPC meeting. An increased number of conference attendees from data science and high-speed network occupations added diversity in terms of gender, discipline and nationalities represented. All things considered, the annual CHPC meeting provides a wealth of learning opportunities, and brings professional networking to an emerging region of the HPC world.

Just how resource-intensive will SKA be?

It’s safe to say that SKA will set the “Big Data” curve. In his plenary address, Peter Braam (SKA Cambridge University, Parallel Scientific) described the range of instrumentation that will support the project, and how a combination of dedicated and cloud-enabled resources will fulfill its varied and complex missions. By 2020, SKA’s central signal processing array, which will be 50 times more sensitive than any current radio instrument, will generate 1 exabyte per day, rising to 100 exabytes per day by 2028. Its imaging function will produce 400 terabytes daily for worldwide consumption by 2020, and 10,000 petabytes per day by 2028. The project will require 300 petaflops of processing power (archiving 1 exabyte) by 2020, and 10 times more by 2028.
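To put the daily volumes quoted above in perspective, they can be converted into the sustained transfer rate needed to move that much data in 24 hours. The following is a minimal Python sketch, not part of Braam’s talk; it assumes decimal (SI) units, a perfectly even flow over the day, and a hypothetical helper name, `sustained_rate_bytes_per_s`.

```python
# Convert the daily data volumes quoted in the article into the average
# sustained rate needed to move them. Assumes decimal (SI) units and a
# perfectly even flow across 24 hours; both are simplifying assumptions.

SECONDS_PER_DAY = 24 * 60 * 60
UNIT_BYTES = {"TB": 10**12, "PB": 10**15, "EB": 10**18}


def sustained_rate_bytes_per_s(amount, unit):
    """Average bytes per second needed to move `amount` `unit` in one day."""
    return amount * UNIT_BYTES[unit] / SECONDS_PER_DAY


# Figures as quoted in the article: (amount per day, unit).
quoted_volumes = {
    "signal processing, 2020": (1, "EB"),
    "signal processing, 2028": (100, "EB"),
    "imaging, 2020": (400, "TB"),
    "imaging, 2028": (10_000, "PB"),
}

for label, (amount, unit) in quoted_volumes.items():
    rate = sustained_rate_bytes_per_s(amount, unit)
    print(f"{label}: {rate / 10**12:,.3f} TB/s")
```

Under these assumptions, 1 exabyte per day works out to roughly 11.6 terabytes per second, sustained around the clock.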

Rudolph Pienaar (Boston Children’s Hospital, Harvard) noted that even before SKA data enters the infostream, general network traffic will explode by 2019 as a larger percentage of the population gains access to networks, with 30 percent more networked devices per capita and many more applications that are increasingly resource-intensive. Global IP traffic will reach 1.1 zettabytes per year in 2016, or 88.4 exabytes per month (one exabyte is one billion gigabytes). By 2019, global IP traffic will reach 2.0 zettabytes per year, or 168 exabytes per month.
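The annual and monthly traffic figures can be cross-checked with a simple unit conversion. This is a minimal Python sketch, assuming decimal units (1 zettabyte = 1,000 exabytes) and twelve equal months; the function names are illustrative only.

```python
# Cross-check the quoted traffic figures by converting between
# zettabytes per year and exabytes per month.
# Assumes 1 ZB = 1,000 EB (decimal units) and twelve equal months.

EB_PER_ZB = 1000
MONTHS_PER_YEAR = 12


def zb_per_year_to_eb_per_month(zb_per_year):
    """Average monthly traffic in exabytes for a given annual total in zettabytes."""
    return zb_per_year * EB_PER_ZB / MONTHS_PER_YEAR


def eb_per_month_to_zb_per_year(eb_per_month):
    """Annual traffic in zettabytes implied by a monthly figure in exabytes."""
    return eb_per_month * MONTHS_PER_YEAR / EB_PER_ZB


# (year, quoted ZB per year, quoted EB per month), as given in the article.
for year, zb_year, eb_month in [(2016, 1.1, 88.4), (2019, 2.0, 168.0)]:
    print(
        f"{year}: {zb_year} ZB/yr is about {zb_per_year_to_eb_per_month(zb_year):.1f} EB/month; "
        f"{eb_month} EB/month is about {eb_per_month_to_zb_per_year(eb_month):.2f} ZB/yr"
    )
```

The monthly figures imply annual totals of about 1.06 and 2.02 zettabytes, so the quoted pairs agree once the annual values are rounded to one decimal place.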

Most of the Karoo region and surrounding areas currently lack high-speed networks and a reliable electrical supply. However, with ten wealthier countries and more than 100 organizations behind the project, SA expects to have the support it will need to host SKA. Many of SADC’s 15 member states will also benefit from an improved power grid and access to high-speed networks, along with slipstream advantages such as workforce development opportunities and access to tools that support international research engagement.

CHPC National Meeting

The 2015 meeting was called to order by General Chair Janse Van Rensburg (CHPC), who introduced Director General Phil Mjwara (SA Dept. of Science & Technology). Dr. Mjwara emphasized the vital role HPC will play in South Africa’s future. “A greater investment in cyberinfrastructure (CI) and supportive human capital development are essential for good governance, sustainability and commerce,” he said.

Mjwara shared 2015 highlights, including a milestone partnership between CHPC and SANReN. The agreement, reached in April 2015, allows CHPC to engage with 155 tier-2 sites around the world that access the Large Hadron Collider at CERN in Geneva, Switzerland.

CHPC Director Happy Sithole described how their Cape Town center has grown since launching in 2007 when it supported 15 researchers with 2.5 teraflops of computational power. “That was a good start for South Africa, and CHPC was the only center on the continent at the time,” said Sithole. Since then, CHPC has expanded to meet demands. At the time of the 2015 conference, CHPC supported 700 researchers with 7,000 cores and 64 teraflops. “But, we could be fully subscribed with our three largest research projects,” he added.

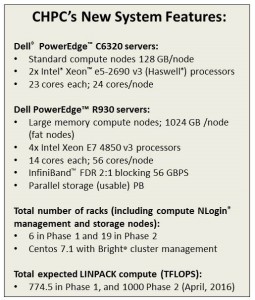

Sithole was pleased to announce the addition of a new Dell system that will be operational in early 2016. It will launch in two phases: the first is expected to achieve 700 teraflops, and the second will add 300 teraflops with GPU acceleration. “This brings a total of one petaflops of computational power to the region,” he added. Thirty percent of the system’s cycles will be available for lease by CSIR industry partners.

The student poster and cluster competition awards were presented Thursday evening following a plenary address by Thomas Sterling (Indiana University). Sterling is executive associate director and chief scientist at the Center for Research in Extreme Scale Technologies (CREST) and is best known for his pioneering work in commodity clusters as “the father of Beowulf.” He noted the accelerated progress being made by CHPC and pioneer projects in the region, and credited Sithole for blazing the trail on behalf of industry, science and education.

“The new CHPC Dell and Mellanox system will provide world-class compute capability to prepare the emerging southern African region for exascale computing in the next decade,” said Sterling.

Plenary Highlights

The opening plenary address by Merle Giles (National Center for Supercomputing Applications, U.S.) was titled “HPC-Enabled Innovation and Transformational Science & Engineering: The Role of CI.” As the leader of NCSA’s private sector program, Giles explained HPC’s macro- and microeconomic impact: federal investment defines the macroeconomic impact, while the microeconomic impact comes from universities and industry.

Giles described the “R&D Valley of Death,” or the funding gap that exists between the theoretical and basic research led by universities (or startups), and the production-commercialization phase effectuated by industry. “Somewhere in the middle, before optimization and ‘robustification,’ great ideas tend to crash and burn for lack of funding,” he said. “When this happens, it adversely affects how the public perceives science and technology spending, in general,” he added. A greater emphasis on investment and tech transfer will help bridge this gap.

Giles said President Obama initiated an important discussion in January 2015, during his State of the Union address. By explaining how precision medicine and the curing of common diseases are enabled by fast computers and data science, Obama demystified the concept so that average citizens could understand why HPC is important to them. He then announced the nation’s strategic computing initiative in August. “Through this steady dialogue, Obama is laying the groundwork for future support of a greater public investment in advanced CI,” Giles said.

He added that all sectors (public, private and industry) must share the responsibility for preparing the workforce. “And, by the way, coding is no longer the new literacy; modeling and the ability to master data are more important as HPC becomes more accessible, and the long tail of science engages a broader range of practitioners,” he added. He concluded with facts from an International Data Corporation study that found for every dollar invested in HPC, $515 in revenue and $43 of profit are generated. Feasibility should be addressed, but it’s often overlooked. No single company, agency or government can fund everything. A sustainable program requires collaboration and a holistic approach to spending.

Peter Braam (SKA Cambridge University, Parallel Scientific) presented “HPC Stack—Scientific Computing Meets Cloud.” Braam explained how cloud-augmented cluster services satisfy a greater spectrum of administrative and production applications than earth-bound HPC can on its own. By provisioning clusters with containers, or cloud-enabled virtual machines (VMs), it’s possible to facilitate core services, such as identity management, storage, scheduling, and monitoring. “We discovered there are generally two groups of users: people performing operations, and developers who are experimenting in pursuit of discovery. To support the latter, we realized it’s more feasible to deploy 100 small virtual cloud-enabled environments than 100 HPC systems.” Cambridge, CHPC and Canonical Ltd are pursuing this study.

Thursday’s plenary was delivered by CODATA Executive Director Simon Hodson, and was titled “Mobilizing the data revolution: CODATA’s work on data policy, data science and capacity building.”

Hodson suggested that a 2012 report by The Royal Society titled “Science as an open enterprise” made a profound impact on the industry when it stated that data underpinning research findings must be openly available. “Transparency, credibility and reproducibility are the keys to gaining public confidence; data must therefore be accessible, assessable, intelligible, usable, and reusable,” he said.

The European Union’s Horizon 2020 project included, word-for-word, excerpts from the report that suggested principal investigators are guilty of scientific misconduct if their data aren’t accessible. Most European and U.S. agencies now require data generated by research they fund to be liberated, and many have issued policies regarding open research data. Leading industry journals and digital repositories have also adopted the practice, including Dryad, GenBank and TreeBASE.

Hodson addressed data citation best practices, and financing strategies to support sustainable data repositories. He presented the findings of a joint declaration of data citation principles by the CODATA-ICSTI Task Group on Data Citation Standards and Practices and FORCE11 (Future of eScience Communications and Scholarship). “Make data available in a usable way, so they can be recognized and properly credited,” he said, referring to a CODATA paper published in the Journal of Data Science titled “Out of Cite, Out of Mind: The Current State of Practice, Policy, and Technology for the Citation of Data.”

Hodson announced a series of 2016-17 Data Science Summer Schools that are co-sponsored by CODATA and the Research Data Alliance (RDA). The two-week programs will cover software and data carpentry, data stewardship, curation, visualization, and more. CODATA-RDA earmarked $60,000 for travel funding for registrants who lack institutional support. The first will be held in Trieste, Italy on August 1-12, 2016. The International Center for Theoretical Physics (ICTP) will support up to 120 students, and 30,000 Euros are allocated for student travel. Students from low and middle-income countries (LMICs) will be given priority, and additional sponsors are welcome.

Health data concerns were addressed by Rudolph Pienaar (Boston Children’s Hospital) with his presentation titled “Web 2.0 and beyond: Leveraging Web Technologies as Middleware in Healthcare and High Performance Compute Clusters; Data, Apps, Results, Sharing and Collaboration.”

Pienaar argued that even today, most clinical applications have 1990s-vintage interfaces, and patient medical data is housed in locked-down silos that don’t facilitate cross-comparison. From an integration perspective, medical data is dead on arrival: the only central point common to all medical data in a hospital is the billing department. It is largely impossible for physicians or researchers to make meaningful comparisons of data housed in different silos. The system was designed to facilitate billing, and to protect patient privacy, but it impedes research and diagnostics. Pienaar believes that the current infrastructure is not at all well suited to handle the amount and complexity of data that is expected in the next five years.

That’s when ChRIS was born. The Boston Children’s Hospital Research Integration System (ChRIS) is a novel browser-based data storage and data processing workflow manager. Developed using JavaScript and Web 2.0 technologies, ChRIS anonymizes personal information, and includes a plug-in to accommodate clusters of collaborators that could, potentially, be located around the world. By employing a local virtual machine on the desktop, ChRIS hides the complexity of data scheduling on an HPC system by keeping everything local. The system also uses Google real-time API services to enable true real-time image sharing and collaboration. Multiple parties can interact with the same medical visual scene, each in their own browser, and all views and slices are updated in real time, using a design approach that is not screen sharing but is more akin to how multiplayer 3D gaming works.

SADC HPC Forum

The SADC HPC Forum has been meeting since 2013, when the University of Texas (U.S.) donated a decommissioned HPC system called “Ranger” to CHPC. More than 20 Ranger racks were divided into several mini-Rangers that were placed in eight centers in five SADC states. Each mini-Ranger forms a footprint for human capital development (HCD), and the initiative has launched an important public-private dialogue that SADC hopes will inspire support for future expansion.

Ms. Mmampei Chaba (Dept. of Science & Technology, SA) welcomed everyone on behalf of the host country, and Ms. Anneline Morgan (SADC Secretariat) introduced Forum Chair Tshiamo Motshegwa (U-Botswana).

Forum delegates and international advisers further refined the SADC collaborative framework document that last year’s forum attendees had begun to draft, and a final version will be presented to the SADC Ministry. There were several newcomers: delegates who hadn’t attended in the past, and representatives from countries that were entirely new to the forum, including Mauritius, Namibia and Seychelles. All were eager to know how their countries could contribute to and engage with the shared CI.

Everyone was eager to discuss best practices relating to data sharing across national borders, an uncomfortable concept for those who haven’t managed research data. The international advisers explained that the ability to facilitate the secure, seamless and reliable transfer of data among SADC sites, and beyond, is crucial to the project’s success. To that end, following the meeting, Motshegwa began to plan a cybersecurity and data conference scheduled for mid-April 2016 in Gaborone, Botswana. U.S. and European cybersecurity experts are invited to participate. They will share roughly 50 years of collective experience with the facilitation of secure data across peered networks, international computational systems and interfederated CIs.

SADC HPC Forum delegate questions frame future discussions and HCD programming. While technology training has been the focus, each site is encouraged to add education, outreach, communication, and external relations skills to their teams. By building teams that include a combination of technical and soft skills, they will be better prepared to support and sustain their CI programs for the future.

Presentations and videos from the awards evening are available on the CHPC website.

2016 CHPC National Meeting

Please join us in East London, South Africa for the 2016 CHPC National Meeting and SADC HPC Forum, Dec. 5-9, 2016.

Plan to spend extra time while visiting this beautiful region, and be sure to pack your hiking boots! It will be summer in South Africa, and you’ll find some of the best hiking, bird watching, fishing, swimming, and horseback riding in the world. East London is South Africa’s only river port city. The nearby Wild Coast region has miles of unspoiled, white beaches framed by thick forests that give way to steep, rocky cliffs. The area is steeped in Xhosa tradition, and Xhosa farms are scattered along the coast. It’s sparsely populated, and it’s likely you’ll meet more cows than people. With a favorable international currency exchange rate, you’ll only be limited by your energy and time.

About the Author

Elizabeth Leake is the president and founder of STEM-Trek Nonprofit, a global, grassroots organization that supports scholarly travel for science, technology, engineering, and mathematics scholars from underrepresented groups and regions. Since 2011, she has worked as a communications consultant for a variety of education and research organizations, and served as correspondent for activities sponsored by the eXtreme Science and Engineering Discovery Environment (TeraGrid/XSEDE), the Partnership for Advanced Computing in Europe (DEISA/PRACE), the European Grid Infrastructure (EGEE/EGI), the South African Center for High Performance Computing (CHPC), the Southern African Development Community (SADC), and Sustainable Horizons, Inc.
