Last week at SC16, Intel revealed its product roadmap for equipping its processors with the key capabilities needed to take artificial intelligence (AI) to the next level. It also used its Intel AI Day event in San Francisco on November 17 as a platform to reveal the code names of two projects that will result in new silicon rolling out in 2017 and beyond.

The chip giant says it will test its first AI-specific hardware, code-named “Lake Crest,” in the first half of 2017, with limited availability later in the year. Lake Crest will be optimized for neural network workloads and will feature “unprecedented compute density with a high-bandwidth interconnect.”

Intel also announced a second new product, code-named “Knights Crest,” that will integrate its Xeon processors with the technology it obtained through its August acquisition of Nervana Systems. Note that despite the “Knights” branding, this part bears no resemblance to the Xeon Phi line.

Diane Bryant, executive vice president and general manager of the Data Center Group at Intel, says the Intel Nervana platforms will “produce breakthrough performance and dramatic reductions in the time to train complex neural networks.” She predicted that Intel would deliver a 100x increase in deep learning training performance.

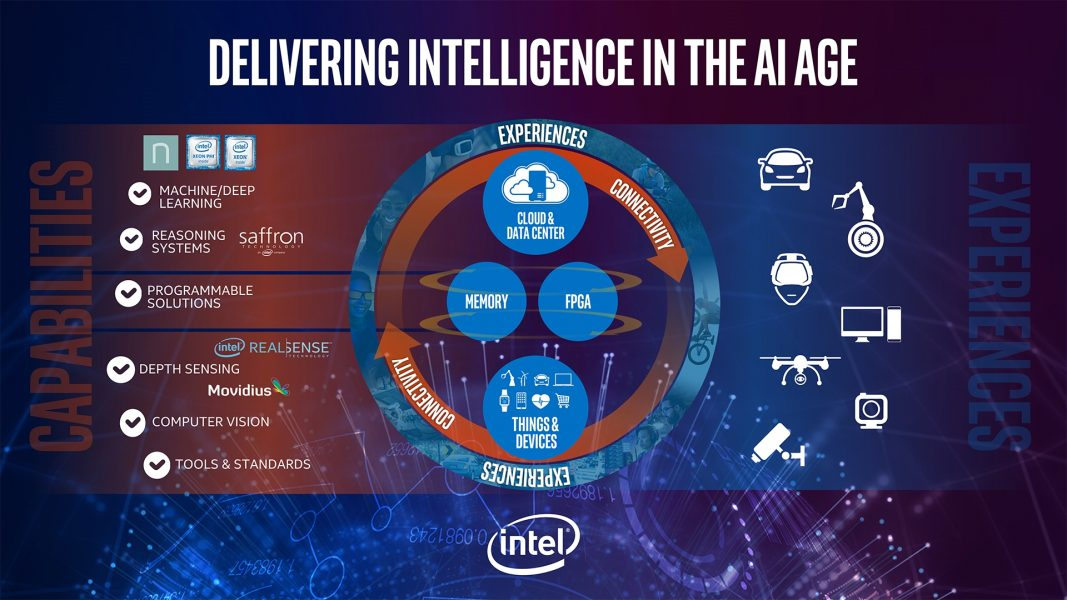

Intel has been moving strongly into deep learning through several key acquisitions, including Nervana; Movidius, a developer of low-power machine vision technology that it bought in September; and Saffron Technology, a developer of a “natural learning” solution that has been profiled in Datanami.

“Our goal is to compress the innovation cycle from conception to the deployment of increasingly intelligent, robust, and collaborative machines,” Intel CEO Brian Krzanich writes in a blog post today.

Beyond GPUs

AI is still in its early days, Krzanich writes, and the underlying hardware used to execute deep learning tasks is bound to change. “Some scientists have used GPGPUs [general-purpose graphics processing units] because they happen to have parallel processing units for graphics, which are opportunistically applied to deep learning,” he writes. “However, GPGPU architecture is not uniquely advantageous for AI, and as AI continues to evolve, both deep learning and machine learning will need highly scalable architectures.”

The $55-billion chip manufacturer also revealed details about other hardware initiatives, including the next generation of its Xeon Phi processors, code-named “Knights Mill,” which will offer mixed-precision support and deliver up to 4x better deep learning performance than the previous generation, according to Intel. Knights Mill chips will be available in 2017.

Intel also announced it’s giving select cloud service providers early access to the next generation of its Xeon processors, code-named “Skylake.” With support for AVX-512, the 512-bit extensions to the Advanced Vector Extensions (AVX) SIMD instruction set for x86, Skylake will deliver a “significant” processing boost for machine learning inference workloads, Intel says.

Patrick Moorhead, principal analyst at Moor Insights & Strategy, who attended the Intel AI Day event today, says Intel did what it needed to do to stay relevant in a fast-moving industry.

“Intel threw their formal AI strategy axe into the ocean, which was very important given the general tech industry sees GPUs as the current driver of AI compute,” Moorhead said via email. “If Intel can execute on and deliver what they said they would do today, Intel will be a future player in AI.”

While GPUs are doing most of the “heavy lifting” for deep neural net training today, there’s no reason why Intel — which acquired FPGA manufacturer Altera in 2015 — can’t influence the technological direction that AI follows.

“If you look at how quickly they have pulled together Altera, Nervana, Phi, Xeon and all the required software work, it’s impressive for such a large company,” Moorhead says. “It’s now up to Intel to flawlessly execute.”