At the AMD Tech Summit in Sonoma, Calif., last week (Dec. 7-9), CEO Lisa Su unveiled the company’s vision to accelerate machine intelligence over the next five to ten years with an open and heterogeneous computing approach and a new suite of hardware and open-source software offerings.

The roots of this strategy can be traced back to the company’s acquisition of graphics chipset manufacturer ATI in 2006 and the subsequent launch of the CPU-GPU hybrid Fusion generation of computer processors. In 2012, the Fusion platform matured into the Heterogeneous System Architecture (HSA), now owned and maintained by the HSA Foundation.

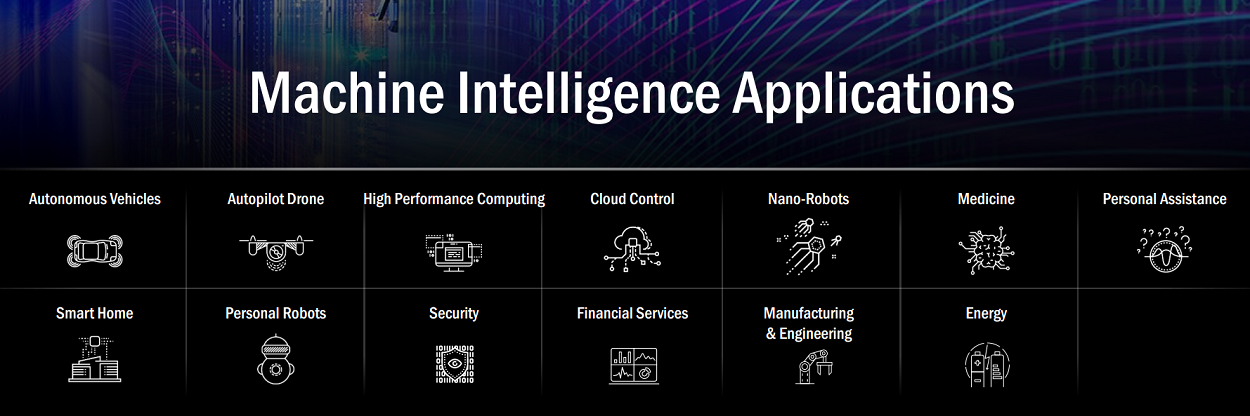

Ten years since launching Fusion, AMD believes it has found the killer app for heterogeneous computing in machine intelligence, which is driven by exponential data surges.

“We generate 2.5 quintillion bytes of data every single day – whether you’re talking about Tweets, YouTube videos, Facebook, Instagram, Google searches or emails,” said Su. “We have incredible amounts of data out there. And the thing about this data is it’s all different – text, video, audio, monitoring data. With all this different data, you really are in a heterogeneous system and that means you need all types of computing to satisfy this demand. You need CPUs, you need GPUs, you need accelerators, you need ASICs, you need fast interconnect technology. The key to it is it’s a heterogeneous computing architecture.

“Why are we so excited about this? We’ve actually been talking about heterogeneous computing for the last ten years,” Su continued. “This is the reason we wanted to bring CPUs and GPUs together under one roof and we were doing this when people didn’t understand why we were doing this and we were also learning about what the market was and where the market needed these applications, but it’s absolutely clear that for the machine intelligence era, we need heterogeneous compute.”

Aiming to boost the performance, efficiency, and ease of implementation of deep learning workloads, AMD is introducing a brand-new hardware platform, Radeon Instinct, and new Radeon open source software solutions.

The Instinct brand will launch in the first half of 2017 with three accelerators (MI6, MI8 and MI25):

- The Radeon Instinct MI6 accelerator is based on the Polaris GPU architecture. It is an inference accelerator optimized for jobs/second/Joule with 5.7 teraflops of peak FP16 performance at 150W board power and 16GB of GPU memory.

- The Radeon Instinct MI8 accelerator is a small form-factor inference accelerator based on the “Fiji” Nano GPU. It offers 8.2 teraflops of peak FP16 performance at less than 175W board power and 4GB of High-Bandwidth Memory (HBM).

- The Radeon Instinct MI25 accelerator is based on the brand-new Vega GPU architecture. It is designed for deep learning training, optimized for time-to-solution. Feeds and speeds aren’t available yet, but it will include 2x packed math support, high bandwidth cache and controller and will be available in configurations of less than 300 watts.
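For a rough sense of how the two inference cards compare on efficiency, the quoted figures can be turned into peak FP16 throughput per watt. This is only a back-of-the-envelope sketch: it uses the peak numbers and board power listed above, treats the MI8's "less than 175W" as exactly 175W (so its result is a lower bound), and says nothing about the jobs/second/Joule metric AMD cites, which depends on real workloads. The function and dictionary names are illustrative.

```python
# Peak FP16 throughput and board power as quoted in the article.
# MI8's 175 W is an upper bound ("less than 175W"), so its ratio is a floor.
ACCELERATORS = {
    "MI6": {"fp16_tflops": 5.7, "board_watts": 150},
    "MI8": {"fp16_tflops": 8.2, "board_watts": 175},
}


def gflops_per_watt(fp16_tflops, board_watts):
    """Convert peak teraflops and board power into gigaflops per watt."""
    return fp16_tflops * 1000 / board_watts


for name, spec in ACCELERATORS.items():
    print(f"{name}: {gflops_per_watt(**spec):.1f} GFLOPS/W (peak FP16)")
```

On these numbers the MI6 works out to about 38 GFLOPS per watt and the MI8 to at least about 47. The MI25 is left out because AMD has not yet published its throughput.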

All the Instinct cards are built exclusively by AMD and feature passive cooling, AMD MultiGPU (MxGPU) hardware virtualization technology conforming with the SR-IOV (Single Root I/O Virtualization) industry standard, and 64-bit PCIe addressing with Large Base Address Register (BAR) support for multi-GPU peer-to-peer communication.

AMD also announced MIOpen, a free, open-source library for GPU accelerators aimed at easing implementation of high-performance machine intelligence applications. Availability for MIOpen is planned for Q1 2017. It will be part of the ROCm software stack, AMD’s open-source HPC-class platform for GPU computing.

“We are going to address key verticals that leverage a common infrastructure,” said Raja Koduri, senior vice president and chief architect of Radeon Technologies Group. “The building block is our Radeon Instinct hardware platform, and above that we have the completely open source Radeon software platform. On top of that we’re building optimized machine learning frameworks and libraries.”

AMD is also investing in open interconnect technologies for heterogeneous accelerators; the company is a founding member of CCIX, Gen-Z and OpenCAPI.

Koduri previewed several of the server platforms that will combine Radeon Instinct with AMD’s upcoming “Naples” server-class chip. You’ll recall that Naples is a 32-core (64-thread) server CPU based on the Zen microarchitecture, which is purported to enable a 40 percent generational improvement in instructions per clock. Koduri said that customers AMD spoke with at SC16 were most excited about this merging of technology.

“The server platform will be optimized for heterogeneous I/O,” Koduri said, “and will lower the system cost for heterogeneous computing dramatically. It’s the lowest latency architecture, it has peer to peer communication, leveraging that large BAR support, so that you can have many GPUs attached to a single node, not one GPU, not two GPUs, but four, eight, or 16 GPUs.”

“Many times we are required to have multiple CPUs like dual or quad CPUs in order to get a certain memory footprint or to get a certain number of GPUs connected to that. If we are able to get that done in a single socket, that’s going to decrease the cost of deployment by increasing performance,” said Vik Malyala, senior director of marketing at Supermicro, during a panel presentation at the AMD Tech Summit.

Supermicro is one of the server partners working with AMD to execute its heterogeneous hardware strategy. The Supermicro SYS-1028GQ-TRT box will sport three Radeon Instinct boards.

Inventec, AMD’s ODM partner, is building the following platforms:

- Inventec K888 G3 is a 100 teraflop (FP16) box with four Radeon Instinct MI25 boards.

- The Falconwitch platform houses 16 Radeon Instinct MI25 boards and delivers up to 400 teraflops (FP16).

- The Inventec Rack with Radeon Instinct stacks multiple Falconwitch devices to provide up to 3 petaflops (FP16) performance.
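The platform figures above are internally consistent and hint at what a single MI25 might deliver. The short calculation below divides each platform's quoted FP16 throughput by its board count, then checks how many Falconwitch units the rack figure would correspond to. AMD has not published MI25 specifications, so the per-board number is an inference from these platform totals, not a confirmed spec, and the rack count is a rough estimate given the "up to" wording.

```python
# Platform totals as quoted in the article (peak FP16 teraflops).
PLATFORMS = {
    "Inventec K888 G3": {"boards": 4, "fp16_tflops": 100},
    "Falconwitch": {"boards": 16, "fp16_tflops": 400},
}
RACK_FP16_TFLOPS = 3000  # "up to 3 petaflops" expressed in teraflops


def per_board_tflops(boards, fp16_tflops):
    """Implied peak FP16 teraflops per board (an inference, not an AMD spec)."""
    return fp16_tflops / boards


for name, platform in PLATFORMS.items():
    implied = per_board_tflops(**platform)
    print(f"{name}: {implied:.1f} TFLOPS per MI25 board (implied)")

# Number of Falconwitch units needed to reach the quoted rack figure.
falconwitch_units = RACK_FP16_TFLOPS / PLATFORMS["Falconwitch"]["fp16_tflops"]
print(f"Rack: about {falconwitch_units:.1f} Falconwitch units for 3 petaflops")
```

Both platforms imply roughly 25 teraflops of peak FP16 per MI25 board, and the rack figure corresponds to about seven and a half Falconwitch units, which suggests the 3 petaflops number is rounded.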

The Naples server CPU is expected to debut in the second quarter of 2017. AMD said that demonstrations of Naples will be forthcoming as it gets closer to launch.

The AMD Tech Summit follows the inaugural summit held last December (2015). That first event was initiated by Raja Koduri as a team-building activity for the newly minted Radeon Technologies Group. The initial team of about 80, essentially hand-picked by Koduri to focus on graphics, met in Sonoma along with about 15 members of the press. The event was expanded this year to accommodate other AMD departments and nearly 100 media and analyst representatives.