Last week IBM reported successfully using one million phase change memory (PCM) devices to implement and demonstrate an unsupervised learning algorithm running in memory. It’s another interesting and potentially important step in the quickening scramble to develop in-memory computing techniques that overcome the memory-to-processor data transfer bottleneck inherent in the von Neumann architecture. IBM promises big gains from PCM technology.

“When compared to state-of-the-art classical computers, this prototype technology is expected to yield 200x improvements in both speed and energy efficiency, making it highly suitable for enabling ultra-dense, low-power, and massively-parallel computing systems for applications in AI,” says IBM researcher Abu Sebastian in an account of the work posted on the IBM Research Zurich website.

In this particular research, IBM demonstrated the ability to identify “temporal correlations in unknown data streams.” One of the examples, perhaps chosen tongue-in-cheek, used the technique to detect and reproduce an image of computer pioneer Alan Turing. The full research is presented in the paper ‘Temporal correlation detection using computational phase-change memory’, published in Nature Communications last week.

Evangelos Eleftheriou, an IBM Fellow and co-author of the paper, is quoted in the blog post: “This is an important step forward in our research of the physics of AI, which explores new hardware materials, devices and architectures. As the CMOS scaling laws break down because of technological limits, a radical departure from the processor-memory dichotomy is needed to circumvent the limitations of today’s computers. Given the simplicity, high speed and low energy of our in-memory computing approach, it’s remarkable that our results are so similar to our benchmark classical approach run on a von Neumann computer.”

IBM used PCM devices based on a layer of germanium antimony telluride alloy sandwiched between two electrodes. Pulsing current through a device heats the material and changes how much of it between the electrodes is crystalline versus amorphous (its phase); this, in turn, sets the device’s conductance. (For background, see the HPCwire article “IBM Phase Change Device Shows Promise for Emerging AI Apps.”)
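To make the idea of conductance changing with each current pulse concrete, here is a toy Python model of a single cell. It is not IBM’s device model: the class `ToyPCMDevice`, its parameters, and the rule that each SET pulse closes a fixed fraction of the gap to a maximum conductance are hypothetical simplifications. The sketch only illustrates that repeated partial-crystallization pulses raise conductance step by step (with shrinking increments), while a RESET pulse returns the cell to its low-conductance, amorphous state.

```python
from dataclasses import dataclass, field


@dataclass
class ToyPCMDevice:
    """Hypothetical, highly simplified PCM cell (arbitrary conductance units).

    The cell starts fully amorphous (g_min). Each SET pulse crystallizes part of
    the remaining amorphous material, so conductance rises toward g_max with
    diminishing increments. A RESET pulse re-amorphizes the cell.
    """
    g_min: float = 0.1
    g_max: float = 10.0
    rate: float = 0.1  # hypothetical fraction of remaining headroom gained per unit-strength pulse
    g: float = field(init=False)

    def __post_init__(self) -> None:
        # A fresh cell starts in the amorphous, low-conductance state.
        self.g = self.g_min

    def set_pulse(self, strength: float = 1.0) -> None:
        # Partial crystallization: close a fraction of the gap to g_max.
        fraction = min(1.0, max(0.0, self.rate * strength))
        self.g += fraction * (self.g_max - self.g)

    def reset_pulse(self) -> None:
        # Melt-quench back to the amorphous, low-conductance state.
        self.g = self.g_min


cell = ToyPCMDevice()
for pulse in range(1, 6):
    cell.set_pulse()
    print(f"after SET pulse {pulse}: g = {cell.g:.3f}")
cell.reset_pulse()
print(f"after RESET: g = {cell.g:.3f}")
```

The accumulative behavior is what lets a device act as a memory and a simple integrator at the same time: its conductance records how many (and how strong) pulses it has received.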

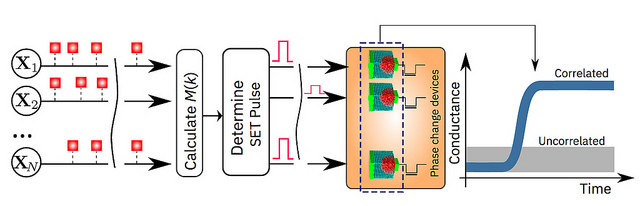

Shown below is a schematic of the IBM algorithm.

To demonstrate the technology, the authors chose two time-based examples and compared their results with traditional machine-learning methods such as k-means clustering:

- Simulated Data: one million binary (0 or 1) random processes organized on a 2D grid based on a 1000 × 1000-pixel, black-and-white profile drawing of the famed British mathematician Alan Turing. The IBM scientists made all the pixels blink on and off at the same rate, but the black pixels turned on and off in a weakly correlated manner: when one black pixel blinks, there is a slightly higher probability that another black pixel will also blink. The random processes were assigned to the million PCM devices, and a simple learning algorithm was implemented. With each blink the PCM array learned, and the devices corresponding to the correlated processes moved to a high-conductance state. In this way, the conductance map of the PCM devices recreates the drawing of Alan Turing.
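The simulated-data experiment can be sketched at a much smaller scale in Python. Everything here is an assumption for illustration, not the paper’s exact procedure: the function names `simulate_correlation_detection` and `classify`, the way correlated processes are generated (each correlated process copies a hidden shared reference bit with probability √c, otherwise draws independently, so all processes keep the same blink rate), and the update rule, in which every active process’s device receives a SET-like increment proportional to how far the number of processes active at that step exceeds its expected value. Conductance is modeled as a plain linear accumulator with no saturation.

```python
import math
import random


def simulate_correlation_detection(
    n_processes: int = 100,
    n_correlated: int = 10,
    rate: float = 0.05,
    corr: float = 0.5,
    steps: int = 5000,
    eta: float = 0.01,
    seed: int = 0,
) -> list[float]:
    """Return one simulated 'conductance' per process (hypothetical model).

    Processes 0..n_correlated-1 are correlated with each other; the rest are
    independent. Every process blinks at the same average rate.
    """
    rng = random.Random(seed)
    copy_prob = math.sqrt(corr)  # yields pairwise correlation `corr` within the group
    expected_active = n_processes * rate
    conductance = [0.0] * n_processes

    for _ in range(steps):
        reference = rng.random() < rate  # hidden common driver of the correlated group
        events = []
        for i in range(n_processes):
            if i < n_correlated and rng.random() < copy_prob:
                events.append(reference)
            else:
                events.append(rng.random() < rate)

        # Collective activity: how many processes fired at this step.
        momentum = sum(events)
        # Assumed SET strength: only above-average collective activity
        # crystallizes the devices of the processes that fired.
        strength = max(0.0, momentum - expected_active)
        for i, fired in enumerate(events):
            if fired:
                conductance[i] += eta * strength

    return conductance


def classify(conductance: list[float]) -> list[int]:
    """Flag processes whose conductance lies above the min/max midpoint."""
    threshold = (min(conductance) + max(conductance)) / 2
    return [i for i, g in enumerate(conductance) if g > threshold]


g = simulate_correlation_detection()
flagged = classify(g)
print("flagged as correlated:", flagged)
```

Because correlated processes tend to fire together, their active steps coincide with above-average collective activity more often, so their devices accumulate more conductance. Thresholding the final conductances then separates the two groups, much as the black pixels stand out in the Turing conductance map.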

- Real-World Data: actual rainfall data collected at one-hour intervals over six months from 270 weather stations across the USA. Each hour was labelled “1” if it rained during that hour and “0” if it didn’t. Classical k-means clustering and the in-memory computing approach agreed on the classification of 245 of the 270 weather stations. Of the remaining 25, in-memory computing classified 12 stations as uncorrelated that k-means clustering had marked as correlated, and 13 stations as correlated that k-means clustering had marked as uncorrelated.
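The station counts are consistent: 245 + 12 + 13 = 270, an agreement rate of 245/270 ≈ 90.7%. A small Python helper of the kind one might use to tally such a comparison is sketched below. The function name and dictionary keys are hypothetical, and the example labels are synthetic, built only to reproduce the reported totals; the article does not say how the 245 agreeing stations split between correlated and uncorrelated, so that split is arbitrary here.

```python
from collections.abc import Sequence


def compare_labels(in_memory: Sequence[int], kmeans: Sequence[int]) -> dict[str, int]:
    """Tally how two binary labelings (1 = correlated, 0 = uncorrelated) agree."""
    if len(in_memory) != len(kmeans):
        raise ValueError("both labelings must cover the same stations")
    counts = {"agree": 0, "in_memory_uncorr_kmeans_corr": 0, "in_memory_corr_kmeans_uncorr": 0}
    for im, km in zip(in_memory, kmeans):
        if im == km:
            counts["agree"] += 1
        elif im == 0:
            # k-means said correlated, in-memory said uncorrelated
            counts["in_memory_uncorr_kmeans_corr"] += 1
        else:
            # in-memory said correlated, k-means said uncorrelated
            counts["in_memory_corr_kmeans_uncorr"] += 1
    return counts


# Synthetic labels that only reproduce the reported totals for 270 stations.
# The 100/145 split of the agreeing stations is arbitrary (not reported).
kmeans = [1] * 12 + [0] * 13 + [1] * 100 + [0] * 145
in_memory = [0] * 12 + [1] * 13 + [1] * 100 + [0] * 145

counts = compare_labels(in_memory, kmeans)
print(counts)  # → {'agree': 245, 'in_memory_uncorr_kmeans_corr': 12, 'in_memory_corr_kmeans_uncorr': 13}
print(f"agreement: {counts['agree'] / len(kmeans):.1%}")  # → agreement: 90.7%
```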

Shown below is figure 5 from the paper with further details of the examples:

“Memory has so far been viewed as a place where we merely store information. But in this work, we conclusively show how we can exploit the physics of these memory devices to also perform a rather high-level computational primitive. The result of the computation is also stored in the memory devices, and in this sense the concept is loosely inspired by how the brain computes,” according to Sebastian, lead author of the paper and an exploratory memory and cognitive technologies scientist at IBM Research. He also leads a European Research Council-funded project on this topic.

Here’s an excerpt from the paper and a link to a short video on the work:

“We show how the crystallization dynamics of PCM devices can be exploited to detect statistical correlations between event-based data streams. This can be applied in various fields such as the Internet of Things (IoT), life sciences, networking, social networks, and large scientific experiments. For example, one could generate an event-based data stream based on the presence or absence of a specific word in a collection of tweets. Real-time processing of event-based data streams from dynamic vision sensors is another promising application area. One can also view correlation detection as a key constituent of unsupervised learning where one of the objectives is to find correlated clusters in data streams.”
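The tweet example from the excerpt can be made concrete with a short Python sketch that turns time-stamped text into a binary event stream, one value per time window. The function `word_event_stream`, the `(timestamp, text)` input format, the fixed-width time bins, and the whole-word, case-insensitive match are all assumptions for illustration. Several such streams (one per word) could then serve as the processes whose correlations a scheme like IBM’s would try to detect.

```python
import re
from collections.abc import Iterable


def word_event_stream(
    tweets: Iterable[tuple[float, str]],
    word: str,
    bin_seconds: float,
    n_bins: int,
) -> list[int]:
    """Binary event stream: 1 if `word` appears in any tweet within a time bin."""
    # Whole-word, case-insensitive match (an assumption; other tokenizations work too).
    pattern = re.compile(rf"\b{re.escape(word)}\b", re.IGNORECASE)
    stream = [0] * n_bins
    for timestamp, text in tweets:
        b = int(timestamp // bin_seconds)  # which time window this tweet falls in
        if 0 <= b < n_bins and pattern.search(text):
            stream[b] = 1
    return stream


tweets = [(5.0, "Rain again today"), (70.0, "sunny walk"), (130.0, "RAIN!"), (200.0, "rainbow")]
print(word_event_stream(tweets, "rain", bin_seconds=60, n_bins=4))  # → [1, 0, 1, 0]
```

Note that “rainbow” does not count as an occurrence of “rain” under the whole-word match; a substring match would be an equally valid design choice, with different correlation results.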

Link to paper: https://www.nature.com/articles/s41467-017-01481-9

Link to article: https://www.ibm.com/blogs/research/2017/10/ibm-scientists-demonstrate-memory-computing-1-million-devices-applications-ai/