In preparation for the arrival of Aurora, slated to be the first U.S. exascale supercomputer, Argonne National Laboratory is working to make techniques such as in situ and in transit visualization and analysis available to its user community and to the HPC community at large. The result is a multi-institutional DOE effort, involving Argonne, private companies, and other national labs, to leverage SENSEI for analysis and scalable interactive rendering. SENSEI is a portable framework that enables in situ, in transit, and traditional batch visualization workflows using either ray tracing or triangle-based rendering back ends.

In situ visualization has been identified as a key technology to enable science at the exascale[i]. In situ visualization means that the visualization occurs on the same nodes that perform the computation. In transit visualization is less tightly coupled to the simulation: it runs on additional nodes, which can help load-balance computationally expensive simulations like LAMMPS. Unlike in situ, in transit does incur some overhead when moving data across the communications fabric between nodes. Both methods keep the data in memory and avoid writing to storage.
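The difference between the two data paths can be sketched in a few lines of Python. This is a toy illustration only, not SENSEI or LAMMPS code: the function names (`simulate`, `analyze`, `run_in_situ`, `run_in_transit`) are hypothetical, a worker thread stands in for a separate set of analysis nodes, and a queue stands in for the communications fabric.

```python
import queue
import threading


def simulate(steps, n=4):
    """Toy 'simulation': yields one list of particle positions per time step."""
    positions = [float(i) for i in range(n)]
    for step in range(steps):
        positions = [x + 0.5 for x in positions]
        yield step, positions


def analyze(step, positions):
    """Toy 'analysis': reduce a time step to its mean position."""
    return step, sum(positions) / len(positions)


def run_in_situ(steps):
    """In situ: analysis runs inline, sharing resources with the simulation."""
    return [analyze(step, pos) for step, pos in simulate(steps)]


def run_in_transit(steps):
    """In transit: each step is handed to a separate worker for analysis."""
    channel = queue.Queue()  # stands in for the fabric between nodes
    results = []

    def worker():
        while True:
            item = channel.get()
            if item is None:  # sentinel: the simulation has finished
                break
            results.append(analyze(*item))

    thread = threading.Thread(target=worker)
    thread.start()
    for step, pos in simulate(steps):
        channel.put((step, list(pos)))  # the copy models data-movement overhead
    channel.put(None)
    thread.join()
    return results


if __name__ == "__main__":
    print(run_in_situ(3))
    print(run_in_transit(3))
```

Both paths produce the same analysis results without touching storage. What changes is where the analysis work runs and whether a copy of the data has to be moved to get there.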

Joseph Insley (visualization and analysis team lead at the Argonne Leadership Computing Facility) points out, “With SENSEI, users can utilize in situ and in transit techniques to address the widening gap between Flop/s and I/O capacity which is making full-resolution, I/O-intensive post hoc analysis prohibitively expensive, if not impossible.” Silvio Rizzi (assistant computer scientist, Argonne) highlights portability when he states, “the idea behind SENSEI is to write once and use anywhere.”
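The "write once" idea can be pictured as a single instrumentation point in the simulation, with the analysis back end selected by name at run time. The sketch below is a hypothetical illustration of that pattern in Python; the `Bridge` class, the `BACKENDS` table, and the two toy analyses are assumptions made for this example and are not SENSEI's actual API.

```python
def minmax(data):
    """Toy analysis: range of the data."""
    return min(data), max(data)


def histogram(data, bins=2):
    """Toy analysis: equal-width histogram counts."""
    lo, hi = min(data), max(data)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data
    counts = [0] * bins
    for x in data:
        counts[min(int((x - lo) / width), bins - 1)] += 1
    return counts


# Hypothetical registry: the back end is chosen by name, e.g. from a config file.
BACKENDS = {"minmax": minmax, "histogram": histogram}


class Bridge:
    """Hypothetical single point of contact between simulation and analysis."""

    def __init__(self, backend_name):
        self.analysis = BACKENDS[backend_name]
        self.results = []

    def execute(self, step, data):
        self.results.append((step, self.analysis(data)))


def run_simulation(bridge, steps=3):
    """Toy simulation, instrumented once; it never names a specific back end."""
    data = [0.0, 1.0, 2.0, 3.0]
    for step in range(steps):
        data = [x * 2.0 for x in data]
        bridge.execute(step, data)  # the only analysis-aware line
    return bridge.results


if __name__ == "__main__":
    print(run_simulation(Bridge("minmax"), steps=2))
    print(run_simulation(Bridge("histogram"), steps=2))
```

Swapping `"minmax"` for `"histogram"` changes the analysis without touching `run_simulation`, which is the property the quote describes.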

The Argonne team, led by Nicola Ferrier as PI, has adapted the popular LAMMPS (Large-scale Atomic/Molecular Massively Parallel Simulator) code to demonstrate the benefits of the SENSEI framework. The integration of SENSEI made use of existing mechanisms in LAMMPS for coupling with other simulation codes.[ii]

Understanding the choice of LAMMPS as a SENSEI testbed

Paul Navrátil, director of Visualization at the Texas Advanced Computing Center (TACC), helps us understand the meaning and importance of in situ and in transit visualization to the general HPC community as well as the choice of LAMMPS by the ALCF team.

Just as Argonne will host the fastest U.S. supercomputer with Aurora, TACC will be home to Frontera, which will become the fastest academic supercomputer in the United States when it becomes operational in 2019.

Navrátil notes, “We expect in situ workflows to become increasingly necessary on Frontera and across all large-scale simulation science.” He believes that, “In transit analysis will also play an increasing role as simulations improve support for loosely-coupled in situ frameworks. With an in transit pathway, the simulation resources do not need to be shared for analysis tasks, which is favorable when analysis is compute-intensive, or when the simulation requires all available resources itself.”

LAMMPS is both compute-intensive and very popular, which makes it a natural testbed for SENSEI: it lets large numbers of users explore the benefits of in situ visualization as well as the load-balancing benefits of in transit visualization and analysis. SENSEI is also being used in multiple science domains, including molecular dynamics and materials science.

An in transit workflow using SENSEI and OSPRay is shown below.

SENSEI is very flexible and allows researchers to perform analysis and either use OpenGL rendering or create photorealistic images. Jim Jeffers (senior director and senior principal engineer, Intel Visualization Solutions) notes that the interactive performance delivered by the Intel Rendering Framework and photorealistic rendering with the freely available OSPRay library and viewer, “addresses the need and creates the want” for photorealistic rendering. Succinctly, interactive ray tracing with its inherent lighting capability lets scientists get more from their data. Jeffers is famous for stating, “a picture is worth an exabyte.”

The ALCF team provided the following figure to illustrate what is possible when instrumenting LAMMPS with SENSEI. To create this in transit visualization, they used the Intel OSPRay library, which is part of the Intel Rendering Framework, and libIS, a lightweight, flexible library. However, SENSEI was designed[iv] to work with other libraries in place of libIS, such as Catalyst (part of ParaView), ADIOS (from Oak Ridge National Laboratory), and LibSim (part of VisIt), as well as GPU-based software to perform in transit visualizations.

Understanding Software Defined Visualization (SDVis)

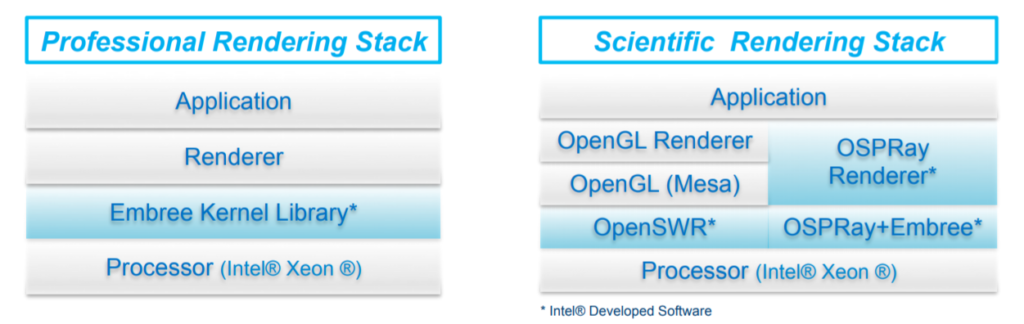

The foundation of CPU-based in situ and in transit visualization is Software Defined Visualization. Its core functionality is provided by the freely available, open-source Intel OSPRay, Embree, and OpenSWR libraries. These libraries have been incorporated into the Intel® Rendering Framework stack as shown below.

Using CPUs for rendering has taken the HPC community by storm. Rizzi summarizes the interest at Argonne by noting, “We want to enable visualization on our supercomputers which are CPU-based.” Navrátil highlights TACC’s commitment by pointing out that, “CPU-based SDVis will be our primary visual analysis mode on Frontera, leveraging the Intel Rendering Framework stack.”

Scaling and the ability to run efficiently are two key ideas behind the OSPRay ray tracing and OpenSWR OpenGL SDVis renderers.

Kitware, for example, performed trillion-triangle OpenGL visualizations using the LANL Trinity supercomputer. David DeMarle (visualization luminary and engineer at Kitware) observes that, “CPU-based OpenGL performance does not trail off even when rendering meshes containing one trillion (10^12) triangles on the Trinity leadership class supercomputer. Further, we might see a 10-20 trillion triangle per second result as our current benchmark used only 1/19th of the machine.” The ability of the CPU to access large amounts of memory is key to realizing trillion-triangle-per-second rendering capability.
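As a back-of-the-envelope reading of DeMarle's projection, assume ideal linear scaling from 1/19th of the machine to the whole machine. That assumption is made here for illustration and is not a claim from the benchmark. Under it, the 10-20 trillion figure implies roughly the following rate on the benchmarked partition. The symbols $r$ (rate on the partition) and $R_{\text{full}}$ (projected full-machine rate) are introduced only for this sketch.

```latex
R_{\text{full}} \approx 19\,r
\quad\Longrightarrow\quad
r \approx \frac{R_{\text{full}}}{19},
\qquad
\frac{10\times10^{12}}{19} \approx 0.53\times10^{12}
\;\le\; r \;\le\;
\frac{20\times10^{12}}{19} \approx 1.05\times10^{12}
\ \text{triangles/s}
```

In other words, the projection is consistent with a partition-level rate on the order of half a trillion to one trillion triangles per second. Real scaling is rarely perfectly linear, so this is an upper-end estimate rather than a measurement.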

Meanwhile, OSPRay users have demonstrated the ability to render and visualize large, photorealistic images of everything from cosmological data sets to molecules and complex scenes. No special hardware is required for rendering, which can achieve interactive photorealism on as few as eight Intel Xeon Scalable 8180 processors or scale up to high-quality rendering on in situ nodes. [vii] [viii] [ix] [x]

Viewing the rendered images

The “visualize anywhere” nature of CPU-based SDVis means that visualizing locally or remotely is possible on devices that can display from memory. Extraordinary display flexibility without device dependencies makes “visualize anywhere” even better. HPC users appreciate how they can view results on their laptops and then switch to display walls or a CAVE.

SENSEI also supports existing batched save-to-storage workflows.

Summary

The HPC community has always been about pressing the limits of computation. For this reason, in situ and in transit visualization frameworks have been created to work with CPU-based rendering and keep data in memory rather than moving it to and from storage. In this way, visualization can scale and keep pace with simulation as the HPC community runs on petascale systems and anticipates the next-generation exascale supercomputers.

Rob Farber is a global technology consultant and author with an extensive background in HPC and in developing machine learning technology that he applies at national labs and commercial organizations. Rob can be reached at [email protected].

[i] https://science.energy.gov/~/media/ascr/pdf/program-documents/docs/Exascale-ASCR-Analysis.pdf

[ii] https://lammps.sandia.gov/doc/Howto_couple.html

[iii] Will Usher, Silvio Rizzi, Ingo Wald, Jefferson Amstutz, Joseph Insley, Venkatram Vishwanath, Nicola Ferrier, Michael E. Papka, and Valerio Pascucci. 2018. libIS: A Lightweight Library for Flexible In Transit Visualization. In ISAV: In Situ Infrastructures for Enabling Extreme-Scale Analysis and Visualization (ISAV ’18), November 12, 2018, Dallas, TX, USA. ACM, New York, NY, USA, 6 pages. https://doi.org/10.1145/3281464.3281466

[v] https://doi.org/10.1145/3281464.3281466

[vi] https://tacc.github.io/visitOSPRay/

[viii] http://www.cgw.com/Press-Center/In-Focus/2018/Scalable-CPU-Based-SDVis-Enables-Interactive-Pho.aspx

[ix] https://www.ixpug.org/documents/1496440983IXPUG_insitu_S1_Jeffers.pdf

[x] http://www.techenablement.com/third-party-use-cases-illustrate-the-success-of-cpu-based-visualization/