Quantum computing pioneer D-Wave Systems today “previewed” plans for its next-gen adiabatic annealing quantum computing platform, which will feature a new underlying fab technology, reduced noise, increased connectivity, 5000-qubit processors, and an expanded toolset for creating hybrid quantum-classical applications. The company plans to “incrementally” roll out platform elements over the next 18 months.

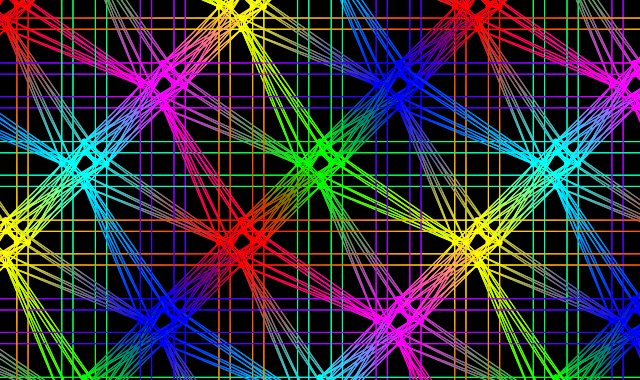

One major change is the implementation of a new topology, Pegasus, in which each qubit is connected to 15 other qubits, making it “the most connected of any commercial quantum system in the world,” according to D-Wave. In the current topology, Chimera, each qubit is connected to six other qubits. The 2.5x jump in connectivity will enable users to tackle larger problems with fewer qubits and achieve better performance, D-Wave reports.
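The Chimera figure can be checked with a few lines of code. The sketch below is plain Python, not the Ocean SDK. It assumes the commonly published description of Chimera as a 16 x 16 grid of eight-qubit cells for the D-Wave 2000Q, with each cell a complete bipartite K4,4 block. The qubit labels and function names are illustrative. Pegasus is not constructed here; its degree of 15 is taken from D-Wave's announcement.

```python
from itertools import product

def chimera_edges(m, t=4):
    """Couplers of an m x m Chimera grid built from K_{t,t} unit cells.

    Qubits are labelled (row, col, side, k). The labelling is an
    illustrative convention, not D-Wave's own indexing.
    """
    edges = set()
    for r, c in product(range(m), repeat=2):
        # Inside a cell: every side-0 qubit couples to every side-1 qubit.
        for i, j in product(range(t), repeat=2):
            edges.add(((r, c, 0, i), (r, c, 1, j)))
        for k in range(t):
            # Side-0 qubits also couple to their twin in the next row.
            if r + 1 < m:
                edges.add(((r, c, 0, k), (r + 1, c, 0, k)))
            # Side-1 qubits also couple to their twin in the next column.
            if c + 1 < m:
                edges.add(((r, c, 1, k), (r, c + 1, 1, k)))
    return edges

def degrees(edges):
    """Count how many couplers touch each qubit."""
    deg = {}
    for u, v in edges:
        deg[u] = deg.get(u, 0) + 1
        deg[v] = deg.get(v, 0) + 1
    return deg

deg = degrees(chimera_edges(16))
num_qubits = len(deg)            # qubits in the 16 x 16 grid
max_degree = max(deg.values())   # couplers on an interior qubit
ratio = 15 / max_degree          # Pegasus degree (per D-Wave) vs. Chimera
print(num_qubits, max_degree, ratio)
```

Interior qubits have four couplers inside their cell and two to neighboring cells, which is where the figure of six comes from. Qubits on the edge of the grid have fewer.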

“The reason we are announcing the preview now is because we will be making this technology available incrementally over the next 18 months and we wanted to provide a framework,” Alan Baratz, executive vice president, R&D and Chief Product Officer, D-Wave, told HPCwire. The plan, he said, is “to start by talking about the new topology now, how it fits into the whole. Then we’ll be announcing new tools, how they fit in. Next you’ll start to see some of the new low noise technology – that will initially be on our current generation system and you’ll see that in the cloud.” The final piece will be early versions of the 5000-qubit next generation systems.

It’s an ambitious plan. Identifying significant milestones now, but without specific dates, is an interesting gambit. Starting now, users can use D-Wave’s Ocean development tools, which include compilers for porting problems to the Pegasus topology. D-Wave launched its cloud-accessed development platform, LEAP, last fall, and many of the new features and tools will show up there first (see HPCwire article, D-Wave Is Latest to Offer Quantum Cloud Platform).

Bob Sorensen, chief analyst for quantum computing at Hyperion Research, had a positive reaction to D-Wave’s plan: “This announcement indicates that D-Wave continues to advance the state of the art in its quantum computing efforts. Although the increase from 2000 to 5000 qubits is impressive in itself, what strikes me is the new Pegasus topology. I expect that this increased connectivity will prove to be a major driver of new, interesting, and heretofore unrealizable QC algorithms and applications. Finally, I think it is important to note that D-Wave continues to listen to its wide, growing, and increasingly experienced customer base to help guide D-Wave’s future system designs. Being able to tap into the collective expertise of such a user base continues to be a critical element driving the evolution of D-Wave systems.”

Altogether, says D-Wave, the features of its next-gen system are expected to accelerate the race for commercial relevance and so-called quantum advantage – the goal of solving a problem sufficiently better on a quantum computer than on a classical computer to warrant switching to quantum computing for that application. D-Wave has aggressively marketed its success selling machines to commercial and government customers and says those users have developed “more than 100 early applications in areas as diverse as airline scheduling, election modeling, quantum chemistry simulation, automotive design, preventative healthcare, logistics and more.” How ready those apps are is sometimes debated. In any case, Baratz expects the next gen platform to have enough power (compute, developer tools, etc.) to lead to demonstrating customer advantage.

Sorensen is more circumspect about quantum advantage’s importance: “To my mind, the issue of quantum advantage is not a critical one. I really don’t think most users care about a somewhat artificial milestone. What matters is the development of algorithms/applications that bring a new capability to an existing problem or offer some significant speed-up over an existing application. Give a user 50X performance improvement and he/she is not going to lose much sleep debating quantum advantage.

“Bottom line. If at some point the headline reads, ‘Company Z demonstrates quantum advantage in algorithm X,’ what will that mean to the existing and potential QC user base writ large? Not much I suspect. Not without a spate of algorithms to back it up.”

Here are marketing bullet points as excerpted from D-Wave’s announcement:

- “New Topology: Pegasus is the most connected of any commercial quantum system in the world. Each qubit is connected to 15 other qubits (compared to Chimera’s 6), giving it 2.5x more connectivity. It enables embedding of larger problems with fewer physical qubits. The D-Wave Ocean software development kit (SDK) includes tools for generating the Pegasus topology. Interested users can try embedding their problems on Pegasus.

- “Lower Noise: next generation system will include the lowest noise commercially-available quantum processing units (QPUs) ever produced by D-Wave. This new QPU fabrication technology improves system performance and solution precision to pave the way to greater speedups.

- “Increased Qubit Count: with more than 5000 qubits, the next generation platform will more than double the qubit count of the existing D-Wave 2000Q. Gives programmers access to a larger, denser, more powerful graph for building commercial quantum applications.

- “Expansion of Hybrid Software & Tools: Investments in ease-of-use and automation provide a more powerful hybrid development environment building upon D-Wave Hybrid, allowing developers to run across classical and the next-generation quantum platforms in Python and other common languages. Modular approach incorporates logic to simplify distribution, allowing developers to interrupt processing and synchronize across systems to draw maximum computing power out of each system.

- “Ongoing Releases: components of the D-Wave next generation quantum platform will come to market between now and mid-2020 via ongoing QPU and software updates available through the cloud. The complete system will be available through cloud and on-premise in mid-2020. Users can explore a simulation of the new Pegasus topology today.”

D-Wave didn’t reveal much detail of the enabling technology advances. Mark Johnson, VP, processor design & development, said, “In terms of the integrated circuit we have basically redone the stack and that allowed us to make the design more compact. It also allowed us to get more connectivity. We are also making changes within that stack to reduce the intrinsic contribution to noise and decoherence from the materials. We’re not going to be talking about the recipe, just realize it is a fundamental technology node change, [with] new materials, new fabrication processes, a new stack.”

Baratz said, “I’d add only that the new materials and processes are not just ‘in design’. We’ve actually used them on our current generation system, our 2000 qubit system. We’ve rebuilt it, using this newer technology stack, have several of them operating in our lab now, and are seeing the results from it we expected to see.”

The lower noise technology, said Baratz, will enable longer coherence times and higher quality solutions. The new operating software “will be designed specifically to support hybrid applications and that means we will be significantly reducing latency. This is important for hybrid applications where you run part classically and send to the quantum processors, get the result, run classically, and back and forth,” he said. For LEAP users, D-Wave will also offer new scheduling options so that, instead of having to run in a queue, users can reserve blocks of time if necessary to run longer applications.

A brief review on the D-Wave approach may be useful. It differs rather dramatically from the universal gate-based model. With a gate-model quantum computer you have to specify the sequence of instructions and gates required to solve the problem. In that sense it’s a bit more like programming a classical system where you have to specify the sequence of instructions.

“For our system you don’t do that,” said Baratz. “All you do is specify the problem in a mathematical formulation that our system understands. It understands two different formulations. One of them is the quadratic binary optimization problem. The other is an Ising optimization problem. It’s basically a well-defined mathematical construct. So really programming our system has nothing to do with physics, nothing to do with qubits, nothing to do with entanglement, nothing to do with tuning with pulses; it is about mapping your problem into this mathematical formulation. It’s more like a declarative programming model where you don’t really have to specify the sequence of instructions. As a result it’s much easier to program.”
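To make the two formulations concrete, here is a minimal sketch in plain Python. It does not use D-Wave's SDK. It assumes the usual textbook definitions: a quadratic binary problem minimizes a sum of weights over 0/1 variables, an Ising problem minimizes the same kind of sum over -1/+1 spins, and the two are linked by the substitution x = (s + 1) / 2. The toy problem (pick as many of three items as possible without picking two adjacent ones) and all function names are illustrative.

```python
from itertools import product

def qubo_energy(Q, x):
    """Energy of a 0/1 assignment x under weights Q[(i, j)]."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())

def qubo_to_ising(Q):
    """Rewrite a binary problem in spin form using x = (s + 1) / 2.

    Returns linear biases h, couplings J and a constant offset such that
    qubo_energy(Q, x) == ising_energy(h, J, s) + offset.
    """
    h, J, offset = {}, {}, 0.0
    for (i, j), w in Q.items():
        if i == j:
            # w * x_i = w/2 * s_i + w/2
            h[i] = h.get(i, 0.0) + w / 2
            offset += w / 2
        else:
            # w * x_i * x_j = w/4 * (s_i*s_j + s_i + s_j + 1)
            J[(i, j)] = J.get((i, j), 0.0) + w / 4
            h[i] = h.get(i, 0.0) + w / 4
            h[j] = h.get(j, 0.0) + w / 4
            offset += w / 4
    return h, J, offset

def ising_energy(h, J, s):
    """Energy of a -1/+1 assignment s under biases h and couplings J."""
    return (sum(b * s[i] for i, b in h.items())
            + sum(w * s[i] * s[j] for (i, j), w in J.items()))

def brute_force(Q, n):
    """Try all 2**n assignments; only feasible for tiny n."""
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(Q, x))

# Reward picking each item (-1); penalize picking neighbors 0-1 or 1-2 (+2).
Q = {(0, 0): -1, (1, 1): -1, (2, 2): -1, (0, 1): 2, (1, 2): 2}
best = brute_force(Q, 3)
h, J, offset = qubo_to_ising(Q)
print(best, qubo_energy(Q, best))
```

The program states only what a good answer looks like (the weights), not how to search for it, which is the declarative flavor Baratz describes. The exhaustive search stands in for the annealer and would not scale beyond a handful of variables.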

This description of how D-Wave systems work, taken from D-Wave’s site, may be helpful:

“In nature, physical systems tend to evolve toward their lowest energy state: objects slide down hills, hot things cool down, and so on. This behavior also applies to quantum systems. To imagine this, think of a traveler looking for the best solution by finding the lowest valley in the energy landscape that represents the problem.

“Classical algorithms seek the lowest valley by placing the traveler at some point in the landscape and allowing that traveler to move based on local variations. While it is generally most efficient to move downhill and avoid climbing hills that are too high, such classical algorithms are prone to leading the traveler into nearby valleys that may not be the global minimum. Numerous trials are typically required, with many travelers beginning their journeys from different points.

“In contrast, quantum annealing begins with the traveler simultaneously occupying many coordinates thanks to the quantum phenomenon of superposition. The probability of being at any given coordinate smoothly evolves as annealing progresses, with the probability increasing around the coordinates of deep valleys. Quantum tunneling allows the traveler to pass through hills—rather than be forced to climb them—reducing the chance of becoming trapped in valleys that are not the global minimum. Quantum entanglement further improves the outcome by allowing the traveler to discover correlations between the coordinates that lead to deep valleys.”
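The classical half of that analogy is easy to sketch. The example below is an illustration only: it assumes a made-up one-dimensional landscape and a traveler who always steps to the lower neighboring point. It does not model quantum annealing, tunneling or any D-Wave hardware; it shows why a purely downhill search needs many starting points.

```python
def descend(landscape, start):
    """Walk downhill from `start` until no neighbor is lower."""
    pos = start
    while True:
        neighbours = [p for p in (pos - 1, pos + 1) if 0 <= p < len(landscape)]
        best = min(neighbours, key=lambda p: landscape[p])
        if landscape[best] >= landscape[pos]:
            return pos  # stuck in a valley, which may be only a local one
        pos = best

# Hypothetical energy landscape: heights at 12 coordinates.
landscape = [5, 3, 4, 6, 2, 1, 0, 2, 7, 4, 3, 5]

# One traveler per starting coordinate.
finish = {start: descend(landscape, start) for start in range(len(landscape))}
global_min = min(range(len(landscape)), key=lambda p: landscape[p])
hits = sum(1 for end in finish.values() if end == global_min)
print(f"{hits} of {len(landscape)} travelers reached coordinate {global_min}")
```

In this toy landscape half of the travelers end up in one of two shallower valleys, so a single run has an even chance of returning a non-optimal answer.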

Like its quantum computing rivals IBM and Rigetti, D-Wave is betting heavily on cloud delivery, both as a means of attracting and training QC users and as a way to offer production capability. Of course, D-Wave is still the only vendor selling systems outright for on-premises installation, though IBM’s new IBM Q System One seems to be a step in that direction.

D-Wave has made it quite easy to create a LEAP account. Users can get one minute of free time to try out the system and one minute per month on an ongoing basis for free if they agree to open source any work created. Baratz says a minute of time buys more than you think (~400-to-4,000 experiments). Fees for commercial use start at $2,000 per hour per month with discounts if you sign up for longer periods of time.

No doubt quantum watchers will monitor how well and how promptly D-Wave delivers on its promise. There has been no shortage of optimism from the QC development community (vendor and academia). Likewise the recent $1.25 billion U.S. Quantum Initiative, passed in December, has added to the chorus of those arguing there’s a global quantum computing race with high stakes at risk. We’ll see.

Feature Image: Illustration of Pegasus connectivity, Source: D-Wave Systems