2020 has proven a harrowing year – but it has produced remarkable heroes. To recognize some of them, the Association for Computing Machinery (ACM) this year introduced the Gordon Bell Special Prize for High Performance Computing-Based COVID-19 Research. The prize, which was awarded in a ceremony today at the (virtual) SC20 supercomputing conference, recognizes “outstanding research achievement towards the understanding of the COVID-19 pandemic through the use of high-performance computing.”

Nominations for the prestigious award were selected “based on performance and innovation in their computational methods, in addition to their contributions towards understanding the nature, spread and/or treatment of the disease.” The award is accompanied by a $10,000 prize. The Special Prize for High Performance Computing-Based COVID-19 Research is slated to be awarded in 2021 as well.

The four finalist teams presented virtually at SC20 in advance of the awards ceremony, showcasing the myriad ways in which massive supercomputing has been utilized to provide crucial knowledge around the pandemic and the virus at its core, from atom-by-atom simulations of the viral envelope to person-by-person simulations of major cities.

And the winner is…

Bronis R. de Supinski, chair of the Gordon Bell Prize Committee and CTO for Livermore Computing at Lawrence Livermore National Laboratory (LLNL), took the virtual stage to announce the winning team: a wide-reaching, nationwide collaboration to develop unprecedented simulations of key aspects of the novel coronavirus.

AI-Driven Multiscale Simulations Illuminate Mechanisms of SARS-CoV-2 Spike Dynamics

Team: Lorenzo Casalino, Abigail Dommer, Zied Gaieb, Emilia P. Barros, Terra Sztain, Surl-Hee Ahn, Anda Trifan, Alexander Brace, Anthony Bogetti, Heng Ma, Hyungro Lee, Matteo Turilli, Syma Khalid, Lillian Chong, Carlos Simmerling, David Hardy, Julio Maia, James Phillips, Thorsten Kurth, Abraham Stern, Lei Huang, John McCalpin, Mahidhar Tatineni, Tom Gibbs, John Stone, Shantenu Jha, Arvind Ramanathan and Rommie E. Amaro.

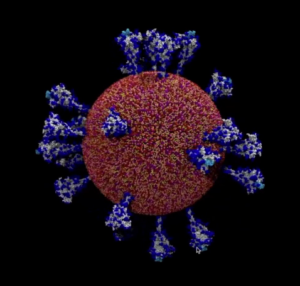

The winning team zeroed in on a part of the SARS-CoV-2 virus that has become notorious to anyone following COVID-19 research: the spike protein, which both provides the coronavirus with its namesake crown-like spikes and allows it to infect human cells. The team used Summit (still the second-most powerful publicly ranked supercomputer) to simulate SARS-CoV-2’s spike protein and viral envelope in a system of 305 million atoms.

“Experiments give us a picture of what these things look like, but they can’t tell us the whole story,” said Rommie Amaro, co-lead of the project and professor and endowed chair of chemistry and biochemistry at the University of California San Diego. “The only way we can do this is through simulations, and right now we are pushing the capabilities of molecular simulations to the limits of the computer architectures that we have on this earth. This is at the edge of possibilities of what people are capable of doing.”

“We are giving people never-before-seen, intimate views of this virus, with resolution that is impossible to achieve experimentally,” she added. “Why we care about this is because if we want to understand how the virus infects the host cell, if we want to be able to design antibodies and new drugs to block and cure infection, if we want to be able to design new therapeutics, this information at this very fine resolution at the atomic level is required.”

To achieve the massive simulation, the team optimized and scaled the Nanoscale Molecular Dynamics (NAMD) code across Summit, a feat made possible through extensive work on other supercomputers, including Frontera, Comet and ThetaGPU. The results illuminated the virus’ sugary glycan shield – which protects it from many pharmaceutical attack strategies – and highlighted the critical role of the virus’ receptor binding domain.

An incredible slate of finalists

Though the SARS-CoV-2 simulations took home the prize at the end of the day, the entire field of finalists illustrated the astonishing work that the HPC community has put into ending the pandemic. Keep reading to learn more about the other three finalist teams.

High-Throughput Virtual Laboratory for Drug Discovery Using Massive Datasets

Team: Jens Glaser, Josh V. Vermaas, David M. Rogers, Jeff Larkin, Scott LeGrand, Swen Boehm, Matthew B. Baker, Aaron Scheinberg, Andreas F. Tillack, Mathialakan Thavappiragasam, Ada Sedova and Oscar Hernandez.

A team based at Oak Ridge National Laboratory (ORNL) also used Summit, this time to screen more than a billion compounds for their ability to bind with two different structures of SARS-CoV-2’s main protease, completing each of those screenings in under 24 hours.

To achieve those remarkable results, the team scaled AutoDock-GPU to 27,612 of Summit’s Nvidia V100 GPUs, ending up with a 350-fold speedup compared to the CPU version of the same code. The researchers faced an uphill battle on this front, as very few molecular docking codes have used GPUs, and fewer still are well-supported – let alone open-source. The researchers worked with Nvidia to create a CUDA version of the code for high-throughput analysis.

“When we were using Summit, we were docking 20,000 compounds a second,” said Ada Sedova, a biophysicist in the Molecular Biophysics Group within ORNL’s Biosciences Division and co-lead of the project. “We have done this in 24 hours with full optimization of these poses, the way people would normally do at the small scale. To be able to do this on a billion compounds would have taken months on even the largest academic clusters without the optimizations of AutoDock-GPU for Summit.”
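
As a rough back-of-the-envelope check on those figures, the sketch below assumes exactly one billion compounds and a sustained rate of 20,000 compounds per second. Both are round numbers taken from the quote rather than measured values, and the function name is hypothetical.

```python
def screening_hours(num_compounds: float, compounds_per_second: float) -> float:
    """Wall-clock hours needed to dock num_compounds at a constant rate."""
    if compounds_per_second <= 0:
        raise ValueError("rate must be positive")
    seconds = num_compounds / compounds_per_second
    return seconds / 3600.0


# Assumed round figures from the article: 1 billion compounds, 20,000 per second.
hours = screening_hours(1e9, 20_000)
print(f"{hours:.1f} hours")
```

Under those assumptions the docking itself takes roughly 14 hours, which is consistent with each screening finishing in under 24 hours.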

“We think that the rapid response to the COVID-19 pandemic that we stood up on Summit is essential to developing a forward-looking computational capability for future global health crises,” added Jens Glaser, a computational scientist at ORNL and another co-lead of the project. “Importantly, the speedup was realized end-to-end and contains necessary machine learning and data analytics components, and that allows us to incorporate feedback from experiments into the machine learning models and converge onto predictions and more potent inhibitors.”

A Population Data-Driven Workflow for COVID-19 Modeling and Learning

Team: Jonathan Ozik, Justin M. Wozniak, Nicholson Collier, Charles M. Macal and Mickael Binois.

A finalist team led by Argonne National Laboratory, meanwhile, used supercomputing for epidemiological analysis. Using Argonne’s Theta supercomputer (39th on the most recent Top500), the team modeled how COVID-19 spreads through populations using a city-scale representation of Chicago. The simulated Windy City was populated by 2.7 million digital individuals traveling among 1.2 million locations. The model was optimized to simultaneously run on more than 800 of Theta’s nodes.

“In ChiSIM [the Chicago Social Interaction Model], we represent every person in the city of Chicago as an individual, including their socioeconomic and demographic variables, their activities and the places they visit – schools and workplaces, for example – in the course of those activities,” explained Nicholson Collier, a senior software engineer at Argonne. “As the agents follow their activity schedules, they become colocated with other agents in a place and interact with them, leading to trillions of interactions over the course of the simulation.”
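
The colocation idea Collier describes can be illustrated with a deliberately tiny toy model. This is not ChiSIM or the team's code: the random hourly schedules, the transmission probability and every name below are assumptions made purely for illustration.

```python
import random
from collections import defaultdict


def simulate(num_agents=200, num_places=20, hours=24 * 7,
             p_transmit=0.02, initial_infected=3, seed=0):
    """Toy colocation model; returns (infected_count, interaction_count)."""
    rng = random.Random(seed)
    infected = set(range(initial_infected))
    interactions = 0
    for _ in range(hours):
        # Assumption: each agent picks a random place every hour.
        # A real model would follow per-person activity schedules instead.
        occupants = defaultdict(list)
        for agent in range(num_agents):
            occupants[rng.randrange(num_places)].append(agent)

        newly_infected = set()
        for people in occupants.values():
            n = len(people)
            interactions += n * (n - 1) // 2  # every colocated pair interacts
            sick = sum(1 for a in people if a in infected)
            if sick == 0:
                continue
            # Probability that a susceptible agent avoids infection
            # from every infectious agent sharing the place this hour.
            p_escape = (1.0 - p_transmit) ** sick
            for a in people:
                if a not in infected and rng.random() > p_escape:
                    newly_infected.add(a)
        infected |= newly_infected
    return len(infected), interactions


if __name__ == "__main__":
    total_infected, total_interactions = simulate()
    print(total_infected, total_interactions)
```

Even at this scale the interaction count grows with the square of each place's occupancy, which hints at why a city of 2.7 million agents produces trillions of interactions.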

“With this model, you have potentially many people interacting in many different ways: some might be infected, some might be susceptible, and they mix in different proportions in a variety of different locations – there are different locations like schools and workplaces where very different parts of the population interface,” said Jonathan Ozik, an Argonne computational scientist and co-lead of the project. “The multitude of possibilities the model presents make it quite qualitatively different from – and quantitatively more complex than – a statistical model or more simplified compartmental models, which are much faster to run.”

Throughout the pandemic, results from the team’s model, CityCOVID, have been used to inform stakeholders and decision-makers, particularly in Chicago and the state of Illinois.

Enabling Rapid COVID-19 Small Molecule Drug Design Through Scalable Deep Learning of Generative Models

Team: Sam Ade Jacobs, Tim Moon, Kevin McLoughlin, William D. Jones, David Hysom, Dong H. Ahn, John Gyllenhaal, Pythagoras Watson, Felice C. Lightstone, Jonathan E. Allen, Ian Karlin and Brian Van Essen.

Another finalist team hailed from LLNL, where researchers used Sierra (which recently defended its title as the third-most powerful publicly ranked supercomputer) to create an accurate, efficient generative model for producing novel compounds with the potential to treat COVID-19. Training the model on over 1.6 billion small-molecule compounds, the team cut the training time from a day to just 23 minutes.

“Drug design is both costly in time and effort,” said Brian Van Essen, a computer scientist and leader of the Informatics Group at LLNL. “It’s normally a 15-year process to bring a new therapeutic from discovery all the way through FDA review.” The goal, he said, was not only to greatly condense the time frame of the first two trial phases, but also to reduce the high risk of failure in phase three trials.

“Our globally asynchronous multi-level parallel training approach strong scales to all of Sierra with up to 97.7 percent efficiency,” the researchers wrote, adding that they achieved 318 petaflops for 17.1 percent of half-precision peak using tensor cores. The researchers say that their model can be used to create an automated “self-learning design loop” for drug discovery, even with much less impressive computing resources than Sierra.
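
For readers unfamiliar with the metrics in that quote, here is a minimal sketch of how strong-scaling efficiency and fraction-of-peak figures are commonly computed. The timings in the example are hypothetical, chosen only so the arithmetic lands near the quoted 97.7 percent. They are not the team's measurements, and the function names are illustrative.

```python
def strong_scaling_efficiency(t_base, n_base, t_scaled, n_scaled):
    """Fraction of ideal speedup kept when a fixed-size problem uses more nodes."""
    ideal_speedup = n_scaled / n_base
    actual_speedup = t_base / t_scaled
    return actual_speedup / ideal_speedup


def implied_peak(achieved, fraction_of_peak):
    """Peak rate implied by an achieved rate and the fraction of peak it represents."""
    return achieved / fraction_of_peak


# Hypothetical timings: 100 s on 1 node, 12.8 s on 8 nodes.
eff = strong_scaling_efficiency(t_base=100.0, n_base=1, t_scaled=12.8, n_scaled=8)
print(f"{eff:.1%}")  # 97.7%

# Quoted figures: 318 petaflops at 17.1 percent of half-precision peak.
print(f"{implied_peak(318, 0.171):,.0f} petaflops")  # 1,860 petaflops
```

The second calculation is plain arithmetic on the quoted numbers: 318 petaflops at 17.1 percent implies a half-precision peak of roughly 1,860 petaflops for the hardware used in that run.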

“This capability will have a dramatic impact on drug discovery,” said Ian Karlin, an LLNL computer scientist who co-authored the paper. “This ability to quickly create high-quality machine learning models changes the time-to-insight from a compute-limited issue to a human-limited one.”

Next, the researchers want to improve the scaling even further, train using more types of models, increase automation and improve overall efficiency.

And also…

Don’t forget to check out our coverage of the winners and finalists for the 2020 ACM Gordon Bell Prize.