In this regular feature, HPCwire highlights newly published research in the high-performance computing community and related domains. From parallel programming to exascale to quantum computing, the details are here.

Deducing the properties of galaxy mergers using HPC-powered deep neural networks

These authors from Intel, minds.ai, Leiden University and the SURF Cooperative propose DeepGalaxy, “a visual analysis framework trained to predict the physical properties of galaxy mergers based on their morphology.” They tested DeepGalaxy on the Endeavour supercomputer at NASA, where “scaling efficiency [exceeded] 0.93 when trained on 128 workers[.]” “Without having to carry out expensive numerical simulations,” the researchers wrote, “DeepGalaxy … achieves a speedup factor of ∼10^5.”

Authors: Maxwell X. Cai, Jeroen Bedorf, Vikram A. Saletore, Valeriu Codreanu, Damian Podareanu, Adel Chaibi and Penny X. Qian.
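
For a sense of scale, the quoted efficiency can be turned into an implied speedup. The sketch below assumes the common definition of scaling efficiency as speedup divided by worker count; the paper may measure it differently (for example, by throughput), and the function name is purely illustrative.

```python
def implied_speedup(efficiency: float, workers: int) -> float:
    """Speedup implied by a scaling efficiency.

    Assumes efficiency = speedup / workers, which is a common
    definition but not necessarily the one used in the paper.
    """
    if workers < 1:
        raise ValueError("workers must be >= 1")
    return efficiency * workers


# Figures quoted in the summary above: efficiency 0.93 on 128 workers.
speedup = implied_speedup(0.93, 128)
print(f"Implied speedup on 128 workers: about {speedup:.0f}x")
```

Under that assumption, 128 workers would deliver roughly the throughput of 119 ideal workers.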

Modernizing particle-in-cell codes for exascale plasma simulation

In nuclear fusion research, particle-in-cell (or “PIC”) codes are used to describe how particles are distributed over time. This trio of researchers from the University of Ljubljana in Slovenia introduces algorithmic modernizations of key PIC codes in preparation for the exascale era. In particular, they report that their approach “eliminates artificial grid-generated noise” and “offers a more direct method for treating complex simulation [cases].”

Authors: Ivona Vasileska, Pavel Tomšič and Leon Kos.

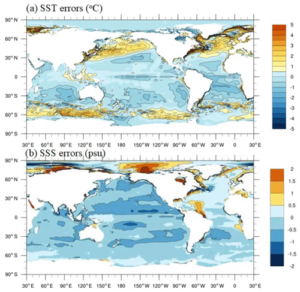

Optimizing the Community Earth System Model for Sunway TaihuLight

Sunway TaihuLight is the fourth most-powerful publicly ranked supercomputer in the world. In this paper, 44 authors across ten Chinese institutions and two American institutions describe the process of optimizing the high-resolution Community Earth System Model (CESM-HR, a fully coupled global climate model) on Sunway TaihuLight. The authors report a 3.4-fold increase in simulation speed as a result of their efforts.

Authors: Shaoqing Zhang, Haohuan Fu, Lixin Wu, Yuxuan Li, Hong Wang, Yunhui Zeng, Xiaohui Duan, Wubing Wan, Li Wang, Yuan Zhuang, et al.

Deducing GPU lifetimes on the Titan supercomputer

Oak Ridge’s Titan supercomputer placed in the top ten of the Top500 until 2019. In this paper, six Oak Ridge researchers analyze over 100,000 years of “GPU lifetime” data from Titan’s 18,688 GPUs. “Using time between failures analysis and statistical survival analysis techniques,” they write, “we find that GPU reliability is dependent on heat dissipation to an extent that strongly correlates with detailed nuances of the cooling architecture and job scheduling.” Based on their conclusions, they make recommendations for future supercomputers.

Authors: George Ostrouchov, Don Maxwell, Rizwan Ashraf, Christian Engelmann, Mallikarjun Shankar and James Rogers.
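
Survival analysis of this kind often starts from a Kaplan-Meier curve, which estimates the fraction of units still working over time while accounting for units that had not failed when observation ended. The following is a minimal textbook sketch on made-up numbers, not the authors' method or Titan data; the function and variable names are hypothetical.

```python
from collections import Counter


def kaplan_meier(durations, observed):
    """Return [(time, survival_probability)] at each distinct failure time.

    durations: how long each unit was observed
    observed:  True if the unit failed at that time, False if it was
               still working when observation ended (right-censored)
    """
    failures = Counter(t for t, failed in zip(durations, observed) if failed)
    exits = Counter(durations)  # failures and censorings both leave the risk set
    at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(exits):
        d = failures.get(t, 0)
        if d:
            # Multiply by the fraction of at-risk units that survive time t.
            survival *= 1.0 - d / at_risk
            curve.append((t, survival))
        at_risk -= exits[t]
    return curve


# Hypothetical GPU lifetimes in years (illustrative only, not Titan data).
years = [1.0, 2.0, 2.0, 3.0, 4.0, 5.0]
failed = [True, True, False, True, False, True]
curve = kaplan_meier(years, failed)
for t, s in curve:
    print(f"t = {t:.0f} y, estimated survival = {s:.3f}")
```

Censored units (here, the GPUs still running at 2 and 4 years) shrink the at-risk pool without counting as failures, which is what separates this estimate from a simple failure fraction.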

“High performance computing (HPC) user support teams are the first line of defense against large-scale problems, as they are often the first to learn of problems reported by users,” write these authors, a duo from Johns Hopkins University and Los Alamos National Laboratory (LANL). In their paper, they examine the user support ticketing system for the LANL HPC Consult Team, developing proof-of-concept tools for task automation.

Authors: Alexandra DeLucia and Elisabeth Moore.

Combining HPC with cloud tech to provide real-time insights into simulations

The expense of performing true in situ analysis of scientific simulation data on supercomputing systems has led to the growth of cloud-based big data approaches. However, the semantic differences between cloud systems and HPC systems can make the two difficult to combine. These authors from Oak Ridge National Laboratory and two U.S. universities introduce ElasticBroker, a tool that filters, aggregates, converts and steadily uploads scientific simulation data to a distinct cloud ecosystem, where it performs real-time data analysis.

Authors: Feng Li, Dali Wang, Feng Yan and Fengguang Song.

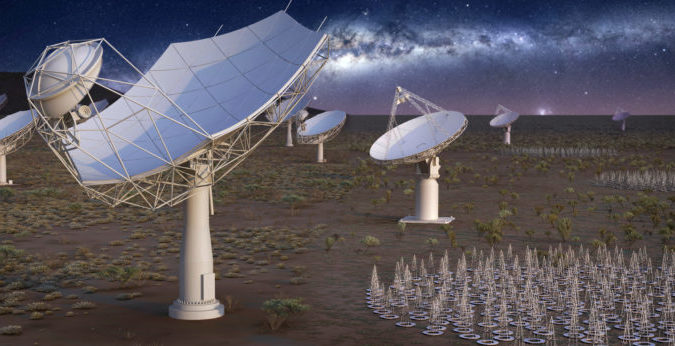

Processing Square Kilometre Array data on Summit

This paper (written by a dozen researchers from Oak Ridge National Lab, the University of Western Australia and other institutions) presents a workflow for simulating and processing full-scale data from the first phase of the Square Kilometre Array (SKA), which, when completed, will be the world’s largest radio telescope. To operate that workflow, the authors use Oak Ridge’s Summit supercomputer to simulate a “typical six-hour observation” and process that dataset with an imaging pipeline.

Authors: Ruonan Wang, Rodrigo Tobar, Markus Dolensky, Tao An, Andreas Wicenec, Chen Wu, Fred Dulwich, Norbert Podhorszki, Valentine Anantharaj, Eric Suchyta, Baoqiang Lao and Scott Klasky.

Do you know about research that should be included in next month’s list? If so, send us an email at [email protected]. We look forward to hearing from you.