The wait is over. Today Intel officially launched its 10nm datacenter CPU, the third-generation Intel Xeon Scalable processor, codenamed Ice Lake. With up to 40 “Sunny Cove” cores per processor, built-in acceleration and new instructions, the Ice Lake-SP platform offers a significant performance boost for AI, HPC, networking and cloud workloads, according to Intel.

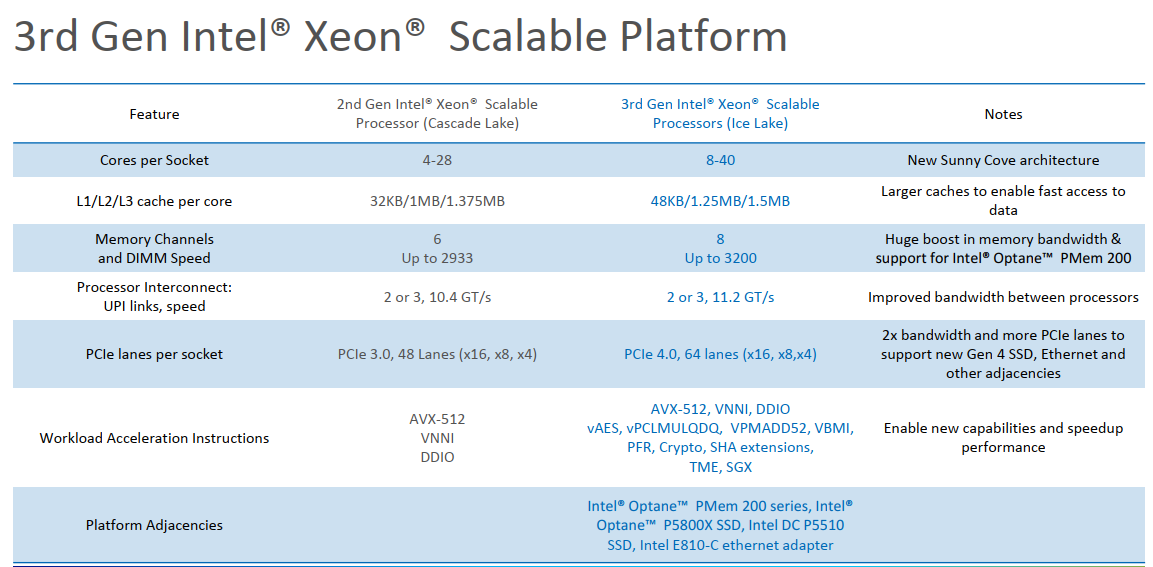

In addition to increasing the core count from 28 to 40 over previous-gen Cascade Lake, Ice Lake provides eight channels of DDR4-3200 memory per socket and up to 64 lanes of PCIe Gen4 per socket, compared to six channels of DDR4-2933 and up to 48 lanes of PCIe Gen3 per socket for the previous generation.
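To put the memory change in perspective, here is a rough back-of-the-envelope sketch of theoretical peak bandwidth per socket. It assumes the standard 64-bit (8-byte) DDR4 channel width, which the article does not state, and it ignores real-world efficiency losses, so the numbers are upper bounds rather than measured results.

```python
# Theoretical peak DRAM bandwidth per socket.
# Assumption (not from the article): each DDR4 channel has a 64-bit data bus.

BYTES_PER_TRANSFER = 8  # 64-bit DDR4 channel


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Return theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return channels * mega_transfers_per_s * 1e6 * BYTES_PER_TRANSFER / 1e9


# Channel counts and DDR4 speeds are the ones quoted in the article.
ice_lake = peak_bandwidth_gbs(channels=8, mega_transfers_per_s=3200)
cascade_lake = peak_bandwidth_gbs(channels=6, mega_transfers_per_s=2933)

print(f"Ice Lake:     {ice_lake:.1f} GB/s")
print(f"Cascade Lake: {cascade_lake:.1f} GB/s")
print(f"Ratio:        {ice_lake / cascade_lake:.2f}x")
```

Under these assumptions the sketch gives roughly 204.8 GB/s versus 140.8 GB/s, or about 1.45x, which is in the same range as the 47 percent Stream Triad gain Intel reports.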

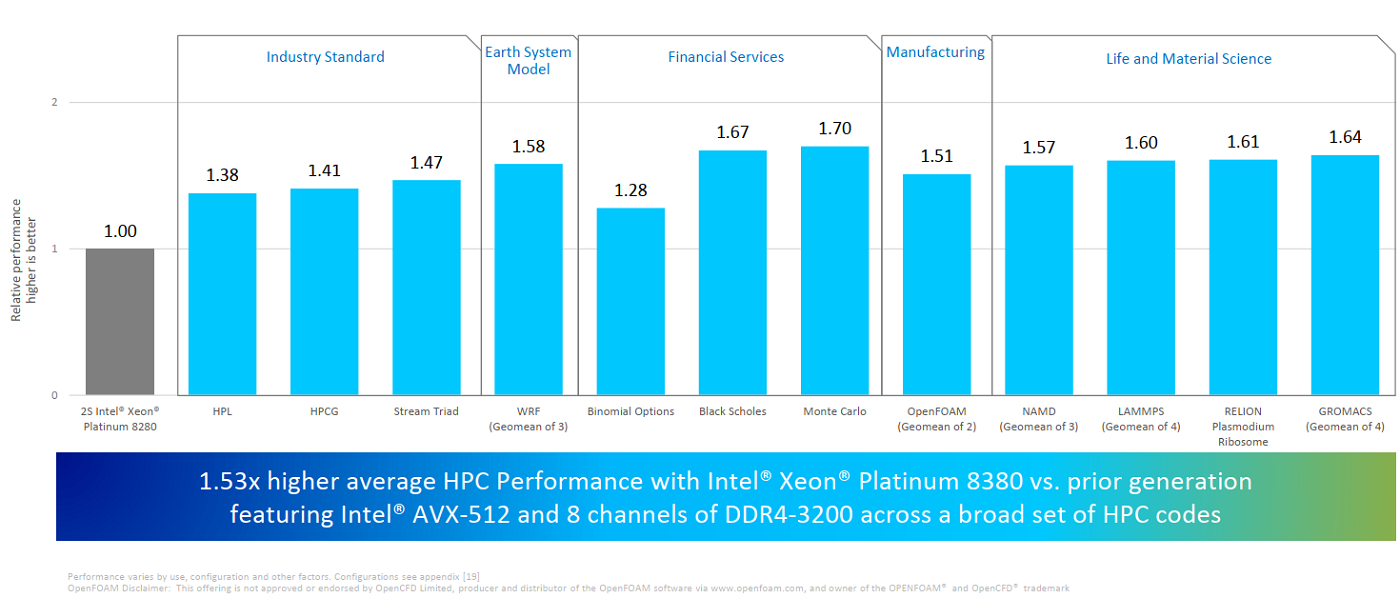

With these enhancements, along with AVX-512 for compute acceleration and DL Boost for AI acceleration, Ice Lake delivers an average 46 percent performance improvement for datacenter workloads and 53 percent higher average HPC performance, generation-over-generation, according to Intel. In early internal benchmarking*, Intel also showed Ice Lake outperforming the recently launched AMD third-generation Epyc processor, codenamed Milan, on key HPC, AI and cloud applications.

In an interview with HPCwire, Intel’s Vice President and General Manager of HPC Trish Damkroger highlighted the work that went into the Sunny Cove core as well as the HPC platform enhancements. “Having eight memory channels is key for memory bound workloads, and with the 40 cores along with AVX-512, the CPU shows great performance for a lot of workloads that are more compute bound,” she said. Damkroger further emphasized Intel’s Speed Select Technology (SST), which enables granular control over processor frequency, core count, and power. Although Speed Select was introduced on Cascade Lake, it previously only facilitated the configuring of frequency, but with Ice Lake, there is the added flexibility to dynamically adjust core count and power.

Using Intel’s Optane Persistent Memory (PMem) 200 series combined with traditional DRAM, the new Ice Lake processors support up to 6 terabytes of system memory per socket (versus 4.5 terabytes supported by Cascade Lake and Cascade Lake-Refresh). Optane PMem 200 is part of Intel’s datacenter portfolio targeting the new third-generation Xeon platform, along with Optane P5800X SSD, SSD D5-P5316 NAND, Intel Ethernet 800 series network adapters (offering up to 200GbE per PCIe 4.0 slot), and the company’s Agilex FPGAs.

In addition to moving to PCIe Gen4, which provides a 2X bandwidth increase compared with Gen3, the socket to socket interconnect rates for Ice Lake have increased nearly 7.7 percent for improved bandwidth between processors.
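The I/O figures can be checked with a short calculation. The per-lane signaling rates (8 GT/s for Gen3, 16 GT/s for Gen4), the 128b/130b encoding, and the socket-to-socket link rates (10.4 GT/s and 11.2 GT/s) are assumptions drawn from public specifications, not from the article; only the lane counts and the "nearly 7.7 percent" claim come from the text above. Treat this as a sketch of raw link bandwidth, not delivered throughput.

```python
# Per-direction PCIe bandwidth, before protocol overhead.
# Assumptions (not from the article): Gen3 = 8 GT/s and Gen4 = 16 GT/s per
# lane, both using 128b/130b line encoding.

ENCODING_EFFICIENCY = 128 / 130


def pcie_gbs(lanes: int, gt_per_s: float) -> float:
    """Usable bandwidth in GB/s per direction for a group of lanes."""
    return lanes * gt_per_s * ENCODING_EFFICIENCY / 8  # 8 bits per byte


per_lane_ratio = pcie_gbs(1, 16.0) / pcie_gbs(1, 8.0)
gen3_socket = pcie_gbs(48, 8.0)   # up to 48 lanes per socket (Cascade Lake)
gen4_socket = pcie_gbs(64, 16.0)  # up to 64 lanes per socket (Ice Lake)

# Assumed socket-to-socket link rates in GT/s (not stated in the article).
UPI_PREVIOUS, UPI_ICE_LAKE = 10.4, 11.2
upi_gain_pct = (UPI_ICE_LAKE - UPI_PREVIOUS) / UPI_PREVIOUS * 100

print(f"Per-lane Gen4/Gen3 ratio: {per_lane_ratio:.1f}x")
print(f"Per-socket PCIe: {gen3_socket:.1f} -> {gen4_socket:.1f} GB/s")
print(f"Interconnect rate increase: {upi_gain_pct:.2f} percent")
```

With those assumed rates, the per-lane ratio is 2x and the interconnect increase works out to about 7.69 percent, matching the figures quoted above. Because the lane count also rises from 48 to 64, the per-socket total grows by more than 2x.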

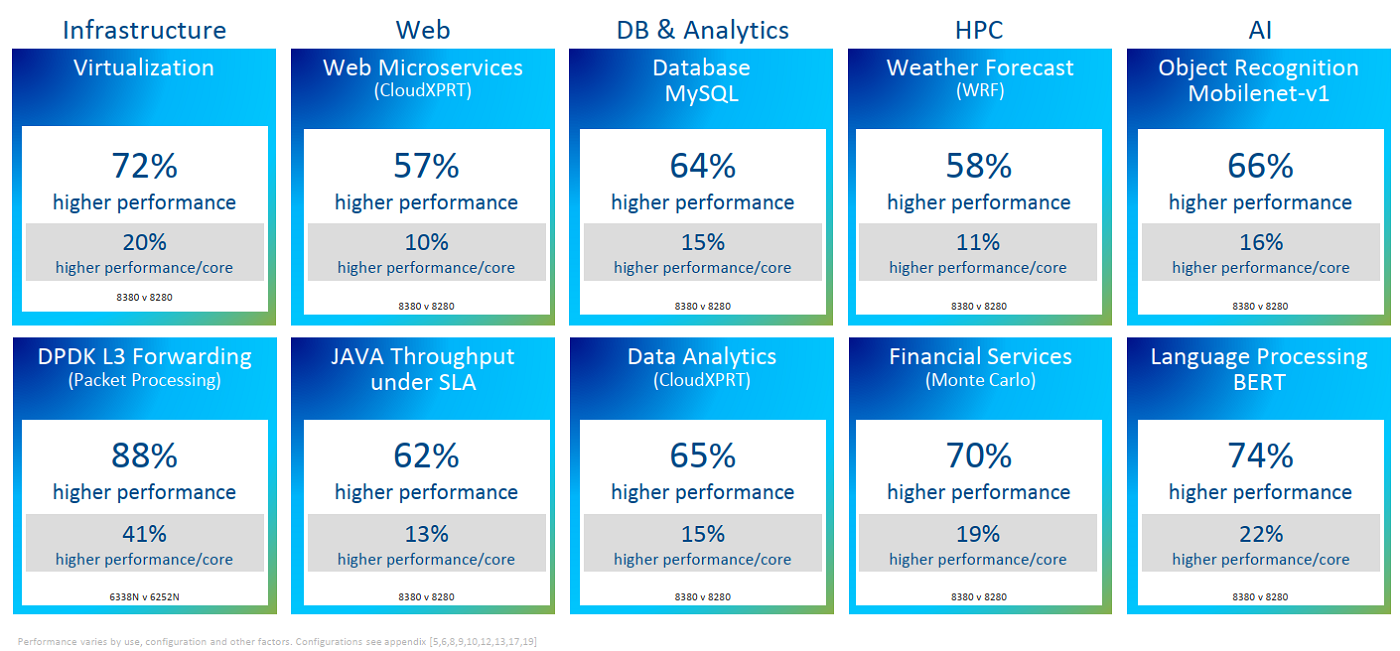

Gen over gen, Ice Lake delivers a 20 percent IPC improvement (28-core, ISO frequency, ISO compiler) and improved per-core performance on a range of workloads, shown on the slide below (comparing the 8380 to the 8280).

The combination of AVX-512 instructions (first implemented on the now-discontinued Intel Knights Landing Phi in 2016 and on Skylake in 2017) and the eight channels of DDR4-3200 memory are proving especially valuable for boosting HPC workloads. With AVX-512 enabled, the 40-core, top-bin 8380 Platinum Xeon achieves 62 percent better performance on Linpack, over AVX2.

Compared with the previous-gen Cascade Lake, the Ice Lake 8380 Xeon achieves 38 percent higher performance on Linpack, 41 percent higher performance on HPCG, and 47 percent faster performance on Stream Triad, in Intel testing.

These improvements on industry-standard benchmarking apps are reflected on application codes used in earth system modeling, financial services, manufacturing, as well as life and material science. The slide below shows improvements on a total of 12 HPC applications, including 58 percent higher performance on the weather forecasting code WRF, 70 percent improved performance on Monte Carlo, 51 percent speed-up on OpenFoam, and 57 percent improvement on NAMD.

Damkroger said that the 57 percent improvement on NAMD — a molecular dynamics code used in life sciences — is just the start. Intel worked with the NAMD team at the University of Illinois Urbana-Champaign to further optimize performance, achieving a 2.43X gen-over-gen performance boost (143 percent). “It’s all because of AVX-512 optimizations,” said Damkroger.
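The article mixes "X" multipliers and percent gains, so a small conversion sketch may help. The helper names are illustrative, and the last step assumes that the 57 percent and 2.43X figures share the same Cascade Lake baseline, which the article implies but does not state outright.

```python
def speedup_to_percent_gain(speedup: float) -> float:
    """Convert a multiplier such as 2.43x into a percent improvement."""
    return (speedup - 1.0) * 100.0


def percent_gain_to_speedup(percent: float) -> float:
    """Convert a percent improvement such as 57 into a multiplier."""
    return 1.0 + percent / 100.0


optimized_speedup = 2.43                         # gen over gen, after tuning
baseline_speedup = percent_gain_to_speedup(57)   # gen over gen, before tuning

# Extra gain attributable to the AVX-512 tuning alone, assuming both
# figures are measured against the same previous-generation baseline.
tuning_only_speedup = optimized_speedup / baseline_speedup

print(f"2.43x = {speedup_to_percent_gain(optimized_speedup):.0f} percent gain")
print(f"57 percent gain = {baseline_speedup:.2f}x")
print(f"Tuning alone: about {tuning_only_speedup:.2f}x")
```

Under that shared-baseline assumption, the optimization work by itself accounts for roughly a 1.55x improvement on top of the out-of-the-box result.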

At the University of Illinois’ OneAPI Center of Excellence, researchers are working to expand NAMD to support GPU architectures through the use of OneAPI’s open standards. “We’re preparing NAMD to run more optimally on the upcoming Aurora supercomputer at Argonne National Laboratory,” said Dave Hardy, senior research programmer, University of Illinois Urbana-Champaign.

Another illustrative use case comes from financial services, a field which is beset by space and power constraints (in New York City, for example) and which uses complex in-house software. Pointing to the 70 percent speedup for Monte Carlo simulations, gen over gen, and claiming a 50 percent improvement versus the competition (i.e., AMD’s 7nm Milan CPU), Damkroger said the gains are attributable to Ice Lake’s L1 and L2 cache sizes, the eight faster memory channels, and also the AVX-512 instructions. She indicated that more optimized results will be on the way. “Honestly, we just got our Milan parts in about a week ago,” said Damkroger. “We have all the information for Rome, but we obviously are doing these comparisons to the latest competition.”

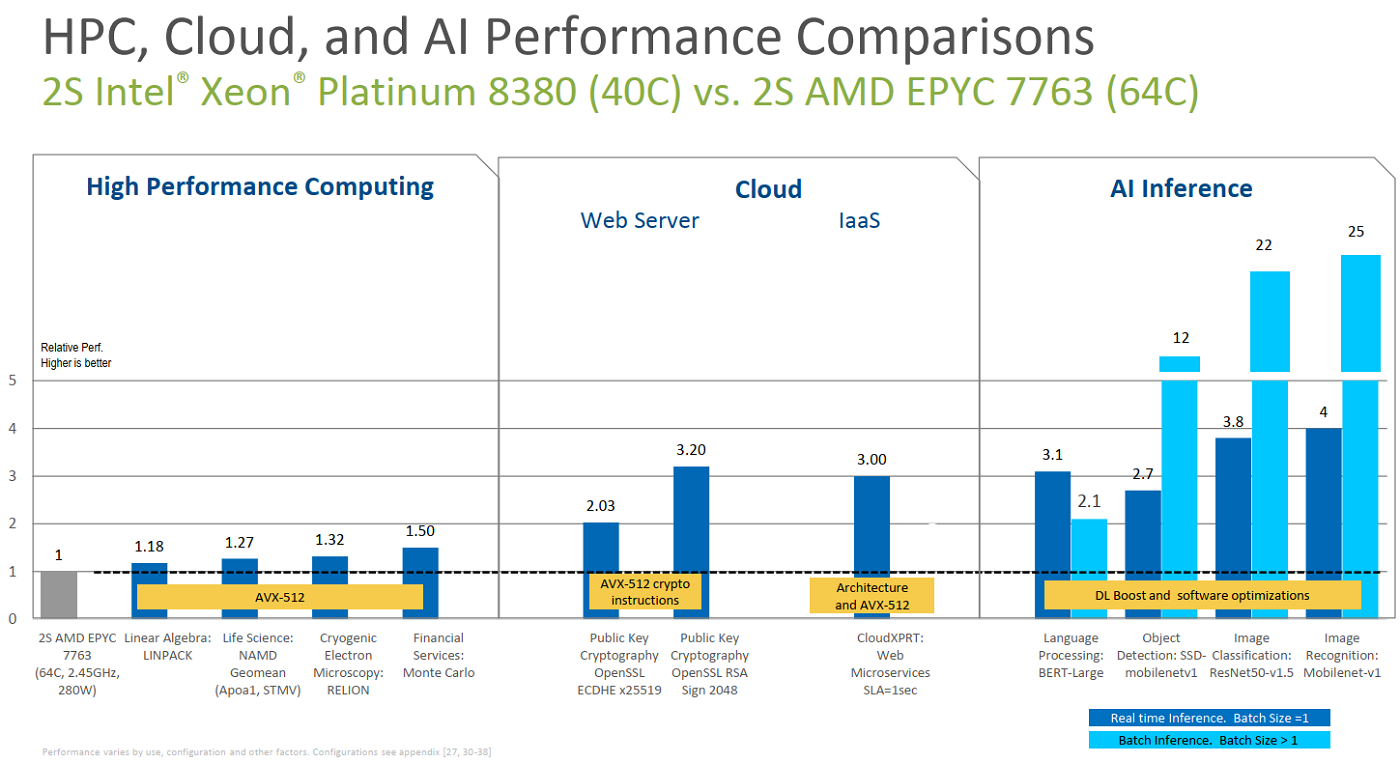

Intel has had the Milan parts in-house long enough to conduct some early competitive benchmarking*. The slide below (which Intel shared during a media pre-briefing last week) shows HPC, cloud and AI performance comparisons for the top Ice Lake part (40-core) versus the top AMD Epyc Milan part (64-core) in two-socket configurations. According to Intel’s testing, Ice Lake outperformed Milan by 18 percent on Linpack, by 27 percent on NAMD, and by 50 percent on Monte Carlo (as already stated).

Coming just three weeks after AMD’s Epyc Milan launch, the Intel Ice Lake launch pits third-gen Xeon against third-gen Epyc. “Intel’s positioning vis-a-vis AMD is certainly better than before,” said Dan Olds, chief research officer with Intersect360 Research. “I think it might be a toss up for customers; it’s going to depend on their workload and it’s going to depend on price-performance as usual, but whereas before today’s launch, it was kind of a lay-in to pick AMD, it’s not anymore. Looking at Intel’s benchmarking [gen over gen], WRF is almost 60 percent higher, Monte Carlo is 70 percent higher, Linpack is up 38 percent and HPCG is 41 percent higher — now that’s significant, HPCG is the torture test.”

“We’ll have to see what happens in the real world head to head with AMD,” Olds said, “but this puts Intel back in the game in a solid place. A 50 percent move from generation to generation is a big deal. It’s not quite Moore’s law, but it’s pretty solid.”

While AMD has gained significant ground since it reentered the datacenter arena with Epyc in 2017, Intel holds about 90 percent server market share. “Intel x86 is easily the dominant processor type in the global HPC market,” Steve Conway, senior advisor at Hyperion Research told HPCwire. The research firm’s studies show that Intel x86 will likely remain dominant through 2024, the end of their forecast period.

“Based on announced benchmarks, Ice Lake looks like an impressive technical advance,” said Conway. “We’ll know more as results on challenging real-world applications become available. Intel also has a pricing challenge against AMD, so it will be interesting to learn how prices for comparable SKUs compare. The most important Ice Lake benefit is that it’s designed to serve both established and emerging HPC markets effectively, especially AI, cloud, enterprise and edge computing. That’s the key to future success.”

Vik Malyala, senior vice president leading field application engineering at Supermicro, told HPCwire their customers were eager for the PCIe Gen 4 and the higher-core density provided in Ice Lake. “For our customers, many workloads have been optimized for Intel architecture for the longest time. That is the reason many of our customers were willing to wait as opposed to jumping to alternate offerings,” he said.

“AMD does have a process advantage, so we should not underestimate that,” Malyala said. “But at the same time, what I’m excited about is both of them are offering good performance. And customers can actually choose a platform not because something is not available, but both are available, so they can actually try it out and see which one that fits best within their budget and fits their application requirements.”

Built-in acceleration and security

For the artificial intelligence space, Intel says Ice Lake delivers up to 56 percent more AI inference performance for image classification than the previous generation, and offers up to a 66 percent boost for image recognition. For language processing, Ice Lake delivers up to 74 percent higher performance on batch inference gen-over-gen. And on ResNet50-v1.5, the new CPU delivers 4.3 times better performance using int8 via Intel’s DL Boost feature compared with using FP32.

“The convergence of AI and HPC is becoming a reality, and customers are thrilled that the 3rd Gen Intel Xeon Scalable processor enables a dynamic reconfigurable datacenter that supports diverse applications,” shared Intel’s Nash Palaniswamy, in an email exchange with HPCwire. “Our latest 3rd Gen Xeon Scalable processor is a powerhouse for AI workloads and delivers up to 25x performance on image recognition using our 40 core CPU compared to our competitor’s 64 core part,” said Palaniswamy, vice president and general manager of AI, HPC, datacenter accelerators solutions and sales at Intel.

The third-generation Xeon Scalable processors also add new security features, including Intel Software Guard Extensions (SGX) and Intel Total Memory Encryption (TME) for built-in security, and Intel Crypto Acceleration for streamlined processing of cryptographic algorithms. Over 200 ISVs and partners have deployed Intel SGX, according to Intel.

The SKU stack

The Ice Lake family includes 56 SKUs, grouped across 10 segments (SKU chart graphic): 13 are optimized for highest per-core scalable performance (8 to 40 cores, 140-270 watts), 10 for scalable performance (8 to 32 cores, 105-205 watts), 15 target four- and eight-socket systems (18 to 28 cores, 150-250 watts), and there are three single-socket optimized parts (24 to 36 cores, 185-225 watts). There are also SKUs optimized for cloud, networking, media and other workloads. All but four SKUs support Intel Optane PMem 200 series technology.

Reserved for liquid cooling environments, the 38-core 8368Q Platinum Xeon dials up the frequency of the standard 38-core 8368, increasing the base clock from 2.4 GHz to 2.6 GHz, all-core turbo from 3.2 GHz to 3.3 GHz, and single-core turbo from 3.4 GHz to 3.7 GHz.

At the top of the SKU mountain is the 8380 with 40 cores, a frequency of 2.3 GHz (base), 3.0 GHz (turbo) and 3.4 GHz (single-core turbo), offering 60 MB cache in a 270 watt TDP. Compared to the Cascade Lake 8280, the 8380 provides 12 additional cores and runs 65 watts hotter. The suggested customer price for the new 8380 is $8099, which is actually about 19 percent less than the list price on the 8280 ($10009).
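A quick sketch makes the pricing comparison concrete. Core counts and list prices are the ones given above; the `Sku` class and the per-core metric are illustrative conveniences, not an Intel pricing model, and list prices rarely reflect what volume customers actually pay.

```python
from dataclasses import dataclass


@dataclass
class Sku:
    """Hypothetical container for the figures quoted in the article."""
    name: str
    cores: int
    list_price_usd: int

    @property
    def price_per_core(self) -> float:
        return self.list_price_usd / self.cores


ice_lake_8380 = Sku("8380", cores=40, list_price_usd=8099)
cascade_lake_8280 = Sku("8280", cores=28, list_price_usd=10009)

# Percent reduction in list price from the 8280 to the 8380.
discount_pct = (
    1 - ice_lake_8380.list_price_usd / cascade_lake_8280.list_price_usd
) * 100

print(f"List price reduction: {discount_pct:.1f} percent")
for sku in (ice_lake_8380, cascade_lake_8280):
    print(f"{sku.name}: ${sku.price_per_core:.2f} per core")
```

The list price drops by about 19.1 percent, and because the core count rises at the same time, the list price per core falls from roughly $357 to roughly $202.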

Intel has not said publicly if it plans to release a multi-chip module (MCM) version of Ice Lake, as a follow-on to the 56-core Cascade Lake-AP part. Intel could conceivably deliver an 80-core ICL-AP but given the 270 watt power envelope of the 8380, that may not be feasible from a thermal standpoint.

Supermicro’s Malyala approves of Intel’s approach to segment-specific SKUs and the near-complete support for Optane PMem across the stack. “It was a pretty big headache for a lot of people with Cascade Lake and Cascade Lake Refresh in terms of them trying to figure out how to bring all these features and which ones to enable. It’s a lot cleaner now with Ice Lake,” he said. “There’s a virtualization SKU, a networking SKU, single-socket, long lifecycle. Presenting it this way helps customers to pick and choose because now the product portfolio has exploded, right? So how do people know which one to pick? That is addressed to some extent with the segment-specific SKUs, which also helps us, Supermicro, to validate these in our products.”

In a pre-briefing held last week, Intel said the Ice Lake ramp, which commenced in the final quarter of last year, is going well. The company has shipped more than 200,000 units in the first quarter of 2021 and reports broad industry adoption across all market segments, with more than 250 design wins across 50 unique OEM and ODM partners and over 20 publicly announced HPC adopters.

Prominent HPC customers who have received shipments so far include LRZ and Max Planck (in Germany), Cineca (in Italy), the Korea Meteorological Administration (KMA), as well as the National Institute of Advanced Industrial Science and Technology (AIST), the University of Tokyo and Osaka University (in Japan).

The third-generation Xeon products are available now through a number of OEMs, ODMs, cloud providers and channel partners. Launch partners Cisco, Dell, Gigabyte, HPE, Lenovo, Supermicro and Tyan (among others) are introducing new or refreshed servers based on the new Intel CPUs, and Oracle has announced compute instances backed by the new Xeons in limited preview with general availability on April 28, 2021. More announcements will be made in the days and weeks to come.

* Benchmarking details at https://edc.intel.com/content/www/us/en/products/performance/benchmarks/intel-xeon-scalable-processors/