Currently, there are many qubit technologies vying for sway in quantum computing. So far, superconducting (IBM, Google) and trapped ion (IonQ, Quantinuum) qubits have dominated the conversation. Microsoft’s proposed topological qubit, which relies on the existence of a still-unproven particle (Majorana), may be the most intriguing. Recently, neutral atom approaches have quickened pulses in the quantum community. Advocates argue the technology is inherently more scalable and offers longer coherence times (key for error correction), and they point to proof-of-concept 100-qubit systems that have already been built.

Atom Computing, founded in 2018, is one of the neutral atom quantum computing pioneers. Last week, it announced a successful Series B funding round ($60M). It currently has a 100-qubit system (Phoenix) and says it will use the latest cash infusion to build its launch system (Valkyrie), which will have a much larger qubit count and will likely be formally announced in 2022 and brought to market in 2023. It’s also touting a 40-second coherence time (nuclear spin qubit), which the company says is a world record.

“Our first product will launch as a cloud service initially, probably with a partner. And we know which one or two partners [we] want to go with, and just haven’t signed any contracts yet. We’re still kind of negotiating the T’s and C’s,” said Rob Hays, Atom Computing’s relatively new (July ’21) CEO and president. Most recently, Hays was the chief strategy officer at Lenovo. Before that, he spent 20-plus years in Intel’s datacenter group, working on Xeon, GPUs and Omni-Path products.

Company founder Ben Bloom shifted to CTO with Hays’ arrival. Bloom’s Ph.D. work was cold atom quantum research, done with Jun Ye at the University of Colorado. Notably, Ye recently won the 2022 Breakthrough Prize in Fundamental Physics[i] for “outstanding contributions to the invention and development of the optical lattice clock, which enables precision tests of the fundamental laws of nature.” Ye is on Atom’s science advisory board. Company headcount is now roughly 40, and the next leg in its journey is to bring a commercial neutral atom system to market.

If this formula sounds familiar, that’s because it is. There’s been a proliferation of quantum computing startups founded by prominent quantum researchers who, after producing POC systems, bring on veteran electronics industry executives to grow the company. (Link to a growing list of quantum computing/communication companies).

All of the newer emerging qubit technologies are drawing attention. Quantum market watcher Bob Sorensen of Hyperion Research told HPCwire, “I am somewhat of a fan of neutral atom qubit technology. It’s room temp, it has impressive coherence times, but to me, most importantly, it shows good promise for scaling to a large single qubit processor. Atom, along with France’s Pasqal, are committed to the technology and they are getting the funding and additional support to keep on their development and deployment track. So we do need to keep an eye on their progress.”

So what is neutral atom-based quantum computing? Bloom and Hays recently briefed HPCwire on the company’s technology and plans.

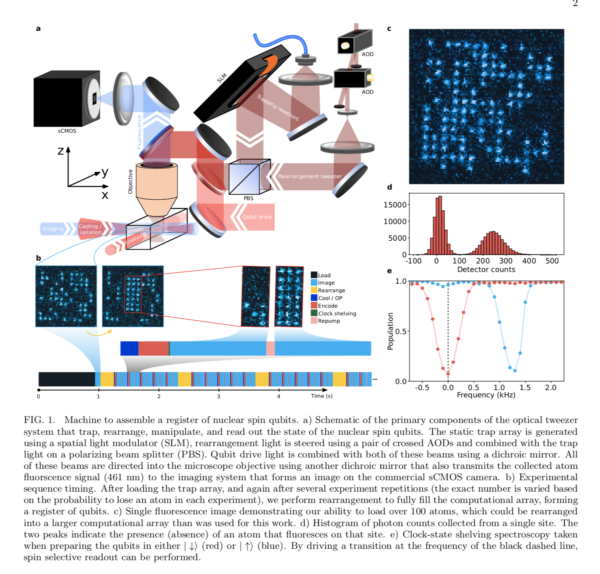

Broadly, neutral atom qubit technology shares much with trapped ion technology — except, obviously, the atoms aren’t charged. Instead of confining ions with electromagnetic forces, neutral atom approaches use light to trap atoms and hold them in position. The qubits are the atoms whose nuclear magnetic spin states (levels) are manipulated to set the qubit state. Atom has written a recent paper (Assembly and coherent control of a register of nuclear spin qubits) describing its approach.

Bloom said, “We use atoms in the second column of the periodic table (alkaline earth metals). All those atoms share properties. We use strontium, but it doesn’t actually have to have been strontium, it could have been any one in that column. Similar to trapped ion technology, we capture single atoms, and we optically trap them. We create this optical trapping landscape with lasers. The nice thing about this is every atom you trap and you put in those light traps is exactly the same. The coherence times you can make are really, really long. It was kind of only theorized you could create them that long, but now we’ve shown that you can create them that long.”

Hays describes the apparatus. “We put some strontium crystals and a little oven next to the vacuum chamber. There’s a little tube that [takes in] gaseous form of strontium as they get heated up and off-gassed. The atoms are sucked into the vacuum chamber. Then we shine lasers through the little windows in the vacuum chamber to [form] a grid of light and the little individual atoms that are floating around in there get stuck like a magnet to those spots of light. Once we get them stuck in space, we can actually move them if we want and we can write quantum information with them using a separate set of lasers at a different wavelength. We’ve got a camera that sits under the microscope objective in the top of the system that reads out the results.

“All that gets fed back into a standard rack of servers that’s running our software stack, you know, the classic compute system off to the side. That’s running our operating system, our scheduler, all the APIs for the access, programming, data storage. That rack also has our proprietary radio frequency control system, which is how we control the lasers. And we’re basically just controlling how many spots of light there are, and what the frequency, phase and amplitude is of those spots of light. People interact with it remotely.”

It’s pretty cool. Think of a cloud of atoms trapped in the vacuum chamber. Lasers are shined through the cloud along X and Y axes (2D). Wherever the beams intersect, a sticky spot is created, and nearby atoms get stuck in those spots. You don’t get 100 percent filled sticky spots on the first pass, but Atom has demonstrated the ability to move individual atoms to fill in open spots. The result is a 10×10 array of stuck neutral atoms which serve as qubits at addressable locations. The trapped atoms are spaced four microns apart, which is far enough to prevent nuclear spin (qubit state) interaction.
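
The load-then-fill idea can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not Atom Computing's procedure: the 16×16 grid of traps surrounding the 10×10 target, the 50 percent chance that any trap catches an atom, and the simple first-come pairing of spare atoms with empty target sites are all hypothetical choices made for illustration, and the physical paths the atoms would travel are ignored.

```python
import random


def load_traps(rows, cols, fill_prob, rng):
    """Randomly load a grid of traps; True means the site holds an atom.

    fill_prob is an assumed per-site loading probability (hypothetical).
    """
    return [[rng.random() < fill_prob for _ in range(cols)] for _ in range(rows)]


def rearrange(grid, target_rows, target_cols):
    """Fill vacancies in the top-left target block using atoms outside it.

    Pairs each empty target site with a spare atom in scan order, updates
    the grid in place, and returns the list of (source, destination) moves.
    """
    rows, cols = len(grid), len(grid[0])
    vacancies = [(r, c) for r in range(target_rows) for c in range(target_cols)
                 if not grid[r][c]]
    spares = [(r, c) for r in range(rows) for c in range(cols)
              if grid[r][c] and not (r < target_rows and c < target_cols)]
    moves = []
    for dst, src in zip(vacancies, spares):
        grid[src[0]][src[1]] = False  # atom leaves its original trap
        grid[dst[0]][dst[1]] = True   # and lands in the empty target site
        moves.append((src, dst))
    return moves


rng = random.Random(0)
grid = load_traps(16, 16, 0.5, rng)  # assumed reservoir size and fill rate
before = sum(grid[r][c] for r in range(10) for c in range(10))
moves = rearrange(grid, 10, 10)
after = sum(grid[r][c] for r in range(10) for c in range(10))
print(f"target sites filled: {before} -> {after} using {len(moves)} moves")
```

The point of the sketch is that a partially filled array plus the ability to relocate single atoms is enough to end up with a fully occupied register, provided enough spare atoms were caught on the first pass.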

Entanglement between qubits is accomplished by pumping the atoms up into a Rydberg state. This basically puffs up the atoms’ outer shell, enlarging their spatial footprint, and permits them to become entangled with neighbors. This is how Atom Computing gets two-qubit gates.
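
To see what a two-qubit gate buys you, here is a small state-vector sketch in plain Python. It is an illustration only: the article does not say which gate Atom Computing implements, so a controlled-Z (CZ) gate is assumed here as a common textbook entangling gate, and the function names are made up for this example.

```python
import math


def hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a two-qubit state.

    state holds amplitudes for |00>, |01>, |10>, |11>;
    qubit 0 is the left bit and qubit 1 is the right bit.
    """
    s = 1 / math.sqrt(2)
    mask = 1 << (1 - qubit)
    out = [0.0] * 4
    for i, amp in enumerate(state):
        i0, i1 = i & ~mask, i | mask  # same basis state with the bit 0 / 1
        if i & mask:                  # H|1> = (|0> - |1>) / sqrt(2)
            out[i0] += s * amp
            out[i1] -= s * amp
        else:                         # H|0> = (|0> + |1>) / sqrt(2)
            out[i0] += s * amp
            out[i1] += s * amp
    return out


def cz(state):
    """Controlled-Z: flip the sign of the |11> amplitude (assumed gate)."""
    out = list(state)
    out[3] = -out[3]
    return out


state = [1.0, 0.0, 0.0, 0.0]   # start in |00>
state = hadamard(state, 0)
state = hadamard(state, 1)
state = cz(state)              # the only step that couples the two qubits
state = hadamard(state, 1)
print([round(a, 3) for a in state])  # amplitudes only on |00> and |11>
```

The final state is an equal superposition of |00> and |11>, which cannot be written as a product of two single-qubit states. The single-qubit Hadamards alone cannot produce it; the two-qubit step is what creates the entanglement.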

“To scale the system, we just simply create more spots of light, so instead of like a 10 by 10 array, if we went to a 100 by 100 array of lasers, then we get to 10,000 qubits and if we went to 1000 by 1000 we get to a million qubits,” said Hays. “So, at four microns [apart] to get to a million qubits we’re still less than a millimeter on a side in a cube. And it’s all, again, wireless control. We don’t have to worry about cabling up the different chips together and then putting them in a dilution refrigerator and all that kind of stuff; we just put more spots of light in the same vacuum chamber and read them with the control systems in the cameras.”
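
The arithmetic behind the scaling claim is easy to check. The sketch below assumes a uniform four-micron pitch and measures an array's side from the first trap to the last; the function names are illustrative. Under those assumptions, "less than a millimeter on a side" fits a 3D cube with 100 traps per edge, while a flat 1000 by 1000 array at the same pitch would span roughly four millimeters.

```python
SPACING_UM = 4.0  # trap spacing quoted in the article, in microns


def square_array(n):
    """Return (qubit count, side length in mm) for an n x n 2D array."""
    qubits = n * n
    side_mm = (n - 1) * SPACING_UM / 1000.0
    return qubits, side_mm


def cube_array(n):
    """Return (qubit count, edge length in mm) for an n x n x n 3D array."""
    qubits = n ** 3
    edge_mm = (n - 1) * SPACING_UM / 1000.0
    return qubits, edge_mm


for n in (10, 100, 1000):
    qubits, side = square_array(n)
    print(f"2D {n} x {n}: {qubits:,} qubits, {side:.3f} mm on a side")

qubits, edge = cube_array(100)
print(f"3D 100 x 100 x 100: {qubits:,} qubits, {edge:.3f} mm on an edge")
```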

Hays noted that achieving 3D arrays is possible, but much trickier. Currently, Atom Computing is focused on 2D arrays. The current 100-qubit system was built with lots of hand-tuning and was intended for experimental flexibility. Moving forward, said Hays, CAD tools with an emphasis on manufacturability and use efficiency will guide development of the Valkyrie system. Bloom and Hays declined to say how many qubits it would have.

It will be interesting to watch the ongoing jostling among qubit technologies.

Sorensen said, “I still think it is too early to start picking winners and losers in the qubit modality race… and isn’t that part of the fun right now? In reality, there are lots of variables to consider besides qubit count and other qubit specific technical parameters. To me, increasingly, the goal to focus on is not on how to build a qubit, but how to build a processor. That is why when I look at a modality, I consider its overall architectural potential: does it scale, can you do reasonable I/O to the classical side, does it have ready solutions for networking, and does it require esoteric equipment to manufacture and/or operate in a traditional compute environment?”

The issue, says Sorensen, is that there are many factors to consider here, so the specific modality may not be the only valid indicator of the winner: “IBM, Quantinuum, Rigetti, and IonQ are quite visible in the sector, representing a range of modalities, but they recognize that they need to bring more to the table in terms of vision, experience, market philosophy, and end use relevance. The smart players know that it is entirely possible, as we have seen in the past, that the best pure technology does not always win in the final market analysis.”

Hays emphasizes Atom Computing is a hardware company and will work with the growing ecosystem for other tools: “We’re focusing on the hardware and the necessary software levels – operating system, scheduler, APIs, etc. – that allow people to interact with the system. [For other needs] we’re working with the ecosystem. We’re going to support Qiskit; we support it internally, and for whichever cloud service provider we choose to go to market with, we’ll support their tool suite as well. Then there’s companies like QC Ware, Zapata, Classiq and others that are building their own platforms. We’re going to be very partner friendly.”

Atom Computing says it has early collaborators but it’s hard to judge progress without fuller public access to the system. It will be interesting to see just how big (qubit count) the forthcoming system ends up being, and also what benchmarks Atom Computing supplies to the community along the way.

Figure describing Atom Computing’s approach, from its paper.

[i] The Breakthrough Prize in Fundamental Physics is awarded by the Fundamental Physics Prize Foundation, a not-for-profit organization dedicated to awarding physicists involved in fundamental research. The foundation was founded in July 2012 by Russian physicist and internet entrepreneur Yuri Milner.

As of September 2018, this prize is the most lucrative academic prize in the world and is more than twice the amount given to the Nobel Prize awardees. This prize is also dubbed by the media as the “XXI Century Nobel”.