Intel held its 2022 investor meeting yesterday, covering everything from the imminent Sapphire Rapids CPUs to the hotly anticipated (and delayed) Ponte Vecchio GPUs. But somewhat buried in its summary of the meeting was a new namedrop: “Falcon Shores,” described as “a new architecture that will bring x86 and Xe GPU together into a single socket.”

The reveal was brief, delivered by Raja Koduri (senior vice president and general manager of the Accelerated Computing Systems and Graphics [AXG] Group at Intel) over the course of just a couple minutes toward the end of a virtual breakout session.

Falcon Shores

“We are working on a brand new architecture codenamed Falcon Shores,” Koduri said. “Falcon Shores will bring x86 and Xe GPU acceleration together into a Xeon socket, taking advantage of next-generation packaging, memory, and I/O technologies, giving huge performance and efficiency improvements for systems computing large datasets and training gigantic AI models.”

“We expect Falcon Shores to deliver more than 5× performance per watt, more than [a] 5× compute density increase and more than 5× memory capacity and bandwidth improvement [relative to current platforms], all in a single socket with a vastly simplified GPU programming model,” he continued. “Falcon Shores is built on top of an impressive array of technologies … including the Angstrom-era process technology, next-generation packaging, new extreme bandwidth shared memory being developed by Intel, and industry-leading I/O. We are super excited about this architecture as it brings acceleration to a much broader range of workloads than the current discrete solutions.”

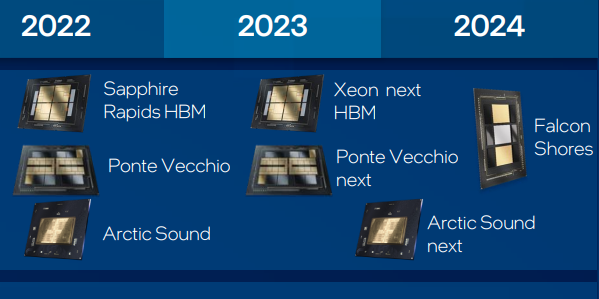

According to the roadmap, Falcon Shores is slated for 2024.

Coup de Grace?

One might compare the concept of Intel’s Falcon Shores to Nvidia’s forthcoming Grace CPU—its first Arm-based processor, and one designed (like Falcon Shores) to tightly couple with its in-house GPUs. In Nvidia’s case, Grace will use next-generation Nvidia NVLink and future-generation Arm Neoverse cores to couple with GPUs at a one-to-one CPU-GPU ratio. Grace was announced almost a year ago (April 2021) and Nvidia is slating it for 2023, a year earlier than Falcon Shores.

Sapphire Rapids

These are all, however, a ways off—and Koduri also took the time to highlight items much closer on the horizon than Falcon Shores. Sapphire Rapids, for instance—Intel’s next-gen Xeon—will start shipping next month to select customers.

“Sapphire raises the bar and sets a new standard in the industry for workload-optimized performance,” said Sandra Rivera, executive vice president and general manager of the Datacenter and AI Group at Intel, during the event. “Sapphire Rapids will also lead the industry in important memory and interconnect standards. For example, PCIe [and] DDR5, as well as the new high-speed cache coherent interconnect CXL, a standard that Intel led on in the industry.”

Sapphire Rapids will also come in a high-bandwidth memory (HBM) flavor, which Intel stressed throughout the event. “Our strategy is to build on this foundation [with Xeon] and extend this to even higher compute and memory bandwidth,” Koduri said. “First, we are bringing high-bandwidth memory—or HBM—integrated into the package with the Xeon CPU, offering GPU-like memory bandwidths to CPU workloads.” Sapphire Rappids with HBM is expected to ship, Koduri said, in the second half of 2022.

Ponte Vecchio

Slightly further out than Sapphire Rapids is Ponte Vecchio, Intel’s discrete Xe GPU that is slated to serve as the heart of the United States’ Aurora exascale supercomputer. Aurora—initially scheduled for 2021—is now (ostensibly) scheduled for late 2022, in lockstep with Intel’s process node challenges and the resulting delays for Ponte Vecchio. Originally intended to use Intel’s 7nm process (now known as “Intel 4”), the main compute tile of Ponte Vecchio is now being manufactured on TSMC’s N5 process.

“We are on track to deliver this GPU for [the] Aurora supercomputer program later this year,” Koduri assured listeners. “We are making steady progress on this product, and we are excited to show some early leadership performance results on this GPU.” To that end, he presented performance comparisons against an unidentified competitor product on a financial services workload benchmark, saying pre-production Ponte Vecchio units showed a “significant performance improvement over the best solution in the market today.”

oneAPI

Gelling Intel’s near-term strategy is its oneAPI programming model.

“Combining Ponte Vecchio with Xeon HBM is great from a hardware perspective,” Koduri said, “but equally important is bringing a way to seamlessly and transparently take advantage of that hardware technology with the existing base of Xeon HPC and AI software. That is where our OneAPI open ecosystem comes into play. We architected OneAPI to leverage the Xeon software ecosystem seamlessly, allowing software developers to work across a range of CPUs and accelerators with a single codebase. By making OneAPI an open ecosystem, we move the barriers of [the] closed, proprietary programming environment that current GPU accelerators use in HPC and AI.”

Koduri added that Intel expects “tremendous momentum in developer adoption of our open approach this year as Xeon HBM and [Ponte Vecchio] get more accessible to everyone.”

… and beyond

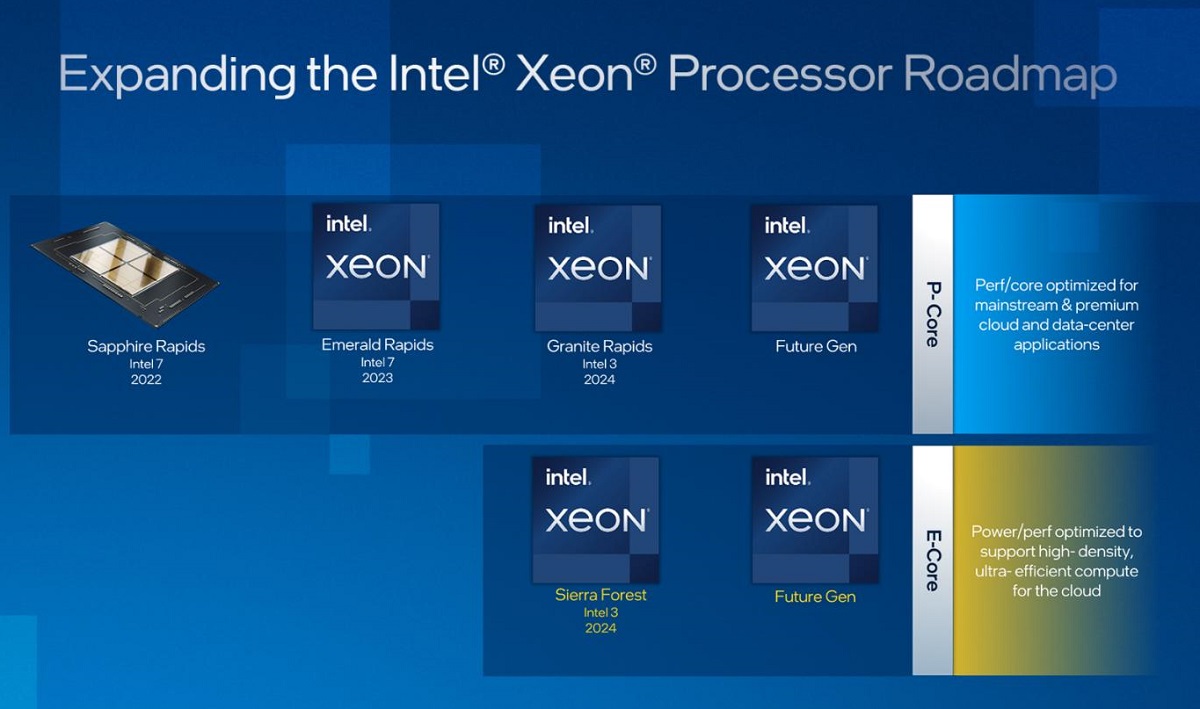

“We have follow-on products for Xeon HBM and PVC in 2023 coming as well,” he said. Among those: Emerald Rapids, Intel’s next-generation Xeon processor on the Intel 7 process node.

2024, then, brings us back to Falcon Shores. 2024 will also bring Granite Rapids, another next-generation Xeon product—a P-core processor previously slated for the Intel 4 process, but now upgraded to the Intel 3 process. Granite Rapids will launch alongside Sierra Forest, an E-core Xeon processor also on the Intel 3 process.