In this regular feature, HPCwire highlights newly published research in the high-performance computing community and related domains. From parallel programming to exascale to quantum computing, the details are here.

HipBone: A performance-portable GPU-accelerated C++ version of the NekBone benchmark

Using three HPC systems at Oak Ridge National Laboratory (the Summit supercomputer and the Frontier early-access clusters Spock and Crusher), an academic-industry research team that includes two authors from AMD demonstrated the performance of hipBone, an open source proxy application for Nek5000 computational fluid dynamics applications. HipBone “is a fully GPU-accelerated C++ implementation of the original NekBone CPU proxy application with several novel algorithmic and implementation improvements, which optimize its performance on modern finegrain parallel GPU accelerators.” The tests demonstrate hipBone’s “portability across different clusters and very good scaling efficiency, especially on large problems.”

Authors: Noel Chalmers, Abhishek Mishra, Damon McDougall, and Tim Warburton

A Case for intra-rack resource disaggregation in HPC

A multi-institution research team used Cori, a high-performance computing system at the National Energy Research Scientific Computing Center, to analyze “resource disaggregation to enable finer-grain allocation of hardware resources to applications.” In their paper, the authors also profile a “variety of deep learning applications to represent an emerging workload.” The researchers demonstrated that “for a rack configuration and applications similar to Cori, a central processing unit with intra-rack disaggregation has a 99.5 percent probability to find all resources it requires inside its rack.”

Authors: George Michelogiannakis, Benjamin Klenk, Brandon Cook, Min Yee Teh, Madeleine Glick, Larry Dennison, Keren Bergman, and John Shalf

Improving Scalability with GPU-Aware Asynchronous Tasks

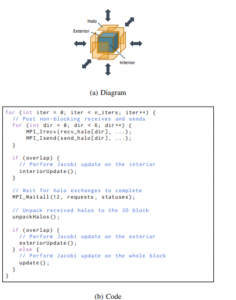

Computer scientists from the University of Illinois at Urbana-Champaign and Lawrence Livermore National Laboratory demonstrated improved scalability by combining GPU-aware communication with asynchronous tasks that hide communication behind computation. According to the authors, “while the ability to hide communication behind computation can be highly effective in weak scaling scenarios, performance begins to suffer with smaller problem sizes or in strong scaling due to fine-grained overheads and reduced room for overlap.” The authors integrated “GPU-aware communication into asynchronous tasks in addition to computation-communication overlap, with the goal of reducing time spent in communication and further increasing GPU utilization.” They demonstrated the performance impact of their approach using “a proxy application that performs the Jacobi iterative method on GPUs, Jacobi3D.” In their paper, the authors also explore “techniques such as kernel fusion and CUDA Graphs to combat fine-grained overheads at scale.”

Authors: Jaemin Choi, David F. Richards, and Laxmikant V. Kale
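
For readers unfamiliar with the Jacobi iterative method that the Jacobi3D proxy application performs, the following is a minimal serial sketch in plain Python. It is not the authors' code and involves no GPUs, asynchronous tasks, or communication; the function names, the grid size, and the fixed boundary value are all assumptions chosen for illustration. Each sweep replaces every interior cell with the average of its six neighbors. In a distributed version, the boundary layer of each sub-grid would be the data exchanged between neighboring tasks.

```python
def make_grid(n, boundary=1.0):
    """Build an n x n x n grid: boundary cells hold `boundary`, interior cells hold 0.0."""
    def is_edge(i):
        return i == 0 or i == n - 1
    return [[[boundary if (is_edge(i) or is_edge(j) or is_edge(k)) else 0.0
              for k in range(n)] for j in range(n)] for i in range(n)]


def jacobi_step(grid):
    """One Jacobi sweep: each interior cell becomes the mean of its six neighbors.

    Reads only from the old grid and writes to a fresh copy, which is what
    distinguishes Jacobi from in-place schemes such as Gauss-Seidel.
    """
    n = len(grid)
    new = [[row[:] for row in plane] for plane in grid]
    for i in range(1, n - 1):
        for j in range(1, n - 1):
            for k in range(1, n - 1):
                new[i][j][k] = (
                    grid[i - 1][j][k] + grid[i + 1][j][k]
                    + grid[i][j - 1][k] + grid[i][j + 1][k]
                    + grid[i][j][k - 1] + grid[i][j][k + 1]
                ) / 6.0
    return new


def solve(grid, tol=1e-6, max_iters=10_000):
    """Iterate until the largest per-cell change falls below `tol`."""
    n = len(grid)
    for iteration in range(1, max_iters + 1):
        new = jacobi_step(grid)
        change = max(
            abs(new[i][j][k] - grid[i][j][k])
            for i in range(1, n - 1)
            for j in range(1, n - 1)
            for k in range(1, n - 1)
        )
        grid = new
        if change < tol:
            return grid, iteration
    return grid, max_iters


if __name__ == "__main__":
    result, iterations = solve(make_grid(5))
    print(f"center value {result[2][2][2]:.6f} after {iterations} sweeps")
```

Because every cell update in a sweep depends only on the previous sweep's values, the updates are independent of one another, which is why this method maps naturally onto GPUs and is a common choice for proxy applications.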

A convolutional neural network based approach for computational fluid dynamics

To overcome the cost, time, and memory disadvantages of computational fluid dynamics (CFD) simulation, this Indian research team proposed using “a model based on convolutional neural networks, to predict non-uniform flow in 2D.” They define CFD as “the visualization of how a fluid moves and interacts with things as it passes by using applied mathematics, physics, and computational software.” The authors’ approach “aims to aid the behavior of fluid particles on a certain system and to assist in the development of the system based on the fluid particles that travel through it. At the early stages of design, this technique can give quick feedback for real-time design revisions.”

Authors: Satyadhyan Chickerur and P Ashish

Matrix-model simulations using quantum computing, deep learning, and lattice Monte Carlo

This international research team conducted “the first systematic survey for quantum computing and deep-learning approaches to matrix quantum mechanics.” While “Euclidean lattice Monte Carlo simulations are the de facto numerical tool for understanding the spectrum of large matrix models and have been used to test the holographic duality,” the authors write, “they are not tailored to extract dynamical properties or even the quantum wave function of the ground state of matrix models.” The authors compare the deep learning approaches to lattice Monte Carlo simulations and provide baseline benchmarks. The research leveraged RIKEN’s HOKUSAI “BigWaterfall” supercomputer.

Authors: Enrico Rinaldi, Xizhi Han, Mohammad Hassan, Yuan Feng, Franco Nori, Michael McGuigan, and Masanori Hanada

A group of researchers from Cornell University and Lawrence Berkeley National Laboratory propose a multi-task and multi-fidelity autotuning framework, called GPTuneBand, to tune high-performance computing applications. “GPTuneBand combines a multi-task Bayesian optimization algorithm with a multi-armed bandit strategy, well-suited for tuning expensive HPC applications such as numerical libraries, scientific simulation codes and machine learning models, particularly with a very limited tuning budget,” the authors write. Compared to its predecessor, GPTuneBand demonstrated “a maximum speedup of 1.2x, and wins over a single-task, multi-fidelity tuner BOHB on 72.5 percent tasks.”

Authors: Xinran Zhu, Yang Liu, Pieter Ghysels, David Bindel, and Xiaoye S. Li
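
To give a rough sense of how a bandit-style, multi-fidelity tuner can stretch a limited budget, the sketch below shows successive halving, a generic technique from the bandit-based tuning literature. It is a stand-in for illustration only and is not GPTuneBand's algorithm, which the summary above describes as also using multi-task Bayesian optimization. The function names, the `eta` parameter, and the toy cost model are all hypothetical.

```python
def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Return the surviving configuration after repeated cheap-to-expensive rounds.

    Each round scores every survivor at the current budget (lower is better),
    keeps the best 1/eta of them, and multiplies the budget by eta, so
    expensive high-fidelity runs are spent only on promising candidates.
    """
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda cfg: evaluate(cfg, budget))
        keep = max(1, len(ranked) // eta)
        survivors = ranked[:keep]
        budget *= eta
    return survivors[0]


def toy_runtime(block_size, budget):
    """Hypothetical cost model: the best block size is 3, and low budgets add error."""
    return (block_size - 3) ** 2 + 1.0 / budget


if __name__ == "__main__":
    best = successive_halving(range(8), toy_runtime)
    print("selected block size:", best)
```

In this toy run, eight candidates are scored at the lowest budget, four at double that budget, and two at four times that budget, so most of the evaluations are the cheap ones.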

High performance computing architecture for sample value processing in the smart grid

In this open access article, a group of researchers from the University of the Basque Country, Spain, present a high-level interface solution for application designers that addresses the challenges of current technologies for the smart grid. Making the case that FPGAs provide superior performance and reliability over CPUs, the authors present a “solution to accelerate the computation of hundreds of streams, combining a custom-designed silicon Intellectual Property and a new generation field programmable gate array-based accelerator card.” The researchers leverage Xilinx’s FPGAs and adaptive computing framework.

Authors: Le Sun, Leire Muguira, Jaime Jiménez, Armando Astarloa, and Jesús Lázaro

Do you know about research that should be included in next month’s list? If so, send us an email at [email protected]. We look forward to hearing from you.