The Frontier supercomputer was installed at the Department of Energy’s Oak Ridge National Laboratory in 2021, with the final cabinet rolled into place in October. While shakeout of the full 2-exaflops peak system continues – we have heard off-record about troubles with the interconnect technology – the Frontier project is pressing ahead with a smaller testbed system of the same core design.

Clocking in at about 40 petaflops peak double-precision, “Crusher” is a 1.5-cabinet iteration of the Cray EX Frontier supercomputer. Crusher will serve early science users while integration and testing of the full 74-cabinet Frontier system continues. The Frontier system is on track to be the United States’ first exascale system sometime this year, and will enter full user operations on January 1, 2023, according to Oak Ridge National Laboratory.
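
As a rough consistency check, dividing each system’s quoted peak by its cabinet count shows Crusher and the full Frontier landing at about the same peak per cabinet, which is what you would expect from two builds of the same design. This is a back-of-the-envelope sketch using only the rounded figures quoted in this article (about 40 petaflops in 1.5 cabinets, 2 exaflops in 74 cabinets); the constant names are illustrative.

```python
# Rounded figures quoted in the article (peak double-precision, in petaflops).
CRUSHER_PEAK_PF = 40.0      # "about 40 petaflops"
CRUSHER_CABINETS = 1.5
FRONTIER_PEAK_PF = 2000.0   # 2 exaflops = 2,000 petaflops
FRONTIER_CABINETS = 74

# Peak performance per cabinet for each build.
crusher_per_cab = CRUSHER_PEAK_PF / CRUSHER_CABINETS
frontier_per_cab = FRONTIER_PEAK_PF / FRONTIER_CABINETS

print(f"Crusher:  {crusher_per_cab:.1f} PF per cabinet")   # 26.7
print(f"Frontier: {frontier_per_cab:.1f} PF per cabinet")  # 27.0
```

The small gap between the two results comes from rounding in the published numbers rather than from any difference in the hardware.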

Crusher consists of 192 HPE Cray EX nodes – each with one AMD “Trento” 7A53 Epyc CPU and four AMD Instinct MI250X GPUs (for a total of 768 GPUs). Trento uses the same Zen 3 cores as Milan but is optimized for better memory efficiency. Nodes are connected by HPE’s Slingshot-11 interconnect. Each node sports 512GiB of DDR4 memory on the CPU and 512GiB of HBM2e (128GiB per GPU), with memory coherent across the node.

By contrast, the full-size Frontier is slated to deliver 2 exaflops of peak double-precision performance from 74 cabinets within a 29MW power envelope. Occupying a 372 m² footprint at the Oak Ridge Leadership Computing Facility (OLCF), Frontier spans 9,408 nodes aggregating 9.2 petabytes of memory (4.6 petabytes of DDR4 and 4.6 petabytes of HBM2e), for a total GPU count of 37,632. There are 37 petabytes of node-local storage, and access to 716 petabytes of center-wide storage.
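
The aggregate numbers follow directly from the per-node configuration given for Crusher (four GPUs, 512GiB of DDR4 and 4 × 128GiB of HBM2e per node), assuming Frontier’s nodes are configured identically. The short sketch below reproduces the arithmetic; the helper name `system_totals` is hypothetical. It also suggests that the 4.6-petabyte figures are binary pebibytes (PiB, 2^50 bytes), since the decimal equivalent would be closer to 5.2 petabytes.

```python
# Per-node configuration as described for Crusher; assumed identical on Frontier.
GPUS_PER_NODE = 4
DDR4_GIB_PER_NODE = 512
HBM_GIB_PER_GPU = 128

GIB_PER_PIB = 1024 ** 2  # 1 PiB = 1,048,576 GiB


def system_totals(nodes: int) -> dict:
    """Hypothetical helper: aggregate GPU count and memory (in PiB) for a node count."""
    gpus = nodes * GPUS_PER_NODE
    ddr4_pib = nodes * DDR4_GIB_PER_NODE / GIB_PER_PIB
    hbm_pib = gpus * HBM_GIB_PER_GPU / GIB_PER_PIB
    return {"gpus": gpus, "ddr4_pib": ddr4_pib, "hbm_pib": hbm_pib}


crusher = system_totals(192)
frontier = system_totals(9408)

print(crusher["gpus"])   # 768
print(frontier["gpus"])  # 37632
print(f"{frontier['ddr4_pib']:.2f} PiB DDR4, {frontier['hbm_pib']:.2f} PiB HBM2e")
# 4.59 PiB DDR4, 4.59 PiB HBM2e
print(f"{frontier['ddr4_pib'] + frontier['hbm_pib']:.1f} PiB total")  # 9.2 PiB total
```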

The HPE Olympus racks used in the Frontier architecture are entirely liquid-cooled, including the DIMMs and NICs. Each cabinet (when dry) weighs 3,630 kilograms. The full Frontier system has a total of 81,000 cables.

Crusher, said Oak Ridge, is ready to “crush” science, although we suspect the name might also be a nod to Dr. Beverly Crusher, the chief medical officer in the television series Star Trek: The Next Generation. By extension, the full configuration would be the “Final Frontier.”

Four projects have already successfully optimized their codes for Crusher, and by extension for Frontier. They are the CANcer Distributed Learning Environment, or CANDLE, project; the Computational hydrodynamics on ∥ (parallel) architectures, or Cholla, project; the Locally Self-Consistent Multiple Scattering, or LSMS, project; and the Nuclear Coupled-Cluster Oak Ridge, or NuCCOR, project. Some of these codes date back to OLCF’s first hybrid-architecture system, the decommissioned 27-petaflop Cray XK7 Titan supercomputer, which also employed CPU+GPU nodes and was stood up in 2012.

Highlights of early results:

- The CANDLE team has successfully run one of their Transformer models (for natural language processing) on Crusher, achieving an 80 percent per-node speedup over previous systems.

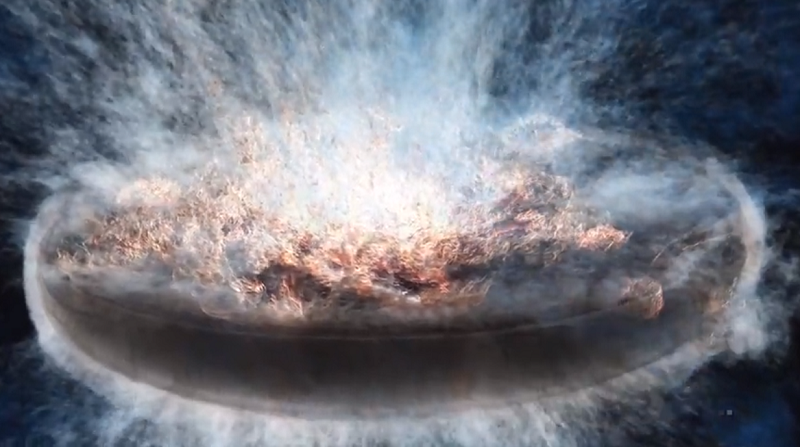

- Cholla, one of the first astrophysics codes to be rewritten for Frontier, is seeing 15-fold speedups on Crusher.

- A materials code – LSMS – that can perform large-scale calculations of up to 100,000 atoms has been successfully deployed on Crusher.

- NuCCOR, a nuclear physics code that can perform massive simulations of nuclei, is seeing 8-fold speedups on Crusher.

“Crusher is the latest in a long line of test and development systems we have deployed for early users of OLCF platforms and is easily the most powerful of these we have ever provided,” said ORNL’s Bronson Messer, OLCF director of science. “The results these code teams are realizing on the machine are very encouraging as we look toward the dawn of the exascale era with Frontier.”

“Taking up only 44 square feet of floor space, Crusher is 1/100th the size of the previous Titan supercomputer but faster than the entire 4,352-square-foot system was, packing a massive computing punch for its small size,” the Oak Ridge announcement added.
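
The “1/100th the size” comparison is a rounding of the two floor-space figures quoted in the announcement. A one-line check (constant names are illustrative) puts the ratio at just under 99 to 1:

```python
# Floor-space figures quoted in the Oak Ridge announcement, in square feet.
CRUSHER_SQFT = 44
TITAN_SQFT = 4352

ratio = TITAN_SQFT / CRUSHER_SQFT
print(f"Titan occupied about {ratio:.1f}x the floor space of Crusher")
# Titan occupied about 98.9x the floor space of Crusher
```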

Frontier was originally scheduled to be deployed in the back half of 2021 and accepted in 2022. Delays of one kind or another are typical with supercomputing systems of this scope and scale, and Frontier is the first implementation of the AMD A+A architecture in addition to being one of the world’s first exascale machines. It remains to be seen whether Frontier will be ready in time for this year’s Top500 list, which will be released in late May rather than June. A Frontier debut on that list had been widely anticipated, given that the system was fully installed before the November 2021 list came out. Oak Ridge did not offer a precise timeline for Frontier’s deployment and acceptance other than stating it will happen in 2022, followed by full operations commencing on January 1, 2023.

One challenge that Oak Ridge and their vendor partners have already overcome pertains to Covid-spurred supply chain shortages. Speaking at SCA22 earlier this month, ORNL Corporate Research Fellow Al Geist said that of Frontier’s 59 million parts, there were about 2 million parts that the regular manufacturers could not supply. “There was a heroic effort by the HPE and AMD teams calling up electronics warehouses and […] other manufacturers and [sourcing the missing parts.]”

A leadership-class facility (it’s in the name), OLCF is the home of Summit, another heterogeneous CPU-GPU system, which debuted in 2018. Delivering 149 Linpack petaflops, the IBM-built machine is currently the number two system on the twice-yearly Top500 list of the world’s fastest computers. The title of world’s fastest supercomputer is officially held by Riken’s Arm-based, Fujitsu-built Fugaku system (442 Linpack petaflops), but China is thought to have two exascale systems that were withheld from the list for political reasons.

Two other exascale systems are on deck in the United States: Aurora at Argonne National Laboratory and El Capitan at Lawrence Livermore National Laboratory. Aurora, having had several resets and setbacks, is slated to be stood up at Argonne later this year; the Intel-HPE collaboration is now targeting more than 2 exaflops of peak performance. On the face of it, Frontier’s slowed rollout could conceivably put those timelines in contention; however, Frontier is already on the floor and Aurora isn’t. Intel recently reported that the Ponte Vecchio GPU for the Aurora supercomputer won’t be delivered until later this year. Meanwhile, preparation for El Capitan is well underway at Livermore; the system, to be built by HPE using an architecture similar to Frontier’s, is slated for delivery in 2023 and promises greater than 2 exaflops of peak performance.

Read the OLCF press release for more details on the science codes that are running on Crusher.