In this regular feature, HPCwire highlights newly published research in the high-performance computing community and related domains. From parallel programming to exascale to quantum computing, the details are here.

The E3SM diagnostics package: a python-based diagnostics package for earth system models evaluation

E3SM Diags is an open-source Python software package, first released in 2017, that was developed to support the Department of Energy's Energy Exascale Earth System Model (E3SM) project. A multi-institutional team of researchers modeled E3SM Diags after the atmospheric model working group (AMWG) diagnostics package from the National Center for Atmospheric Research. In this open-access preprint, the researchers detail the new features in version 2.6, noting that “more process-oriented and phenomenon-based evaluation diagnostics have been implemented, such as analysis of the Quasi-biennial Oscillation, El Niño – Southern Oscillation, streamflow, diurnal cycle of precipitation, tropical cyclones and ozone.” The researchers designed the tool to be flexible and added “new observational datasets and new diagnostic algorithms.” In this latest version, the software package was “extended significantly beyond the initial goal to be a Python equivalent of the NCL AMWG package.”

Authors: Chengzhu Zhang, Jean-Christophe Golaz, Ryan Forsyth, Tom Vo, Shaocheng Xie, Zeshawn Shaheen, Gerald L. Potter, Xylar S. Asay-Davis, Charles S. Zender, Wuyin Lin, Chih-Chieh Chen, Chris R. Terai, Salil Mahajan, Tian Zhou, Karthik Balaguru, Qi Tang, Cheng Tao, Yuying Zhang, Todd Emmenegger, and Paul Ullrich

2^1296 exponentially complex quantum many-body simulation via scalable deep learning method

In this paper, a multi-institutional team of Chinese researchers makes its case for the Gordon Bell Prize. The researchers report that “a deep learning-based simulation protocol can [solve the quantum many-body problem] with state-of-the-art precision in the Hilbert space as large as 2^1296 for spin system and 3^144 for fermion system, using a HPC-AI hybrid framework on the new Sunway supercomputer.” Using up to 40 million heterogeneous SW26010pro cores, the applications achieved 94 percent weak scaling efficiency and 72 percent strong scaling efficiency, according to the research team.

Authors: Xiao Liang, Mingfan Li, Qian Xiao, Hong An, Lixin He, Xuncheng Zhao, Junshi Chen, Chao Yang, Fei Wang, Hong Qian, Li Shen, Dongning Jia, Yongjian Gu, Xin Liu, and Zhiqiang Wei
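For readers less familiar with the two efficiency figures quoted above, the sketch below shows how weak and strong scaling efficiency are conventionally computed. The function names and all timings are hypothetical and chosen only for illustration; they are not measurements from the paper.

```python
def strong_scaling_efficiency(t_base, n_base, t_scaled, n_scaled):
    """Fixed total problem size: ideally, runtime shrinks in proportion
    to the increase in core count."""
    speedup = t_base / t_scaled
    return speedup / (n_scaled / n_base)


def weak_scaling_efficiency(t_base, t_scaled):
    """Fixed work per core: ideally, runtime stays constant as the core
    count and the problem size grow together."""
    return t_base / t_scaled


# Hypothetical timings in seconds (not taken from the paper).
strong = strong_scaling_efficiency(t_base=100.0, n_base=1_000,
                                   t_scaled=12.5, n_scaled=10_000)
weak = weak_scaling_efficiency(t_base=100.0, t_scaled=125.0)

print(f"strong scaling efficiency: {strong:.0%}")
print(f"weak scaling efficiency:   {weak:.0%}")
```

In both cases a value of 100 percent would mean perfect scaling, so the reported 94 percent and 72 percent indicate how close the runs came to that ideal.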

AxoNN: an asynchronous, message-driven parallel framework for extreme-scale deep learning

Researchers from the Department of Computer Science at the University of Maryland in College Park, Maryland, introduce a parallel deep learning framework named AxoNN, which “exploits asynchrony and message-driven execution to schedule neural network operations on each GPU, thereby reducing GPU idle time and maximizing hardware efficiency.” AxoNN also reduces memory consumption by a factor of four “by using the CPU memory as a scratch space for offloading data periodically during training.” The reduction in memory consumption enabled the researchers to “increase the number of parameters per GPU by four times, thus reducing the amount of communication and increasing performance by over 13%.” The researchers demonstrated that “when tested against large transformer models with 12–100 billion parameters on 48–384 NVIDIA Tesla V100 GPUs, AxoNN achieves a per-GPU throughput of 49.4–54.78% of theoretical peak and reduces the training time by 22–37 days (15–25% speedup) as compared to the state-of-the-art.”

Authors: Siddharth Singh and Abhinav Bhatele

In this paper, an international team of researchers from Texas A&M University in Texas, California Institute of Technology and Sandia National Laboratories in California, and the Indian Institute of Science in India present “an effective methodology of implementing temporal discretization using a multi-stage Runge-Kutta method with asynchrony-tolerant (AT) schemes.” The researchers argue that combining the multi-stage Runge-Kutta method with AT schemes adds minimal overhead, allowing simulations to scale on next-generation exascale machines with extreme parallelism. “Together these schemes are used to perform asynchronous simulations of canonical reacting flow problems, demonstrated in one-dimension including auto-ignition of a premixture, premixed flame propagation and non-premixed autoignition.” The paper also examines the loss of accuracy of weighted essentially non-oscillatory (WENO) schemes when used in conjunction with relaxed synchronization. “To overcome this loss of accuracy, high-order AT-WENO schemes are derived and tested on linear and non-linear equations.” Lastly, “AT-WENO schemes are demonstrated in the propagation of a detonation wave with delays at PE boundaries.”

Authors: Komal Kumari, Emmet Cleary, Swapnil Desai, Diego A. Donzis, Jacqueline H. Chen, and Konduri Aditya

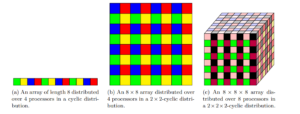

Minimizing communication in the multidimensional FFT

Mathematicians from the Mathematical Institute at Utrecht University in the Netherlands “present a parallel algorithm for the fast Fourier transform (FFT) in higher dimensions.” According to Thomas Koopman and Rob H. Bisseling, “this algorithm generalizes the cyclic-to-cyclic one-dimensional parallel algorithm to a cyclic-to-cyclic multidimensional parallel algorithm while retaining the property of needing only a single all-to-all communication step.” Using the Dutch National Supercomputer Snellius, the researchers “show that FFTU is competitive with the state-of-the-art and that it allows [the] use of a larger number of processors, while keeping communication limited to a single all-to-all operation. For arrays of size 1024^3 and 64^5, FFTU achieves a speedup of a factor 149 and 176, respectively, on 4,096 processors.”

Authors: Thomas Koopman and Rob H. Bisseling
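As background, a multidimensional FFT is separable: it can be computed by applying one-dimensional FFTs along each axis in turn, and this is what makes the data distribution across processors the central design question. The serial NumPy sketch below illustrates only that property. It is not the FFTU algorithm and involves no communication; the array shape is an arbitrary choice for illustration.

```python
import numpy as np

# Small complex test array; the shape is arbitrary and chosen for illustration.
rng = np.random.default_rng(0)
shape = (8, 4, 16)
x = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

# Apply a 1-D FFT along each axis in turn.
y = x
for axis in range(x.ndim):
    y = np.fft.fft(y, axis=axis)

# The result matches NumPy's built-in multidimensional FFT.
assert np.allclose(y, np.fft.fftn(x))

# Both benchmark array sizes quoted above contain the same number of elements.
assert 1024**3 == 64**5 == 2**30
```

In a distributed setting, each axis-wise pass needs the data along that axis to be available locally, which is why the number of all-to-all redistribution steps matters for performance.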

Deep neural networks for solving extremely large linear systems

Mathematicians from the University of Hong Kong in Pokfulam, Hong Kong, study “deep neural networks for solving extremely large linear systems arising from physically relevant problems.” According to the authors, the biggest advantage of the method is the amount of storage it saves. The paper includes “examples arising from partial differential equations, queueing problems and probabilistic Boolean networks…to demonstrate that solutions of linear systems with sizes ranging from septillion (10^24) to nonillion (10^30) can be learned quite accurately.”

Authors: Yiqi Gu and Michael K. Ng

mpiQulacs: a distributed quantum computer simulator for A64FX-based cluster systems

Researchers from the ICT Systems Laboratory at Fujitsu Ltd. designed mpiQulacs, a distributed state vector simulator that is “optimized for large-scale simulation on A64FX based cluster systems.” In this paper, the researchers “evaluate weak and strong scaling of mpiQulacs with up to 36 qubits on a new 64-node A64FX-based cluster system named Todoroki.” The researchers compare mpiQulacs with other distributed state vector simulators, demonstrating that mpiQulacs performs well in “large-scale simulation on tens of nodes while sustaining a nearly ideal scalability.” In addition, they define the quantum B/F ratio, which “indicates the execution efficiency of state vector simulators running on cluster systems.” Using the quantum B/F ratio, the researchers also demonstrated “that mpiQulacs running on Todoroki fits the requirements of distributed state vector simulation rather than the existing simulators running on general purpose CPU-based or GPU-based cluster systems.”

Authors: Satoshi Imamura, Masafumi Yamazaki, Takumi Honda, Akihiko Kasagi, Akihiro Tabuchi, Hiroshi Nakao, Naoto Fukumoto, and Kohta Nakashima
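To give a sense of why a 36-qubit simulation is spread across 64 nodes, the short calculation below estimates the memory needed to hold the full state vector. It assumes each amplitude is stored as a double-precision complex number (16 bytes); that storage format is an assumption made for illustration, not a detail taken from the paper.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: complex128 (two 8-byte floats)
GIB = 2**30


def state_vector_bytes(n_qubits):
    """An n-qubit state vector holds 2**n complex amplitudes."""
    return (2**n_qubits) * BYTES_PER_AMPLITUDE


total = state_vector_bytes(36)
per_node = total // 64  # evenly split across the 64 nodes

print(f"total:    {total // GIB} GiB")
print(f"per node: {per_node // GIB} GiB")
```

Under that assumption the state vector occupies 1 TiB in total, or 16 GiB per node, and every additional qubit doubles the requirement.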

Do you know about research that should be included in next month’s list? If so, send us an email at [email protected]. We look forward to hearing from you.