Almost exactly a year ago, Google launched its Tensor Processing Unit (TPU) v4 chips at Google I/O 2021, promising twice the performance compared to the TPU v3. At the time, Google CEO Sundar Pichai said that Google’s datacenters would “soon have dozens of TPU v4 Pods, many of which will be operating at or near 90 percent carbon-free energy.” Now, at Google I/O 2022, Pichai revealed the blue-ribbon fruit of those labors: a TPU v4-powered datacenter in Mayes County, Oklahoma, that Google says is the world’s largest publicly available machine learning hub.

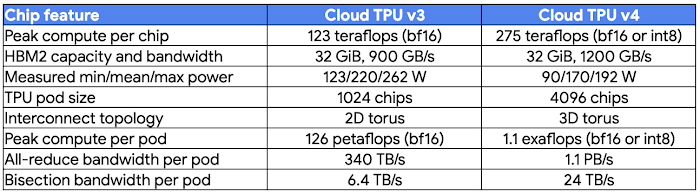

“This machine learning hub has eight Cloud TPU v4 Pods, custom-built on the same networking infrastructure that powers Google’s largest neural models,” Pichai said. Google’s TPU v4 Pods consist of 4,096 TPU v4 chips, each of which delivers 275 teraflops of ML-targeted bfloat16 (“brain floating point”) performance. In aggregate, this means that each TPU pod packs around 1.13 bfloat16 exaflops of AI power, and that the eight pods in the Mayes County datacenter total some 9 exaflops. Google says that this makes it the largest such hub in the world “in terms of cumulative computing power,” at least among those accessible to the general public.
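The aggregate figures follow directly from the per-chip number. Below is a minimal back-of-the-envelope check. It uses only the peak bfloat16 figures quoted above, so the results are theoretical peak throughput and not sustained performance on a real workload. The constant and function names are illustrative.

```python
# Back-of-the-envelope check of the peak bfloat16 figures quoted for TPU v4.
# These are vendor peak numbers; real workloads sustain less.
CHIPS_PER_POD = 4096     # TPU v4 chips per pod
TFLOPS_PER_CHIP = 275    # peak bfloat16 teraflops per chip
PODS_IN_HUB = 8          # pods in the Mayes County hub

def pod_exaflops(chips: int = CHIPS_PER_POD,
                 tflops_per_chip: float = TFLOPS_PER_CHIP) -> float:
    """Peak throughput of one pod in exaflops (1 exaflop = 1e6 teraflops)."""
    return chips * tflops_per_chip / 1e6

def hub_exaflops(pods: int = PODS_IN_HUB) -> float:
    """Peak throughput of the whole hub in exaflops."""
    return pods * pod_exaflops()

if __name__ == "__main__":
    print(f"per pod: {pod_exaflops():.4f} EF")  # per pod: 1.1264 EF
    print(f"hub:     {hub_exaflops():.4f} EF")  # hub:     9.0112 EF
```

Rounded, these results match the article's "around 1.13" exaflops per pod and "some 9 exaflops" for the hub.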

“Based on our recent survey of 2,000 IT decision makers, we found that inadequate infrastructure capabilities are often the underlying cause of AI projects failing,” commented Matt Eastwood, senior vice president for research at IDC. “To address the growing importance for purpose-built AI infrastructure for enterprises, Google launched its new machine learning cluster in Oklahoma with nine exaflops of aggregated compute. We believe that this is the largest publicly available ML hub[.]”

Moreover, Google says that this hub is operating at 90 percent carbon-free energy on an hourly basis – a feat that can be challenging, given the intermittency of renewable energy sources. Not all of the efficiency gains are attributable to Google’s renewable energy efforts, however. The TPU v4 chip delivers around three times the flops per watt of the TPU v3, and the entire datacenter operates at a power usage effectiveness (PUE) of 1.10, which Google says makes it one of the most energy-efficient datacenters in the world. “This is helping us make progress on our goal to become the first major company to operate all our datacenters and campuses globally on 24/7 carbon-free energy by 2030,” Pichai said at Google I/O.
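A short sketch can make the two efficiency metrics above more concrete. PUE is conventionally defined as total facility energy divided by the energy delivered to IT equipment. A PUE of 1.10 therefore means roughly 0.1 kWh of overhead (cooling, power distribution) for every kWh consumed by the computing hardware.

The hourly carbon-free score here is a simplified illustration of hourly matching, written for this example. It is not Google's published accounting methodology. The function names and the four-hour load and supply series are hypothetical.

```python
from typing import Sequence

def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh

def hourly_cfe_score(load_kwh: Sequence[float],
                     carbon_free_kwh: Sequence[float]) -> float:
    """Share of load covered by carbon-free supply in the *same* hour.

    Surplus clean energy in one hour cannot offset a shortfall in another,
    which is why hourly matching is stricter than annual matching.
    (Simplified illustration, not Google's exact methodology.)
    """
    if len(load_kwh) != len(carbon_free_kwh):
        raise ValueError("series must cover the same hours")
    matched = sum(min(load, cfe) for load, cfe in zip(load_kwh, carbon_free_kwh))
    return matched / sum(load_kwh)

def annual_cfe_score(load_kwh: Sequence[float],
                     carbon_free_kwh: Sequence[float]) -> float:
    """Annual-style matching: totals only, capped at 100 percent."""
    return min(sum(carbon_free_kwh) / sum(load_kwh), 1.0)

if __name__ == "__main__":
    # Hypothetical: sunny/windy first two hours, calm last two hours.
    load = [100, 100, 100, 100]
    clean = [150, 150, 40, 40]
    print(f"PUE:    {pue(1100, 1000):.2f}")                 # PUE:    1.10
    print(f"hourly: {hourly_cfe_score(load, clean):.2f}")  # hourly: 0.70
    print(f"annual: {annual_cfe_score(load, clean):.2f}")  # annual: 0.95
```

In this toy series, the same clean supply scores 95 percent under annual matching but only 70 percent under hourly matching. That gap illustrates why the intermittency of renewables makes a 90 percent hourly figure harder to reach.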

“We hope this will fuel innovation across many fields, from medicine to logistics, sustainability and more,” he said of the datacenter. To that end, Google has been operating its TPU Research Cloud (TRC) program for several years, providing TPU access to “ML enthusiasts around the world.”

“They have published hundreds of papers and open-source github libraries on topics ranging from ‘Writing Persian poetry with AI’ to ‘Discriminating between sleep and exercise-induced fatigue using computer vision and behavioral genetics,’” said Jeff Dean, senior vice president of Google Research and AI. “The Cloud TPU v4 launch is a major milestone for both Google Research and our TRC program, and we are very excited about our long-term collaboration with ML developers around the world to use AI for good.”

At I/O, Pichai suggested that this was just one piece of a deeper commitment to efficient, powerful datacenters, citing the company’s planned $9.5 billion in investments in datacenters and offices across the U.S. in 2022 alone.