HPCwire presents our interview with Jay Gambetta, IBM Fellow and VP, Quantum, and an HPCwire 2022 Person to Watch. Few companies have tackled as many parts of the complicated quantum computing landscape as IBM. Across hardware, software, middleware, education-ware, and use-case exploration, IBM has made impressive gains. Very recently, IBM unveiled an expanded roadmap that calls for delivering a new 1,386-qubit processor, Kookaburra, and a 4,158-qubit system built from three connected Kookaburra processors by 2025. Gambetta shares his thoughts on the steps needed to scale up quantum computers and on how IBM is working to support the extended software developer community.

You’ve said 2023 is the year when we will reach broader quantum advantage. Can you elaborate on that idea a little and provide a few examples of applications you think will start being run on quantum computers in a production environment in 2023? Also, how much better will these early quantum applications perform than their classical counterparts?

Our goal is to achieve quantum advantage as soon as possible. I don’t like to focus so much on dates for specific items without considering instead an entire roadmap to make it possible. To achieve quantum advantage, we need to both increase the performance of the processors and develop a better understanding of how to deal with errors and program a quantum computer.

Starting with the performance lens, we measure performance by three metrics: scale, quality, and speed. Scale is the number of operational qubits in a processor, and we have laid out our roadmap to achieve 1,121 qubits in 2023. We are currently updating the roadmap to include modularity, to show a path to even greater scale. Quality is a measure of how well the quantum processor runs quantum circuits, and we have shown that it can be at least doubled each year, as measured by quantum volume. Speed is about how fast quantum circuits can be run on our machines: last year we achieved a 120x improvement in speed, and we expect another factor of 10 this year, as measured by CLOPS (circuit layer operations per second). Only by increasing performance do we have a chance to achieve quantum advantage.
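
The two growth rates quoted above compound in different ways, which a few lines of arithmetic make concrete. Quantum volume is defined as 2^n, where n is the width (and depth) of the largest square random circuit a processor runs successfully (passing the heavy-output test), so doubling quantum volume each year adds one qubit to that square circuit per year, while speed gains multiply. The sketch below assumes a hypothetical starting quantum volume of 64, chosen only for illustration; the helper names are ours, not IBM's.

```python
import math

def quantum_volume_after(years: int, start_qv: int) -> int:
    """Quantum volume if it doubles every year (the rate quoted in the interview)."""
    return start_qv * 2 ** years

def effective_width(qv: int) -> int:
    """QV = 2**n for the largest n-qubit, depth-n square circuit passed, so n = log2(QV)."""
    return int(math.log2(qv))

# Speed gains compound multiplicatively: 120x last year, ~10x expected this year.
combined_clops_gain = 120 * 10

start_qv = 64  # hypothetical starting value, for illustration only
for year in range(4):
    qv = quantum_volume_after(year, start_qv)
    print(f"year {year}: QV = {qv}, square-circuit width = {effective_width(qv)}")
print("combined CLOPS gain over two years:", combined_clops_gain)
```

Read this way, a yearly doubling of quantum volume is steady but incremental in circuit size, whereas the quoted speed gains compound to roughly three orders of magnitude over two years.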

The second lens for achieving quantum advantage is how we program these machines. There has been some artificial differentiation between quantum computers being either fault-tolerant quantum computers (FTQC) or noisy intermediate-scale quantum (NISQ) devices. I personally try to stay away from either label and come back to what it means to compute. To achieve quantum advantage, we need to run quantum circuits that have no efficient classical simulation method, along with applications that use these circuits to give us a cheaper, faster, or more accurate solution than what can be done using “only” classical methods.

Long term, we will need error correction and fault tolerance; however, the first examples of quantum advantage will be achieved using error mitigation and smart methods for breaking the problem into smaller pieces, which we call circuit knitting. Error mitigation was a method we came up with in 2017, and since then the field has developed extensions and ideas that make it possible to run it on larger systems; recently, work has started to tie error mitigation and error correction together. Circuit knitting is the idea that we can take a large problem, break it into smaller quantum problems, and knit these pieces back together using classical methods to solve the larger problem. Examples include entanglement forging, circuit cutting, and circuit embedding.

If you ask what error mitigation and circuit knitting have in common, it is the idea that quantum and classical computing need to work together. To do this, we need to develop a new way of programming a quantum computer. In 2016, when we first put a quantum computer on the cloud, the API was a simple circuit API. Since then, we have developed the Qiskit Runtime, an API that lets a user establish a runtime environment in which simple quantum programs can run quantum circuits at fast rates.

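
To make error mitigation less abstract, here is a minimal sketch of zero-noise extrapolation, one widely used error-mitigation technique: run the same circuit at deliberately amplified noise levels, then extrapolate the measured expectation values back to the zero-noise limit with Richardson (polynomial) extrapolation. The exponential-decay noise model, the decay rate, and the function names are assumptions for illustration; no quantum hardware or Qiskit call is involved.

```python
import math

def noisy_expectation(scale: float, ideal: float = 1.0, decay: float = 0.1) -> float:
    """Toy noise model (an assumption): the measured expectation value decays
    exponentially as the noise is amplified by `scale`."""
    return ideal * math.exp(-decay * scale)

def richardson_zero_noise(scales, values):
    """Evaluate the Lagrange interpolating polynomial through the
    (scale, value) pairs at scale = 0, i.e. extrapolate to zero noise."""
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= (0.0 - s_j) / (s_i - s_j)
        estimate += weight * v_i
    return estimate

# Run the "same circuit" at noise levels 1x, 2x, 3x (on hardware this is
# done, e.g., by stretching pulses or folding gates).
scales = [1.0, 2.0, 3.0]
values = [noisy_expectation(s) for s in scales]
mitigated = richardson_zero_noise(scales, values)

print(f"raw (1x noise): {values[0]:.4f}")
print(f"mitigated:      {mitigated:.4f}")
```

Because the toy noise is exponential rather than polynomial, the extrapolated value is not exact, but it lands far closer to the ideal value of 1.0 than the raw 1x-noise measurement. On real hardware the extrapolation weights also amplify shot noise, which is one reason mitigation is paid for with extra circuit executions.
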
Next is Quantum Serverless, a new programming model that allows a developer or researcher to flexibly combine various kinds of computing infrastructure, such as quantum processors, CPUs, GPUs, or even other specially built classical accelerators. With it, a developer or researcher does not need to worry about the particular type of computing infrastructure and can focus on their code, writing applications that use circuit knitting or error mitigation. Only through this will we have the tools to enable quantum advantage in the near future.
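
The circuit-knitting idea described in this answer can be illustrated with the simplest case of circuit cutting: cutting a single qubit wire. Any single-qubit state can be expanded in the Pauli basis as ρ = ½ Σ_P Tr(ρP) P for P in {I, X, Y, Z}, so the upstream fragment only has to estimate Pauli expectation values, the downstream fragment only has to run from prepared Pauli eigenstates, and a classical sum knits the pieces back together. This is a minimal, exact (noise-free, infinite-shot) pure-Python sketch; the rotation angles and fragment circuits are hypothetical, and it does not use any Qiskit API.

```python
import math

# 2x2 complex matrices as nested lists (pure Python, no NumPy needed).
def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def dagger(a):
    return [[a[j][i].conjugate() for j in range(2)] for i in range(2)]

def projector(v):
    return [[v[i] * v[j].conjugate() for j in range(2)] for i in range(2)]

def expectation(observable, rho):
    m = matmul(observable, rho)
    return (m[0][0] + m[1][1]).real  # Tr(O rho)

def evolve(u, rho):
    return matmul(matmul(u, rho), dagger(u))

def ry(theta):
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c + 0j, -s + 0j], [s + 0j, c + 0j]]

def rx(theta):
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c + 0j, -1j * s], [-1j * s, c + 0j]]

h = 1 / math.sqrt(2)
PAULIS = {
    "I": [[1 + 0j, 0j], [0j, 1 + 0j]],
    "X": [[0j, 1 + 0j], [1 + 0j, 0j]],
    "Y": [[0j, -1j], [1j, 0j]],
    "Z": [[1 + 0j, 0j], [0j, -1 + 0j]],
}
# Each Pauli written as a signed sum of projectors onto preparable eigenstates.
EIGEN_PREPS = {
    "I": [(+1, [1 + 0j, 0j]), (+1, [0j, 1 + 0j])],
    "X": [(+1, [h + 0j, h + 0j]), (-1, [h + 0j, -h + 0j])],
    "Y": [(+1, [h + 0j, 1j * h]), (-1, [h + 0j, -1j * h])],
    "Z": [(+1, [1 + 0j, 0j]), (-1, [0j, 1 + 0j])],
}

Z = PAULIS["Z"]
U_UP = ry(0.7)    # hypothetical upstream fragment
U_DOWN = rx(1.1)  # hypothetical downstream fragment
rho = evolve(U_UP, projector([1 + 0j, 0j]))

# Reference: run the uncut circuit and measure Z at the end.
direct = expectation(Z, evolve(U_DOWN, rho))

# Cut version: upstream measures Pauli expectations, downstream runs from
# prepared eigenstates, and a classical sum knits the results together.
knitted = 0.0
for name, pauli in PAULIS.items():
    upstream = expectation(pauli, rho)
    downstream = sum(sign * expectation(Z, evolve(U_DOWN, projector(state)))
                     for sign, state in EIGEN_PREPS[name])
    knitted += 0.5 * upstream * downstream

print(f"direct:  {direct:.6f}")
print(f"knitted: {knitted:.6f}")
```

The two printed values agree to floating-point precision. The catch is sampling overhead: every cut multiplies the number of fragment configurations that must be run, so the cost grows exponentially with the number of cuts. That classical bookkeeping is the kind of work that benefits from orchestration across CPUs, GPUs, and quantum processors.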

Lastly, if I must guess where the applications that demonstrate quantum advantage will be, they will most likely be either in problems that simulate quantum mechanics (chemistry, materials science, or high-energy physics) or in problems involving data whose structure is hard for a classical computer to find (machine learning, ranking).

Breaking the 100-qubit barrier with the Eagle QPU late in 2021 was an impressive step forward. IBM’s stated goal to bring Osprey, a 433-qubit QPU, to market this year seems like at least as large a challenge. What are the big hardware advances needed for Osprey? Also, could you comment on related quantum networking technology and control circuit advances that will also be important?

Scaling a quantum processor while maintaining its performance is always the biggest challenge for quantum hardware, and that is true for any hardware, not only superconducting qubits. We have developed a procedure we call agile hardware development. We have core systems and exploratory systems. Core systems are ones we have qualified rigorously through repeated iterations and believe can offer high performance and high reliability; exploratory systems are ones where we focus on a particular new feature to drive and improve performance. Because we want to bring the newest features to our clients as fast as we can, we make both types of systems available. As of today, Falcon R5 is our core system, and we are working on making Eagle our next core system. New features we have introduced include a new processor for better coherence (for example, Falcon R8/Hummingbird R3 has more than double the coherence time of previous Falcons/Hummingbirds), a new architecture with faster gates (Falcon R10), faster readout for better CLOPS (Falcon R5), and, lastly, more qubits to push scale (Eagle and Osprey).

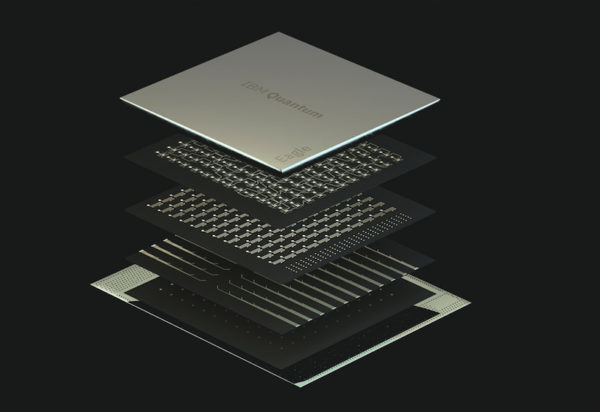

In Osprey, we are continuing many of the device packaging advances that made Eagle possible (multi-level wiring and through-substrate vias), but we had to design new types of signal delivery to increase the density of I/O that works at cryogenic temperatures to control the processor. At the same time, we have to continue to scale up the control electronics. The performance of control electronics in areas such as stability and noise can become quite an issue at scale, so we had to develop novel ideas to provide properly controlled environments for them to run effectively. The cost of electronics is also an important factor in scaling if we are aiming for millions of qubits in our future systems. So, by making the electronics modular, simplifying the microwave circuitry, and optimizing interconnects, we anticipate being able to produce a scalable, low-noise, high-stability control electronics system for a large processor like Osprey.

Furthermore, Osprey will be part of our introduction of IBM Quantum System Two, which offers a modular quantum hardware system architecture that simplifies scaling into the future and lays the groundwork for quantum data centers.

There’s a huge effort to develop tools able to hide quantum computing’s complexities from the potential user and developer communities. What are the key advances occurring in this area, and do you envision a time when mainstream HPC applications such as AMBER/GROMACS (molecular modeling/simulation) and math applications such as MATLAB/Mathematica will simply have quantum APIs embedded in them, so that users can simply select a quantum option?

Our philosophy is frictionless development for quantum computing. To make quantum computing real, we need to make tools for three different types of developers: kernel, algorithm, and model developers. A kernel developer works at the quantum device level and improves the quantum circuits that are run on quantum hardware. This includes better pulse design, compilers, dynamical decoupling, error mitigation, and eventually quantum error correction. We designed OpenQASM as the intermediate representation language for this developer, and this developer will build primitive Qiskit Runtime programs for developers at the next layer to use. The second is an algorithm developer, who works to improve the core capabilities of quantum computing by exploiting both quantum and classical algorithms, and who builds software libraries. I believe Quantum Serverless will be the tool that simplifies the work for this developer and allows them to use both HPC and quantum to build APIs and libraries for the next layer to use. The third is a model developer, who develops quantum application programs using pre-built libraries, functions, and advanced HPC applications. Model developers are the domain experts who are using quantum computing as a solution to their problems. They will be the majority of quantum computing users, and if this is done correctly, they should not need to know how to program at the quantum circuit level to do their work. If quantum computing is to become useful, quantum services should be provided frictionlessly. I can picture quantum APIs embedded in everyday computational applications such as MATLAB or AMBER, where classical and quantum computing solutions can be programmed in a single computing environment.
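
The three-layer split can be pictured as a stack of function calls, each layer hiding the one below it. The sketch below is hypothetical throughout: `estimate_z`, `minimize_expectation`, and `ground_state_energy` are illustrative names, not Qiskit or Qiskit Runtime APIs, and the "kernel" is a noiseless stand-in that returns the exact expectation value cos(θ) for a single-qubit RY(θ) circuit. The algorithm layer uses the parameter-shift rule, a standard way to obtain gradients from quantum expectation values, inside a plain gradient-descent loop.

```python
import math

# --- Kernel layer (hypothetical): a primitive that returns an expectation value.
# Noiseless stand-in for <Z> after RY(theta)|0>, which equals cos(theta).
def estimate_z(theta: float) -> float:
    return math.cos(theta)

# --- Algorithm layer (hypothetical): a reusable library routine that mixes
# quantum evaluations with a classical optimizer. Gradients come from the
# parameter-shift rule, which needs only two extra primitive calls per step.
def minimize_expectation(energy, theta0=0.5, lr=0.1, steps=300):
    theta = theta0
    for _ in range(steps):
        grad = (energy(theta + math.pi / 2) - energy(theta - math.pi / 2)) / 2
        theta -= lr * grad
    return theta, energy(theta)

# --- Model layer (hypothetical): a domain-facing call. The user asks for the
# ground-state energy of a single spin in a field, H = h * Z, and never sees a circuit.
def ground_state_energy(field: float) -> float:
    _, value = minimize_expectation(lambda t: field * estimate_z(t))
    return value

print(ground_state_energy(2.0))
```

A model developer only calls `ground_state_energy(2.0)` and gets a value close to -2.0, the exact ground-state energy of H = 2Z, without writing or seeing a circuit; swapping the stand-in kernel for real hardware would, in this design, leave the two upper layers unchanged.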

Where do you see HPC headed? What trends – and in particular emerging trends – do you find most notable? Any you are concerned about?

I take the simple view that computing is essential for science and business to progress, and high-performance computing is the tool we use. On the hardware side, we have had CPU-centric supercomputers, today we are seeing AI-centric supercomputers emerge, and in the future I predict we will have quantum-centric supercomputers. The trend toward serverless HPC fits nicely with the direction quantum is going, and if we can make Quantum Serverless a reality, I think a supercomputer that has accelerators for all workloads is the tool we will need to push science and technology forward.

I think this can go even further as we start to understand the intersection of communication and storage for edge-based workloads. Do we need a quantum version of edge computing and/or distributed quantum-centric supercomputers? I think the answer will be yes, but this will mean we need to understand how quantum networking, computation, and storage all work together as we build larger and more powerful machines. This can only be done through a vibrant and active community of quantum researchers and developers.

Outside of the professional sphere, what can you tell us about yourself – family stories, unique hobbies, favorite places, etc.? Is there anything about you your colleagues might be surprised to learn?

I grew up in Queensland, Australia, doing lots of surfing and outdoor activities. From a young age I wanted to build things and was going to be either a carpenter or a mechanic, but I caught the science bug at university and always found myself doing the subject I did not understand. To relax, I have moved away from surfing and now focus on snowboarding in the winter and kayaking in the summer. For fun, I like to work on restoring the house and building simple furniture. I recently purchased a lake cottage in upstate New York, so I am spending some time setting it up as a relaxing outdoor getaway.

Related: HPCwire coverage of IBM’s recently disclosed expanded quantum roadmap

Gambetta is one of 12 HPCwire People to Watch for 2022. You can read the interviews with the other honorees at this link.