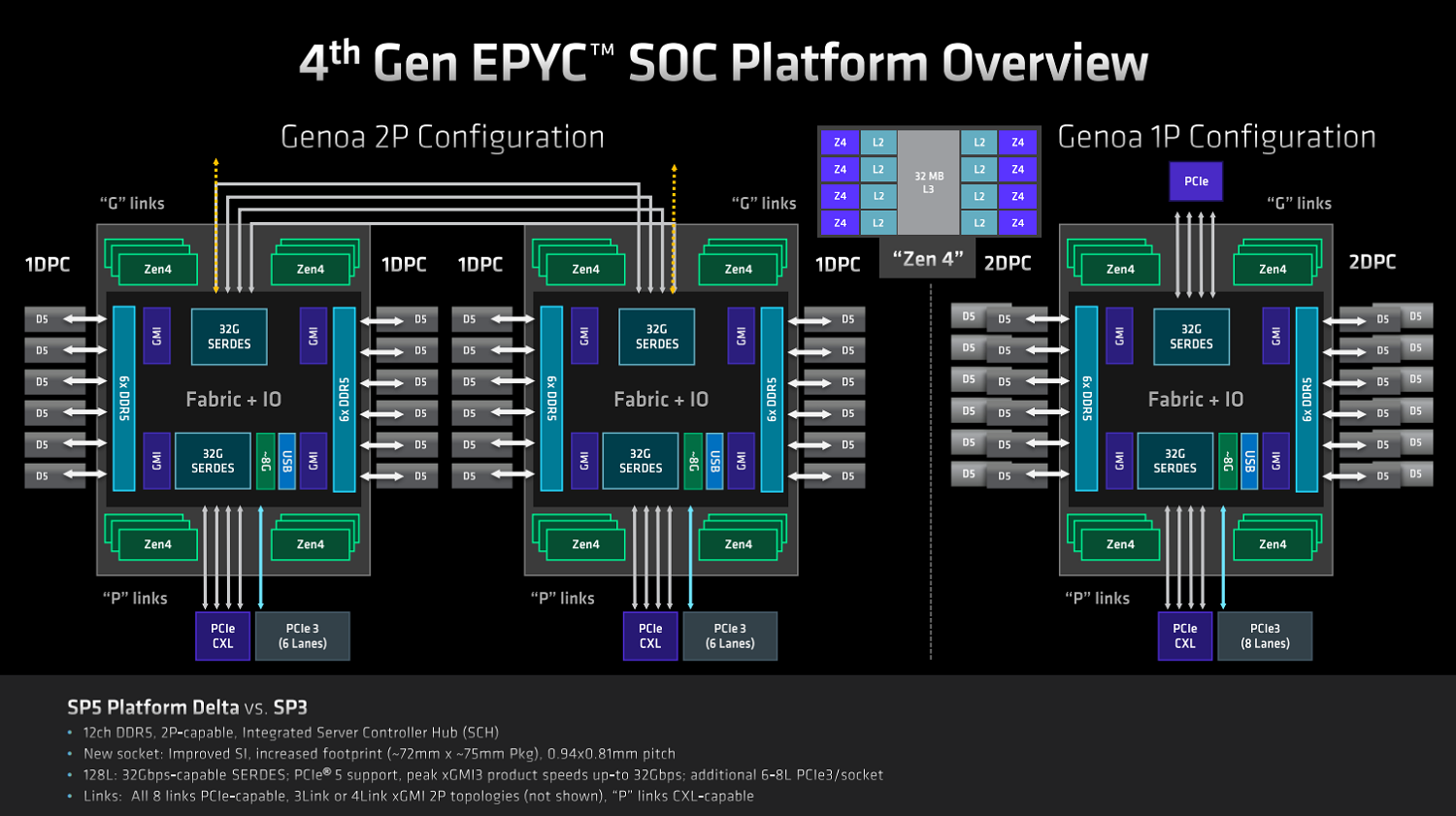

AMD’s fourth-generation Epyc processor line has arrived, starting with the “general-purpose” architecture, called “Genoa,” the successor to third-gen Epyc Milan, which debuted in March of last year. At a launch event held today in San Francisco, AMD announced the general availability of its newest Epyc CPUs with up to 96 TSMC 5nm Zen 4 cores, 12 channels of DDR5 memory and up to 160 lanes of PCIe Gen5. Genoa also introduces support for CXL 1.1 for memory expansion. Security-focused enhancements include expanded AMD Infinity Guard and a doubling of encryption keys.

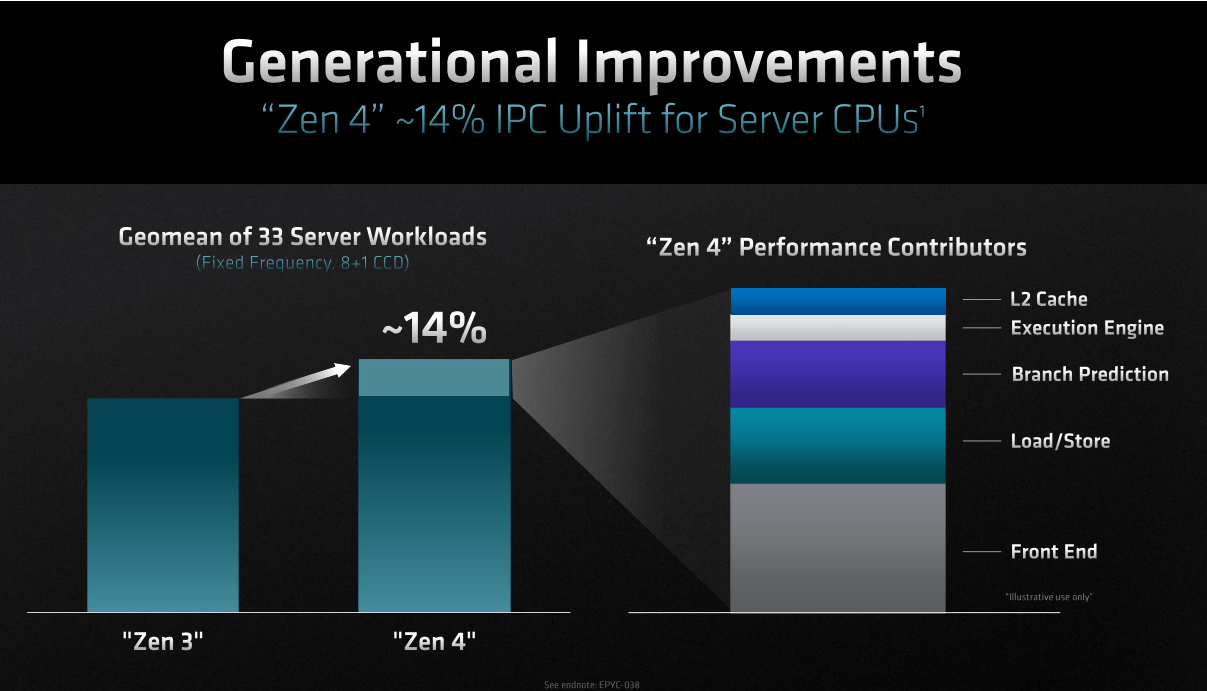

The fourth-gen Epyc chips, which tout more than 90 billion transistors, offer “a significant upgrade in core performance” according to AMD: about 14% more instructions per clock (IPC), based on a geomean of 33 server workloads. For HPC, this translates into faster time to solution. On the SPECrate 2017_fp_base benchmark, the 96-core Genoa delivered roughly 2x the performance of the previous-generation 64-core Epyc and about 2.5x the performance of a 40-core Intel Ice Lake CPU-based server.

“With the third-gen Epyc, we’re already the world’s fastest supercomputing chip, and going to the fourth-gen Epyc, our leadership has delivered two-and-a-half times more performance than the competition,” said AMD CEO Lisa Su.

Su reiterated that five of the top 10 most powerful supercomputers and eight of the top 10 most efficient supercomputers are using Epyc, including Frontier, the world’s first exascale supercomputer and its most energy-efficient.

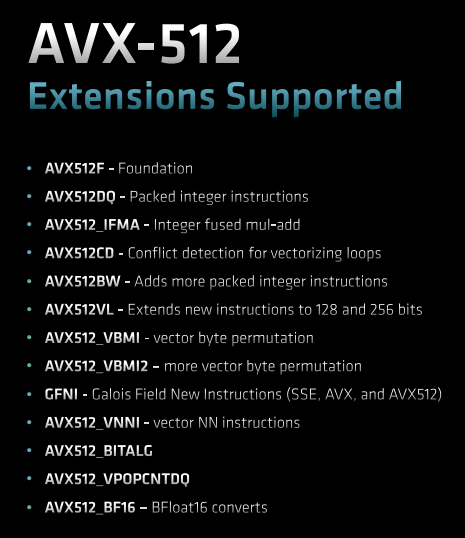

There are new AVX-512 instructions for Zen 4 that include per-lane masking capabilities, new Scatter/Gather instructions, BFloat16 instruction support and VNNI instruction support.

“Our implementation uses a double-pumped approach on a 256-bit data path. This is designed to prevent frequency fluctuations while you’re running AVX-512 workloads. This capability is targeted to heavy-lifting HPC applications like molecular simulation, ray tracing, physics simulations, and more,” said Mark Papermaster, AMD’s chief technology officer.
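To make “double-pumped” concrete, here is a conceptual Python model of a masked 512-bit single-precision add carried out as two back-to-back 256-bit passes. It is an illustration only, not AMD’s microarchitecture and not how software programs the chip. The merge-masking behavior mirrors the AVX-512 intrinsic `_mm512_mask_add_ps(src, k, a, b)`: lanes whose mask bit is clear keep the value from `src`. The helper names `add_256` and `masked_add_512` are hypothetical.

```python
# Conceptual model only: a masked 512-bit FP32 add (16 lanes) carried out as
# two 256-bit passes (8 lanes each), the idea behind a "double-pumped" unit.
# Function names are hypothetical; real code would use compiler intrinsics.
from typing import List

LANES_512 = 512 // 32  # 16 single-precision lanes per 512-bit vector
LANES_256 = 256 // 32  # 8 single-precision lanes per 256-bit pass
HALF_MASK = (1 << LANES_256) - 1  # 0xFF: selects one pass's worth of mask bits


def add_256(a: List[float], b: List[float], src: List[float], mask: int) -> List[float]:
    """One 256-bit pass with merge masking: a lane gets a+b if its mask bit
    is set, otherwise it keeps the corresponding value from src."""
    return [x + y if (mask >> i) & 1 else s
            for i, (x, y, s) in enumerate(zip(a, b, src))]


def masked_add_512(a: List[float], b: List[float], src: List[float], mask: int) -> List[float]:
    """Masked 512-bit add issued as two back-to-back 256-bit passes.
    mask is a 16-bit lane mask (bit i controls lane i)."""
    if not (len(a) == len(b) == len(src) == LANES_512):
        raise ValueError("expected 16 lanes per operand")
    low_mask = mask & HALF_MASK                  # bits 0-7 control lanes 0-7
    high_mask = (mask >> LANES_256) & HALF_MASK  # bits 8-15 control lanes 8-15
    first_pass = add_256(a[:LANES_256], b[:LANES_256], src[:LANES_256], low_mask)
    second_pass = add_256(a[LANES_256:], b[LANES_256:], src[LANES_256:], high_mask)
    return first_pass + second_pass


if __name__ == "__main__":
    a = [float(i) for i in range(16)]
    b = [10.0] * 16
    src = [-1.0] * 16
    # Enable only the even lanes (mask 0x5555); odd lanes keep -1.0 from src.
    print(masked_add_512(a, b, src, 0x5555))
```

In this model each 512-bit operation simply occupies the 256-bit path for two passes, which is the trade-off the quote describes: full AVX-512 semantics, including per-lane masking, without requiring a physically 512-bit-wide datapath.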

In benchmarking tests, the AVX-512 extensions provided a 4.2x boost for NLP throughput, a 3x boost for image classification and a 3.5x boost for object detection throughput, according to AMD.

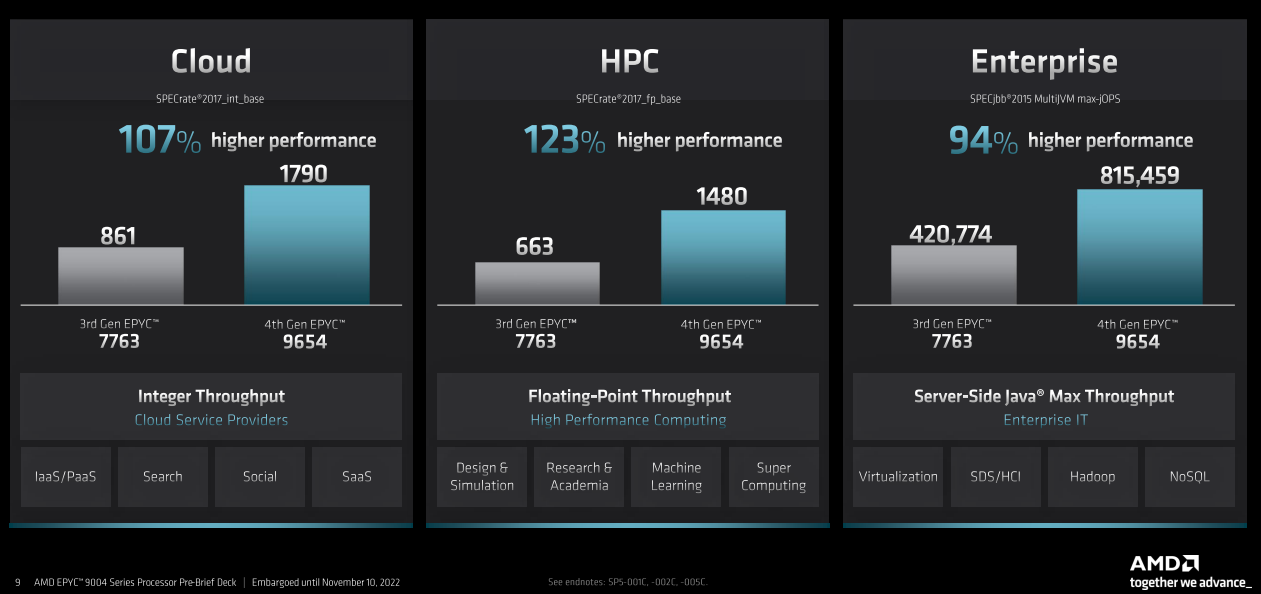

AMD highlighted gen-over-gen performance increases across HPC, cloud and enterprise workloads for the fourth-generation Epycs, including a 123% boost for HPC workloads as measured by the SPECrate 2017_fp_base benchmark, based on head-to-head comparisons of the top-of-stack parts from each generation.

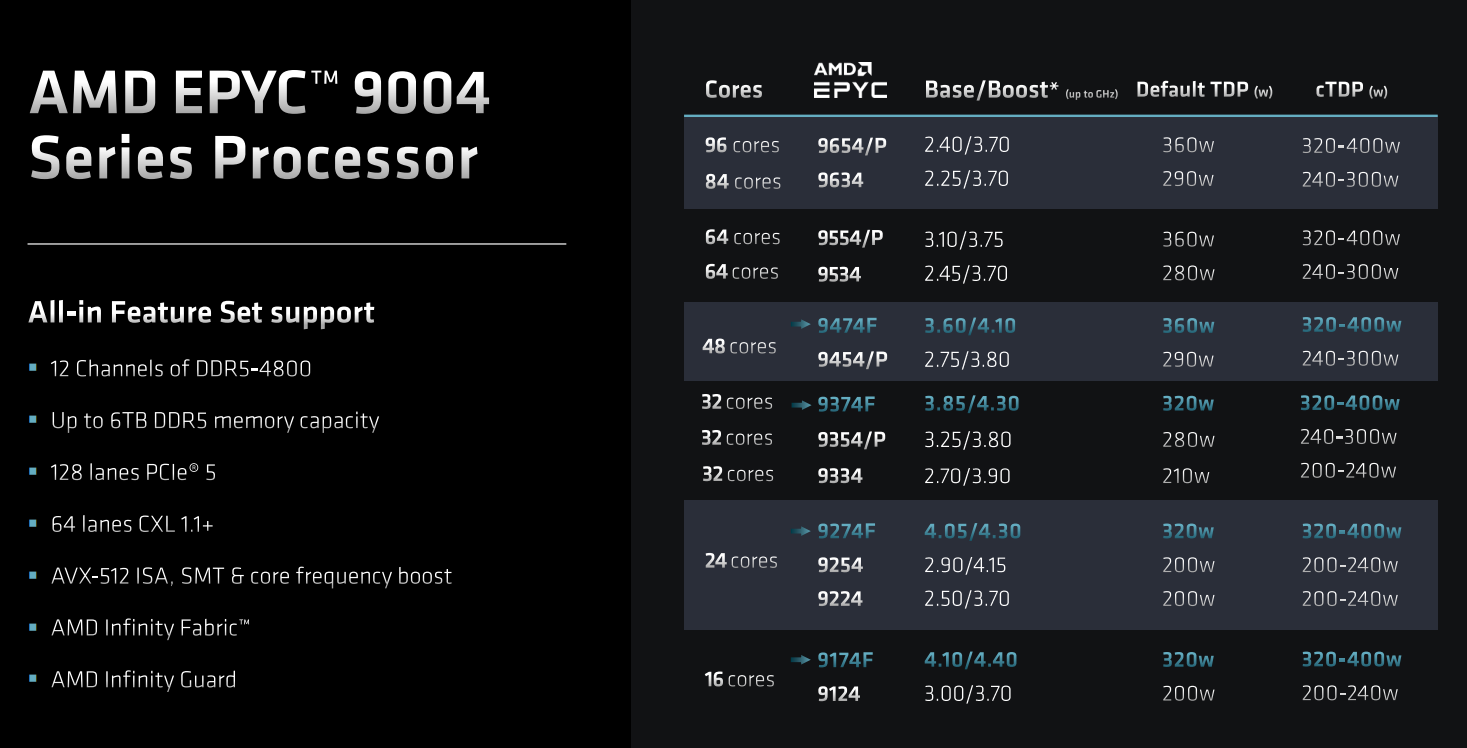

Genoa is available across 18 SKUs (14 two-socket and four one-socket parts), ranging from 16 to 96 cores with maximum power between 200 and 400 watts. The top-bin Epyc 9654 has a 320-400 watt TDP range and provides 5.376 teraflops of peak double-precision performance at its max boost frequency of 3.5 GHz, or more than 10 teraflops in a dual-socket server. At its base frequency of 2.4 GHz, the 9654 tops out at a theoretical 3.686 double-precision teraflops. The list price for 1,000-unit lots is $11,805 per processor.
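The peak figures follow from simple arithmetic: cores × clock × FLOPs per cycle. The Python sketch here reproduces them under one stated assumption, which the article does not give: that each Zen 4 core retires 16 double-precision FLOPs per cycle (two 256-bit FMA pipes, four FP64 lanes each, two FLOPs per fused multiply-add). That factor is chosen because it matches AMD’s quoted 5.376 and 3.686 teraflops; the function name `peak_fp64_tflops` is hypothetical.

```python
# Sketch: theoretical peak FP64 throughput for a CPU socket.
# ASSUMPTION: 16 double-precision FLOPs per core per cycle, i.e.
# 2 FMA pipes x 4 FP64 lanes per 256-bit vector x 2 FLOPs per FMA.
# This factor is not stated in the article; it reproduces AMD's figures.

FP64_FLOPS_PER_CYCLE = 2 * 4 * 2  # FMA pipes * FP64 lanes * FLOPs per FMA


def peak_fp64_tflops(cores: int, ghz: float, sockets: int = 1,
                     flops_per_cycle: int = FP64_FLOPS_PER_CYCLE) -> float:
    """Return theoretical peak FP64 teraflops: cores * GHz * FLOPs/cycle * sockets."""
    gflops = cores * ghz * flops_per_cycle * sockets  # GHz * FLOPs/cycle = GFLOPS
    return gflops / 1000.0                            # convert GFLOPS to TFLOPS


if __name__ == "__main__":
    print(f"{peak_fp64_tflops(96, 3.5):.3f}")             # max boost, one socket: 5.376
    print(f"{peak_fp64_tflops(96, 2.4):.3f}")             # base clock, one socket: 3.686
    print(f"{peak_fp64_tflops(96, 3.5, sockets=2):.3f}")  # max boost, two sockets: 10.752
```

Theoretical peak assumes every core issues FMAs on every cycle at the stated clock; sustained application performance is lower.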

You can categorize the 18 SKUs into three buckets, said Ram Peddibhotla, corporate vice president, Epyc product management, in a pre-briefing for media held yesterday. At the high end are the per-core performance-optimized parts, well matched for relational databases, commercial HPC and technical compute workloads. In the middle are the core-density-optimized parts; they deliver leadership thread density, said Peddibhotla, and target cloud, HPC and enterprise. And at the bottom are the parts with 32 or fewer cores, delivering balanced performance, performance per watt and TCO-friendly performance for data analytics and web front-end workloads.

“Our production stack design is very customer focused,” said Peddibhotla. “We want to keep it simple and clear, and give complete choice to our customers. So no matter what they pick, they get the full list of features.”

The fourth-gen chiplet architecture is designed with up to twelve 5nm core complex die (CCD) chiplets. “In Zen 4, the result is a specialized HPC process, and that’s enabled us to get additional frequency beyond the baseline process technology. The logic and cache area scaling at 5nm allowed us to further reduce the die area over Zen 3 by 18 percent despite adding new performance features and new instruction support,” said Papermaster. The IO die is made on TSMC’s 6nm process.

Mike Clark, AMD corporate fellow and chief architect of Zen, recounted three overarching design goals: performance, latency and throughput. Clark enumerated the successes: double-digit IPC and frequency improvements; reduced average latency through a larger L2 cache and improved cache effectiveness; and “substantial reductions in dynamic power to efficiently increase core count.”

“We’re pretty proud that we were able to both push the IPC up and also push the frequency up at the same time because they’re pretty diametrically opposed,” said Clark at yesterday’s pre-briefing.

At today’s launch event, Su highlighted what’s ahead for the fourth-gen Epyc roadmap after Genoa, which is shipping today. In the first half of next year, AMD plans to launch the cloud-native Bergamo architecture as well as Genoa-X, optimized for technical computing and databases. The successor to Milan-X, Genoa-X will be the second product to use AMD’s 3D V-Cache technology. Availability for Siena, which targets intelligent edge and telco applications, will follow in the second half of 2023.

Reached for comment, market-watcher Addison Snell (CEO, Intersect360 Research) said, “AMD has drummed out a steady campaign of performance benchmarks throughout its multi-generational Zen era, and the launch of the fourth-gen Epyc processor continued that tradition. But more than that, there was a major emphasis on energy efficiency and the cost savings and environmental impact advantage it could deliver.

“With CPU introductions on back-to-back days, there are no direct comparisons yet between Intel Sapphire Rapids and AMD Genoa. AMD has thrown down the gauntlet.”

Indeed, the competition in the x86 CPU space is red hot. Yesterday, Intel announced a rebrand of its highest-end Xeons, establishing the Max Series CPU (based on the high-bandwidth memory variant of Sapphire Rapids, Intel’s fourth-gen Xeon CPU) and the newly monikered Max Series GPU (codenamed Ponte Vecchio). Those parts are not set for broad availability until the first half of next year, with the CPU preceding the GPU by about a quarter.

Asked about the company’s competitive positioning vis-à-vis Intel’s HBM-infused CPU, AMD’s Peddibhotla explained how AMD differentiates its approach. “From our point of view, when we built fourth-gen Epyc, the first Genoa product in the family, we are targeting general purpose compute,” he told HPCwire. Referencing the more than 300 world records held by Epyc, he continued, “If you think about HBM’s use case, it’s an application where the data set fits within that HBM footprint capacity per core that’s available, and if you go outside of that, then you are dealing with programmability aspects because of memory tiering and so on. Our approach is to be general purpose and to build a balanced machine that works across those workloads.”

Ecosystem support for Genoa is robust out of the gate. Hyperscale partner Microsoft Azure is already planning new instances: HX-series and HBv4-series virtual machines with 400 Gbps networking. Google Cloud has plans to incorporate the new processors into Google Cloud Compute Engine. Oracle is planning Genoa-based instances for next year, and Leo Leung, vice president of Oracle Cloud and Technology, told HPCwire that the parts will be particularly well-suited to Oracle’s Exadata database service and MySQL HeatWave offering, as well as to traditional HPC workloads.

Other companies that have announced support include Dell Technologies, Gigabyte, HPE, Lenovo, Supermicro, Tyan and VMware. Lenovo said its ThinkSystem SD665 server with Neptune warm-water cooling technology is being refreshed with the fourth-gen Epyc CPUs, and customer SURF, the Netherlands-based research organization, will be deploying 700 (“or more”) of these Genoa-based nodes in its Snellius supercomputer.