Currently, Intel doesn’t have a quantum processor that potential users can access. In the fall, it launched a new quantum software development kit and simulator. Sometime in 2023, Intel plans to debut its first QPU, a 12-qubit device, and to provide access to early developers. Is Intel behind in the race to develop quantum computers, you ask? A resounding ‘No’ is James Clarke’s answer. In fact, says Clarke, Intel director of quantum hardware, Intel is playing the tortoise in the familiar hare-and-tortoise fable.

Intel isn’t alone in preaching caution. Others, PsiQuantum among them, have also argued that the clear winner(s) in the quantum sweepstakes won’t be known for years and that whoever it is will win the race with a fully fault-tolerant system. (These folks tend to think there will be one winner.) NISQ systems won’t cut it. In truth, many companies are now embracing a toned-down messaging strategy, if only to tame what’s been an abundance of hype as the quantum technology community mushroomed.

Late last month, Clarke talked with HPCwire about Intel’s quantum strategy around the same time he penned a position paper and article. In answer to the frequently asked question “when will quantum deliver,” Clarke said, “The one answer I don’t believe is that you’re going to have something that’s going to change the world tomorrow. I also don’t believe that it will never happen. I believe it’s going to happen on a similar cadence to the innovations in the semiconductor industry.” Think 10-to-15 years and you’re in line with Clarke’s thinking.

Presented here are portions of Clarke’s discussion with HPCwire.

HPCwire: Let’s keep it simple. Why is Intel right and those touting a much sooner breakthrough for quantum computing wrong?

Clarke: If I start with sort of the history of computers, the first general-purpose computer was the ENIAC. It was started before the end of World War II, but didn’t get commissioned until after. Its purpose was to calculate the trajectory of artillery. It had a few thousand vacuum tubes; you can see what it looked like in terms of wiring and operators. It lasted for about 10 years. It wasn’t until a few years after the ENIAC that you had the first transistor; I could call this microscopic, but in reality, you could just about see it with the naked eye. This was, perhaps, the start of the microelectronics industry. But it wasn’t until 1958 that you had the first integrated circuit, I’ll say, the first monolithic piece of hardware.

Robert Noyce was one of the inventors of the integrated circuit; he was also one of the founders of Intel. It wasn’t until 13 years after that, that we had the Intel 4004, which is generally considered the first microprocessor and had 2500 transistors on it. This was the start of Moore’s law. To go from there to a million transistors on a chip took until about 1990. I’m going to draw a parallel to quantum computing, where I think most are coming around to the opinion that you’re going to need about a million qubits to deliver something that is commercially viable. Something that could crack [security] codes, for example, would be roughly a million qubits. Here, it took 43 years to go from the first transistor to the first million-transistor chip.

Those people out there that are suggesting that we could go [quickly] from a few tens of qubits to a level where there’s going to be a commercial quantum advantage are mistaken. I think the timeframe is probably on par with the [microelectronics] timeline. Considering the [quantum] community has been working on it since the late 90s in one form or another, it’s not unreasonable to suggest that 10 to 15 years from now, we would have something that would achieve a commercial quantum advantage. And that was captured in the article that I wrote.
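As a rough illustration of the arithmetic behind this comparison, the sketch below computes the doubling period implied by the figures Clarke cites (about 2,500 transistors in 1971, a million around 1990) and the doubling period qubit counts would need to go from 12 to a million in 10 or 15 years. The function name, the assumption of steady exponential growth, and the choice of 12 qubits as a starting point are ours for illustration; this is not Intel’s own model.

```python
import math


def doubling_period_years(start_count, end_count, years):
    """Years per doubling, assuming steady exponential growth (an assumption)."""
    doublings = math.log2(end_count / start_count)
    return years / doublings


# Figures quoted in the interview: 4004 in 1971 (1958 + 13), 1M transistors ~1990.
transistor_period = doubling_period_years(2_500, 1_000_000, 1990 - 1971)
print(f"Transistors, 1971 to 1990: one doubling every {transistor_period:.1f} years")

# Hypothetical qubit path: 12 qubits today, a million in 10 or 15 years.
for horizon in (10, 15):
    qubit_period = doubling_period_years(12, 1_000_000, horizon)
    print(f"Qubits, 12 to 1M in {horizon} years: one doubling every {qubit_period:.2f} years")
```

Under these assumptions, even the 15-year horizon requires qubit counts to double more than twice as fast as transistor counts did between 1971 and 1990.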

HPCwire: To an outsider, the battle between qubit technologies is fascinating, with each claiming distinct advantages such as fidelity, switching speed, scalability, and so on. What’s special about Intel’s qubit?

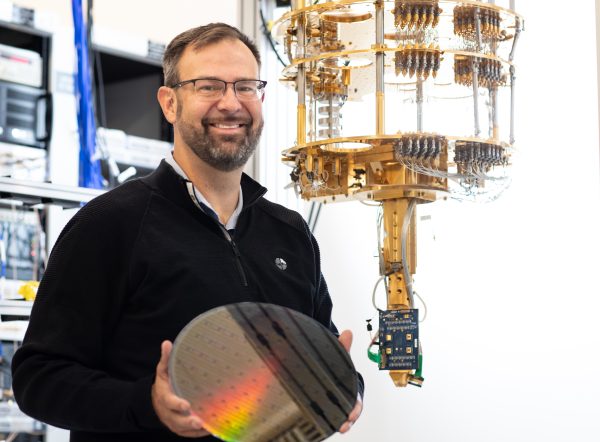

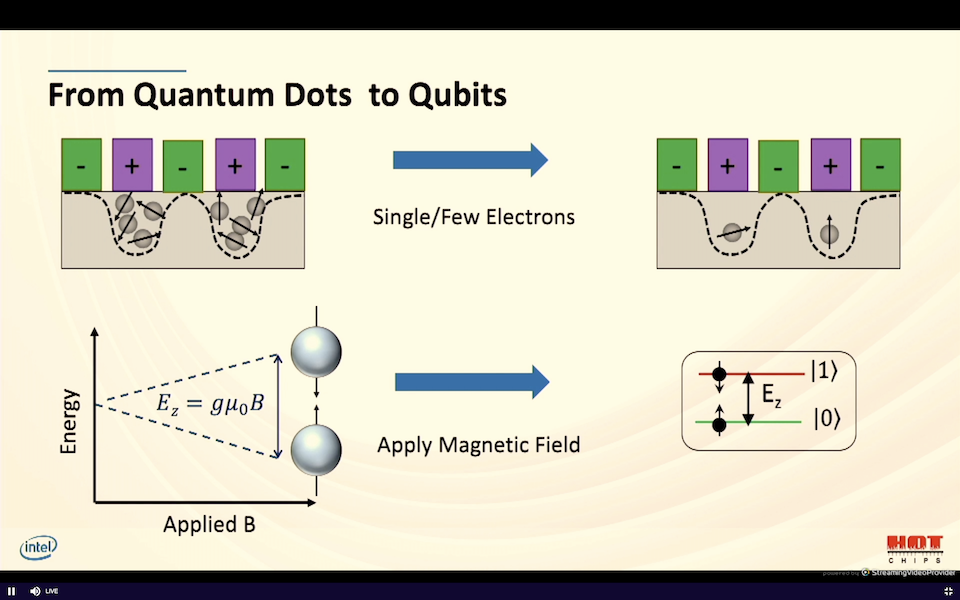

Clarke: Our qubits are different from what you might find with others, for example, IBM, or Google or others that are doing superconducting qubits. We’re doing spin qubits in silicon. They look a lot like a transistor. What we’re doing is encoding the zero and the one of a qubit into a spin of a single electron; we’re essentially making single electron transistors. We do this in Intel’s largest fabs, the RP1 fab and D1 fab in Hillsboro, Oregon. It’s our largest R&D fab. It’s where we’re doing the latest CMOS or logic chips. Once you have the qubits, you’re going to need to control the qubits – send signals in and read out the state of the qubits. There are two ways we’re doing this. The first is we’re using room-temperature control electronics based on Intel FPGAs to make control boxes.

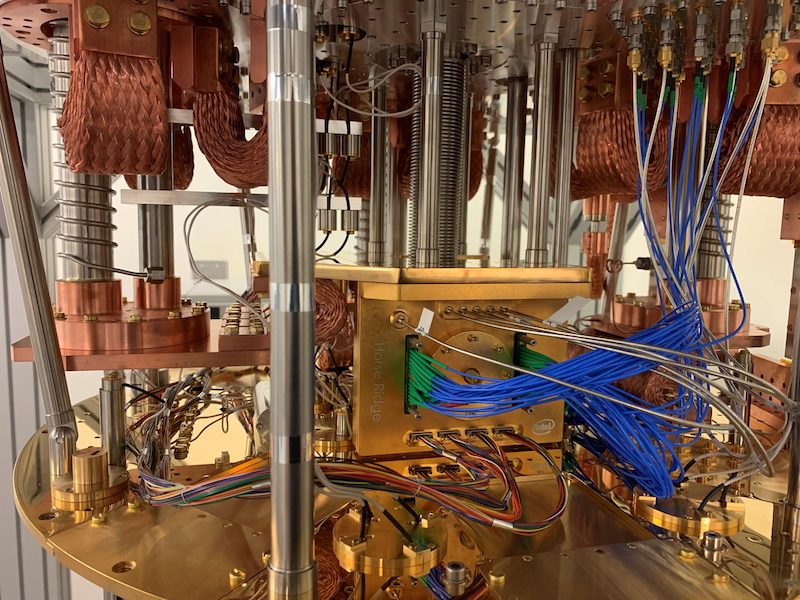

From a form factor perspective, having many wires go [from the control box] into a dilution refrigerator to control the chip is somewhat untenable. What we’re trying to do is put our control electronics very close to the qubit chip in the refrigerator. This is the Horse Ridge series of chips. Horse Ridge uses the Intel 16 process. We optimized our design for performance at four Kelvin; that’s four degrees above absolute zero. Right now, we’re on our second generation and have some derivatives of this chip that we’re testing today.

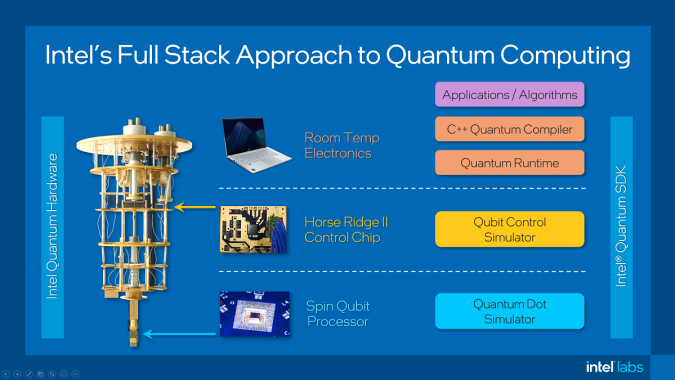

But that’s not enough. Intel is not only good at fabrication, we’re also good at architecture. So, we need a quantum architecture, where we have the compiler, the runtime, the processor at the bottom of our stack (see slide). Today, we have the Intel Quantum Simulator. Intel was actually one of the first to have a quantum simulator, [and] it’s open source. It simulates abstract qubits. And in – I think it was September or early October at the Intel Innovation event – we launched the Intel Quantum Software Development Kit (SDK). We are starting to engage with developers [and] external partners on our software kit. Right now, you can simulate more qubits, more good qubits, than you can run on hardware. Developers are taking a different approach than others that are out there; these developers have access to how our system would work. Later on, we’re going to add a simulation of the actual spin qubits. In 2023, we’ll tie everything together with the actual spin qubit hardware.

To my knowledge, there are very few, maybe only one other company that can do all of this under the same roof that doesn’t have to go out and work with another company to make parts of this happen, either the fabrication, the control, or the architecture.

HPCwire: Is it important to be a full-stack quantum company? There is a growing quantum ecosystem with lots of people working on many fronts to abstract away much of the under-the-hood stuff. Is being a full-stack company necessary or even the best approach?

Clarke: That’s a great question, actually. I can say two things. Certainly Intel, as an IDM (integrated device manufacturer), believes the co-optimization of process control and architecture in conventional transistors provides a performance advantage. We view the same thing here. I’ll give you an example. Right now, there are probably 10 different qubit types out there, maybe five or six are serious qubit types, or at least get talked about a lot today. Imagine if you had a company that focused just on software. For them to have a business model, they would have to focus equally on all types of different qubits, or be an expert in one. To me, at this stage, I think you are giving away a lot of optimization by having that kind of separation of different tasks: fabrication, control, and architecture. I think there’s too much to be gained by optimizing the whole system.

HPCwire: Let’s shift gears for a moment. My understanding is Intel will have its QPU available in 2023. I know qubit-count is generally not considered a good measure. What can you tell us about the forthcoming Intel quantum processor?

Clarke: Right now, we’re heading towards an impressive 12-qubit chip. 12 qubits is fewer than what some others in the industry are doing, but we believe that our qubit system is going to scale quite well. Spin qubits are the size of transistors, which is to say they are a million times smaller than a superconducting qubit.

HPCwire: How will Intel provide access to its initial 12-qubit QPUs? Will it be through an Intel-based cloud or will you be using one of the existing public cloud portals?

Clarke: Along with our software development kit, access in the earliest of stages will be offered through an Intel-only capability (Intel Developer Cloud). In the longer term, I think it remains to be seen how we would offer it.

HPCwire: How do you see Intel quantum systems becoming part of an HPC landscape writ large that perhaps has many vibrant pieces to the ecosystem? Will the full stack approach preclude what’s expected to be a heterogeneous landscape? Longer-term are you expecting that it will be much like the developer community currently around Intel’s processors?

Clarke: Let me take that in pieces. A large-scale quantum computer is going to need a high-performance computer or a small supercomputer to help process the data. Our vision would be an Intel quantum computer tied to an Intel supercomputer. In fact, the bill of materials of that overall larger system might reside more in the supercomputer than in the quantum compute part. Any sort of system, any sort of algorithm, will have parts that get fed to the classical computer and parts that get fed to the quantum computer, they will work in synergy.

HPCwire: I know Europe is more aggressive in its stated intentions to incorporate quantum computing into its existing advanced HPC centers and that’s why I wonder about the nimbleness of an Intel quantum system being able to sit in a more heterogeneous ecosystem?

Clarke: Yeah, that is completely fair. We do see many requests for information from supercomputer centers about our quantum aspirations or ambitions at this stage. I think it’s early still to know how the two systems merge. I can tell you that our team at Intel is looking at these algorithms, the hybrid algorithms [for use] with classical and quantum. The developer community is likely going to have to evolve a little bit to learn how to separate the parts of the algorithms that can be sent to the quantum computer.
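To make the idea of splitting an algorithm between classical and quantum parts concrete, here is a minimal sketch of a hybrid loop. A classical gradient-descent routine repeatedly calls a "quantum" subroutine, which in this sketch is only a classical simulation of one qubit rotated by an angle and then measured. All function names are hypothetical and nothing here uses the Intel Quantum SDK or reflects how Intel partitions its algorithms.

```python
import math


def quantum_expectation(theta):
    """Stand-in for the quantum part: <Z> after an RY(theta) rotation on |0>.

    Simulated classically here; on real hardware this would be estimated
    from repeated measurements.
    """
    amp0 = math.cos(theta / 2)  # amplitude of |0>
    amp1 = math.sin(theta / 2)  # amplitude of |1>
    return amp0 ** 2 - amp1 ** 2


def parameter_shift_gradient(theta):
    """Classical part: gradient built from two extra quantum evaluations."""
    shift = math.pi / 2
    return 0.5 * (quantum_expectation(theta + shift) - quantum_expectation(theta - shift))


def hybrid_minimize(theta=0.3, learning_rate=0.4, steps=200):
    """Classical optimizer loop that drives the quantum subroutine."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


best_theta, best_value = hybrid_minimize()
print(f"theta = {best_theta:.4f}, <Z> = {best_value:.6f}")
```

The optimizer and its bookkeeping stay on the classical side, while only the expectation-value evaluations would be sent to the quantum processor.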

HPCwire: Do you think that one quantum modality will win out? Or do you think there will be two or three that maybe have specialized applications that they are best suited for? Or will it not make any difference when we get to fault tolerance?

Clarke: That’s a good question. I think the larger companies out there, Intel included, and IBM and Google, are focused on a general-purpose quantum computer at the moment. Each of our systems has a slightly different topology – the way the qubits are connected, the way they run. But probably if you have a system of a certain size, and you can instead characterize your system by the number of fault-tolerant qubits, I would expect the largest system to be the best system, whatever that might be. At this stage, if there are 10 different qubit types, I would expect no more than a couple to reach that stage and it wouldn’t surprise me if just one qubit type won out.

HPCwire: A few quantum computing companies report they have applications in use today. Although most are really proto applications not being used in production environments, D-Wave says a terminal at the Port of Los Angeles is optimizing container movement – loading and unloading from ships and trucks – with daily calls to a D-Wave system. That’s an in-production app. My question is, do you think there will be near-term applications able to deliver quantum advantage?

Clarke: That is a big question. I would say that five years ago when many companies started to be a bit more public about their quantum programs, everybody thought that we would have those applications by now. If you go back and look at the publication space, most companies thought there would be commercial quantum systems by 2022 or 2023. Intel was remarkably consistent, because we know how long it takes to develop a new technology. Even if we come out on a two-year cadence for transistor technology, the development time for those technologies is 10 to 12 years. I haven’t seen any indication that there will be applications that provide a big commercial advantage in the near-term. I was at a White House event in the fall of 2022, and the sentiment was similar among all of the other companies.

Now what does that mean? Maybe there will be an application that beats a commercial, classical computer, but it’s unlikely to be an application that would make a ton of money. And that’s ultimately what we care about, something that’s going to change the world.

HPCwire: And the speed-ups so far being discussed are limited too.

Clarke: Well, Shor’s algorithm for cryptography has a provable speed-up with a quantum computer, even if it hasn’t been realized. Optimization problems, to my knowledge, don’t have a provable speed-up, meaning a mathematical proof that says that you should get an advantage. There’s a hope that they will, but it hasn’t been proven.
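For readers unfamiliar with why Shor’s algorithm matters for cryptography, the sketch below shows the classical reduction it relies on: once you know the order r of a number a modulo N, factors of N follow from a couple of greatest-common-divisor computations. Here the order is found by brute force, which is the step a quantum computer is expected to speed up. The function names are hypothetical and the example is a toy (N = 15), not a realistic attack.

```python
from math import gcd


def classical_order(a, n):
    """Smallest r > 0 with a**r % n == 1, by brute force (requires gcd(a, n) == 1).

    This is the step that quantum period finding replaces in Shor's algorithm.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a, n):
    """Try to split n using the order of a; returns None if this a is unlucky."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # a already shares a factor with n
    r = classical_order(a, n)
    if r % 2 == 1:
        return None  # odd order: pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root of 1: pick another a
    return gcd(half - 1, n), gcd(half + 1, n)


print(classical_order(7, 15))    # order of 7 modulo 15
print(factor_from_order(7, 15))  # factors of 15 recovered from that order
```

The brute-force loop takes time that grows with the order itself, which is why this route is impractical classically for the large moduli used in cryptography.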

HPCwire: What can you tell us about milestones for the Intel program in the next year? Should we expect the next Horse Ridge controller chip? Could Intel become a component supplier to other quantum companies? Microsoft also has, I believe, a cryogenic control chip?

Clarke: We’re aware of what they’re doing. We do feel that the Intel chip is unique. We’re using the capability of our Horse Ridge chips [which] are unprecedented at the moment in comparison to others. [On milestones], I’ll say we’re characterizing our 12-qubit chip now and developing a larger array chip. For Horse Ridge, we are characterizing our latest chip and beginning to set the targets for the next generation of chips.

HPCwire: As you point out, it’s early days in terms of taking quantum computing devices out of the lab and turning them into useful devices. Do you think we will see a few surprises in the next few years in terms of how the technology evolves?

Clarke: I think the only surprise is that those people that think [progress] will be quick are fooling themselves. There is a reason why we chose a qubit that looks exactly like a transistor. That reason is so we can use all of our tools in the toolbox for making transistors to make our qubits. If you were to pull a group of people together and say, “is quantum computing harder than classical computing,” everybody would say, yes, even the people that are really excited about it. So why should we think that quantum computing will evolve at a faster pace than Moore’s law? You want to be careful not to predict the future only based on the past, but there’s no indication that it can move that much faster. In fact, the only thing I would say is the more we copy the tools in the Moore’s law toolbox, the faster we’re going to be able to go.

I don’t think there are actually going to be any surprises. I think it’s going to go at a slow, steady pace, and many of the people out there planning on a quick profit are going to be disappointed, perhaps even lost in the weeds.

HPCwire: Maybe. There’s an awful lot of people working on quantum computing today. Unlike early in the IC evolution – which got its boost mostly from two specific programs, the Minuteman and Apollo programs – there is a lot of money and effort from government and industry pouring into quantum computing. Given the number of people and diversity and intensity of ongoing quantum development, you still don’t see any acceleration of the development process?

Clarke: That’s an excellent question. We think the public/government money [being invested in quantum], so public but not defense, around the world is probably $24 billion or so. That’s a lot. The problem is that right now, there are at least five qubit [types]. In fact, I would say that there are probably seven different types of qubits. The huge effort you mentioned is spread over many technologies, [and] very few of them overlap.

If you think about how transistors developed or how the microelectronics industry developed with Sematech in the 90s, for example; it was everybody coming together in a pre-competitive phase and saying these are the very few options for the future. The victors were the ones that took those few options and made them reality. Right now, we have a ton of different technologies out there. And the requirements don’t overlap at all. For example, I need refrigerators. Trapped ion and photonics need lasers. I don’t care about lasers. So there really isn’t any pre-competitive collaboration such as existed in the semiconductor industry. There’s virtually none. That really dilutes the amount of money that could be used to accelerate efforts.

HPCwire: I know DOE is trying to foster more private-public collaborations at their quantum centers and also at their quantum testbeds. I believe Intel is actually going to participate with one.

Clarke: Correct, we’re participating with Q-NEXT (based at Argonne National Laboratory). The comment I would make [regarding the] challenge with centers for the government is that the way you get the center approved is you bring a huge collaboration together. What you want to avoid with these centers is a situation where the amount of money sounds large, but is essentially giving every professor one student to work on it. Nothing holistic gets done. That would be a concern. That’s something that we have always asked to watch out for. Giving everybody a little bit of money or taking a huge effort but spreading it over a huge number of technologies means you’re unlikely to accelerate anything.

HPCwire: Thanks, Jim!