Intel is bringing subscription and rental services to semiconductors as it explores new business models, but it remains to be seen whether buyers will warm to the idea of paying extra to unlock features on a chip.

Intel is bringing an “on-demand” feature to its new Xeon CPUs codenamed Sapphire Rapids, which the company launched on Tuesday after long delays.

The Intel On Demand program involves paying a fee to activate specific features on chips. The service works much like renting a movie: you pay a fee to unlock the content.

In a press document, Intel described on-demand as a way to “expand and/or upgrade accelerators and hardware-enhanced features available on most 4th Gen Xeon processor SKUs.”

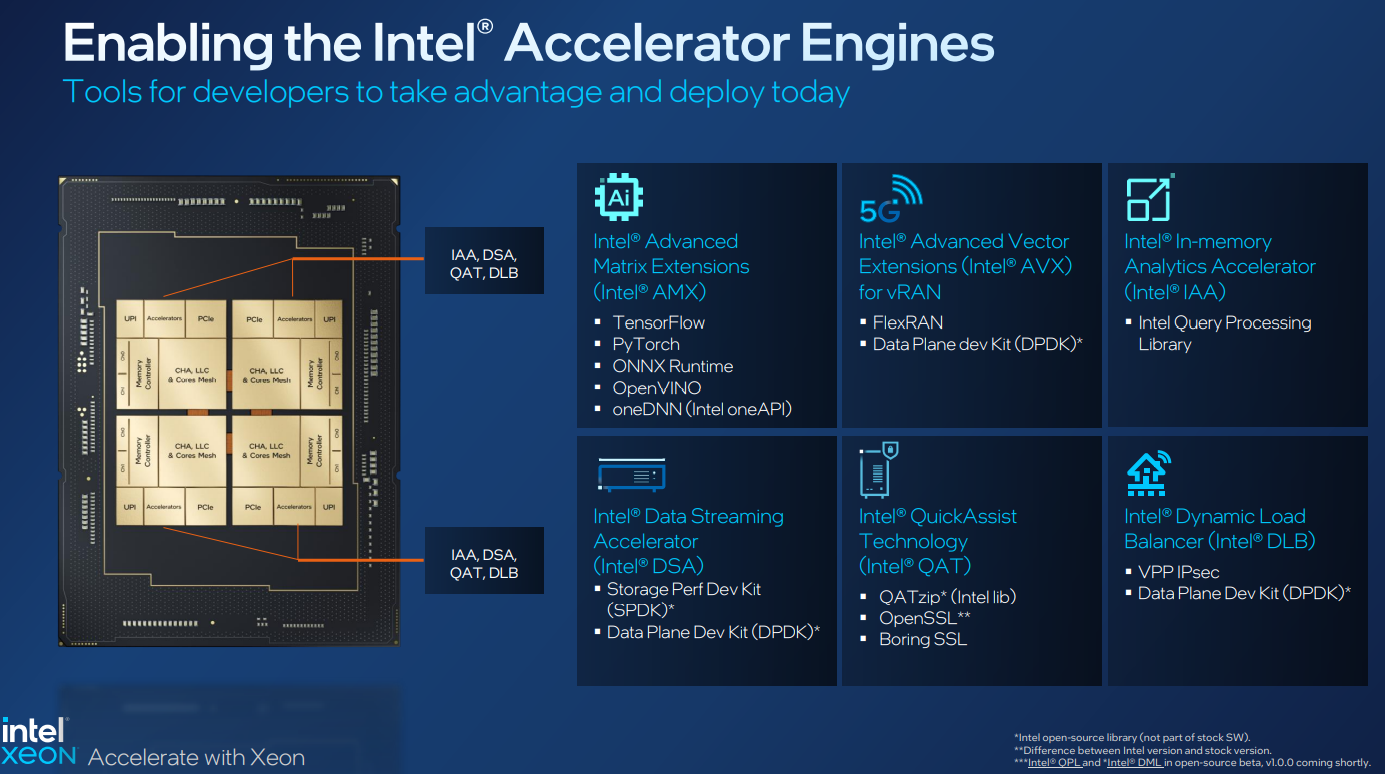

Some on-chip features that customers could rent include accelerators integrated into the CPU that provide extra juice to applications in artificial intelligence, analytics, networking and storage.

The on-demand features are available on 36 of the 52 new Sapphire Rapids processors announced by the chipmaker.

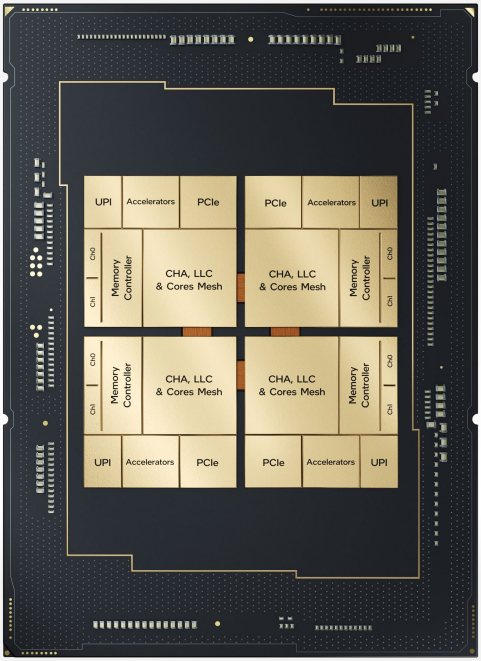

The chips with on-demand features are aimed at mainstream servers and big iron in public and private cloud infrastructure. They have up to 56 cores and 105MB of cache, and are priced between $624 and $10,710.

The on-demand features are not available on the Xeon Max series high-performance computing chips, which are designed for supercomputers. The Xeon Max chips have tightly integrated components that include memory and accelerators.

The point of on-demand is to ship chips with certain features turned off, which can be activated once customers know their software development is complete and ready to take advantage of the accelerators, said Ronak Singhal, a senior fellow at Intel.

Software development typically has a long tail and requires additional tuning to work with specific accelerators.

Customers may not know whether their applications will use those accelerators on day zero, Singhal said, adding that “the on-demand capability allows you to… not pay for something you’re not going to use.”

The cost of silicon is an important part of on-demand offerings, said Lisa Spelman, corporate vice president and general manager for Xeon at Intel, during a press briefing.

“It not only provides that flexibility for when you utilize that portion of the silicon, it’s not consuming energy as well,” Spelman said.

The on-demand feature is also an opportunity for Intel to explore and build up a new business model, Spelman said. The company has already tried the model with some customers on the previous two generations of Xeon chips.

“We’ve reached a more production level, so this is kind of the start of it. You’ll see it continue as we evolve into our next generations as well. It is in-step and in-line with the way that just about every other product is consumed. Even a car has become an on-demand type of offering,” Spelman said.

An additional benefit of the new business model is that it lowers the upfront cost of silicon for customers deploying new servers, Intel officials said.

The pricing for on-demand services remains unclear, and Intel did not respond to requests for comment on how customers will be expected to pay for such services. But there were hints on how the services could be bundled through cloud offerings.

Microsoft announced it was bringing the fourth-gen Intel Xeon Scalable processors to its Azure cloud service with confidential computing features based on TDX (Trust Domain Extensions). TDX is a set of on-chip instructions that create a trusted execution environment to securely store and move data and run applications. The instructions create a secure boundary around virtual machines hosting a guest OS and applications, which the hypervisor cannot access.

Microsoft didn’t provide pricing for the Sapphire Rapids instances with TDX. But the instances could be priced in line with Azure’s confidential computing instances such as DCasv5 and ECasv5, which are based on AMD Epyc chips and use AMD’s SEV-SNP (Secure Encrypted Virtualization-Secure Nested Paging) feature. The DCasv5 starts at $69 per month with a barebones configuration and goes up to $3,314 per month with 96 Epyc CPU cores and 384GB of memory.

Intel’s main rival AMD isn’t charging customers rental or subscription fees for features on its chips, and is taking more of a general-purpose computing approach with Epyc. Intel, by contrast, is devoting more silicon area to acceleration for specific workloads and charging for it, a strategy that could hurt its business. But only time will tell whether Intel’s gamble on renting out that silicon real estate was a good move, analysts said.

“Maybe this is not a winning strategy, which is making users pay for silicon,” said Dylan Patel, founder of SemiAnalysis, a semiconductor research and consulting firm.

The next-generation Epyc chips from AMD, on the other hand, could have chiplets with Xilinx and XDNA AI accelerators, and customers could have more choice in customizing chips. AMD could retain its general-purpose computing message, while offering customers the choice of an AI chiplet or a plain CPU, while saying “we’re not going to make you pay for silicon that’s not used,” Patel said.

For Intel, which has its own factories, there are a lot more things to consider.

“At the end of the day, in your manufacturing costs, you put those circuits on the die, and you’re manufacturing them. In that case, it is a trade-off of less cores. Intel, with Sapphire Rapids, and obviously Granite Rapids, they will do the same with more accelerators. But with Sierra Forest, they will do the opposite, which is a bunch of lower performance cores, a ton of them. It is an interesting divergence,” Patel said.

The contrast between AMD’s and Intel’s approaches to server chips is stark, but it’s hard to tell which chipmaker holds the advantage. The answer depends on which applications emerge and what kinds of chips they require, and Intel’s on-demand approach to accelerators may yet work in its favor.

“These workloads don’t all exist today. But as they start to get developed, it will be a big deal,” Patel said.

This is not the first time Intel has charged customers to unlock features on CPUs. In 2010, Intel charged $50 to unlock features on the Pentium G6951 chip, a move that drew consumer backlash.

“In theory, it’s a good idea – you only pay for what you need. But we will have to wait and see how the market adapts to it,” said Jim McGregor, principal analyst at Tirias Research.

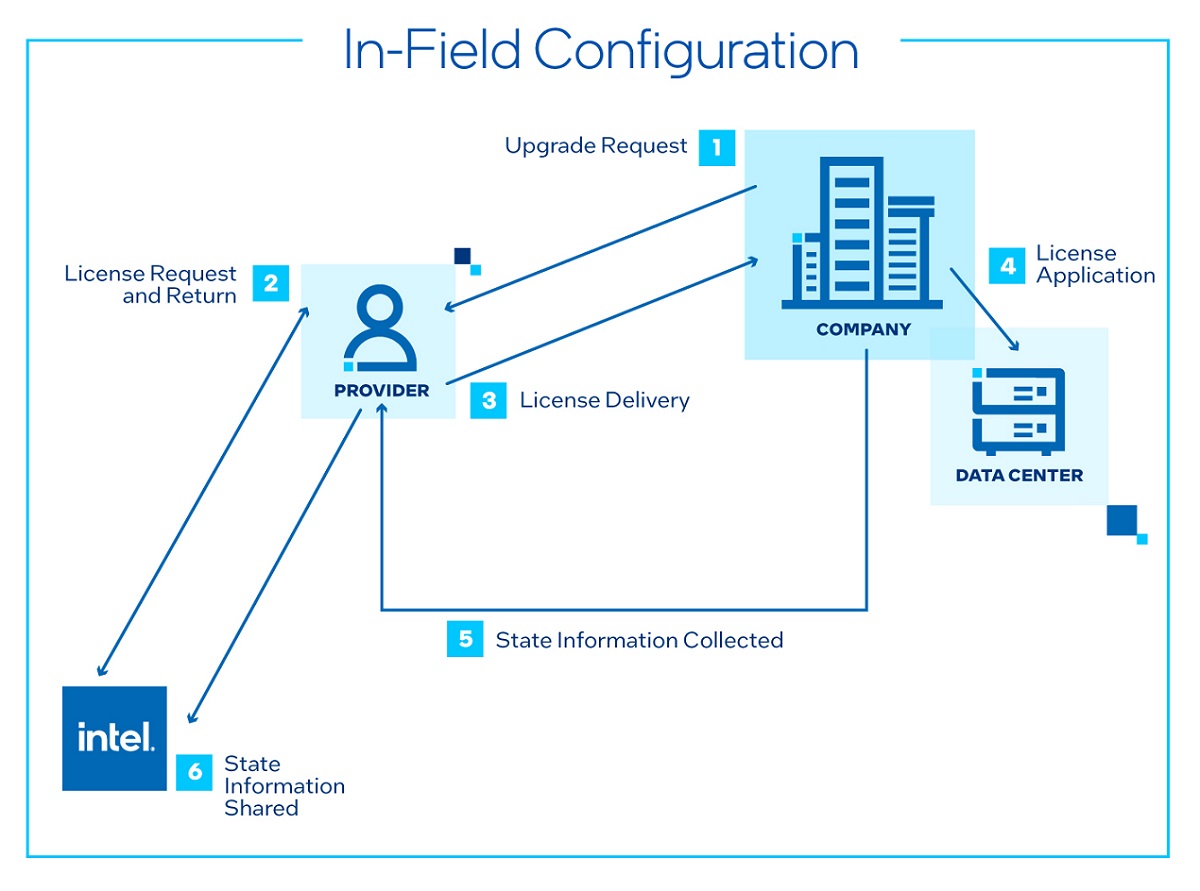

It may be difficult for Intel to justify on-demand features to customers buying silicon directly from the chipmaker, or through its server partners, for on-premises use. Switching features on and off in on-premises servers may be challenging because Intel may not have access to the servers in such installations. (According to Intel, a license can also be activated at the time of purchase.)

“It’s easier for hyperscalers and IT service providers to charge for it. That makes more sense. If you go and get AWS or Azure, you pay for what you need, or you pay by the minute, or you pay for the resources. It is kind of hard to do that at the silicon level,” McGregor said.

The cost of renting silicon features on demand will be added on top of the base price of the Sapphire Rapids chips, but it remains to be seen how that will fit into Intel’s business model.

“It is going to be awkward to have to go back and decide ‘I need this accelerator.’ It will take several years for the IT community, especially the enterprise community, to become accustomed to that,” McGregor said.

Intel does not see a market for on-demand in high-performance computing, because features such as high-bandwidth memory and specific accelerators are core parts of those systems rather than upgrade options, McGregor said.

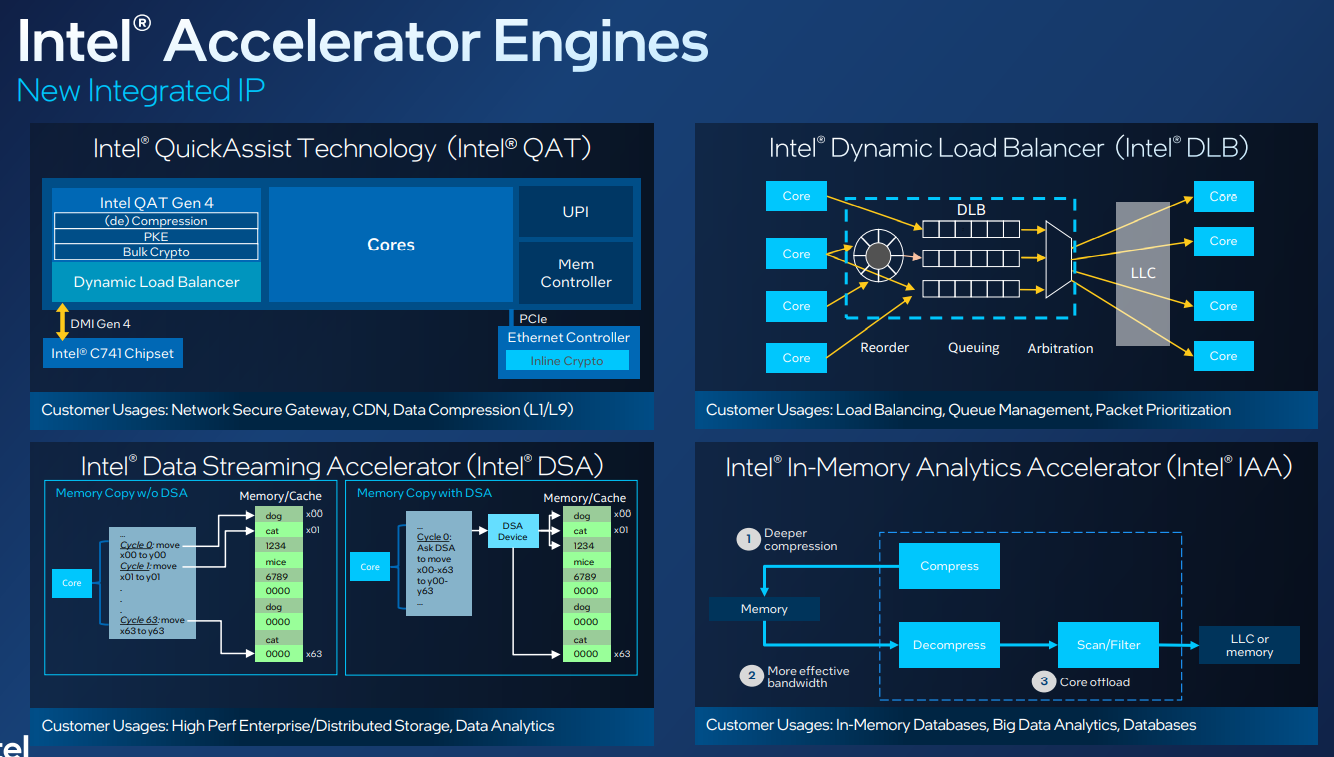

The accelerators that could be rented through on-demand include the In-Memory Analytics Accelerator (IAA), an engine that boosts database and big-data performance by taking on analytics processing offloaded from the CPU cores. The accelerator focuses on analytics primitives, compression and decompression. It improves performance by increasing query throughput and reducing the memory footprint, which raises effective memory bandwidth.

Intel said during a press briefing that the IAA boosted the performance of RocksDB, a key-value store created by Meta that stores data differently from relational databases, by up to 3x, with 2.2x better performance per watt compared with 3rd Gen Xeon chips.

Intel said the purchasing model also applies to its Dynamic Load Balancer (DLB), Data Streaming Accelerator (DSA), QuickAssist Technology (QAT) and Software Guard Extensions (SGX). Provider partners include H3C, HPE, Inspur, Lenovo, Supermicro, phoenixNAP, and Variscale.