Bringing early visions of quantum technology into practical commercial reality will require many participants. How important will the big consulting firms be? Accenture, like many other major consulting organizations, has been steadily developing a quantum computing practice. Accenture, of course, is a goliath in the global sea of consultants. It has more than 700,000 employees worldwide and 2022 revenue of roughly $62 billion.

Here’s the Accenture pitch from Carl Dukatz: “We’ve been in quantum for a long time. We’ve used many, if not all, probably all of the devices that are available today. We’re experienced on them, have deep partnerships, and have done a lot of examples. We’ve thought about this a lot and can help propel anyone’s program forward. Or if you’re starting new, we’re happy to get you into a place where you’re comfortable and contributing to the ecosystem as a whole. And we’re absolutely here to help with the security challenges. There’s a lot of assessment and a lot of work that needs to be done. We think we have the right accelerators and tools to make that happen for many businesses at scale.”

Accenture is clearly a big company with big ambitions (company fact sheet).

Dukatz is the managing director overseeing next-generation compute at Accenture and currently focuses on all things quantum computing. He recently briefed HPCwire on Accenture’s growing practice. Predictably, he was chary of sharing many details, a common trait in the consultant world. He did single out a couple of public use case examples. Here’s one:

- Accenture won one of BMW’s quantum challenges. “The Accenture team provided a holistic workflow for prototyping, from user input all the way to the final result, involving a detailed pipeline for (i) the definition of the input data, (ii) pre-processing steps, (iii) optimization of the underlying MaxCover problem and (iv) visualization of the results with an advanced sensor distribution visualization app. For the actual optimization problem, the Accenture team developed a general framework including four classes of algorithmic approaches. While classical custom algorithms delivered the best results today, the framework from the Accenture team comes with plugins for quantum methods to be elaborated in the future.”[i]
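The BMW task boils down to a maximum-coverage (MaxCover) problem: choose a limited number of sensor positions so that together they see as much of the vehicle’s surroundings as possible. As a point of reference for what a classical baseline looks like, here is a minimal greedy sketch. It is not Accenture’s framework; the position names and numbered zones are hypothetical. Greedy selection is the textbook heuristic for MaxCover and is known to reach at least a (1 − 1/e) fraction of the optimal coverage.

```python
from typing import Dict, List, Set

def greedy_max_cover(candidates: Dict[str, Set[int]], budget: int) -> List[str]:
    """Pick up to `budget` positions, each time taking the one that covers
    the most zones not already covered (classic greedy MaxCover heuristic)."""
    covered: Set[int] = set()
    chosen: List[str] = []
    remaining = dict(candidates)
    for _ in range(budget):
        if not remaining:
            break
        # Position with the largest marginal gain; ties go to the first one listed.
        best = max(remaining, key=lambda p: len(remaining[p] - covered))
        gain = remaining[best] - covered
        if not gain:
            break  # no remaining position adds new coverage
        chosen.append(best)
        covered |= gain
        del remaining[best]
    return chosen

# Hypothetical mounting positions and the numbered surrounding zones each one sees.
positions = {
    "front_bumper": {1, 2, 3, 4},
    "rear_bumper": {7, 8, 9},
    "left_mirror": {3, 4, 5},
    "right_mirror": {5, 6, 7},
    "roof": {2, 5, 8},
}
picks = greedy_max_cover(positions, budget=3)
print(picks)  # ['front_bumper', 'rear_bumper', 'right_mirror']
```

In a pluggable framework of the kind described, a function like this would be one interchangeable solver, with quantum or quantum-inspired optimizers slotted in behind the same interface.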

These are interesting times in the quantum computing realm. There’s enthusiasm around rising qubit counts, improved fidelity, and narrow quantum advantage POCs. Conversely, Rigetti’s recent stumbles – the threat of Nasdaq delisting, a management shuffle, and subsequent layoffs – are clear evidence of ongoing risks to quantum technology developers and would-be quantum adopters. And Rigetti is not alone among quantum startups facing investor pressure and adopter wariness.

Can consultancies help quantum developers and hopeful adopters navigate the choppy quantum seascape? Time will tell. Presented here is a portion of Dukatz’s conversation with HPCwire.

HPCwire: Let’s start with how Accenture got into quantum. What drove it?

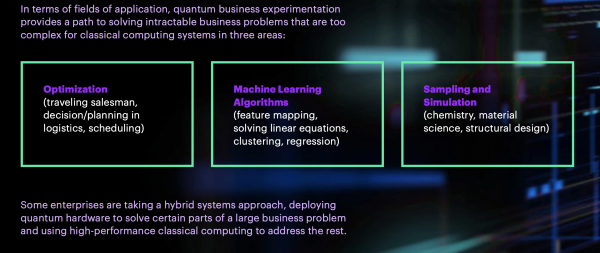

Carl Dukatz: Accenture has been investigating and working on quantum for decades, but really the practice took off about seven years ago. That was because of accessibility of not just quantum devices, but also the burst of variational algorithms that allowed for more flexible use of some of the machines. So we got in early, but not on the ground level. There are some startups that were first doing academic research and some use-case mapping for the variational algorithms. We quickly caught on to that and said, this is something that – if it proves out – can scale to our data science practice because they’re the ultimate users and implementers of the machine learning, optimization, and chemistry algorithms that would run on quantum devices as a replacement or an augmentation for high performance computing. What really kicked off our interest was when toolkits were becoming available and that translated into things that our people knew well.
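Variational algorithms are what made early devices usable: a short parameterized circuit runs on the quantum processor, and a classical optimizer adjusts its parameters based on the measured results. The sketch below simulates that loop for a single qubit in plain Python, assuming a one-parameter RY rotation as the "circuit" and the Pauli-Z expectation as the cost. No real device or quantum SDK is involved; gradients come from the standard parameter-shift rule, which evaluates the circuit at θ ± π/2 exactly as hardware would.

```python
import math

def expectation_z(theta: float) -> float:
    """<Z> for the one-qubit state RY(theta)|0>, computed from its amplitudes."""
    amp0 = math.cos(theta / 2)  # amplitude of |0>
    amp1 = math.sin(theta / 2)  # amplitude of |1>
    return amp0 ** 2 - amp1 ** 2  # equals cos(theta)

def parameter_shift_grad(theta: float) -> float:
    """Exact gradient from two shifted circuit evaluations (parameter-shift rule)."""
    return (expectation_z(theta + math.pi / 2) - expectation_z(theta - math.pi / 2)) / 2

def variational_minimize(theta: float = 0.1, lr: float = 0.4, steps: int = 100) -> float:
    """Classical gradient descent steering the (simulated) quantum cost function."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta

theta_opt = variational_minimize()
print(round(theta_opt, 4), round(expectation_z(theta_opt), 4))
```

The optimizer drives θ toward π, where the qubit sits in |1⟩ and ⟨Z⟩ reaches its minimum of −1. Real variational workloads swap in many-qubit circuits and problem-specific cost operators, but the hybrid loop has the same shape.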

From there we began building relationships and testing our own opinions on the devices as well as the algorithms themselves. We spent a lot of time mapping the algorithms and variations of those algorithms to use cases. Within the first year, I think we had 250 or so different use cases identified [and said] here are things that we want to test or that would be viable for what we call business experiments with quantum computing. This is the opportunity to take something you know, that you’re doing or is being done efficiently in the world today, but could potentially be done more efficiently using quantum. We have different levels of this idea. This is an idea that’s backed by paper. This is an idea that’s backed by code. This is an idea we have built ourselves and tested and validated with a client or internally with our experts and have a runtime for and can target to a quantum device of this size [and] it would surpass what we can do classically.

HPCwire: There’s a lot of talk about building an adequate quantum workforce. What’s your perspective?

Dukatz: We spend a lot of time on education. So, we have an internal-to-Accenture effort we call Learning Path and this allows us to take people from aware-to-converse [and then] from converse-to-job ready. We’ve had a lot of people go through already. We also contribute thinking in the consortiums we’ve joined, [such as] QED-C, etc., and QuIC (European Quantum Industry Consortium) to help enable those larger groups to think about [quantum issues] the way that we’re thinking about it. There are many educational institutions out there, helping people learn the basics of quantum and quantum physics. We’re trying to look at the workforce and say there are skilled experts in these different fields that just need to be augmented with some amount of knowledge about quantum to be effective in doing their jobs to help take the technology forward.

HPCwire: How’s the Accenture quantum practice organized? Vertical segments? What services do you offer?

Dukatz: There are a few things we offer and ways in which we approach clients. There are workshops and education, and use case identification and development. Then there is the business experiment, [which entails] running code, partnering with a software developer and also a hardware vendor to show execution runtime. We also have a program that we call Quantum Foundry. Essentially, that says you’re going to build this capability into your business so that you can test use cases and run these experiments on your own.

When we started out it was mainly education. People didn’t know what quantum was and they needed to understand it and what some of the use cases are. [That changed] about two or three years ago when quantum became much more accessible in the cloud. AWS started its Braket service and people could leverage it ubiquitously as part of their cloud environment. That changed a lot of the conversation from “what are we going to do with quantum?” to “how can you help me with quantum.”

No longer are we approaching clients and explaining how to get started. They’ve already started. They already have a team of people that’s fascinated with the topic and is moving it forward and experimenting. That’s been the transition of late. The good thing is we’ve built out examples and prototypes across all the different quantum computers [and] different industries. We have things to show, whether it is a runtime for financial services fraud detection or a prototype for housing market estimation or a chemistry simulation for molecular similarity. We actually have a great story for PFAS (Perfluoroalkyl and Polyfluoroalkyl Substances) molecular bond stretching for chemical destruction analysis.

HPCwire: What are the major practice areas? Are there like five big buckets you draw from or is it all ad hoc based on need? Which of these areas do you think will start to produce so-called quantum advantage first?

Dukatz: I can pick any industry and give you five areas that we think machine learning, optimization, or simulation could be impactful. Personally, I think machine learning is the most interesting area for potential quantum advantage soon. That’s for a couple of different reasons. One is because modeling chemistry has to be done to a very exacting precision, and (existing) noisy devices with small qubit-counts don’t push the boundary on that quite yet – although I personally think that is the most promising and amazing area and will have the largest impact on what we do computationally.

I think [reaching advantage] near-term is more likely in the machine learning space. The optimization space is already an incredibly well-researched operations area; they are trying to play a lot of catch up with quantum algorithms right now. But machine learning is still a relatively new field with tons of invention and innovation going on. There’s also opportunity with some of the hybrid models and algorithms to do something more near-term [with] qubits as an augmentation to a [classical] machine learning runtime and to show interesting results today, maybe not full quantum advantage, but interesting results.

HPCwire: Most observers now say quantum will become part of a hybrid or blended architecture with classical computer systems. Moreover, the learnings have gone in both directions, with quantum algorithms adapted to run on classical hardware, mostly GPUs, and classical algorithms adapted for use on quantum systems. Several companies, QC Ware and Terra Quantum, for example, emphasize running quantum-inspired algorithms on classical hardware. What’s your take?

Dukatz: We’ve fully embraced this quantum-inspired methodology. If we’re tackling a use case, we build it in a Python notebook first, then we build a runtime that has a UI, and an interface so that a non-quantum person can use it. Then we say, how do we make this run using quantum thinking [to determine] if this idea is good or if we would see a performance improvement. It can be as simple as [answering] a business question you didn’t model in your original assumptions. Let’s do a quantum-inspired version of this. It’s not quantum-inspired hardware; it’s quantum-inspired thinking. That’s something that we find incredibly interesting.

HPCwire: Do you think the diversity of approaches will persist or will one win out?

Dukatz: Look at the history of hardware. [Initially] there were specific systems that solved business specific groups of problems for a long time. Then we got into an area of general purpose computing and the sprawl of having more technology, diversity. Now we’re slowly moving back into more specific hardware fit-for-purpose, because more people are comfortable engineering hardware today.

I don’t think it’s a stretch to say that we’re going to continue to see more specialized devices and more people who operate a plethora of specialized devices to make greater efficiencies within their businesses. Extending that to quantum, there are a lot of people developing general-purpose quantum computers, but there are also companies building special-purpose quantum computers and trying to engineer them to solve individual problems. I don’t think that’s going to go away. I think what’s going to happen is everyone’s going to continue to explore [all options] and as soon as the advantage is found, it will be recognized and promoted, and it will uplift the entire industry.

HPCwire: Financial services is thought to be an early QC adopter. It has the resources and a history of quickly adapting any new technology to gain a competitive edge. Is that Accenture’s thinking?

Dukatz: Interestingly, I got to host a panel with some folks from the financial services sector recently, and they believe so. They think that because there is approximation inherent to some of the calculations they do now, the noise of the quantum systems and the answers that you get from them, even if they’re not perfect, can be better than or comparable at a larger scale to the activities that they now do on a daily basis. They say they’re comfortable with approximations and comfortable making business decisions based on those. That gives them a different perspective on how to use the [quantum] devices. I thought that was a pretty interesting perspective and I have to agree.

HPCwire: You’ve said the practice has really picked up in the last few years. How many clients do you have?

Dukatz: This is a sensitive topic. Historically, I’d say, between five and 10 active projects are going on with some type of exploration or use case development. It could be a workshop or ideation; there’s a spectrum of different things. One reason I’m hesitant is because the other topic that we haven’t talked about is quantum-safe security. As you know, with the new standards going into law, there’s tons of activity in that space, and it is a massive market and the wheels are starting to spin very quickly.

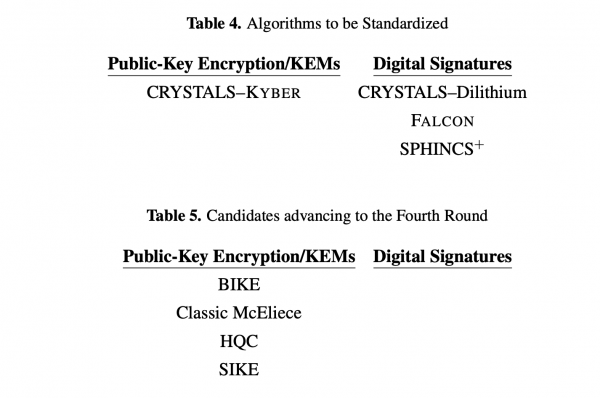

HPCwire: OK, let’s turn to that. NIST announced the first four algorithms selected for post-quantum standardization last July. It’s also coordinating efforts to build new deployment tools. What is Accenture doing in this area?

Dukatz: We have an eight-step process to help businesses get through this, from discovery to verification of cryptographic sources to decision-making on implementation tooling and remediation activities, all the way through to what we call managed service so that it’s running effectively across the business. People don’t know what’s out there and what tools can tell you how you’re doing from a legacy perspective to how you’re doing from a new quantum algorithms perspective. You need those dashboards, you need that telemetry, and more importantly, there are different ways that you can upgrade your systems to be post-quantum-safe. We think that we’ve established the right partners to enable companies to do this very, very quickly, much more quickly than they would have thought they could, at the scale that they need to do it.
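The discovery step is essentially an inventory: find every place a system relies on public-key cryptography that a large quantum computer running Shor’s algorithm is expected to break. The toy scanner below only illustrates the idea. It is not Accenture’s tooling; the pattern list, file names, and contents are hypothetical, and real discovery tools also inspect binaries, certificates, libraries, and live network traffic rather than just grepping text.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical signature list: public-key schemes considered vulnerable to Shor's algorithm.
QUANTUM_VULNERABLE = {
    "RSA": re.compile(r"\bRSA\b", re.IGNORECASE),
    "ECDSA": re.compile(r"\bECDSA\b", re.IGNORECASE),
    "ECDH": re.compile(r"\bECDHE?\b", re.IGNORECASE),
    "DSA": re.compile(r"\bDSA\b", re.IGNORECASE),
}

def scan_sources(files: Dict[str, str]) -> List[Tuple[str, int, str]]:
    """Return (path, line number, algorithm) for every line that names a flagged scheme."""
    findings = []
    for path, text in files.items():
        for lineno, line in enumerate(text.splitlines(), start=1):
            for name, pattern in QUANTUM_VULNERABLE.items():
                if pattern.search(line):
                    findings.append((path, lineno, name))
    return findings

# Hypothetical file contents standing in for a real code base.
sample = {
    "auth/keys.py": "key = generate_key('RSA', bits=2048)\nsig = sign(msg, 'ECDSA')\n",
    "net/tls.conf": "cipher = ECDHE-RSA-AES128-GCM-SHA256\nhash = SHA-256\n",
}
for finding in scan_sources(sample):
    print(finding)
```

Each finding would then feed the later steps: deciding which systems to remediate first and which tooling to use.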

HPCwire: Who are some of the partners?

Dukatz: We haven’t announced any of the partnerships specifically in the space. But you probably know some of the major providers or major people doing scanning, for example. What I can say is we focus very heavily on crypto-agility. We think that is the right path forward and that even if you select from the algorithms that are named (by NIST) today, hard-coding them into your applications is not going to be the right approach going forward. This is a new paradigm for security, and a new capability that is going to need to be offered ubiquitously is the ability to switch out the algorithms. (See HPCwire coverage: The Race to Ensure Post Quantum Security)
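Crypto-agility, as described here, means applications ask for "the current algorithm" through a level of indirection instead of hard-coding one, and every protected artifact records which algorithm produced it. The sketch below shows that pattern, assuming two standard-library hash functions as stand-ins, since NIST’s post-quantum schemes are not part of Python’s standard library. The registry, config dictionary, and function names are hypothetical.

```python
import hashlib
import hmac
from typing import Callable, Dict

# Registry of interchangeable algorithms. The hash functions are stand-ins for
# whatever (post-quantum) schemes an organization actually deploys.
DIGESTS: Dict[str, Callable[[bytes], bytes]] = {
    "sha256": lambda data: hashlib.sha256(data).digest(),
    "sha3_256": lambda data: hashlib.sha3_256(data).digest(),
}

# Policy lives in configuration, not in application code.
CONFIG = {"active_algorithm": "sha256"}

def protect(data: bytes) -> str:
    """Produce a digest tagged with the identifier of the algorithm that made it."""
    alg = CONFIG["active_algorithm"]
    return f"{alg}:{DIGESTS[alg](data).hex()}"

def verify(data: bytes, tagged: str) -> bool:
    """Check with whichever algorithm the tag names, so older records stay verifiable."""
    alg, hex_digest = tagged.split(":", 1)
    return hmac.compare_digest(DIGESTS[alg](data).hex(), hex_digest)

old_record = protect(b"invoice-42")
CONFIG["active_algorithm"] = "sha3_256"  # policy change, no code change
new_record = protect(b"invoice-42")
print(old_record.split(":")[0], new_record.split(":")[0])  # sha256 sha3_256
print(verify(b"invoice-42", old_record), verify(b"invoice-42", new_record))  # True True
```

Swapping algorithms then becomes a configuration change plus a registry entry, while data protected under the old algorithm can still be checked during the transition.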

We have multiple teams working on different aspects of it. One is tied closely to our security practice, while the other is tied closely to our analytics practice. I’m in the tech incubation group and I get to work with those practices and help launch them.

HPCwire: Do you think the threat quantum computing poses needs addressing now?

Dukatz: Yeah, all indicators tell us that Harvest-Now-Decrypt-Later is likely occurring. It is something that businesses need to be worried about today, and [don’t forget] quantum technology advances are continuing at a very predictable pace at this point. Now, there could be some discussion about the qubit quality, but qubit counts continue to increase. All in all, we see great progress across the board. Given that, and the fact that more people are exploring decryption algorithms and more people are testing new use cases, the threat is only going to get worse, right? I would be in the camp of saying that the timeline is shifting closer.
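A common way to reason about Harvest-Now-Decrypt-Later risk is Mosca’s inequality: if x (how long data must stay confidential) plus y (how long migration to quantum-safe cryptography takes) exceeds z (the time until a cryptographically relevant quantum computer exists), data harvested today is exposed. The sketch below simply computes the size of that exposure window; the inputs are hypothetical illustrations, not forecasts.

```python
def mosca_exposure(shelf_life: float, migration_time: float, time_to_crqc: float) -> float:
    """Years during which harvested data could be decrypted while still sensitive.

    Mosca's inequality flags risk when shelf_life + migration_time > time_to_crqc.
    """
    return max(0.0, float(shelf_life + migration_time - time_to_crqc))

# Hypothetical inputs in years.
print(mosca_exposure(shelf_life=10, migration_time=5, time_to_crqc=12))  # 3.0
print(mosca_exposure(shelf_life=2, migration_time=3, time_to_crqc=12))   # 0.0
```

A shrinking z, which is what "the timeline is shifting closer" implies, enlarges the window for long-lived data, which is why starting the migration (reducing y) now matters.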

HPCwire: We haven’t really touched on Accenture example engagements. I know the big consultant firms tend to be close-mouthed about specifics. Can you share a couple of examples?

Dukatz: There are a couple of ones that we have published. We worked with Biogen and 1QBit to do molecular similarity experiments. That one is a little old but it is public. We also won the BMW quantum computing challenges. There’s a financial services example, but I don’t want to say the wrong company. More recently we’ve been heavily involved in the PFAS experiments. PFAS is a selection of manmade chemicals that we’ve polluted the earth with, and they’re inside basically everything, including us, and they don’t degrade. They can cause birth defects and cancer and other horrible things, so we need to figure out a way to degrade so-called forever chemicals.

We’ve been thinking that maybe with a future quantum computer of sufficient size and scale, we can do the chemistry experiments we need to figure out how to break them down. We’ve analyzed all 4,000 different molecules and worked with the Irish Centre for High-End Computing to build a workflow that would allow us to process all of them. We ran some experiments with them, and we ran some experiments on IonQ’s processor. We also did a very large-scale experiment with Intel, AWS, and Good Chemistry. That experiment was basically one of the largest Kubernetes clusters ever run across multiple Amazon datacenters, spiking up to over 1.1 million cores in order to do some of these bond-stretching experiments. That’s another example of us taking something that we learned from quantum and pushing it back into classical technologies to see how far we could scale it.

HPCwire: Thanks for your time.

[i] Use Case: Vehicle Sensor Placement: Accenture

“Modern vehicles come with sensors to help provide safety and convenience to drivers. Vehicles need these sensors to gather data from as large a portion of their surroundings as possible, but each additional sensor adds costs. The goal of this use case was to optimize the positions of sensors to allow for maximum coverage while keeping the required number of sensors as low as possible. The Accenture team provided a holistic workflow for prototyping, from user input all the way to the final result, involving a detailed pipeline for (i) the definition of the input data, (ii) pre-processing steps, (iii) optimization of the underlying MaxCover problem and (iv) visualization of the results with an advanced sensor distribution visualization app. For the actual optimization problem, the Accenture team developed a general framework including four classes of algorithmic approaches. While classical custom algorithms delivered the best results today, the framework from the Accenture team comes with plugins for quantum methods to be elaborated in the future.” Source.