If you are a die-hard Nvidia loyalist, be ready to pay a fortune to use its AI factories in the cloud.

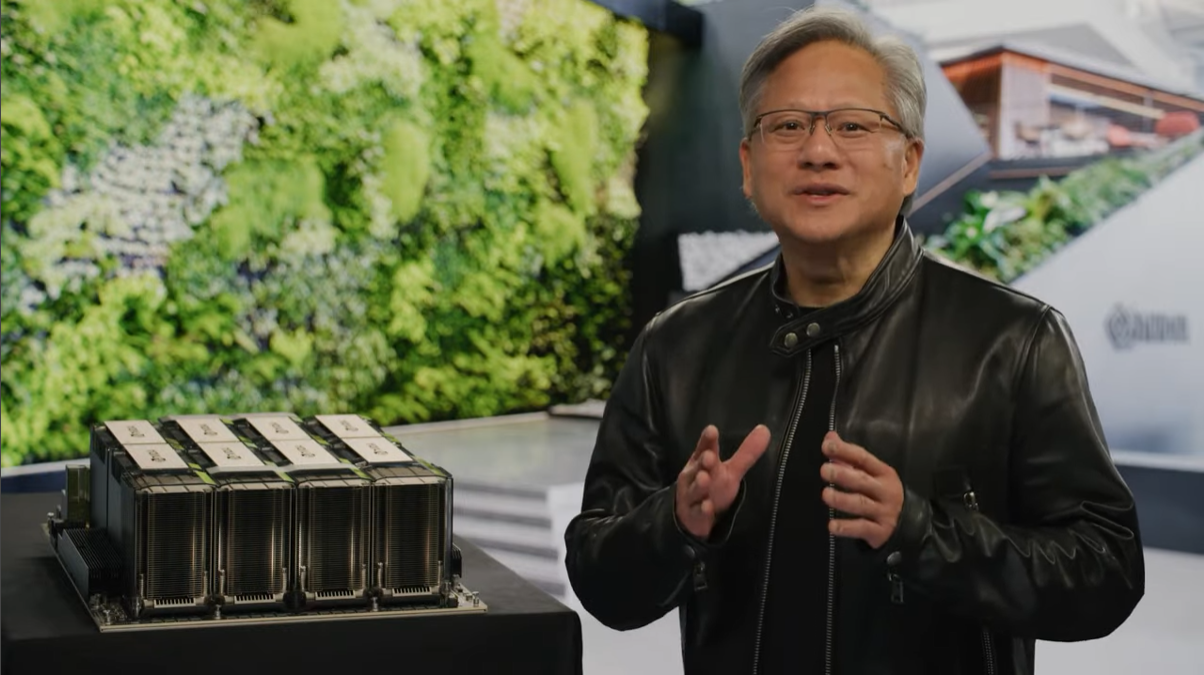

Renting the GPU company’s DGX Cloud, an all-inclusive AI supercomputer in the cloud, starts at $36,999 per instance per month.

The rental includes access to a cloud computer with eight Nvidia H100 or A100 GPUs and 640GB of GPU memory. The price also includes Nvidia AI Enterprise software for developing AI applications and large language models, with tools such as BioNeMo.

“DGX Cloud has its own pricing model, so customers pay Nvidia and they can procure it through any of the cloud marketplaces based on the location they choose to consume it at, but it’s a service that is priced by Nvidia, all inclusive,” said Manuvir Das, vice president for enterprise computing at Nvidia, during a briefing with press.

The DGX Cloud starting price is close to double the $20,000 per month that Microsoft Azure charges for a fully loaded A100 instance with 96 CPU cores, 900GB of storage and eight A100 GPUs.
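To put “close to double” in concrete terms, the short Python sketch below works through the arithmetic using only the list prices quoted in this article. It is a back-of-the-envelope comparison under stated assumptions: both configurations are treated as eight-GPU instances billed monthly, and it ignores the software, support and GPU generation (H100 or A100) differences that DGX Cloud bundles into its price.

```python
# Back-of-the-envelope comparison using the monthly list prices quoted above.
# Assumption: both offerings are eight-GPU instances billed per month; bundled
# software, support and H100-vs-A100 differences are deliberately ignored.

DGX_CLOUD_MONTHLY = 36_999   # DGX Cloud starting price per instance (USD/month)
AZURE_A100_MONTHLY = 20_000  # Azure fully loaded eight-A100 instance (USD/month)
GPUS_PER_INSTANCE = 8


def per_gpu(monthly_price: float, gpus: int = GPUS_PER_INSTANCE) -> float:
    """Return the monthly cost attributable to a single GPU."""
    return monthly_price / gpus


# How many times more the DGX Cloud starting price is than the Azure instance.
ratio = DGX_CLOUD_MONTHLY / AZURE_A100_MONTHLY

print(f"DGX Cloud per GPU:  ${per_gpu(DGX_CLOUD_MONTHLY):,.2f}/month")
print(f"Azure A100 per GPU: ${per_gpu(AZURE_A100_MONTHLY):,.2f}/month")
print(f"Price ratio: {ratio:.2f}x")
```

The ratio comes out to roughly 1.85, which is why the gap is described as close to, rather than exactly, double.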

Oracle is hosting DGX Cloud infrastructure in its RDMA Supercluster, which scales to 32,000 GPUs. Microsoft will launch DGX Cloud next quarter, with Google Cloud’s implementation coming after that.

Customers will have to pay a premium for the latest hardware, but the integration of software libraries and tools may appeal to enterprises and data scientists.

Nvidia argues it provides the best available hardware for AI. Its GPUs are a cornerstone of high-performance and scientific computing.

But using Nvidia’s proprietary hardware and software is like using an Apple iPhone: you get the best hardware, but once you are locked in, it is hard to get out, and it costs a lot of money over its lifetime.

Still, paying a premium for Nvidia’s GPUs could bring long-term benefits. For example, Microsoft is investing in Nvidia hardware and software because it expects cost savings and larger revenue opportunities from Bing with AI.

The concept of an AI factory was floated by CEO Jensen Huang, who envisioned data as raw material, with the factory turning it into usable data or a sophisticated AI model. Nvidia’s hardware and software are the main components of the AI factory.

“You just provide your job, point to your data set and you hit go and all of the orchestration and everything underneath is taken care of in DGX Cloud. Now the same model is available on infrastructure that is hosted at a variety of public clouds,” Das said.

Millions of people are using ChatGPT-style models, which require high-end AI hardware, Das said.

DGX Cloud furthers Nvidia’s goal of selling its hardware and software as a set. Nvidia is moving into the software subscription business, which has a long tail: selling more hardware generates more software revenue.

A software interface, the Base Command Platform, will allow companies to manage and monitor DGX Cloud training workloads.

Oracle Cloud has clusters of up to 512 Nvidia GPUs connected by a 200 gigabits-per-second RDMA network. The infrastructure supports multiple file systems, including Lustre, and delivers 2 terabytes per second of throughput.

Nvidia also announced that more companies have adopted its H100 GPU. Amazon is announcing its EC2 “UltraClusters” with P5 instances, which will be based on the H100.

“These instances can scale up to 20,000 GPUs using their EFA technology,” said Ian Buck, vice president of hyperscale and HPC computing at Nvidia, during the press briefing.

EFA stands for Elastic Fabric Adapter, a networking implementation orchestrated by Nitro, Amazon’s all-purpose custom chip that handles networking, security and data processing.

Meta Platforms has begun deploying H100 systems in Grand Teton, the platform for the social media company’s next AI supercomputer.