As the 2023 EuroHPC Summit opened in Gothenburg on Monday, Herbert Zeisel – chair of EuroHPC’s Governing Board – commented that the undertaking had “left its teenage years behind.” Indeed, a sense of general maturation has settled over the European Union’s supercomputing play, which has spent the four and a half years since its debut in an impressive HPC growth spurt. Now, with six supercomputers under its belt (including two in the Top500’s top five) and more on the way, EuroHPC is facing challenges befitting a supercomputing leader as it prepares to enter the exascale era.

A brief recap

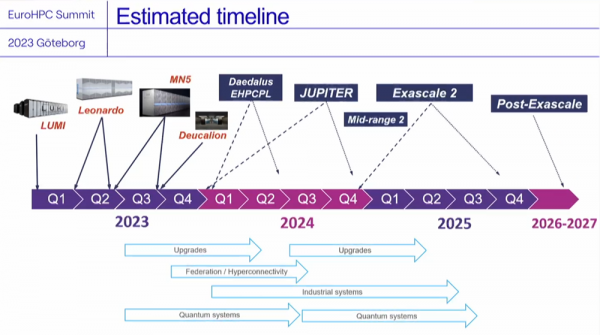

The EuroHPC Joint Undertaking (JU) took over the organization of the European Union’s supercomputing efforts from the Horizon 2020 program in September 2018. In June 2019, the new JU – backed by 28 countries – announced sites for its first eight supercomputers. Detailed over the subsequent months, this initial list included five petascale systems and three larger “pre-exascale” systems. Now, less than four years later, four of the five petascale systems and two of the three pre-exascale systems are online. Those pre-exascale systems, of course, include Finland’s LUMI supercomputer (third on the Top500) and the newest of EuroHPC’s systems, Italy’s Leonardo supercomputer (fourth on the Top500). Still in the works: Portugal’s petascale Deucalion system and Spain’s pre-exascale MareNostrum 5 system – more on those later.

Last June, the JU – which is now backed by 33 countries, with more expected to join – announced plans and sites for five new systems: four “mid-range” systems (petascale or pre-exascale) and its first exascale system, JUPITER, which will be hosted by Germany’s Jülich Supercomputing Centre (FZJ). In October 2022, the JU also selected six host sites for its first quantum computers.

Where do the systems stand?

The EuroHPC Summit didn’t offer tremendous updates or shock announcements. In general, it was a thoughtful exploration of how the JU could move itself forward in an environmentally, economically and politically sustainable manner. With that said, there were quiet roadmap updates for a variety of the JU’s undertakings.

Leonardo: While Leonardo did debut on the last Top500 list, the system hadn’t, at 174.7 Linpack petaflops, quite reached its full power yet. At the summit, it was confirmed that EuroHPC expects the system to hit 240 Linpack petaflops for the next Top500 list.

Deucalion: The lone straggler of EuroHPC’s first five petascale systems, Deucalion had been slated for delivery in late 2021. The system’s schedule has slipped a few times, but it is now being “finalized” and is expected to reach acceptance in “early autumn” of this year. The delays are, perhaps, not too surprising given the system’s oddball architecture: the Fujitsu-manufactured supercomputer is using AMD CPUs, Fujitsu’s Arm CPUs and Nvidia GPUs.

MareNostrum 5: The long-beleaguered third pre-exascale system is finally being installed in the new Barcelona Supercomputing Center (BSC) headquarters. Anders Jensen (pictured in the header), executive director of EuroHPC, confirmed that the system will be inaugurated in 2023 and that it will have a peak performance in excess of 300 petaflops, which lines up with the prior estimate we heard (314 peak petaflops, 205 Linpack petaflops).

Evangelos Floros, head of infrastructure for EuroHPC, elaborated that MareNostrum 5’s network, storage and management nodes have been installed and that BSC is targeting acceptance of the general-purpose partition in June of this year. That partition will be powered by Intel’s Sapphire Rapids CPUs and, Floros said, will constitute one of the largest CPU-only partitions in the world at 90 racks, 6,480 CPUs and 36 Linpack petaflops. The much larger main accelerated partition (Nvidia Hopper GPUs and Sapphire Rapids CPUs, 163 Linpack petaflops) is expected – like Deucalion – to debut in “early autumn” of this year. No word on the two partitions that account for the six straggler petaflops (the 205 Linpack petaflops estimated for the full system, minus the 36 and 163 above), one of which is up in the air after Intel axed its Rialto Bridge GPU plans earlier this month – the other, based on Nvidia’s Grace Superchips, is probably a safer bet.

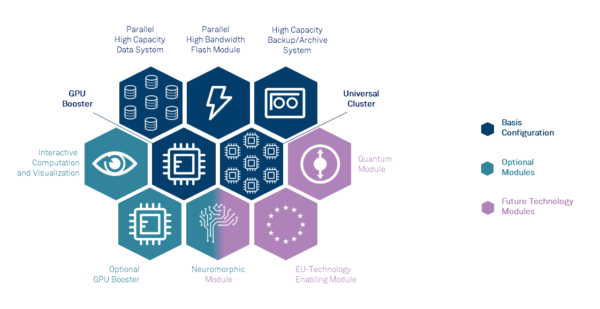

JUPITER: EuroHPC’s first exascale supercomputer was targeted for installation in 2023 when it was first announced; much like the U.S. exascale timelines, EuroHPC’s exascale timeline has slipped. Floros explained that the candidate vendors had just been narrowed down and that EuroHPC is aiming to sign the contract for JUPITER by Q4 of this year, with installation beginning in Q1 2024. The JU wants to have at least one “big partition” ready for acceptance by Q4 2024.

A couple other soft details emerged on JUPITER. EuroHPC is aiming for sustained 1 exaflops performance in its primary GPU-accelerated partition; elsewhere, FZJ director Thomas Lippert cited a general target of 1.3 peak exaflops for the system – 20× the peak of its predecessor, JUWELS. JUPITER will be hosted in a containerized datacenter – Floros said that “no concrete building” will be built for hosting JUPITER. The system will be accompanied by over an exabyte of storage. Floros also said that it’s still possible that European technologies, like Europe-built CPUs, could be “part of a potential solution” for “one of the modules” of JUPITER. Thomas Skordas, deputy director-general for communications networks, content and technology for the European Commission, said that the integration of European processors in JUPITER would likely take place next year.

The four new mid-range systems: This is a murky one. Four mid-range systems were announced alongside JUPITER: Daedalus (Greece), Levente (Hungary), CASPIr (Ireland) and EHPCPL (Poland). However, of those four, Jensen only discussed Daedalus and made reference to “four [systems] on the way” – presumably, those four are Deucalion, MareNostrum 5, JUPITER and Daedalus. Elsewhere, Floros made a reference to two mid-range systems on the way in the 2023-2025 window, each with >20-30 petaflops of performance: one in Greece (Daedalus) and one at CYFRONET (EHPCPL in Poland). As far as we saw, the Hungarian and Irish systems were not discussed, though they were included on a map shared in one of the presentations.

Vis-à-vis Daedalus: the hosting agreement has been signed and the JU is looking to move forward with procurement. To our knowledge, the performance target (>20-30 petaflops) is new information, as well.

The second exascale system and additional mid-range systems: EuroHPC has long planned an initial set of two exascale machines, with the ambition of powering a substantial portion of the second using homegrown technologies. Those plans and ambitions remain, with Floros saying that the aim is to have the second exascale system operational by 2025 and to make that system “complementary” to the first system and more reliant on European technology. Daniel Opalka, head of research and innovation for EuroHPC, said that they hope to include general-purpose processors from the European Processor Initiative (EPI) in that system.

Jensen said that disclosures on that system and additional mid-range systems could be expected in the coming months: “[We] hope to be able to announce both mid-ranges and the second European exascale system in the not-too-distant future.”

The post-exascale era: Even ahead of its first exascale system, EuroHPC is targeting a post-exascale era beginning in 2026. Details on the JU’s vision for this era were scant, save for repeated references to ever-increasing amounts of homegrown technology powering post-exascale EuroHPC systems, with “sovereign EU HPC” around 2029. Sergi Girona, operations director for BSC, at one point discussed MareNostrum 6 as a post-exascale supercomputer targeted for installation and production in the 2029-2030 range, citing the need to have a long-term achievable vision. Perhaps the most telling quote on the post-exascale era, though, came from Lippert: “Please: let’s get exascale right.”

Priorities and challenges

The pushes toward exascale capability and sovereignty should be clear from the above, but the summit also saw a variety of other issues raised. Zeisel outlined a series of challenges as the JU moves toward updating its strategic plan: faster development (“We need more speed”), user representation, considering the communities served by PRACE, establishing an experimental system and more. Two themes in particular pervaded the talks: industry and energy.

Industry: Skordas discussed plans to expand industrial access to supercomputers through EuroHPC, including a call for expression of interest in hosting and operating an industrial-grade supercomputer. That computer, he said, will be specifically designed for industrial requirements like secure access, protected data and increased usability. “Here, there is an increasing interest within the private sector to have dedicated supercomputing infrastructure for industry needs,” Skordas said, “including specialized capacities for large AI models.”

The summit included a plenary on the “appetite for industrial-grade supercomputers in the EU.” During the talks and discussions, the participants made clear that the appetite for such machines was real, but that their success depended significantly on price and security. (NCSA’s Brendan McGinty was on hand to discuss the U.S. perspective, referencing the data security-targeted Nightingale system that we just covered.)

Energy: Two of Zeisel’s priorities focused on energy and climate: first, reducing EuroHPC’s energy costs; second, reducing its carbon emissions. Throughout the summit, panelists and speakers were extremely vocal about the need to manage energy use by forthcoming systems, citing the staggering energy budgets for systems like Frontier and Fugaku and casting dire projections of future HPC energy use based on current trends.

Consensus, it seemed, was building around the importance of location. “Technical solutions, such as processors … are important,” Zeisel said. “But as the LUMI example shows to us, comprehensive system-level solutions are essential and may be the key for that.” LUMI, sited in northern Finland, is colocated with fully renewable hydropower and warms nearby houses with its waste heat. Due to its far-north location, it also requires less cooling.

Pekka Lehtovuori, director of operations for CSC – LUMI’s host – naturally agreed. “The most important [choice] is to choose to use green energy,” Lehtovuori said. “Everything after that is a plus, but can’t compensate [for] the effect of the original choices.” In his presentation, Lehtovuori argued that location mattered “many orders of magnitude more than system- and operational-level optimization.”

Tor Björn Minde, a director at the Research Institutes of Sweden (RISE), concurred – “Location is very important” – and illustrated how the same 10MW datacenter in different parts of Europe produced extraordinarily different carbon emissions. “The Finns did the right thing,” Minde said.

Of course, other strategies were proposed, since, as Lehtovuori noted, the “political choices” for supercomputer sites still led to large systems that could not sit idle for long periods of time and needed to be operated where they are installed. To that end, various speakers discussed code optimization, load shaping in response to grid prices, incentivizing users by prioritizing energy-to-solution and utilizing in-memory computing to minimize data movement. Interestingly, Floros noted that the JUPITER procurement includes options for vendors to leverage energy efficiency to move expected operations savings into the acquisition costs.

EuroHPC as a supercomputing leader

Despite the lack of major announcements, the EuroHPC Summit had an air of triumph about it. It was a well-earned victory lap: apart from the impressive system launches over the past few years, Jensen noted that the JU had doubled its staff last year and, to date, had awarded over 1.5 billion core-hours on its systems. For the first time, calls to establish Europe as a “world power in HPC” sounded a little strange – after all, hasn’t it become one?