About two years ago, the Swiss National Supercomputing Centre (CSCS), HPE and Nvidia announced plans to launch a powerful new supercomputer, Alps, in 2023 to replace Piz Daint, CSCS’ current flagship system. In the years since, CSCS has insisted that Alps is more than just a supercomputer. At a session at Nvidia’s March GTC event, Thomas Schulthess – director of CSCS – took the virtual stage to give an update on what he calls the “Alps infrastructure,” detailing some of the hardware, software and philosophy behind the new installation.

Some understandable confusion arose when CSCS revealed that installation of Alps had already begun by late 2020, seemingly at odds with the planned completion date in 2023. “I’m talking about a new system that is two years old,” Schulthess said at GTC. “So: what exactly is new?”

Alps’ hardware

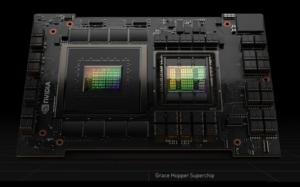

First, the new hardware. We had known for about a year that most of Alps’ computing power would come from Nvidia’s novel Grace Hopper Superchips, each of which contains an Arm-based Nvidia Grace CPU and an Nvidia Hopper GPU. In his GTC presentation, Schulthess elaborated slightly on that information: Alps will contain “about 5,000 Grace Hopper modules,” with four modules per node. He confirmed the system uses Slingshot networking.
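For a sense of scale, the two figures Schulthess gave can be combined into a rough node count. The snippet below is only back-of-the-envelope arithmetic: the "about 5,000" module figure is approximate, and the function and variable names are ours, not anything CSCS has published.

```python
# Rough node-count estimate from the figures quoted in the GTC talk.
# Both constants come from the article; the module count is approximate.
GRACE_HOPPER_MODULES = 5_000  # "about 5,000 Grace Hopper modules"
MODULES_PER_NODE = 4          # "four modules per node"


def estimated_nodes(modules: int, modules_per_node: int) -> int:
    """Return how many nodes are needed to house the modules, rounding up."""
    return -(-modules // modules_per_node)  # ceiling division on integers


gh_nodes = estimated_nodes(GRACE_HOPPER_MODULES, MODULES_PER_NODE)
print(f"Estimated Grace Hopper nodes: about {gh_nodes:,}")
```

Under those assumptions, the Grace Hopper partition would come to roughly 1,250 nodes; the real figure will depend on the final module count.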

Schulthess called the Grace Hopper Superchips the “special feature” of Alps and said that they would be scaled out in the system by the end of the year. “[The Superchip] exists,” Schulthess said. “I’ve seen it. I’ve seen engineers testing it. We are expecting to get some engineering samples ourselves. The results that I have seen look extremely promising.”

An aside on Superchips and the Venado system…

Nvidia says that Alps will be the first system to deploy the Grace Hopper Superchip. Previously, Nvidia had announced that the Venado system at Los Alamos National Laboratory (LANL) would mark the U.S. debut of the Superchip. Per a recent presentation by LANL’s Gary Grider, that system is still on track to be delivered, installed, accepted and hosting early users by Q4 of this year, with general availability in Q1 2024 – so Venado should be following hot on Alps’ heels. During that presentation, Grider also shared that Venado will be around 80% Grace Hopper Superchips and 20% Grace CPU Superchips. “This will be the largest U.S. instance of a Grace H100 solution for quite a while, we think, in the U.S.,” Grider said. “There will be a bigger one in Europe.”

…before we return to Alps.

Back to that “bigger one.” Alps also contains a CPU partition with dual AMD Epyc “Rome” CPUs on each node. It is this partition – which comprises about 1,000 nodes and delivers 3.09 Linpack petaflops – that has appeared on the Top500 since late 2020 and is operational today.

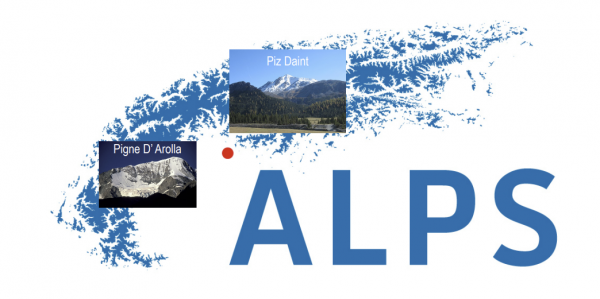

Schulthess compared the Alps hardware infrastructure to Piz Daint, which consists of roughly 5,000 nodes, each with an Intel Haswell CPU and an Nvidia P100 GPU – a rough counterpart to the 5,000 or so Grace Hopper Superchips that will power most of Alps – plus a number of dedicated Intel-powered CPU nodes (again comparable to the dedicated AMD-powered CPU nodes in Alps).

A new path in supercomputing

Alps, Schulthess explained, represents a “new path in supercomputing” – and to understand that, it’s crucial to keep talking about Piz Daint and the systems before it. Per Schulthess, the journey toward Alps started about ten years ago.

“We had another two systems – this was Monte Rosa, the flagship supercomputer at that time, and Phoenix cluster, which was the Swiss tier-two cluster for the World [Large Hadron Collider] Compute Grid [WLCG],” Schulthess recalled. Around 2014 or 2015, CSCS had the idea of moving the middleware for the WLCG workloads to Piz Daint, which was replacing Monte Rosa. Over the next several years, the center worked on making it possible to run a second software environment concurrently on Piz Daint – a difficult, manual process, as the underlying architecture was not designed for such software-defined infrastructure.

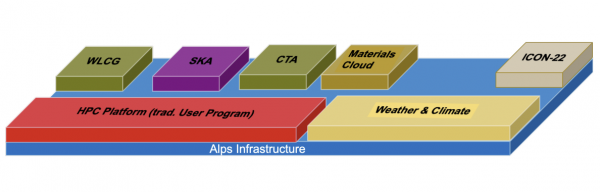

For the past four or five years, Piz Daint has been operating these two platforms – traditional HPC and the WLCG platform – at the same time: per Schulthess, both capability computing and high-throughput computing.

Alps is an expansion of that approach.

Currently, Piz Daint operates alongside a system called Pigne D’Arolla, which hosts the operational weather forecasting workloads for MeteoSwiss on the COSMO platform. Those MeteoSwiss workloads are migrating to Alps, where they will be run on the ICON-22 platform (the successor to COSMO, expected to start running this summer and serve operational forecasting needs by Q1 2024).

Schulthess said that while the two setups may look similar on paper, they are “fundamentally different” in that Piz Daint and Pigne D’Arolla are discrete, closed systems, while Alps’ various platforms are not.

“The way you have to imagine Alps is … we are building an infrastructure that combines all these different supercomputers, and so this also explains the name,” Schulthess said. “We’ve been naming our computers after mountains in the Swiss Alps, and we will continue to do that, but the infrastructure itself [is] ‘the Alps.’”

The view from the mountain

Schulthess acknowledged that this might be confusing, but affirmed that there is “real work – engineering work” happening to support that vision. Alps makes heavy use of Docker containers and the Sarus container engine to deliver its separate software environments, with FirecREST handling interface and access needs. CSCS is able to define virtual clusters for its computing clients, who can then decide whether to operate the cluster themselves or have CSCS operate it for them.

Both the HPC platform and the WLCG platform are operational on Alps today, Schulthess said, and CSCS is working on platforms for the Square Kilometre Array (SKA), the Cherenkov Telescope Array (CTA), the Materials Cloud program and the aforementioned ICON-22 weather forecasting platform. Schulthess also made frequent reference to ambitious weather and climate uses – no definite word as yet on whether those will be formally associated with the European Union’s DestinE program.

To learn more about CSCS’ software-defined approach to supercomputing, read the interview by Simone Ulmer here.