June 7, 2023 — A dataset on ‘plausible worst-case scenario’ flooding in California has received a 2023 DesignSafe Dataset award, given in recognition of the dataset’s diverse contributions to natural hazards research.

The dataset’s story begins in 2010, when the U.S. Geological Survey (USGS) developed a ‘what-if’ scenario of an extremely powerful rainstorm striking California, which it called ARkStorm (1.0), short for Atmospheric River (1k). The USGS was motivated by its sediment research, which showed that a ‘megastorm’ has recurred historically every 100-200 years.

ARkStorm 1.0 found that widespread flooding and wind damage along the Central Valley of California from a 25-day deluge of atmospheric rivers would cause at least hundreds of billions of dollars of damage to property and infrastructure, and would force the evacuation of millions of people.

ARkStorm 1.0 was meant as a stress test of emergency response systems, revealing their weak points so that steps could be taken to address them.

But one thing that ARkStorm 1.0 didn’t account for was climate change, which scientists predict will increase atmospheric water vapor and therefore could increase the intensity of megastorms.

Climate Makes ARkStorm 2.0

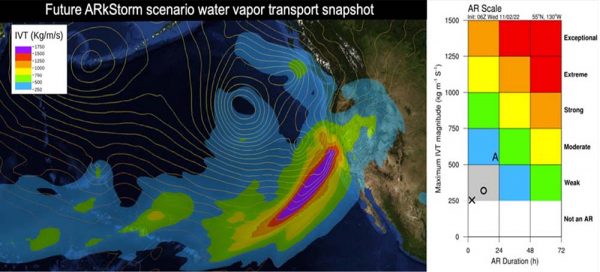

Enter ARkStorm 2.0 — an update to ARkStorm 1.0 that takes climate change into account by embedding a high-resolution weather model inside of a climate model, using the climate model conditions as the boundary conditions.

Scientists embarked on the first of three phases of ARkStorm 2.0 in 2022, the development of the atmospheric scenario. They completed a dataset that includes the initial condition, forcing, and configuration files for the Weather Research and Forecasting Model (WRF) simulations used to develop the hypothetical extreme storms.

It also includes WRF output meteorological data such as precipitation, wind speed, snow water equivalent, and surface runoff to characterize natural hazard impacts from the storm simulations.

Award-Winning Dataset

The dataset, PRJ-3499 | ARkStorm 2.0: Atmospheric Simulations Depicting Extreme Storm Scenarios Capable of Producing a California Megaflood, received a 2023 DesignSafe Dataset award, given in recognition of the dataset’s diverse contributions to natural hazards research. It is publicly available on the NHERI DesignSafe cyberinfrastructure (https://doi.org/10.17603/ds2-mzgn-cy51).

The ARkStorm 2.0 dataset team consisted of Xingying Huang of the National Center for Atmospheric Research (NCAR) and Daniel Swain of the University of California, Los Angeles (UCLA).

“The ARkStorm 2.0 dataset is curated from an impacts-based perspective,” said climate scientist Daniel Swain of UCLA’s Institute of the Environment and Sustainability. Swain is also with the National Center for Atmospheric Research’s Capacity Center for Climate and Weather Extremes and is a California Climate Fellow with The Nature Conservancy.

“We tried to include the variables that are most important for folks who might be doing follow-on analyses to understand the impacts to people, infrastructure, ecosystems of these scenarios,” Swain said.

“Practically, this dataset has been shared with the U.S. Federal Emergency Management Agency (FEMA), the California Department of Water Resources, the Governor’s Office of Emergency Services, and other entities because there is real interest in gaming this scenario out from an infrastructure and risk assessment perspective,” Swain added.

Published Results

The ARkStorm 2.0 dataset is the primary result of a study published in August 2022 in Science Advances. The study received widespread attention, including coverage by the New York Times.

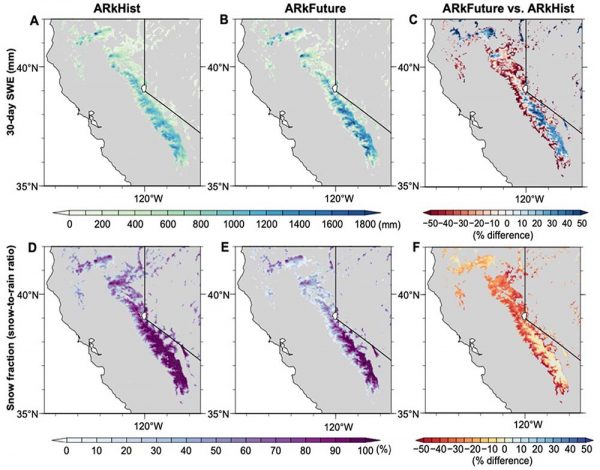

“The key findings are twofold. One is, we assess this as a scenario and study what it actually looks like. Yes, clearly, it rains a lot. But how much is a lot, how quickly, and which areas are hit hardest,” Swain said.

In this scenario and dataset, the scientists focused on hourly precipitation intensity, which yielded an interesting finding for the future scenario relative to the historical one.

“It’s not just that the future scenario is wetter overall,” Swain said. “The heaviest localized downpours also get considerably more intense. Some of the peaks of the future scenario look like the heavy Texas-style downpours. These intense hourly rainfall rates — unusual for California — can cause big problems in urban and other populated areas.”

The study also conducted a broader assessment of how flood risk changes in a warming climate, estimating the likelihood of a megastorm in the past, present, and future.

“We found that even in this era today of severe droughts and wildfires in California, the risk of a mega flood has probably doubled for the present relative to about a century ago,” Swain said.

In other words, what used to be a 200-year flood event is now more likely to happen once in 100 years, and with continued climate change it could become a once-in-50-years event.
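The return-period arithmetic behind that statement can be sketched as follows. This is a minimal illustration, not the study's method: it assumes the standard convention that a T-year event has an annual probability of 1/T and that years are independent. The function names and the 30-year horizon are hypothetical choices made for this example.

```python
def annual_probability(return_period_years: float) -> float:
    """Annual chance of an event with the given return period (assumes p = 1/T)."""
    return 1.0 / return_period_years


def probability_within(return_period_years: float, horizon_years: int) -> float:
    """Chance of at least one event within the horizon, assuming independent years."""
    p = annual_probability(return_period_years)
    return 1.0 - (1.0 - p) ** horizon_years


if __name__ == "__main__":
    horizon = 30  # illustrative planning horizon, not a figure from the study
    for period in (200, 100, 50):
        p_year = annual_probability(period)
        p_horizon = probability_within(period, horizon)
        print(
            f"{period}-year event: {p_year:.1%} per year, "
            f"{p_horizon:.1%} chance in {horizon} years"
        )
```

Under these assumptions, halving the return period doubles the annual probability, and the chance of at least one such event in a 30-year window rises from roughly 14 percent (200-year) to about 26 percent (100-year) to about 45 percent (50-year).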

“That’s a profound difference with just a few degrees of warming,” Swain said.

DesignSafe Assistance

The datasets produced by the ARkStorm 2.0 scenario are voluminous, which made DesignSafe a good choice to host and share the data, since it places no direct caps on dataset size.

“DesignSafe — it’s in the name. This is very much infrastructure, design and risk-assessment relevant. The repository is well-designed to accommodate sharing with various state and federal agencies,” Swain said.

Initially, the dataset didn’t meet DesignSafe’s strict curation criteria, and it wasn’t accepted. Fortunately, Swain and Huang reached out to DesignSafe staff, who worked with them to improve the dataset’s structure and accessibility.

“It helped to have professional data curators at DesignSafe available for things like this. With their help, we increased the value of the repository from an open science perspective, and we made it easier for scientists to engage with our data. We can just send people a direct URL where they can click on a file name and download it,” he added.

Climate Signal

Said Swain: “Climate change is here. It’s no longer a prediction about the future, but it’s an observable reality in the present. The changes that we expect to see — revealed in the data — when it comes to certain kinds of extreme events, especially temperature and precipitation related, which, of course, precipitation is most directly related to flooding — are large, because the atmospheric response to warming, following the Clausius–Clapeyron curve, is exponential in the case of moisture. This climate signal is becoming increasingly obvious, even in places like California that people more often associate with water scarcity, drought and wildfires.”
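To make the exponential moisture response concrete, here is a minimal sketch of how saturation vapor pressure grows with temperature. It is not taken from the study. It assumes a commonly used Magnus-type empirical approximation to the Clausius–Clapeyron relation, and the coefficients and function names are assumptions chosen for illustration.

```python
import math


def saturation_vapor_pressure_hpa(temp_c: float) -> float:
    """Saturation vapor pressure over water in hPa.

    Uses a Magnus-type empirical fit (assumed coefficients), with
    temperature in degrees Celsius.
    """
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def percent_increase_per_degree(temp_c: float) -> float:
    """Percent rise in saturation vapor pressure for 1 degree C of warming."""
    ratio = saturation_vapor_pressure_hpa(temp_c + 1.0) / saturation_vapor_pressure_hpa(temp_c)
    return (ratio - 1.0) * 100.0


if __name__ == "__main__":
    for temp_c in (0, 10, 20, 30):
        e_s = saturation_vapor_pressure_hpa(temp_c)
        rate = percent_increase_per_degree(temp_c)
        print(f"{temp_c:>2} C: e_s = {e_s:6.2f} hPa, +{rate:.1f}% per additional degree")
```

Under this approximation, the air's capacity to hold water vapor rises by roughly 6 to 7 percent for each degree Celsius of warming at typical surface temperatures, and those increases compound, which is why a few degrees of warming can produce a large change in extreme precipitation potential.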

About DesignSafe

DesignSafe is a comprehensive cyberinfrastructure that is part of the NSF-funded Natural Hazard Engineering Research Infrastructure (NHERI) and provides cloud-based tools to manage, analyze, understand, and publish critical data for research on the impacts of natural hazards. The capabilities within the DesignSafe infrastructure are available at no cost to all researchers working in natural hazards. The cyberinfrastructure and software development team is located at the Texas Advanced Computing Center (TACC) at The University of Texas at Austin, with a team of natural hazards researchers from the University of Texas, the Florida Institute of Technology, and Rice University comprising the senior management team. NHERI is supported by multiple grants from the National Science Foundation, including the DesignSafe Cyberinfrastructure, Award #2022469.

Source: DesignSafe