Dec. 19, 2022 — Oak Ridge National Laboratory’s next major computing achievement could open a new universe of scientific possibilities accelerated by the primal forces at the heart of matter and energy.

Frontier, the world’s first publicly revealed exascale supercomputer, kicked off a new generation of computing in May 2022 when scientists at the U.S. Department of Energy’s ORNL set a record for processing speed. As Frontier opens to full user operations, quantum computing researchers at ORNL and the DOE’s Quantum Science Center, or QSC, continue working to integrate classical computing with quantum information science. Their goal is to develop the world’s first functional quantum computer, which would use the laws of quantum mechanics to tackle challenges beyond even the fastest supercomputers in operation.

“We believe that quantum computers will be able to simulate quantum systems that are intractable to simulate with classical methods and thereby advance science that will be foundational for the future economy and national security of the U.S.,” said Nick Peters, who leads ORNL’s Quantum Information Science, or QIS, Section.

The year of that quantum milestone could be like none before — at least since 1947. That’s when scientists at Bell Labs invented the transistor, the three-legged electronic semiconductor that ultimately replaced the cumbersome vacuum tubes relied on by computers of the previous generation. The leap in technology enabled the microchip, the electronic calculator and the computing revolution that followed.

Researchers believe they could be approaching a similar pivot point that would kick-start the quantum computing revolution and transform the world again — this time with the potential for unprecedented computing horsepower and ultra-secure communications.

The DOE’s Office of Science launched the QSC, a DOE National Quantum Information Science Research Center headquartered at ORNL, in 2020 in part to help speed toward those goals. The QSC combines resources and expertise from national laboratories, universities and industry partners, including ORNL, Los Alamos National Laboratory, Fermi National Accelerator Laboratory, Purdue University and Microsoft.

Any quantum revolution won’t happen all at once.

“A lot of people anticipate we’ll have a eureka moment when quantum computing takes over high-performance computing,” said ORNL’s Travis Humble, director of the QSC. “But real scientific progress usually happens slowly and incrementally, in stages you can measure over time. We may now be inching up on that tipping point when quantum computing offers an advantage and a quantum computer surpasses the classical computers we’ve relied on for so long.

“But it won’t happen overnight, and it’s going to take a lot of long, hard work.”

The Quantum Shift

Quantum computing uses quantum bits, or qubits, to store and process quantum information. Qubits aren’t like the bits used by classical computers, which can store only one of two potential values — 0 or 1 — per bit.

A qubit can exist in more than one state at a time by using quantum superposition, which allows combinations of distinct physical values to be encoded on a single object.

“Superposition is like spinning a coin on its edge,” Peters said. “When it’s spinning, the coin is neither heads nor tails.”

A qubit stores information in a tangible degree of freedom, such as two possible frequency values. Superposition means the qubit, like the spinning coin, can exist in both frequencies at the same time. Measuring the frequency yields just one of the two values, each with a probability set by the superposition, much like a spinning coin’s likelihood of landing on heads or tails.

Each added qubit doubles the number of basis states a quantum register can hold in superposition, so the computational space grows exponentially with the number of qubits. That difference could fuel such innovations as vastly more powerful supercomputers, incredibly precise sensors and impenetrably secure communications.
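A rough count makes that exponential growth concrete. A general state of n qubits is described by 2^n complex amplitudes, which is why classical simulation becomes impractical as qubits are added. The sketch below is illustrative only: the function names are hypothetical, and the figure of 16 bytes per amplitude (two 64-bit floats) is an assumption about how a classical simulator might store the state.

```python
def amplitudes_needed(num_qubits: int) -> int:
    """Complex amplitudes in a general pure state of num_qubits qubits (2**n)."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return 2 ** num_qubits


def state_vector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory to store every amplitude classically.

    bytes_per_amplitude=16 is an assumption (one complex number held as
    two 64-bit floats); real simulators may use other layouts.
    """
    return amplitudes_needed(num_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (1, 10, 30, 50):
        gib = state_vector_bytes(n) / 2 ** 30
        print(f"{n:>2} qubits: {amplitudes_needed(n)} amplitudes, {gib:.3g} GiB")
```

Each additional qubit doubles both numbers, so a register of a few dozen qubits already strains the memory of a conventional machine under these assumptions.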

But those superpowers come with a cost. Quantum superposition lasts only as long as a qubit remains unexamined. Only a finite amount of information can be extracted from a qubit once it’s measured.

“When you measure a qubit, you destroy the quantum state and convert it to a single bit of classical information,” Peters said. “Think about the spinning coin. If you slap your hand down on the coin, it will be either heads or tails, so you get only one classical bit out of the measurement. The trick is to use qubits in the right way, such that the measurement turns into useful classical results.”
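The spinning-coin picture can be mimicked with a small classical simulation. This is a sketch, not a model of any particular hardware: a single qubit is represented by two complex amplitudes, and a measurement returns 0 or 1 with probability equal to the squared magnitude of the matching amplitude (the standard Born rule). The function names and the fixed random seed are assumptions made for illustration.

```python
import math
import random


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Probabilities of reading 0 and 1 for the state alpha|0> + beta|1>."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if norm == 0:
        raise ValueError("at least one amplitude must be nonzero")
    # Dividing by norm lets callers pass unnormalized amplitudes.
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement: the result is a single classical bit."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


if __name__ == "__main__":
    rng = random.Random(2022)  # arbitrary seed, for repeatability only
    alpha = beta = 1 / math.sqrt(2)  # equal superposition, the "spinning coin"
    outcomes = [measure(alpha, beta, rng) for _ in range(10_000)]
    print("fraction of 1s:", sum(outcomes) / len(outcomes))
```

Each call to `measure` yields only one bit, no matter how much detail the amplitudes carry. Estimating the underlying probabilities requires preparing and measuring the same state many times, as the loop does.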

Finding that trick could deliver huge payoffs. A quantum supercomputer, for example, could use the laws of quantum physics to probe fundamental questions of how matter and energy work, such as what makes certain materials act as superconductors of electricity. Questions like those have so far eluded the best efforts of scientists and existing supercomputing systems like Frontier, the first exascale supercomputer and fastest in the world, and its predecessors.

“A development like this would be such a shift as to be a new tool in the box that we theoretically could use to fix almost anything,” Humble said.

But first scientists must answer basic questions about how to make that new tool work. A true quantum computer won’t be like any computer that’s ever come before.

“The great tension right now is this tightrope between quantum computing as an exciting new field of research and these tremendous technical challenges that we’re not sure how to solve,” said Ryan Bennink, who leads ORNL’s Quantum Computational Science Group. “How do you even think about programming a quantum computer? Everything we know about programming is based on classical computers. That’s why our understanding must be evolutionary. We’re building on what others have done with quantum so far, one step at a time.”

Those steps include projects supported by ORNL’s Quantum Computing User Program, or QCUP. The program awards time on privately owned quantum processors around the country to support independent quantum study. The computers used aren’t quite what quantum computing’s advocates have in mind for the revolution.

“I wouldn’t compare the quantum computers we have now with supercomputers,” said Humble, who oversees QCUP. “These quantum computers are basically systems we experiment with to show how quantum mechanics can be used to perform simple calculations on test problems. Conventional computers can do most of these calculations easily. The researchers testing these machines are doing the best science to gain insight into how we can make quantum computing work for scientific discovery and innovation.

“For a future quantum supercomputer, we need a machine that meets a threshold of accuracy, reliability and sustainability that we just haven’t seen yet.”

Turning Down the Noise

The main obstacle to useful quantum simulations so far has been the relatively high error rate caused by noise that degrades qubit quality. Those kinds of simulations won’t be ready for prime time until scientists achieve the same level of real-world consistency and accuracy offered by standard supercomputers.

“Qubits acquire these errors just sitting there,” Bennink said. “Every time we operate the quantum computer, we introduce error. When we read out the values generated by the calculations, we introduce more error. That doesn’t mean the simulations are all wrong. We can perform some quantum simulations with an error rate of 1% per operation. But if we need to do 10,000 operations in a simulation, that’s going to be more errors than we can fix. So right now, we’re limited in the operations we can run before the amount of quantum noise renders the results useless. We need to get the error rate below a reliable threshold — preferably a tenth of a percent or lower.”
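The arithmetic behind those figures can be sketched with a deliberately simple model. It assumes every operation fails independently with the same probability, which real devices do not strictly obey, and the function names are hypothetical. Under that assumption, the expected number of errors is the error rate times the number of operations, and the chance of an error-free run is (1 - rate) raised to the number of operations.

```python
def expected_errors(error_rate: float, operations: int) -> float:
    """Average number of faulty operations, assuming independent errors."""
    return error_rate * operations


def error_free_probability(error_rate: float, operations: int) -> float:
    """Chance that no operation fails, assuming independent errors."""
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    return (1.0 - error_rate) ** operations


if __name__ == "__main__":
    operations = 10_000  # the simulation size mentioned in the quote
    for rate in (0.01, 0.001):  # 1% and a tenth of a percent per operation
        print(
            f"rate={rate:.3%}: "
            f"expected errors={expected_errors(rate, operations):.0f}, "
            f"P(no errors)={error_free_probability(rate, operations):.2e}"
        )
```

In this toy model, a 1% rate over 10,000 operations gives about 100 expected errors, while a tenth of a percent gives about 10. The error-free probability is vanishingly small in the first case and still low in the second, which suggests why lower error rates are paired with error correction rather than treated as a complete fix.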

Researchers keep inching closer, study by study. A team led by the University of Chicago’s Giulia Galli recently used an allocation from QCUP to simulate quantum spin defects in a crystal and balance the error rate to a level deemed acceptable for scientific use.

“The results were not perfect, but we were able to cut down the errors to such a point that the results became scientifically useful,” Galli said.

Another QCUP study led by Argonne National Laboratory’s Ruslan Shaydulin used quantum state tomography, which estimates the properties of a quantum state, to correct for noise in a five-qubit study and reach a 23% improvement in quantum state fidelity.

“We achieved a much larger-scale validation on this hardware with more qubits than had been done before,” Shaydulin said. “These results put us one step closer to realizing the potential of quantum computers.”

As refinements continue, researchers suggest incorporating qubits into larger supercomputing systems might act as a bridge to fully quantum systems. That doesn’t mean classical computers would go extinct.

“Ultimately, quantum computing will most likely become an essential element in high-performance computing, but it’s unlikely to replace classical computing altogether,” Peters said.

“I expect we’ll see the rise of hybrid computing, where classical systems use quantum computing as an accelerator, similar to GPUs in a supercomputer like Frontier. I’d even expect hybrid systems to be the primary way we leverage the power of quantum computers as they mature. Algorithm-optimized quantum processors could help simulate parts of problems too challenging for purely classical machines until we find a seamless way to integrate both types of computing.”

Connecting the Qubits

The next step beyond a true quantum computer would be a quantum network, and ultimately a quantum internet, of such computers that would enable communication through qubits.

Quantum information scientists at ORNL are working to establish entanglement between remote quantum objects, a process that could be used for computing or for building quantum sensor networks. Entanglement means two objects intertwine so closely that one can’t be described independently of the other, no matter how far apart they are. Combined with classical communications, entanglement enables distant users to move quantum information over a network by quantum teleportation, or the transmission of a qubit from one place to another without physical travel through space.
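A two-qubit example gives a feel for what "can’t be described independently" means. The sketch below is a classical simulation, not ORNL’s method. It builds the textbook Bell state (|00> + |11>)/sqrt(2), samples joint measurements and computes the concurrence 2|a00*a11 - a01*a10|, a standard quantity that is 0 for any two-qubit pure state that factors into independent qubits and 1 for a maximally entangled one. The function names and the seed are assumptions for illustration.

```python
import math
import random

# Amplitudes are ordered [a00, a01, a10, a11] for the two-qubit basis states.
BELL_STATE = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]
PRODUCT_STATE = [0.5, 0.5, 0.5, 0.5]  # each qubit separately in (|0> + |1>)/sqrt(2)


def sample_pair(state: list, rng: random.Random) -> tuple[int, int]:
    """Measure both qubits once; returns (first_bit, second_bit)."""
    weights = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=weights)[0]
    return index >> 1, index & 1


def concurrence(state: list) -> float:
    """Entanglement measure for a normalized two-qubit pure state (0 to 1)."""
    a00, a01, a10, a11 = state
    return 2 * abs(a00 * a11 - a01 * a10)


if __name__ == "__main__":
    rng = random.Random(7)  # arbitrary seed, for repeatability only
    pairs = [sample_pair(BELL_STATE, rng) for _ in range(1_000)]
    print("Bell outcomes always agree:", all(a == b for a, b in pairs))
    print("Bell concurrence:", concurrence(BELL_STATE))
    print("Product concurrence:", concurrence(PRODUCT_STATE))
```

Matching outcomes in a single measurement setting could also come from ordinary classical correlation, so the agreement check alone proves nothing. The nonzero concurrence is what marks the Bell state as entangled in this sketch.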

“Entanglement is often the key resource needed to carry out a desired quantum application, and it needs to be done in an error-free or nearly error-free way,” Peters said. “We tend to lose most of the qubits carrying this information as they’re transmitted. That’s a big challenge that requires us to develop quantum repeaters, which you could consider a special type of quantum computer, to correct for loss and other errors.

“Ultimately, we’ll need to develop not just new technology but new concepts to make a quantum internet a reality, but entanglement is a necessary step.”

Scientists at ORNL and the QSC have made cracking the code to that entanglement a top priority.

“We’re not committed to a single type of quantum technology,” Humble said. “We think there’s value in variety. Two approaches have emerged as leading favorites so far, but we’re open to all possibilities.”

One approach focuses on harnessing dim beams of light to connect quantum machines for secure and lightning-fast communications.

A light particle, or photon, can exist in two frequencies at a time, much as a qubit can hold more than one value at once. Photons could be used as the vessels for encoding information that could then be transmitted across hundreds or thousands of miles (from a satellite to separate ground stations, for example) at the speed of light. A cryptographic key based on quantum mechanical principles could be delivered and used to encrypt the messages for virtually unbreakable security.

“We already know how to send these light particles over long distances — think about a TV or radio signal — so now we just need to figure out how to use their inherent properties to encode them and enable networked quantum computing,” said Raphael Pooser, an ORNL quantum research scientist. “Photons are just pieces of electromagnetic field floating in space, like a pendulum swinging back and forth. That gives us good variables for computing because photons have an infinite number of possible values that would allow us to store large or small amounts of information.”

Pairing Photons

As with most aspects of quantum computing, the theory’s not easy to put into practice.

“The really difficult thing about photons is that they don’t interact naturally,” said Joe Lukens, senior director of quantum networking at Arizona State University and a frequent collaborator with ORNL. “As I’ve heard it stated simply, put two flashlights together, and you have two light beams, not a particle accelerator. The beams just fly past each other. From a computing perspective, you want your qubits to have a high degree of interaction to achieve that necessary entanglement.”

ORNL’s photonics researchers could be closing in on a way to bring that vision to life. The approach, known as frequency bin coding, focuses on using pulse shapers, which manipulate the frequencies of light waves, and phase modulators, which manipulate photonic oscillation cycles, to encode and entangle particles, imprint them on light beams and then transmit them over optical fiber.

A 2020 experiment by Lukens and fellow quantum researchers at Purdue University demonstrated the approach could be used to control frequency bin qubits in an arbitrary manner, laying the groundwork for the types of quantum operations needed in quantum networking.

“That’s the basic building block of a quantum computing network,” Lukens said. “If we put a pulse shaper and phase modulator back to back, in principle we could build any kind of quantum gate for a universal quantum computer.”

A 2021 study led by ORNL successfully used photons to share entanglement among three quantum nodes in separate buildings linked by a quantum local area network.

“Now we need to figure out how to scale up,” Lukens said. “There are still a lot of questions about the best path to a quantum network, but I think frequency-based photonics has a good shot.”

Promising Platforms

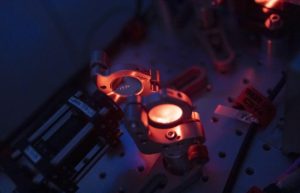

The other main focus of ORNL’s quantum simulation research is trapping and controlling ions, atoms that carry a charge after losing electrons. Each ion carries a positive charge that can be used to move the ion around in a radio-frequency trap. The quantum state of the ion can be controlled for quantum applications through such means as lasers and microwaves.

“One of the advantages of working with trapped ions is they’re natural qubits,” said Chris Seck, an ORNL quantum research scientist leading the ion-trap effort. “Each trapped ion of a specific species is identical (in the same environment), and the physics of trapping and manipulating their quantum states has been well understood for decades. That’s part of what makes this such a promising platform.”

ORNL has invested more than $3 million in its ion-trap efforts so far, mainly through the QSC.

“We’re still starting up, and as with any new effort, especially one started just before the COVID-19 pandemic, there have been growing pains,” Seck said. “We’re excited about the possibilities for further exploration.”

ORNL continues to expand its quantum efforts, which included creating the QIS Section in 2021.

“The QIS section is home to ORNL’s research groups devoted to developing the tools and techniques for quantum sensing, computing and networking,” Peters said. “The QIS staff collaborate broadly across ORNL, in the region and across the U.S.”

Researchers can’t predict which strategy might lead to that quantum watershed moment or when it might come. Industry partners of the QSC have taken up other approaches. Discoveries could lead in directions yet to be considered.

“We’re learning more every day about what works and what doesn’t,” Humble said. “It’s akin to the late 1940s of computing, when the invention of the transistor didn’t bring a digital revolution overnight. It was another decade before the invention of the microchip and even longer before we saw the rise of modern computers, cellphones and the internet. So we’re prepared for a sustained commitment to develop quantum computing and the remarkable opportunities it affords.”

The QSC, a DOE National Quantum Information Science Research Center led by ORNL, performs cutting-edge research at national laboratories, universities and industry partners to overcome key roadblocks in quantum state resilience, controllability, and ultimately the scalability of quantum technologies. QSC researchers are designing materials that enable topological quantum computing; implementing new quantum sensors to characterize topological states and detect dark matter; and designing quantum algorithms and simulations to provide a greater understanding of quantum materials, chemistry, and quantum field theories. These innovations enable the QSC to accelerate information processing, explore the previously unmeasurable, and better predict quantum performance across technologies. For more information, visit qscience.org.

UT-Battelle manages ORNL for the Department of Energy’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. The Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.

Source: ORNL