Tips for keeping workloads open and portable and maximizing GPU investments

Ask any teenager, and you can learn a lot about the importance of GPUs. When trekking across the island in Fortnite Battle Royale, outliving 99 other gamers requires a qualitative edge. Running in “epic mode” (with render scale at 100% and 1440p resolution) ups your game considerably. You can spot opponents at a distance and react that much faster.

While this virtual arms race among gamers helped push GPUs into the mainstream (gaming is now the world’s largest entertainment industry at $100B annually), the action has shifted to HPC and AI datacenters. NVIDIA reported 133% growth in its AI-fuelled datacenter business in 2018, exceeding the growth rate of its gaming business by nearly a factor of four. AI-optimized NVIDIA Tesla V100 GPUs each deliver a staggering 125 TFlops of Tensor Core deep learning performance, dwarfing the 8.1 TFlops of the PC-based GTX 1070 Ti hardware used by serious gamers. In this article, we’ll explore large-scale GPU workloads in the cloud and offer strategies to simplify management and help ensure cross-cloud compatibility for GPU workloads.

New applications for GPUs

Applications in HPC and AI, such as training deep neural networks, require a massive amount of computing power. A proof-of-concept may start small, but for production AI applications a single GPU is seldom enough. HPC and AI training jobs frequently run across many hosts, each with multiple GPUs connected via fast NVIDIA NVLink interconnects.

[Read HPCwire article “Taking the AI Training Wheels Off: From PoC to Production”]

While applications like computer vision and autonomous vehicles get much of the attention, Machine Learning (ML) has become pervasive across many industries. For example, online advertisers will constantly run real-time predictive models to determine optimal banner ad placements to maximize click-through rates (CTRs). In 2018, IBM announced a world record result in training predictive models of this type. Using IBM’s Snap ML running across four IBM Power Systems AC922 servers and 16 NVIDIA V100 Tensor Core GPUs, IBM trained a model based on a benchmark dataset of over 4 billion samples in just 91.5 seconds – 46 times faster than the previous record. Distributed GPU clusters have become mainstream in applications from computational chemistry to fraud analytics to natural language processing.

New challenges – GPU driver and CUDA version purgatory

For readers not familiar with GPU software, NVIDIA CUDA is a parallel programming environment and run-time that simplifies the development of GPU applications.

Even in the cloud, issues such as GPU hardware, host topology, OS versions, and driver versions still matter. For example, TensorFlow has dependencies that include the GPU driver version, the CUDA toolkit version, and other libraries and SDKs. Consider that each HPC and AI application has a similar set of dependencies, and you can see the complexity that results: administrators running HPC and AI applications on GPU clusters can find themselves in driver and CUDA version purgatory.
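To make the dependency problem concrete, here is a minimal Python sketch of the kind of check an administrator might script before scheduling applications onto a host. The application names, the `Requirement` type, and the version numbers are illustrative assumptions, not values taken from any vendor's compatibility matrix; real minimum driver versions should come from the relevant release notes.

```python
from dataclasses import dataclass


def parse_version(text):
    """Turn a dotted version such as '410.48' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


@dataclass
class Requirement:
    app: str            # hypothetical application name
    cuda_toolkit: str   # CUDA toolkit the application was built against
    min_driver: str     # oldest host driver assumed to work with that toolkit


def incompatible_apps(requirements, host_driver):
    """Return the apps whose minimum driver is newer than the host driver."""
    host = parse_version(host_driver)
    return [r.app for r in requirements if parse_version(r.min_driver) > host]


# Illustrative values only (assumptions for this sketch).
requirements = [
    Requirement("framework-a", cuda_toolkit="9.0", min_driver="384.81"),
    Requirement("framework-b", cuda_toolkit="10.0", min_driver="410.48"),
]

print(incompatible_apps(requirements, host_driver="396.26"))
```

With the sample data above, only `framework-b` is reported, because its assumed minimum driver is newer than the one on the host. Multiply this by every application and every host image and the "purgatory" becomes easy to picture.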

Cloud providers sometimes mask this complexity by providing GPU-powered “ML-as-a-service” offerings. While convenient, these services usually support only a single machine learning framework, are inflexible, and can lock users into a single cloud provider.

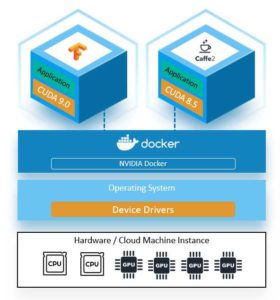

While containers help with portability generally, this isn’t always true with GPUs. Since Docker is hardware agnostic, it’s unaware of the GPUs and drivers running on an underlying host. This means that to use a GPU, libraries and drivers need to be present in each container. The requirement that library and device driver versions inside and outside the container precisely match is a big issue inhibiting container portability across GPU machine instances and cloud providers.

[Read HPCwire article “The Perils of Becoming Trapped in the Cloud”]

NVIDIA Docker to the rescue

To solve this problem and enable containerized GPU applications that are portable across machine instances and clouds, NVIDIA developed NVIDIA Docker. NVIDIA Docker provides driver agnostic CUDA images and a Docker command line wrapper that mounts user-space components of the GPU driver into the container automatically. NVIDIA Docker also transparently maps devices inside the container to devices on the underlying GPU machine instance. This means that containers running different versions of CUDA libraries can coexist and share the same machine either on-premises or in your favorite cloud provider’s infrastructure service.
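As a rough illustration of what this looks like in practice, the Python sketch below only builds (and prints) wrapper command lines for two containers that use different CUDA versions on the same host. It does not run them. The `nvidia-docker run` wrapper and the `nvidia/cuda` image are the ones NVIDIA publishes; the specific image tags and the helper name `nvidia_docker_command` are assumptions made for this example and may not match what is available in your registry.

```python
import shlex


def nvidia_docker_command(image, command, wrapper="nvidia-docker"):
    """Build (but do not run) a command line that starts `command` in `image`."""
    return [wrapper, "run", "--rm", image] + list(command)


# Assumed tags: substitute the CUDA image tags your applications need.
for tag in ("9.0-base", "10.0-base"):
    argv = nvidia_docker_command(f"nvidia/cuda:{tag}", ["nvidia-smi"])
    print(shlex.join(argv))
```

Passing either list to `subprocess.run` on a host with NVIDIA Docker installed would launch the container. Neither image needs to bundle a driver that matches the host, since the wrapper mounts the driver's user-space components at start-up.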

NVIDIA Docker allows application containers to share GPU-capable Docker hosts regardless of the CUDA library version

HPC and AI-ready containers

NVIDIA Docker has quickly become a standard for building and deploying containerized GPU applications. With NVIDIA Docker, customers and software providers can pull the container with the CUDA version required by their application, add additional components and application-level software, and derive their own ready-to-run HPC or AI application containers.

NVIDIA’s GPU Cloud (NGC) and IBM’s ibmcom/powerai repository on Docker Hub both provide containerized GPU applications based on NVIDIA Docker for disciplines that include HPC, Machine Learning, Inference, Visualization, and other applications.

By containerizing GPU-optimized workloads with NVIDIA Docker, GPU applications become portable across clouds. The next challenge is making these containerized GPU applications manageable in multi-cloud environments, and this is where workload and resource management comes in.

Managing multi-cloud HPC and AI workloads at scale

Managing HPC and AI workloads at scale is considerably more challenging than running workloads on just one or a handful of hosts. Production environments typically involve multiple users and groups, diverse hardware and operating environments, and a wide variety of workloads with different business priorities and resource requirements. Managing workloads and infrastructure efficiently requires sophisticated management tools.

IBM Spectrum Computing products are both GPU and container aware and support automated policy-based bursting across hybrid multi-cloud environments. IBM Watson Machine Learning Accelerator leverages Spectrum Computing technology to support multiple machine learning frameworks with elastic resource sharing. For users running HPC or machine learning applications, IBM Spectrum LSF provides native support for NVIDIA Docker along with sophisticated topology-aware and affinity-based scheduling that can place applications such as TensorFlow, Caffe, and other distributed frameworks optimally to maximize performance and resource use.
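To give a feel for what topology-aware placement means, the toy Python sketch below places a job on a host where all of its GPUs fit inside one group of directly interconnected GPUs (called an "island" here), choosing the tightest fit so larger islands stay free for larger jobs. This is only an illustration under simplified assumptions: the data layout, the `place_job` function, and the best-fit rule are hypothetical and are not IBM Spectrum LSF's scheduling algorithm or API.

```python
def place_job(gpus_needed, free_gpus):
    """Pick a (host, island) pair that can hold the whole job.

    free_gpus maps host name -> {island name: number of free GPUs}.
    Returns the tightest fit, or None if no single island is large enough.
    """
    candidates = [
        (count - gpus_needed, host, island)
        for host, islands in free_gpus.items()
        for island, count in islands.items()
        if count >= gpus_needed
    ]
    if not candidates:
        return None
    # min() prefers the smallest number of GPUs left over in the island.
    _, host, island = min(candidates)
    return host, island


# Hypothetical cluster state for the example.
free_gpus = {
    "host1": {"island0": 1, "island1": 2},
    "host2": {"island0": 4},
}

print(place_job(2, free_gpus))
print(place_job(3, free_gpus))
```

A two-GPU job lands on the two free GPUs of `host1`, leaving the four-GPU island on `host2` intact for the three-GPU job that follows.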

Cross-cloud GPU container portability with IBM Spectrum Computing and NVIDIA Docker

Both IBM Spectrum LSF and IBM Spectrum Symphony can connect seamlessly to multiple cloud providers including IBM Cloud, Amazon Web Services, Google Cloud Platform, and Microsoft Azure. Based on configurable policies, both workload managers can dynamically add cloud-resident machine instances using a built-in resource connector, run containerized GPU workloads in a fashion that is transparent to end users, and release cloud-resident hosts when they are no longer needed.
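The add-and-release behavior described above can be pictured with a small policy function. The Python sketch below is a deliberately simplified assumption of how such a policy might be expressed. The function name, its parameters, and the rules are hypothetical and do not represent the resource connector's actual configuration or logic.

```python
import math


def scaling_decision(pending_gpus, free_gpus, idle_cloud_hosts,
                     gpus_per_host, cloud_hosts, max_cloud_hosts):
    """Return cloud hosts to add (>0), to release (<0), or 0 for no change."""
    shortfall = pending_gpus - free_gpus
    if shortfall > 0:
        # Queued jobs need more GPUs than are free: burst, up to the cap.
        wanted = math.ceil(shortfall / gpus_per_host)
        return max(0, min(wanted, max_cloud_hosts - cloud_hosts))
    if pending_gpus == 0:
        # Nothing is waiting: hand back cloud hosts that sit idle.
        return -idle_cloud_hosts
    return 0


# Ten GPUs requested, two free, four GPUs per cloud host, cap of five hosts.
print(scaling_decision(10, 2, 0, 4, 0, 5))
# Queue is empty and two cloud hosts are idle.
print(scaling_decision(0, 8, 2, 4, 2, 5))
```

In the first call the policy asks for two more hosts to cover the eight-GPU shortfall; in the second it releases the two idle cloud hosts so they stop accruing cost.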

While gaming remains a key application for GPUs, GPUs are also helping enterprises “up their game” in other ways, with new HPC applications and AI-powered services running on distributed cloud-based GPU clusters. The combination of NVIDIA Docker and IBM Spectrum Computing helps keep your environment flexible and portable, enabling seamless cross-cloud compatibility for the full range of distributed GPU workloads.

For the latest information about the IBM Spectrum LSF family, including technical blogs and how-to videos, join the IBM Spectrum LSF online user community. This is a community where you can share your experiences, connect with your peers, and learn from others. We welcome your participation.