Cost-effective Fork of GPT-3 Released to Scientists

March 28, 2023

Researchers looking to create a foundation for a ChatGPT-style application now have an affordable way to do so. Cerebras is releasing open-source learning models for researchers with the ingredients necessary to cook up their own ChatGPT-AI applications. The open-source tools include seven models that form a learning... Read more…

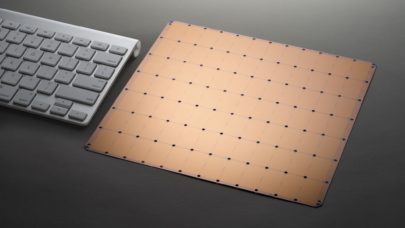

Researchers Can Get Free Access to World’s Largest Chip Supporting GPT-3

February 28, 2023

Since ChatGPT took the world by storm, companies have opened their pocketbooks to explore the tech. It also brought attention to OpenAI's GPT-3, the large language model b Read more…

Gordon Bell Special Prize Goes to LLM-Based Covid Variant Prediction

November 17, 2022

For three years running, ACM has awarded not only its long-standing Gordon Bell Prize (read more about this year’s winner here!) but also its Gordon Bell Spec Read more…

Gordon Bell Nominee Used LLMs, HPC, Cerebras CS-2 to Predict Covid Variants

November 17, 2022

Large language models (LLMs) have taken the tech world by storm over the past couple of years, dominating headlines with their ability to generate convincing hu Read more…

Cerebras Builds ‘Exascale’ AI Supercomputer

November 14, 2022

Cerebras is putting down stakes to be a player in AI cloud computing with a supercomputer called Andromeda, which achieves over an exaflops of "AI performan Read more…

Cerebras Chip Part of Project to Spot Post-exascale Technology

October 19, 2022

Cerebras Systems has secured another U.S. government win for its wafer scale engine chip – which is considered the largest chip in the world. The company's chip technology will be part of a research project sponsored by the National Nuclear Security Administration to find... Read more…

Computer History Museum Honors Cerebras Systems – Watch a Replay of the Event

August 3, 2022

When Cerebras Systems had its coming out at Hot Chips in August 2019, the hardware community wasn't sure what to think. Attendees were understandably skeptical of the novel "wafer-scale" technology, not to mention an estimated power envelope of ~15 kilowatts for the chip alone. In the intervening three years, the company... Read more…

LRZ Adds Mega AI System as It Stacks up on Future Computing Systems

May 25, 2022

The battle among high-performance computing hubs to stack up on cutting-edge computers for quicker time to science is getting steamy as new chip technologies become mainstream. A European supercomputing hub near Munich, called the Leibniz Supercomputing Centre, is deploying Cerebras Systems' CS-2 AI system as part of an internal initiative called Future Computing to assess alternative computing... Read more…




© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication
