ExaFLOPS; You Keep Using That Word

October 12, 2023

A recent article on Tom's Hardware began with the headline "China Wants 300 ExaFLOPS of Compute Power by 2025." Intrigued, I read further and found the following… Read more…

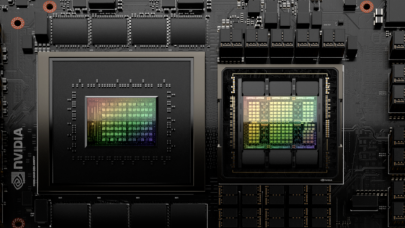

Nvidia Adds Faster HBM3e Memory to the GH200 Grace Hopper Platform

August 9, 2023

Nvidia Grace-Hopper offers a tightly integrated CPU + GPU solution for what is becoming a generative AI dominated market. To increase performance, the graphics Read more…

What’s New in HPC Research: September (Part 1)

September 18, 2018

In this new bimonthly feature, HPCwire will highlight newly published research in the high-performance computing community and related domains. From exascale Read more…

Evolving Exascale Applications Via Graphs

April 29, 2014

There is little point to building expensive exaflop-class computing machines if applications are not available to exploit the tremendous scale and parallelism. Read more…

Experts Discuss the Future of Supercomputers

January 29, 2013

Noted HPC pioneers weigh in on the coming class of exascale systems. Read more…

Petaflop In a Box

June 6, 2012

As we move down the road toward exascale computing and engage in discussion of zettascale, one issue becomes increasingly obvious: we are leaving a large part of the HPC community behind. But it needn't be so. If we developed compact, power efficient petascale computers, not only could we help broaden the base of high-end users, but we could also provide a foundation for future bleeding-edge supercomputers. Read more…

Preoccupied with Exascale

March 31, 2011

Is the HPC community too focused on the 10-year milestone? Read more…

Compilers and More: Expose, Express, Exploit

March 28, 2011

In Michael Wolfe's second column on programming for exascale systems, he underscores the importance of exposing parallelism at all levels of design, either explicitly in the program, or implicitly within the compiler. Wolfe calls on developers to express this parallelism, in a language and in the generated code, and to exploit the parallelism, efficiently and effectively, at runtime on the target machine. He reminds the community that the only reason to pursue parallelism is for higher performance. Read more…

Whitepaper

Transforming Industrial and Automotive Manufacturing

Expansion of digital infrastructure capacity is now inevitable. At the same time, awareness of climate change is rising, making sustainability a mandatory part of how organizations operate. As AI and HPC workloads continue to surge, so does energy consumption, raising environmental concerns. IT departments have a crucial role in meeting this challenge: they can drive sustainable practices by influencing the adoption of newer technologies and processes that help mitigate the effects of climate change.

While buying more sustainable IT solutions is one option, partnering with IT solution providers, such as Lenovo and Intel, that are committed to sustainability and that help customers execute their sustainability strategies is likely to have a greater impact.

Learn how Lenovo and Intel, through their partnership, are strongly positioned to address this need with their innovations driving energy efficiency and environmental stewardship.

Sponsored by Lenovo

Whitepaper

How Direct Liquid Cooling Improves Data Center Energy Efficiency

Data centers are facing rising power consumption, space constraints and cooling demands because of the unprecedented computing power required by today’s chips and servers. HVAC cooling systems consume approximately 40% of a data center’s electricity. These systems traditionally use air conditioning, air handling and fans to cool the facility and its IT equipment, resulting in high energy consumption and high carbon emissions. Data centers are moving to direct liquid cooled (DLC) systems to improve cooling efficiency, thereby lowering their PUE, operating expenses (OPEX) and carbon footprint.

This paper describes how CoolIT Systems (CoolIT) meets the need for improved energy efficiency in data centers and includes case studies showing how CoolIT’s DLC solutions improve energy efficiency, increase rack density, lower OPEX, and enable sustainability programs. CoolIT is the global market and innovation leader in scalable DLC solutions for the world’s most demanding computing environments. CoolIT’s end-to-end solutions meet the rising demand for both cooling capacity and energy efficiency.

Sponsored by CoolIT

© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication

HPCwire is a registered trademark of Tabor Communications, Inc. Use of this site is governed by our Terms of Use and Privacy Policy.

Reproduction in whole or in part in any form or medium without express written permission of Tabor Communications, Inc. is prohibited.