STEM-Trek NRG@SC23 Workshop: Inspiring and Enlightening!

December 18, 2023

STEM-Trek, a nonprofit that supports scholarly travel, mentoring, and advanced skills training for science, technology, engineering, and mathematics, hosted a p Read more…

Intel’s PSG Spin-off Hints at a Detached Future

October 9, 2023

Intel has been clear about its intent to be a manufacturing-first company, but the big question was: how would it get there? Especially when the cost of new fac Read more…

The New Velocity Bench for GPU Offload Performance Data

July 9, 2023

Performance benchmarking is the hallmark of HPC. One need look no further than the Top500 list, which has been recording HPC performance since 1993. Historica Read more…

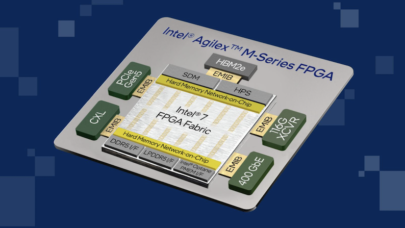

FPGA Development Brings AMD and Intel Competition to the Forefront

June 28, 2023

AMD has maintained steady progress in the Field Programmable Gate Array (FPGA) market, gaining market share where Intel has faltered. While Intel is plannin Read more…

Intel’s New Programmable Chips Next Year to Replace Aging Products

September 27, 2022

Intel shared its latest roadmap of programmable chips, and doesn't want to dig itself into a hole by following AMD's strategy in the area. "We're thankfully not matching their strategy," said Shannon Poulin, corporate vice president for the datacenter and AI group at Intel, in response to a question posed by HPCwire during a press briefing. The updated roadmap pieces together Intel's strategy for FPGAs... Read more…

AMD/Xilinx Takes Aim at Nvidia with Improved VCK5000 Inferencing Card

March 8, 2022

AMD/Xilinx has released an improved version of its VCK5000 AI inferencing card along with a series of competitive benchmarks aimed directly at Nvidia’s GPU line. AMD says the new VCK5000 has 3x better performance than earlier versions and delivers 2x TCO over Nvidia T4. AMD also showed favorable benchmarks against several Nvidia GPUs, claiming its VCK5000 achieved... Read more…

Xilinx Targets HPC and Datacenter with New Alveo U55C FPGA-Card

November 15, 2021

At SC21 today, Xilinx launched its most powerful FPGA-based accelerator card – the Alveo U55C – specifically targeting HPC workloads and the datacenter. FPGAs (field programmable gate arrays) have a long productive history as customized accelerator chips used in many embedded applications. It’s only in the last few years that FPGA suppliers have begun... Read more…

Xilinx Expands Versal Chip Family With 7 New Versal AI Edge Chips

June 10, 2021

FPGA chip vendor Xilinx has been busy over the last several years cranking out its Versal AI Core, Versal Premium and Versal Prime chip families to fill customer compute needs in the cloud, datacenters, networks and more. Now Xilinx is expanding its reach to the booming edge... Read more…

Whitepaper

Transforming Industrial and Automotive Manufacturing

Expansion in digital infrastructure capacity is inevitable. At the same time, awareness of climate change is rising, making sustainability a mandatory part of how organizations operate. As computing workloads such as AI and HPC continue to surge, so does energy consumption, raising environmental concerns. IT departments have a crucial role in meeting this challenge: they can drive sustainable practices by influencing the adoption of newer technologies and processes that help mitigate the effects of climate change.

While buying more sustainable IT solutions is an option, partnering with IT solutions providers such as Lenovo and Intel, who are committed to sustainability and to helping customers execute sustainability strategies, is likely to be more impactful.

Learn how Lenovo and Intel, through their partnership, are strongly positioned to address this need with their innovations driving energy efficiency and environmental stewardship.

Sponsored by Lenovo

Whitepaper

How Direct Liquid Cooling Improves Data Center Energy Efficiency

Data centers are experiencing increasing power consumption, space constraints and cooling demands due to the unprecedented computing power required by today’s chips and servers. HVAC cooling systems consume approximately 40% of a data center’s electricity. These systems traditionally use air conditioning, air handling and fans to cool the data center facility and IT equipment, resulting in high energy consumption and high carbon emissions. Data centers are moving to direct liquid cooled (DLC) systems to improve cooling efficiency, thus lowering their power usage effectiveness (PUE), operating expenses (OPEX) and carbon footprint.

This paper describes how CoolIT Systems (CoolIT) meets the need for improved energy efficiency in data centers and includes case studies that show how CoolIT’s DLC solutions improve energy efficiency, increase rack density, lower OPEX, and enable sustainability programs. CoolIT is the global market and innovation leader in scalable DLC solutions for the world’s most demanding computing environments. CoolIT’s end-to-end solutions meet the rising demand in cooling and the rising demand for energy efficiency.

Sponsored by CoolIT

© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication

HPCwire is a registered trademark of Tabor Communications, Inc. Use of this site is governed by our Terms of Use and Privacy Policy.

Reproduction in whole or in part in any form or medium without express written permission of Tabor Communications, Inc. is prohibited.