Google’s DeepMind Has a Long-term Goal of Artificial General Intelligence

September 14, 2022

When DeepMind, an Alphabet subsidiary, started off more than a decade ago, solving some of the most pressing research questions and problems with AI wasn’t at the top of the company’s mind. Instead, the company started its AI research with computer games. Every score and win was a measuring stick of success... Read more…

Using Exascale Supercomputers to Make Clean Fusion Energy Possible

September 2, 2022

Fusion, the nuclear reaction that powers the Sun and the stars, has incredible potential as a source of safe, carbon-free and essentially limitless energy. But Read more…

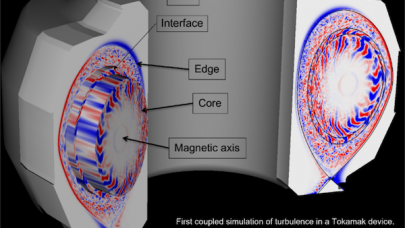

Fusion Plasma Simulation Software Couples Tokamak Core to Edge Physics

July 15, 2022

The development of a whole device model (WDM) for a fusion reactor is critical for the science of magnetically confined fusion plasmas. In the next decade, the Read more…

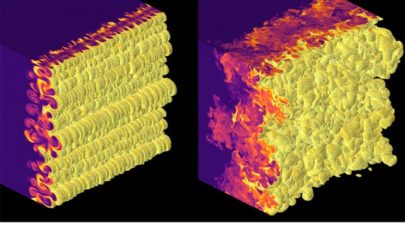

Supercomputer Research Investigates Fusion Instabilities

May 26, 2021

Inertial confinement fusion (ICF) is a speculative method of fusion energy generation that would compress a fuel pellet to generate fusion energy ju Read more…

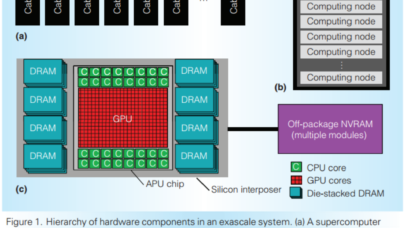

AMD’s Exascale Strategy Hinges on Heterogeneity

July 29, 2015

In a recent IEEE Micro article, a team of engineers and computer scientists from chipmaker Advanced Micro Devices (AMD) details AMD's vision for exascale computing, which in its most essential form combines CPU-GPU integration with hardware and software support to facilitate the running of scientific workloads on exascale-class systems. Read more…

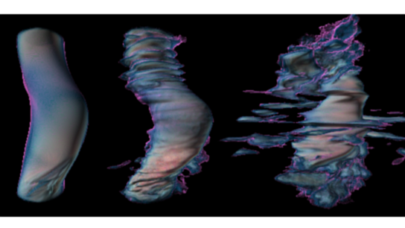

Supercomputing Propels Fusion Science

September 29, 2014

University of Texas at Austin physicist Wendell Horton has been using the resources of the Texas Advanced Computing Center (TACC) to study the full 3D structure Read more…

One Step Closer to Fusion Energy

August 27, 2013

Fusion science, which seeks to recreate the energy of the stars for use on Earth, has long been the holy grail of energy researchers. A recent experiment at Lawrence Livermore's National Ignition Facility puts fusion energy one step closer. Read more…

Sequoia Goes Core-AZY

March 20, 2013

LLNL researchers have successfully harnessed all 1,572,864 of Sequoia's cores for one impressive simulation. Read more…

Whitepaper

Transforming Industrial and Automotive Manufacturing

In this era, expansion in digital infrastructure capacity is inevitable. Parallel to this, climate change consciousness is also rising, making sustainability a mandatory part of the organization’s functioning. As computing workloads such as AI and HPC continue to surge, so does the energy consumption, posing environmental woes. IT departments within organizations have a crucial role in combating this challenge. They can significantly drive sustainable practices by influencing newer technologies and process adoption that aid in mitigating the effects of climate change.

While buying more sustainable IT solutions is an option, partnering with IT solutions providers such as Lenovo and Intel, who are committed to sustainability and to helping customers execute sustainability strategies, is likely to be more impactful.

Learn how Lenovo and Intel, through their partnership, are strongly positioned to address this need with their innovations driving energy efficiency and environmental stewardship.

Sponsored by Lenovo

Whitepaper

How Direct Liquid Cooling Improves Data Center Energy Efficiency

Data centers are experiencing increasing power consumption, space constraints and cooling demands due to the unprecedented computing power required by today’s chips and servers. HVAC cooling systems consume approximately 40% of a data center’s electricity. These systems traditionally use air conditioning, air handling and fans to cool the data center facility and IT equipment, ultimately resulting in high energy consumption and high carbon emissions. Data centers are moving to direct liquid cooled (DLC) systems to improve cooling efficiency, thus lowering their power usage effectiveness (PUE), operating expenses (OPEX) and carbon footprint.

This paper describes how CoolIT Systems (CoolIT) meets the need for improved energy efficiency in data centers and includes case studies that show how CoolIT’s DLC solutions improve energy efficiency, increase rack density, lower OPEX, and enable sustainability programs. CoolIT is the global market and innovation leader in scalable DLC solutions for the world’s most demanding computing environments. CoolIT’s end-to-end solutions meet the rising demand in cooling and the rising demand for energy efficiency.

Sponsored by CoolIT

© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication

HPCwire is a registered trademark of Tabor Communications, Inc. Use of this site is governed by our Terms of Use and Privacy Policy.

Reproduction in whole or in part in any form or medium without express written permission of Tabor Communications, Inc. is prohibited.