More Cores, Higher Prices: Intel’s Server Revenue Expected to Increase

November 3, 2023

Intel's data center business is recovering, showing healthy margins despite growing competitive pressure. The healthy margins result from higher average se Read more…

Intel Plans Falcon Shores 2 GPU Supercomputing Chip for 2026

August 8, 2023

Intel is planning to onboard a new version of the Falcon Shores chip in 2026, which is code-named Falcon Shores 2. The new product was announced by CEO Pat Gel Read more…

Cerebras Has Big Plans for Big AI Chips: Build Your Own Cloud

July 20, 2023

Hyping an AI chip is one thing, but proving its usability in the commercial market is a bigger challenge. Some AI chip companies -- which are still prov Read more…

Intel Admits GPU Mistakes, Reveals New Supercomputing Chip Roadmap

May 22, 2023

Intel has finally provided specific details on wholesale changes it has made to its supercomputing chip roadmap after an abrupt reversal of an ambitious plan to Read more…

Intel’s Habana Labs Takes on Prominent Role as Generative AI Surges

May 9, 2023

Intel acquired AI chipmaker Habana Labs just four years ago; now, the division is serving – per Habana COO Eitan Medina – as “effectively the center of ex Read more…

Intel Issues Roadmap Update, Aims for ‘Scheduled Predictability’

March 30, 2023

Intel held an investor webinar yesterday, with the chip giant working to project consistency and confidence amid slipping roadmaps and market share. At the even Read more…

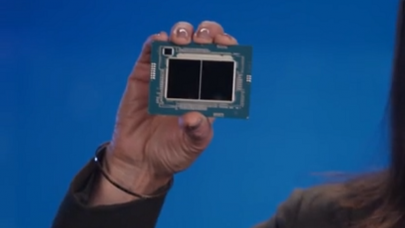

Intel’s Server Chips Are ‘Lead Vehicles’ for Manufacturing Strategy

March 30, 2023

…But chipmaker still does not have an integrated product strategy, which puts the company behind AMD and Nvidia. Intel finally has a full complement of server and PC chips it will release in the coming years, which will determine whether it has regained its leadership in chip manufacturing. The chipmaker this week... Read more…

Intel’s Gaudi3 AI Chip Survives Axe, Successor May Combine with GPUs

February 1, 2023

Intel is paring projects and products amid financial struggles, but AI products are taking on a major role as the company tweaks its chip roadmap to account for Read more…


Whitepaper

How Direct Liquid Cooling Improves Data Center Energy Efficiency

Data centers are experiencing increasing power consumption, space constraints and cooling demands due to the unprecedented computing power required by today’s chips and servers. HVAC cooling systems consume approximately 40% of a data center’s electricity. These systems traditionally use air conditioning, air handling and fans to cool the data center facility and IT equipment, which results in high energy consumption and high carbon emissions. Data centers are moving to direct liquid-cooled (DLC) systems to improve cooling efficiency, thereby lowering their power usage effectiveness (PUE), operating expenses (OPEX) and carbon footprint.

This paper describes how CoolIT Systems (CoolIT) meets the need for improved energy efficiency in data centers and includes case studies that show how CoolIT’s DLC solutions improve energy efficiency, increase rack density, lower OPEX, and enable sustainability programs. CoolIT is the global market and innovation leader in scalable DLC solutions for the world’s most demanding computing environments. CoolIT’s end-to-end solutions meet the rising demand in cooling and the rising demand for energy efficiency.


Sponsored by CoolIT

Whitepaper

Transforming Industrial and Automotive Manufacturing

Divergent Technologies developed a digital production system that can revolutionize automotive and industrial-scale manufacturing. Divergent uses new manufacturing solutions and its Divergent Adaptive Production System (DAPS™) software to make vehicle manufacturing more efficient and less costly, and to decrease manufacturing waste, by replacing existing design and production processes.

Divergent initially used on-premises workstations to run HPC simulations but faced challenges because their workstations could not achieve fast enough simulation times. Divergent also needed to free staff from managing the HPC system, CAE integration and IT update tasks.


Sponsored by TotalCAE


© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication

HPCwire is a registered trademark of Tabor Communications, Inc. Use of this site is governed by our Terms of Use and Privacy Policy.

Reproduction in whole or in part in any form or medium without express written permission of Tabor Communications, Inc. is prohibited.