Google Cloud Waives Cloud Exit Fees, Throws Down Gauntlet to AWS and Azure

January 17, 2024

In a bold move by the number three public cloud company yesterday, Google Cloud announced it will no longer charge customers extra to move their data out of its Read more…

Finding Opportunity in the High-Growth ‘AI Market’

December 6, 2023

“What’s the size of the AI market?” It’s a totally normal question for anyone to ask me. After all, I’m an analyst, and my company, Intersect360 Research, specializes in scalable, high-performance datacenter segments, such as AI, HPC, and Hyperscale. Read more…

Grace Hopper’s Big Debut in AWS Cloud While Graviton4 Launches

November 29, 2023

Editor’s Note: Additional coverage of the AWS-Nvidia 65 Exaflop ‘Ultra-Cluster’ and Graviton4 can be found on our sister site Datanami. Amazon Web Service Read more…

Google AI Supercomputer Shows the Potential of Optical Interconnects

April 10, 2023

There are limits on how fast copper wires can move data between computers, and a transition to light speed will ultimately drive AI and high-performance computing forward. Every major chipmaker agrees that optical interconnects will be needed to reach zettascale computing in an energy-efficient way. That opinion was... Read more…

DGX Cloud Is Here: Nvidia’s AI Factory Services Start at $37,000

March 21, 2023

If you are a die-hard Nvidia loyalist, be ready to pay a fortune to use its AI factories in the cloud. Renting the GPU company's DGX Cloud, which is an all-inclusive AI supercomputer in the cloud, starts at $36,999 per instance for a month. The rental includes access to a cloud computer with eight Nvidia H100 or A100 GPUs and 640GB... Read more…

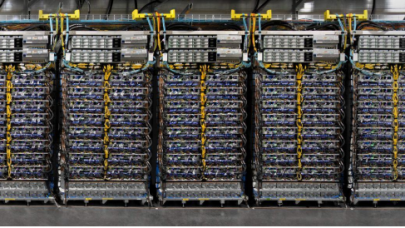

Google Cloud’s New TPU v4 ML Hub Packs 9 Exaflops of AI

May 16, 2022

Almost exactly a year ago, Google launched its Tensor Processing Unit (TPU) v4 chips at Google I/O 2021, promising twice the performance compared to the TPU v3. At the time, Google CEO Sundar Pichai said that Google’s datacenters would “soon have dozens of TPU v4 Pods, many of which will be... Read more…

Big Three Cloud Providers Halt New Business in Russia

March 10, 2022

Add Amazon Web Services to the growing list of companies (tech and otherwise) that are curtailing business with Russia in opposition to President Putin’s invasion of Ukraine. As reported in the New York Times and then by Amazon itself, Amazon Web Services is blocking new sign-ups from Russia and Belarus. Existing customers are not impacted. “We’ve suspended shipment of retail... Read more…

Q&A with Google’s Bill Magro, an HPCwire Person to Watch in 2021

June 11, 2021

Last fall, Bill Magro joined Google as CTO of HPC, a newly created position, after two decades at Intel, where he was responsible for the company's HPC strategy. Read more…

Whitepaper

Transforming Industrial and Automotive Manufacturing

Expansion in digital infrastructure capacity is inevitable. At the same time, awareness of climate change is rising, making sustainability a mandatory part of how organizations operate. As computing workloads such as AI and HPC continue to surge, so does energy consumption, and with it the environmental impact. IT departments have a crucial role in meeting this challenge: they can drive sustainable practices by influencing the adoption of newer technologies and processes that help mitigate the effects of climate change.

While buying more sustainable IT solutions is an option, partnering with IT solutions providers, such as Lenovo and Intel, that are committed to sustainability and to helping customers execute sustainability strategies is likely to be more impactful.

Learn how Lenovo and Intel, through their partnership, are strongly positioned to address this need with their innovations driving energy efficiency and environmental stewardship.

Sponsored by Lenovo

Whitepaper

How Direct Liquid Cooling Improves Data Center Energy Efficiency

Data centers are experiencing increasing power consumption, space constraints and cooling demands due to the unprecedented computing power required by today’s chips and servers. HVAC cooling systems consume approximately 40% of a data center’s electricity. These systems traditionally use air conditioning, air handling and fans to cool the data center facility and IT equipment, resulting in high energy consumption and high carbon emissions. Data centers are moving to direct liquid-cooled (DLC) systems to improve cooling efficiency, thus lowering their PUE, operating expenses (OPEX) and carbon footprint.

This paper describes how CoolIT Systems (CoolIT) meets the need for improved energy efficiency in data centers and includes case studies that show how CoolIT’s DLC solutions improve energy efficiency, increase rack density, lower OPEX, and enable sustainability programs. CoolIT is the global market and innovation leader in scalable DLC solutions for the world’s most demanding computing environments. CoolIT’s end-to-end solutions meet the rising demand in cooling and the rising demand for energy efficiency.

Sponsored by CoolIT

© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication

HPCwire is a registered trademark of Tabor Communications, Inc. Use of this site is governed by our Terms of Use and Privacy Policy.

Reproduction in whole or in part in any form or medium without express written permission of Tabor Communications, Inc. is prohibited.