Tesla Bulks Up Its GPU-Powered AI Super – Is Dojo Next?

August 16, 2022

Tesla has revealed that its biggest in-house AI supercomputer – which we wrote about last year – now has a total of 7,360 A100 GPUs, a nearly 28 percent uplift from its previous total of 5,760 GPUs. That’s enough GPU oomph for a top seven spot on the Top500, although the tech company best known for its electric vehicles has not publicly benchmarked the system. If it had, it would... Read more…

Enter Dojo: Tesla Reveals Design for Modular Supercomputer & D1 Chip

August 20, 2021

Two months ago, Tesla revealed a massive GPU cluster that it said was “roughly the number five supercomputer in the world,” and which was just a precursor to Tesla’s real supercomputing moonshot: the long-rumored, little-detailed Dojo system. Read more…

Ahead of ‘Dojo,’ Tesla Reveals Its Massive Precursor Supercomputer

June 22, 2021

In spring 2019, Tesla made cryptic reference to a project called Dojo, a “super-powerful training computer” for video data processing. Then, in summer 2020, Tesla CEO Elon Musk tweeted: “Tesla is developing a [neural network] training computer... Read more…

Industry Veteran Jim Keller Joins Tenstorrent as President and CTO

January 6, 2021

Jim Keller has already had a storied career. Over the past few decades, Keller (pictured above) has worked everywhere from AMD to Tesla, helping to develop new... Read more…

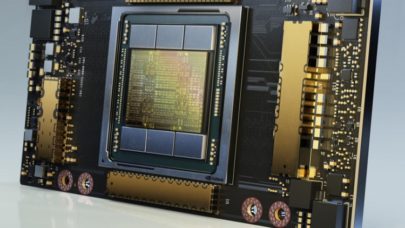

Nvidia’s Ampere A100 GPU: Up to 2.5X the HPC, 20X the AI

May 14, 2020

Nvidia's first Ampere-based graphics card, the A100 GPU, packs a whopping 54 billion transistors on 826 mm² of silicon, making it the world's largest seven-nanom... Read more…

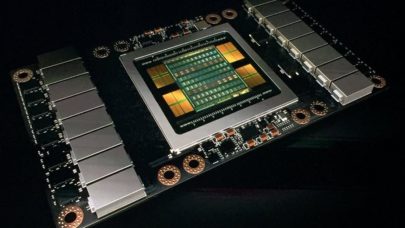

Nvidia’s Mammoth Volta GPU Aims High for AI, HPC

May 10, 2017

At Nvidia's GPU Technology Conference (GTC17) in San Jose, Calif., this morning, CEO Jensen Huang announced the company's much-anticipated Volta architecture a... Read more…

Nvidia Launches Pascal GPUs for Deep Learning Inferencing

September 12, 2016

Already entrenched in the deep learning community for neural net training, Nvidia wants to secure its place as the go-to chipmaker for datacenter inferencing. At the GPU Technology Conference (GTC) in Beijing Tuesday, Nvidia CEO Jen-Hsun Huang unveiled the latest additions to its Tesla accelerator line, the Pascal-based P4 and P40 GPUs, as well as new software, all aimed at improving performance for the inferencing workloads that undergird applications like voice-activated assistants, spam filters, and recommendation engines. Read more…

NVIDIA Unleashes Monster Pascal GPU Card at GTC16

April 5, 2016

Tuesday at the seventh-annual GPU Technology Conference (GTC) in San Jose, Calif., NVIDIA revealed its first Pascal-architecture based GPU card, the P100, calling it "the most advanced accelerator ever built." The P100 is based on the NVIDIA Pascal GP100 GPU... Read more…

© 2024 HPCwire. All Rights Reserved. A Tabor Communications Publication
