On Wednesday, the National Science Foundation (NSF) announced the award recipients for two highly coveted petascale supercomputers. The NSF selected the University of Illinois at Urbana-Champaign (UIUC) for the “Track 1” grant, while the University of Tennessee was selected for “Track 2.” The Track 1 system represents a multi-petaflop supercomputer; Track 2 represents a smaller system that’s expected to come in at just shy of a petaflop. The National Science Board met on Monday to approve the funding for the two supercomputers.

Specific information about the machines was not revealed and will not be forthcoming until the award process is completed — probably sometime in the fall.

UIUC is slated to receive $208 million over four and a half years to acquire and deploy the multi-petaflop machine, code named “Blue Waters.” It will be operated by the National Center for Supercomputing Applications (NCSA) and its academic and industry partners in the Great Lakes Consortium for Petascale Computation. The system is expected to go online in 2011.

The sub-petaflop system will be installed at the University of Tennessee at Knoxville's Joint Institute for Computational Science. The $65 million, five-year project will include partners at Oak Ridge National Laboratory (ORNL), the Texas Advanced Computing Center (TACC), and the National Center for Atmospheric Research (NCAR).

Here’s where it gets interesting. Most of the information stated above was already known last week when an NSF staffer accidentally posted the names of the winning proposals on an NSF website. Before the information could be removed, the supercomputing community had gotten wind of the decisions. And, as you might imagine, a lot of people on the losing end of the awards are already questioning the selections.

One could pass this off as sour grapes by the losers, but I have a sense something else is going on here. According to my sources, people have been concerned about the NSF petascale awards process almost from the start. A New York Times piece on the NSF grants earlier in the week reported that several government supercomputing scientists were concerned the decision might raise questions about impartiality and political influence. Lawrence Berkeley National Laboratory's Horst Simon was quoted as saying:

“The process needs to be above all suspicion. It’s in the interest of the national community that there is not even a cloud of suspicion, and there already is one.”

Although nobody was willing to go on the record with me, I learned some interesting tidbits from a few individuals who were close to the proposals. Since there is no way to confirm any of this, take all of the following with a grain of salt.

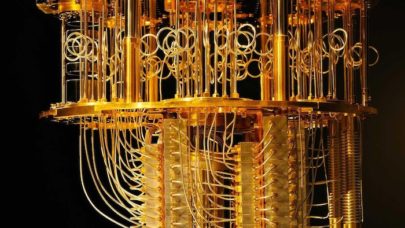

To begin with, the Track 1 supercomputer bid by UIUC appears to be an IBM PERCS system, the same system being developed for DARPA’s High Productivity Computing Systems (HPCS) program. The Track 2 supercomputer bid by the University of Tennessee appears to be a Baker-class Cray machine, essentially a precursor to the company’s HPCS Cascade architecture. I’ll get to why this may be significant in just a moment.

Putting aside the Track 2 award, let’s look at the Track 1 proposals. According to my sources, there were four bids:

1. Carnegie Mellon University/Pittsburgh Supercomputing Center (plus partners?): This group bid a system based on Intel’s future terascale processors. Intel has demonstrated an 80-core processor prototype that has achieved a teraflop. I’m not sure of the peak performance for the proposed system; it may be as high as 40 petaflops.

2. University of California, San Diego/San Diego Supercomputer Center along with Lawrence Berkeley National Laboratory and others: The “California” bid was a million-core IBM Blue Gene/Q system, reputed to be in the 20-petaflop range. The host site is rumored to be Lawrence Livermore National Laboratory.

3. University of Tennessee/ORNL (plus others?): This group proposed a 20-petaflop Cray machine. If true, we can assume it was a Cascade machine (Marble- or Granite-class).

4. University of Illinois at Urbana-Champaign/NCSA along with the Great Lakes Consortium for Petascale Computation: They proposed and won with an IBM PERCS. It’s thought to be a 10-petaflop system.

As it turned out, at 10 petaflops the winning bid was the least powerful machine in the bunch, peak performance-wise. Even so, if the system goes live in 2011 as planned, it may very well be the most powerful supercomputer in the world. Keep in mind, though, that Japan is also planning to launch a 10-petaflop machine in the same timeframe.

There may be a number of reasons why the NSF made the selection in favor of PERCS, and I sure would be interested to know what they are. The system is almost certainly not the best in the group in terms of performance-per-watt. I would guess both the Blue Gene/Q and the Intel Wonder machine would be more energy-efficient. Since we don’t know enough about software support for any of these multi-petaflop systems, it’s difficult to compare them on their ability to field big science applications.

One other unusual aspect to the Track 1 selection is that, as HPC centers go, UIUC/NCSA doesn’t have an established reputation for cutting-edge supers. It’s been content to do its work with a number of smaller HPC systems. The PERCS machine is supposed to be housed at UIUC, but no facility yet exists that can accommodate it. We have to assume that all this is going to change.

In defense of the selection, NCSA is one of the five big regional supercomputing centers in the United States and could conceivably grow into this role. The PERCS machine is a pretty safe bet, technology-wise, since DARPA HPCS is helping to fund this effort and investing in IBM is usually a conservative strategy. Certainly, IBM is enthusiastic about the PERCS architecture and especially the POWER7 processor that it is to be based on.

Perhaps the most unfortunate aspect to this process is that a lot of questions will remain unanswered. This is a result of the rather opaque nature of the NSF review process. To be sure, the review criteria are spelled out in Section VI of the NSF Track 1 solicitation, but the actual process is not. Who are the reviewers and how did they qualitatively balance the different criteria? One assumes that the reviewers composed responses to each proposal, but only the awarded proposals go into the public record, and I’m not sure if the feedback from the NSF will be included.

There has been some talk that there were too few qualified proposal reviewers. The argument was that because most of the HPC brain trust had a vested interest in one of the four proposals, there were no qualified reviewers without conflict-of-interest baggage. I’m not sure I buy that. The HPC population seems too large and spread out for that to be a problem. Nonetheless, the concern persists among some in the community.

There is also speculation that the review group was influenced by one or more individuals who were (or are) involved in the HPCS program. If true, this could have unfairly steered the selection toward the HPCS systems from Cray and IBM, instead of more speculative architectures. There’s no way to tell if this occurred, but the results suggest this is a possibility.

I suppose it could be argued that what’s good enough for DARPA is good enough for the NSF. But keep in mind that the HPCS mission is to create productive and commercially viable supercomputing systems for a range of government and industrial applications; the NSF petascale goal is to find big systems to do big science. Obviously, there’s some overlap here, but it’s reasonable to imagine that these two missions could lead to different computing platforms.

For its part, the NSF stands by its reviewers and its selection process. Leslie Fink, representing NSF’s Office of Legislative and Public Affairs, sent me the following response to my inquiry about the review process:

“Identities of the reviewers are … confidential,” said Fink. “NSF has a strict conflict of interest policy, and heroic efforts are made to ensure panel members are not in conflict with the proposers. Basically, what happens in review stays in review.”

I guess the big frustration here is that because of the lack of transparency, much of the story will remain hidden. Short of a Congressional inquiry, the NSF isn’t obligated to provide the rationale for awarding these grants, and the losing bids will never be made public. It’s possible that the reviewers did manage to find the best way to spend the taxpayers’ money. I hope so. But since the process takes place behind closed doors, we’ll never know.

——

As always, comments about HPCwire are welcomed and encouraged. Write to me, Michael Feldman.