At Oak Ridge National Laboratory this week, 131 HPC User Forum participants from the U.S. and Europe discussed current examples of leadership computing and challenges in moving toward petascale computing by the end of the decade.

Vendor updates were given by Cray Inc., Hewlett-Packard Co., Intel Corp., Level 5 Networks Inc., Liquid Computing Corp., Panasas Inc., PathScale Inc., Silicon Graphics Inc. and Voltaire Inc.

According to IDC vice president Earl Joseph, who serves as executive director of the HPC User Forum, the buying power of users at the meeting exceeded $1 billion. In his update on the technical market, he noted that revenue grew 49 percent during the past two years, reaching $7.25 billion in 2004. Clusters have redefined pricing for technical servers. The new IDC Balanced Rating tool (www.idc.com/hpc) allows users to custom-sort and rank the performance of 2,500 installed HPC systems on a substantial list of standard benchmarks, including the HPC Challenge tests.
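The IDC growth figure can be unpacked with a quick back-of-the-envelope calculation. The sketch below assumes that the 49 percent is total growth over the full two-year span ending in 2004 and backs out the implied starting revenue and compound annual growth rate. It is an illustrative calculation, not an IDC figure.

```python
def implied_base_and_cagr(final_value, total_growth, years):
    """Back out the starting value and compound annual growth rate.

    final_value: value at the end of the period (here, billions of dollars)
    total_growth: total fractional growth over the period (0.49 for 49 percent)
    years: length of the period in years
    """
    growth_factor = 1.0 + total_growth
    base = final_value / growth_factor
    # Compound annual rate that yields growth_factor over `years` years.
    cagr = growth_factor ** (1.0 / years) - 1.0
    return base, cagr

# Assumption: 49 percent total growth from 2002 to 2004, ending at $7.25 billion.
base_2002, cagr = implied_base_and_cagr(7.25, 0.49, 2)
print(f"Implied 2002 revenue: ${base_2002:.2f} billion")
print(f"Implied annual growth: {cagr:.1%}")
```

Under that reading, the technical market would have been roughly $4.87 billion in 2002 and would have grown about 22 percent a year.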

Paul Muzio, steering committee chairman and vice president of government programs for Network Computing Services, Inc. and Support Infrastructure Director of the Army High Performance Computing Research Center, said the HPC User Forum's overall goal is to promote the use of HPC in industry, government and academia, including by addressing issues that are important to users.

Jim Roberto, ORNL Deputy for Science and Technology, welcomed participants to the lab and gave an overview. ORNL is DOE's largest multipurpose science laboratory, with a $1.05 billion annual budget, 3,900 employees and 3,000 research guests annually. A $300 million modernization is in progress. ORNL's new $65 million nanocenter begins operating in October and complements the lab's neutron scattering capabilities.

Thomas Zacharia, ORNL's associate director for Computing and Computational Sciences, said computational science will have a profound impact in driving science forward. ORNL, selected to be the DOE's main facility for Leadership Computing, plans to expand its systems to 100 teraflops and then to a petaflop by the close of the decade. Researchers have made fundamental new discoveries with the help of the Cray X1 and X1E systems. The lab expects to put its Cray XT3 into production in the October-November timeframe. Based on estimates from vendors, Zacharia expects a petascale system to have about 25,000 processors, 200 cabinets and power requirements of 20-40 megawatts.
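Zacharia's vendor-based estimates can be turned into rough per-unit figures. The sketch below assumes a peak of exactly 10^15 floating-point operations per second and divides everything evenly across processors and cabinets; real systems would not be this uniform, so the results are illustrative rather than vendor specifications.

```python
PETAFLOP = 1e15  # peak floating-point operations per second (assumed exact)

def per_unit_estimates(peak_flops, processors, cabinets, power_mw_range):
    """Divide system-level estimates evenly into per-processor and per-cabinet figures."""
    gflops_per_processor = peak_flops / processors / 1e9
    processors_per_cabinet = processors / cabinets
    # Convert each end of the megawatt range to kilowatts per cabinet.
    kw_per_cabinet = tuple(mw * 1000 / cabinets for mw in power_mw_range)
    return gflops_per_processor, processors_per_cabinet, kw_per_cabinet

# Figures taken from the estimates quoted above.
gflops, procs_per_cab, kw_range = per_unit_estimates(PETAFLOP, 25_000, 200, (20, 40))
print(f"{gflops:.0f} gigaflops per processor")
print(f"{procs_per_cab:.0f} processors per cabinet")
print(f"{kw_range[0]:.0f}-{kw_range[1]:.0f} kW per cabinet")
```

Read this way, each processor would need to deliver about 40 gigaflops of peak performance, and each cabinet would draw on the order of 100-200 kilowatts.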

According to Jack Dongarra of the University of Tennessee, the HPC Challenge benchmark suite stresses not only the processors, but also the memory system and interconnect. The suite characterizes architectures with a wider range of metrics that look at spatial and temporal locality within applications. The goal is for the suite to take no more than twice as long as Linpack to run. At SC2005, HPCC Awards sponsored by HPCwire and DARPA will be given in two classes: performance only and productivity (elegant implementation). Future goals are to reduce execution time, expand the suite to include additional tests such as sparse matrix operations, and develop machine signatures.

Muzio chaired a session on government leadership and partnerships, asking each speaker to comment on organizational mission, funding and outreach. Rupak Biswas, from NASA Ames Research Center, reviewed NASA's four mission directorates and said his organization, which hosts the Columbia system, has special expertise in shared memory systems.

Cray Henry said the DoD High Performance Computing Modernization Program (HPCMP) serves the defense science and technology and test and evaluation communities. HPCMP wants machines in production within three months of buying them and uses funds for specific projects, software portfolios (applications development), partner universities, and the annual technology insertion process, which expends $40 million to $80 million per year to acquire large HPC systems for the HPCMP centers. The program works with other agencies on benchmarking, partners with industry and other defense agencies on applications development, and maintains academic partnerships.

Steve Meacham said NSF wants input from the HPC community on how best to develop a census of science drivers for HPC at NSF, and on how the science community would like to measure performance. NSF's goal is to create a world-class HPC environment for science. HPC-related investments are made primarily in science-driven HPC systems, systems software, and applications for science and engineering research. In 2007, NSF will launch an effort to develop at least one petascale system by 2010 and invites proposals from any organization with the ability to deploy systems on this scale.

Gary Wohl explained that NOAA is a purely operational shop that does numerical weather prediction and short-term numerical climate prediction. The primary HPC goal is reliability for on-time NOAA products. NCEP (the National Centers for Environmental Prediction) and IBM share responsibility for 99 percent on-time product generation. Changes in the HPC landscape include greater stress on reliability, a dearth of facility choices, and burgeoning bandwidth requirements.

In the ensuing panel discussion, participants stressed that the federal government needs to recognize HPC as a national asset and a strategic priority. Non-U.S. panelists echoed the message.

Suzy Tichenor, vice president of the Council on Competitiveness, showed a video produced in collaboration with DreamWorks Animation to explain HPC to non-technical audiences and get them excited about it. Meeting attendees applauded the video, which can be ordered at www.compete.org. Tichenor reviewed the Council's HPC Project and its surveys, which found, among other things, that HPC is essential to survival for U.S. businesses that exploit it.

DARPA's Robert Graybill updated attendees on the HPCS program, noting Japan plans to develop a petascale computer by 2010-2011 that will have a heterogeneous architecture (vector/scalar/MD).

In related presentations, Michael Resch of HLRS, Michael Heib from T-Systems and Joerg Stadler of NEC described their successful partnership in Germany, which includes a joint venture company to buy and sell CPU time and the innovative Teraflop Workbench Project, whose goal is to sustain teraflop performance on 15 selected applications.

Sharan Kalwani from General Motors reviewed the auto maker's business transformation, noting that GM is involved with one of every six cars in the world. Today, GM can predict how much compute time and money it will need to develop a new car. Senior management is convinced of the value of HPC, Kalwani said.

David Torgersen's role is to bring shared IT infrastructures to Pfizer. Challenges include vendors selling point solutions directly to business units in ways that don't reflect the company's overall needs; differing business needs at various points in the drug development process; and the fact that grid technology is mature in some respects but not in others.

Jack Wells of ORNL, Thomas Hauser of Utah State, Jim Taft of NASA and Dean Hutchings of Linux Networx explored possibilities for partnering to boost the performance of the Overflow code on clusters. They explained why none of their organizations would do this on its own, then reviewed the challenges and potential next steps.

Jill Feblowitz of IDC's Energy Insights group said the financial health of the utility industry has been slowly improving since the Enron collapse. In contrast, the oil and gas industries have had a run-up in profits, although these profits have not yet translated into an increased appetite for technology and investments. The Energy Policy Act of 2005 specifically includes HPC provisions for DOE. She described the concepts of “the digital oilfield” and the Intelligent Grid.

Marie-Christine Sawley, director of the Swiss National Supercomputer Center (CSCS), described her organization and its successful, pioneering use of the HPC Challenge benchmarks in the recent procurement of a large-scale (5.7 teraflops) HPC system in conjunction with Switzerland's Paul Scherrer Institute.

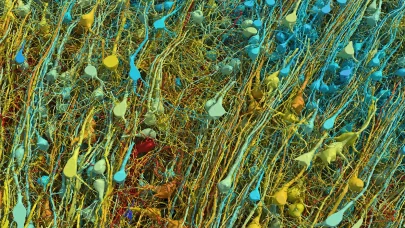

Thomas Schulthess reviewed ORNL's materials science work on superconductivity, which has revolutionary implications for electricity generation and transmission. Two decades after the discovery of high-temperature superconductors, they remain poorly understood. Using quantum Monte Carlo techniques, the team of ORNL users explicitly showed for the first time that superconductivity is accurately described by the 2D Hubbard model.

Bill Kramer said NERSC focuses on capability computing, with 70 percent of its time going to jobs of 512 processors or larger. NERSC has won numerous awards for its achievements in the DOE's INCITE and predecessor “Big Splash” programs. In the related panel discussion, participants from industry, government and academia stressed the need for better algorithms and methods.

Frank Williams from ARSC is chair of the Coalition for Academic Scientific Computation (CASC), whose members represent 42 centers in 28 states. CASC disseminates information about HPC and communications and works to influence the national investment in computational science and engineering on behalf of all academic centers. Williams invited HPC User Forum participants to attend a CASC meeting and to contact him by e-mail.

IDC's Addison Snell moderated a panel discussion on leadership computing in academia. HPC leaders from the University of Cincinnati, Manchester University (UK), ICM/Warsaw University and Virginia Tech discussed their organizations, leadership computing achievements and the challenges of moving toward petascale computing. In another panel discussion moderated by Snell, HPC vendors debated issues in cluster data management and what needs to be done to improve the handling of data in large HPC installations.

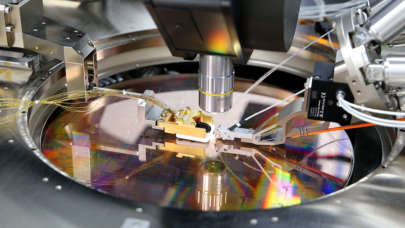

Phil Kuekes of HP gave a talk on molecular electronics and nanotechnology (“nanoelectronics”), summarizing HP's progress toward developing a nanoscale switch with the potential to overcome the limitations of existing integrated-circuit technology.

Muzio and Joseph facilitated a session on architectural challenges in moving toward petascale computing. According to Muzio, an application engineer's ideal petascale system would have a single processor, uniform memory, Fortran or C, Unix, a fast compiler, an exact debugger and the stability to enable applications growth over time. By contrast, a computer scientist's ideal petascale system would have tens of thousands of processors, different kinds of processors, non-uniform memory, C++ or Java, innovative architecture and radically new programming languages.

“Unfortunately, for many users, the computer scientist's system may be built in the near future,” he said. “The challenge is to build this kind of system but make it look like the kind the applications software engineer wants.”

According to Robert Panoff of the Shodor Educational Foundation, math and science are more about pattern recognition and characterization than mere symbol manipulation. He also discussed the lag time between discoveries and their application.

“The people who will use petascale computers are now in high school to grad school, while most of us are approaching retirement,” he added. “You don't need petascale computing for this teaching, but this will help produce the people needed to do petascale computing.”

David Probst of Concordia University argued that scaling to petaflop capability cannot be done without embracing heterogeneity. Global bandwidth is the most critical and expensive system resource, so, he said, it must be used well throughout each and every computation. “Heterogeneity is a precondition for this in the face of application diversity, including diversity within a single application,” Probst added. “Every petascale application is a dynamic, loosely coupled mix of high thread-state, temporally local, long-distance computing and low thread-state, spatially local, short-distance computing.”

Burton Smith, chief scientist at Cray, challenged the popular definitions of “petascale,” “scale” and “local.” The popular definition of scale “doesn't mean much, maybe that I ran it on a few systems and it seemed to go fast,” he said. “You probably mean it message-passes with big messages that don't happen very often. Also, people say 'parallel' when they mean 'local.'” He concluded that parallel computing is just becoming important; we know how to build good petascale systems if the money is there; and sloppy language interferes with our ability to think.

According to Michael Resch of HLRS, there needs to be a “move on from MPI to a real programming language or model. I hear people complaining about how hard it is to program systems with large numbers of processors. What about buying systems with a smaller number of more-powerful processors? Why not buy high-quality systems?”

Muzio introduced the companion panel discussion on “software issues in moving toward petascale computing” by reviewing the HPC User Forum's achievements in promoting better benchmarks and underscoring the limited scalability and capabilities of ISV application software.

Suzy Tichenor reviewed the Council on Competitiveness' recent “Study of ISVs Serving the HPC Market: The Need For Better Application Software.” The study found that the business model for HPC-specific application software has evaporated, leaving most applications unable to scale well. Market forces alone will not address this problem and need to be supplemented with external funding and expertise. Most ISVs are willing to partner with other organizations to accelerate progress.

DARPA's Robert Graybill said the HPCS program is looking at how to measure productivity, and that he believes new programming languages are needed. Time is needed to experiment before deciding which language attributes are right for HPC. The goal by 2008 is to put together an industry consortium to pursue this. I/O is another major challenge, he said.

BAE Systems' Steve Finn, chair of the HPCMP's User Advocacy Group, said continuous improvements are still occurring to legacy codes and large investments have been made in scalable codes. “We need to prioritize which codes to rewrite first [for petascale systems],” he added. “UPC and CAF won't be the final languages. It's good to try them out, but if you rewrite them now, you may need to rewrite them again in a few years.”

The next HPC User Forum meeting will take place April 10-12, 2006, in Richmond, Va. The meetings are co-sponsored by HPCwire.